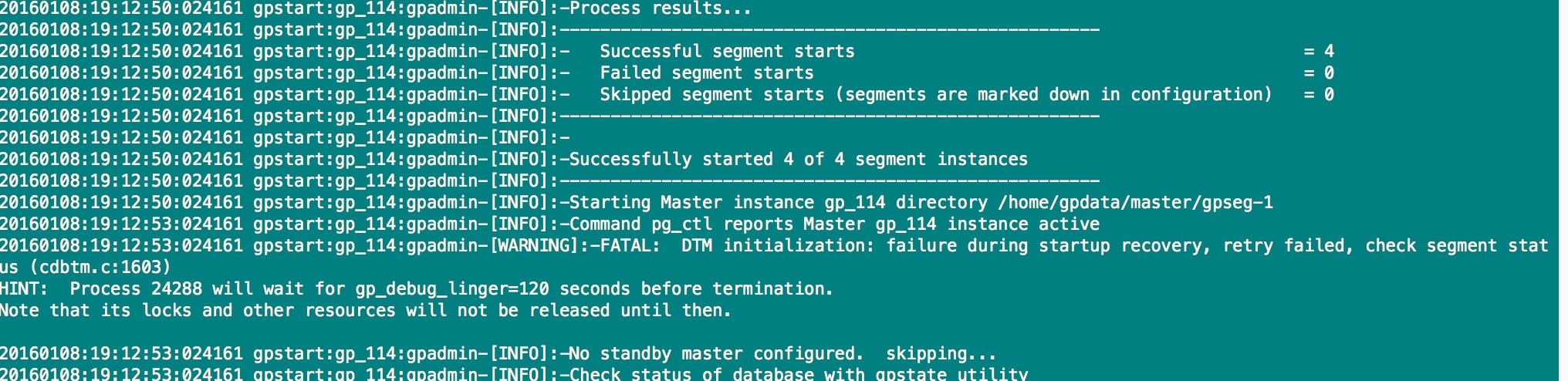

(Urgent) Stumbling along: greenplum FATAL: DTM initialization: failure during startup recovery, retry failed, check segment status (cdbtm.c:1603)

Problem:

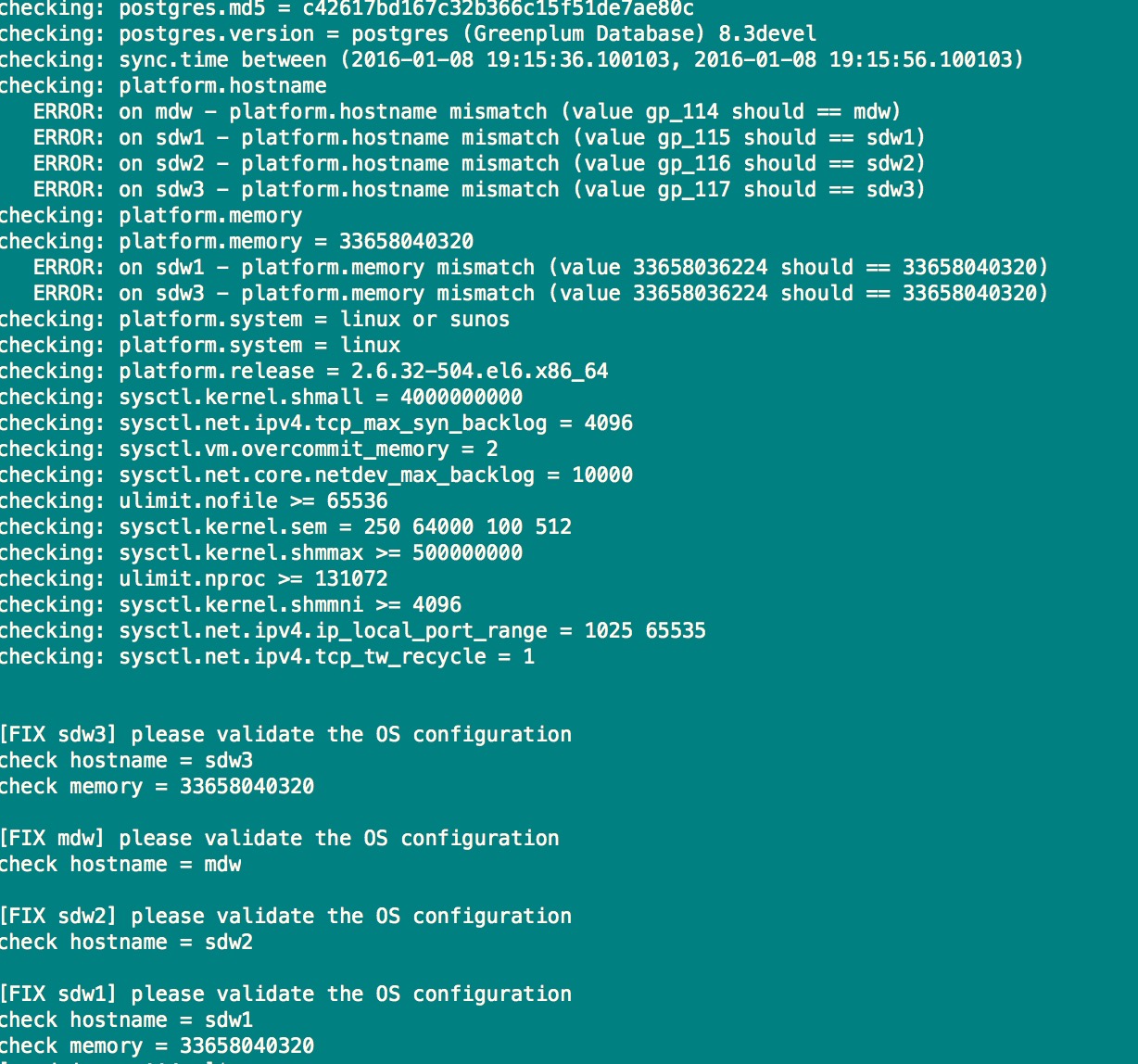

Using gpcheckos to check the differences between the hosts:

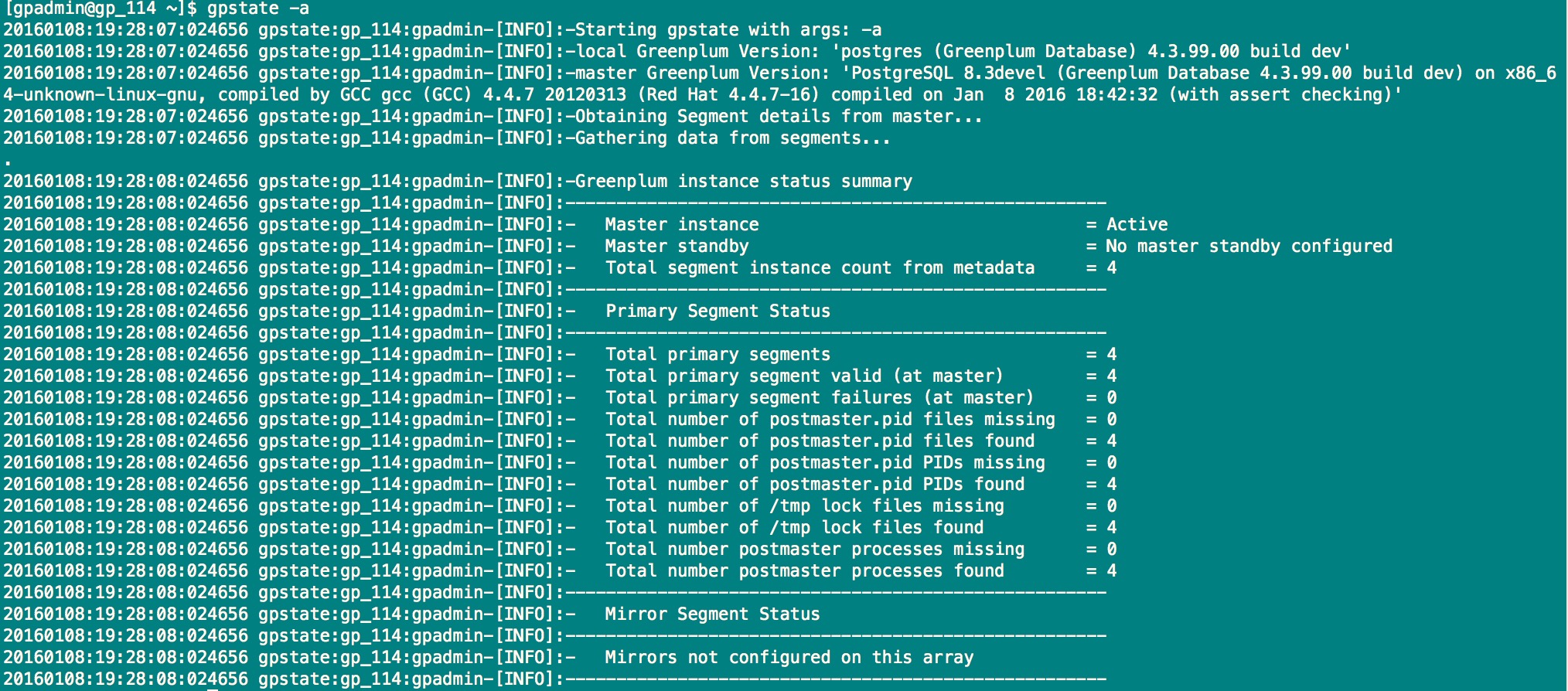

Using gpstate to check the cluster's running state:

The cluster starts, but DTM initialization fails, so I cannot connect to the database.

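The host names are aliases, which shouldn't matter. Memory does differ slightly between the hosts, but a difference that small shouldn't cause this.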

The firewall is disabled.

Hoping an expert can help. This may be the key to the problem (cdbtm.c:1603).

-

一枚PGer

Found the answer: it turns out shared_buffers really does not need to be set that large. Here is an excerpt; follow the link for the full thread.

https://support.pivotal.io/hc/communities/public/questions/205239098-Unable-to-set-shared-buffers-to-2-GB

Michael,

While pgtune is a good utility for a standalone database, it does not apply to the MPP setup used by Greenplum, which needs overhead to operate multiple standalone databases communicating on a private network.

You cannot set shared_buffers to a higher value because it is stored as an integer, which caps out at 2GB; anything larger would overflow. For tuning, you really want to focus on gp_vmem_protect_limit, statement_mem, and resource queues.
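To make the 2GB ceiling concrete: assuming, as the reply implies, that the value ends up in a signed 32-bit integer byte count (an assumption, not checked against the Greenplum source), this short Python sketch shows that 2 GB is exactly one byte past what such an integer can hold.

```python
# Assumption: the setting is checked against a signed 32-bit byte count,
# as the quoted reply suggests; this only illustrates the arithmetic.
INT32_MAX = 2**31 - 1  # largest value a signed 32-bit integer can hold


def fits_in_int32(n_bytes: int) -> bool:
    """Return True if a byte count can be stored in a signed 32-bit integer."""
    return n_bytes <= INT32_MAX


two_gb = 2 * 1024**3  # 2 GB (binary) expressed in bytes

print(INT32_MAX)              # 2147483647
print(two_gb)                 # 2147483648
print(fits_in_int32(two_gb))  # False: exactly one byte too many
```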

And while you want to squeeze every bit of performance out of your cluster, you need to leave room for overhead and for work that isn't counted in the memory usage simply because Greenplum or the OS has to do it, like unpacking the plan.

An OS setting of vm.overcommit_memory = 2, with the default vm.overcommit_ratio of 50 (i.e. 0.5), limits the available OS virtual memory to: (swap + (vm.overcommit_ratio * RAM))

In your case, this would be a segment-host maximum of (swap + (64 * 0.5)).

Your gp_vmem_protect_limit should be set to: (OS virtual memory * 0.9 / number of primary segments per server)

This leaves 10% overhead, but does not account for a saturated system with failover, so you may want to set it lower for stability.
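The two sizing formulas in the reply can be sketched in Python as follows. The function names are hypothetical, and the host values (32 GB of swap, 4 primary segments per host) are illustrative assumptions; only the 64 GB of RAM and the 0.5 ratio come from the quoted reply. The limit is returned in MB because gp_vmem_protect_limit is configured in megabytes.

```python
def os_virtual_memory_gb(swap_gb: float, ram_gb: float, overcommit_ratio: float = 0.5) -> float:
    """OS virtual memory under vm.overcommit_memory = 2: swap + ratio * RAM.

    overcommit_ratio is the kernel percentage (default 50) expressed as a fraction.
    """
    return swap_gb + overcommit_ratio * ram_gb


def gp_vmem_protect_limit_mb(vmem_gb: float, primaries_per_host: int, headroom: float = 0.9) -> int:
    """Per-segment limit in MB: keep 10% in reserve and split the rest across primaries."""
    if primaries_per_host <= 0:
        raise ValueError("primaries_per_host must be positive")
    return int(vmem_gb * headroom * 1024 / primaries_per_host)


# Hypothetical host: 64 GB RAM (as in the quoted reply), 32 GB swap, 4 primaries.
vmem = os_virtual_memory_gb(swap_gb=32, ram_gb=64)
print(vmem)                               # 64.0
print(gp_vmem_protect_limit_mb(vmem, 4))  # 14745 (normal operation)
# After a failover the same host may act as primary for extra segments;
# dividing by a larger count gives a more conservative setting.
print(gp_vmem_protect_limit_mb(vmem, 5))  # 11796
```

Passing a larger segment count, as in the last call, is one way to model the failover case the reply warns about, where a host temporarily carries extra primaries.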

For example, if you have mirroring enabled (recommended) and a failover occurs, one or more nodes will be carrying one or more additional primary segments. So if you tuned for 4 primaries per node and then failed over under heavy load, you increase the chance of the segments running out of memory.

Your statement_mem should be set to:

(gp_vmem_protect_limit / number of concurrent queries allowed by the resource queues)

I would recommend leaving the default setting for any non-custom queue, which leaves you room to raise it for queues with massive queries. Alternatively, you can simply set it in the session at runtime, before the query, as needed: set statement_mem=xxx; select...
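The statement_mem rule of thumb is a single division. This hypothetical helper applies it and prints a matching per-session SET statement; the 14745 MB limit and the 20 concurrent queries are made-up example values, not figures from the thread.

```python
def statement_mem_mb(gp_vmem_protect_limit_mb: int, concurrent_queries: int) -> int:
    """Split the per-segment memory limit evenly across the queries a resource queue admits."""
    if concurrent_queries <= 0:
        raise ValueError("concurrent_queries must be positive")
    return gp_vmem_protect_limit_mb // concurrent_queries


# Hypothetical values: a 14745 MB limit and queues admitting 20 concurrent queries.
mem = statement_mem_mb(14745, 20)
print(mem)                                # 737
print(f"SET statement_mem = '{mem}MB';")  # SET statement_mem = '737MB';
```

Integer division rounds down, which errs on the side of leaving a little memory unallocated.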

These settings safeguard your system from virtual memory crashes. If they are set up correctly, the resource queues will prevent queries that would exceed your gp_vmem_protect_limit. Your gp_vmem_protect_limit should protect your OS from running out of virtual memory, and your OS virtual memory settings should prevent your OS from running out of memory.

Correctly tuned, they allow your queries to run with optimal performance with overhead to manage all the moving components.

GPDB will always try to use 100% of its resources unless capped by the maximums set on the resource queues.

To get a better understanding of this, take a look at this short video, which does a very good job of explaining how they work effectively:

https://www.youtube.com/watch?v=1b0mHsT_woU

Hope this helps.

2019-07-17 18:23:51

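Public welfare is a lifelong undertaking. I am digoal, just do it. Alibaba Cloud database team, specializing in PolarDB, PostgreSQL, DuckDB, ADB, and more; long dedicated to advancing open-source database technology and its ecosystem in China and to developing talent for the open-source industry. Awarded the Alibaba "Qilin Evangelist" title and named one of the 2018 OSCAR Open Source Pioneers.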

The host names don't match.

2019-07-17 18:23:51

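Copyright notice: This content was voluntarily contributed by real-name registered Alibaba Cloud users, and the copyright belongs to the original authors. The Alibaba Cloud Developer Community does not own the copyright and assumes no related legal liability. For the specific rules, see the Alibaba Cloud Developer Community User Service Agreement and the Alibaba Cloud Developer Community Intellectual Property Protection Guidelines. If you find content in this community suspected of plagiarism, report it through the infringement complaint form; once verified, the community will immediately remove the infringing content.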