1 Introduction

a lock service called Chubby, It is intended for use within a loosely-coupled distributed system consisting of moderately large numbers of small machines connected by a high-speed network.

We expected Chubby to help developers deal with coarse-grained synchronization within their systems, and in particular to deal with the problem of electing a leader from among a set of otherwise equivalent servers.

Google File System uses a Chubby lock to appoint a GFS master server

Bigtable uses Chubby in several ways: to elect a master, to allow the master to discover the servers it controls, and to permit clients to find the master.

In addition, both GFS and Bigtable use Chubby as a well-known and available location to store a small amount of meta-data; in effect they use Chubby as the root of their distributed data structures.

一种分布式锁服务, 用于解决分布式的一致性问题

从Chubby最著名的两个应用场景, GFS和Bigtable上来看, 主要用于elect master和高可用的配置管理, 比如系统的元数据

Readers familiar with distributed computing will recognize the election of a primary among peers as an instance of thedistributed consensus problem, and realize we require a solution using asynchronous communication which allow packets to be lost, delayed, and reordered.

Asynchronous consensus is solved by the Paxos protocol.

Indeed, all working protocols for asynchronous consensus we have so far encountered have Paxos at their core.

Paxos maintains safety without timing assumptions, but clocks must be introduced to ensure liveness; this overcomes the impossibility result of Fischer et al.

Chubby要解决的问题的本质是在异步通信环境下的分布式一致性问题

并给出著名的论断, 所有有效的解决异步一致性问题的协议其核心本质都是Paxos算法

针对2PC算法缺乏活性(liveness), 因为在异步环境中无法区分失败和慢操作, 导致的FLP结论, Paxos克服了这种不可能, 确保在有进程失败的情况下, 依然可以达成一致性

Building Chubby was an engineering effort required to fill the needs mentioned above; it was not research.

We claim no new algorithms or techniques. The purpose of this paper is to describe what we did and why, rather than to advocate it.

本文讨论的都是工程问题而不是reseach, 因为这个问题本身Paxos算法已经可用解决

所以不关心工程实现的同学可以绕道了...

2 Design

2.1 Rationale(设计思路)

1. 为何实现lock service, 而非一个标准的Paxos库

无疑提供标准的Paxos库, 会具有更高的通用性, 用户可以在任意集群上通过Paxos库来搭建一致性集群.

但是Chubby的目标, 让用户更加简单来使用Paxos协议,

哪怕用户不懂paxos(并不是那么好懂)

哪怕用户没有自己的集群而只有一个client

哪怕是在系统design初期完全没有考虑一致性问题...等情况下仍然可以简单的使用

所以总结一下, 实现Lock Service的好处如下

a. 便于已有系统的移植, 对于系统初期设计没有考虑到分布式一致性问题, 后期如果基于Paxos库, 难度和修改比较大. 而如果基于lock service就容易的多

b. Chubby不但用于elect master, 并且提供机制公布结果(mechanism for advertising the results), 还能够通过consistent client caching机制(rather than time-based caching)来保证所有client端cache的一致性. 这也是为什么Chubby作为name server非常成功的原因

c. 基于锁的接口更为程序员所熟悉

d. 分布式协同算法使用quorums做决策, 如果基于paxos库, 要求用户使用时必须先搭建集群.而基于lock service, 只有一个客户端也能成功取到锁

2. 提供小文件(small-files)存储, 而非单纯锁服务

Chubby首先是个lock service, 然后出于方便, 提供文件的存储, 但这不是他的主要目的, 所以不要要求high throughput或存储大文件

如上面b所说, 需要advertise结果或保存配置参数, 诸如此类的需要, 就直接解决了, 省得还要依赖另一个service

3. 粗粒度锁

Fine-grained Lock, in which they might be held only for a short duration (seconds or less);

Coarse-grained Lock, which would then handle all access to that data for a considerable time, perhaps hours or days.

Chubby只支持粗粒度锁, 因为它所使用的场景, 比如elect master, metadata存储...一旦决定, 不会频繁的变化, 没有必要支持细粒度

粗粒度锁的好处

a. 负载轻, 粗粒度所以不用频繁去访问, 对于Chubby这样基于master的service, 在面对大量client的时候, 这点非常重要

b. 临时性的锁服务器不可用对client的影响比较小

问题是,

因为粗粒度, 所以client往往会cache得到的结果, 以避免频繁的访问master

那么如何保证cache的一致性?

当master fail-over时如果保证lock service的结果不丢失并继续有效?

4. Design中的其他考虑

-

Chubby服务需要考虑支持大量的client(数千), 当然粗粒度锁部分的解决这个问题, 如果还是不够后面提到可以使用proxy或partition的方式

-

避免客户端反复轮询, 需要提供一种事件通知机制

-

在客户端提供缓存, 并提供一致性缓存(consistent caching)机制

- 提供了包括访问控制在内的安全机制

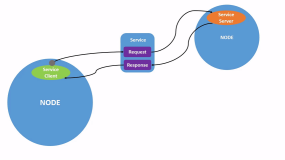

2.2 System structure

Chubby的架构其实很简单, 包含client libary和chubby server

Chubby cell, 一个chubby集群

Replicas, 集群中任意一个server

Master, replicas中需要elect master

a, master具有master lease(租约), 不是永久的, 避免通信问题导致block

b, master lease需要定期的续约(periodically renewed)

c, 只有master有发起读写请求的权力, 而replicas只能通过一致性协议和master进行同步

d, client通过发送master location requests给任意replica来定位master

Master fail, 在该master的租约到期后, 重新elect

Replica fail, 相对复杂一些, 详细见下面

A Chubby cell consists of a small set of servers (typically five) known as replicas.

The replicas use a distributed consensus protocol to elect a master; the master must obtain votes from a majority of the replicas, plus promises that those replicas will not elect a different master for an interval of a few seconds known as themaster lease. The master lease is periodically renewed by the replicas provided the master continues to win a majority of the vote

The replicas maintain copies of a simple database, but only the master initiates reads and writes of this database.

All other replicas simply copy updates from the master, sent using the consensus protocol.

Clients find the master by sending master location requests to the replicas listed in the DNS. Non-master replicas respond to such requests by returning the identity of the master.

If a master fails, the other replicas run the election protocol when their master leases expire

If a replica fails and does not recover for a few hours,

1. a simple replacement system selects a fresh machine from a free pool and starts the lock server binary on it.

2. It then updates the DNS tables, replacing the IP address of the failed replica with that of the new one.

3. The current master polls the DNS periodically and eventually notices the change.

It then updates the list of the cell’s members in the cell’s database; this list is kept consistent across all the members via the normal replication protocol.

4. In the meantime, the new replica obtains a recent copy of the database from a combination of backups stored on file servers and updates from active replicas.

5. Once the new replica has processed a request that the current master is waiting to commit, the replica is permitted to vote in the elections for new master.

2.3 Files, directories, and handles

Chubby作为文件存储系统, 文件系统是怎样组织的?

如下面的例子, 和UNIX文件系统很像

首先ls表示lock service, 第二个表示chubby cell, 后面的是各个cell内部的文件结构, 由各个cell自己解析

通过在路径第二级设置不同的chubby cell name, 可以简单的把目录放到不同的chubby集群上

Chubby的文件系统和UNIX的不同, 简单的说similar but simpler, 具体参考下面

对于文件或目录, 在Chubby中统一叫做Node, node分为permanent or ephemeral, 并且任一node都可用作为advisory reader/writer lock

关于文件node和handle的相关元数据, 具体参考下面

Chubby exports a file system interface similar to, but simpler than that of UNIX.

A typical name is: /ls/foo/wombat/pouch

The ls prefix is common to all Chubby names, and stands for lock service.

The second component (foo) is the name of a Chubby cell

A special cell name ‘local’ indicates that the client’s local Chubby cell should be used

The remainder of the name, /wombat/pouch, is interpreted within the named Chubby cell.

和UNIX文件系统的区别如下,

The design differs from UNIX in a ways that ease distribution.

1. To allow the files in different directories to be served from different Chubby masters, we do not expose operations that can move files from one directory to another

2. we do not maintain directory modified times

3. we avoid path-dependent permission semantics (that is, access to a file is controlled by the permissions on the file itself rather than on directories on the path leading to the file)

4. To make it easier to cache file meta-data, the system does not reveal last-access times

The name space contains only files and directories, collectively called nodes which be either permanent or ephemeral.

Any node can act as an advisory reader/writer lock.

Ephemeral Node

Any node may be deleted explicitly, but ephemeral nodes are also deleted if no client has them open (and, for directories, they are empty).

Ephemeral files are used as temporary files, and as indicators to others that a client is alive.

Node包含如下元数据,

a. three names of access control lists (ACLs) used to control reading, writing and changing the ACL names for the node.

关于Chubby的ACL机制

Unless overridden, a node inherits the ACL names of its parent directory on creation.

ACLs are themselves files located in an ACL directory, which is a well-known part of the cell’s local name space.

These ACL files consist of simple lists of names of principals; readers may be reminded of Plan 9’s groups [21].

Thus, if file F’s write ACL name is foo, and the ACL directory contains a file foo that contains an entry ‘bar’, then user ‘bar’ is permitted to write ‘F’.

Users are authenticated by a mechanism built into the RPC system.

Because Chubby’s ACLs are simply files, they are automatically available to other services that wish to use similar access control mechanisms.

b. four monotonicallyincreasing 64-bit numbers that allow clients to detect changes easily:

• an instance number; greater than the instance number of any previous node with the same name.

• a content generation number (files only); this increases when the file’s contents are written.

• a lock generation number; this increases when the node’s lock transitions from free to held.

• an ACL generation number; this increases when the node’s ACL names are written.

c. Chubby also exposes a 64-bit file-content checksum so clients may tell whether files differ.

Client在打开node的时候, 会获得类似UNIX file descriptors的handles,

Clients open nodes to obtain handles that are analogous to UNIX file descriptors. Handles include:

• check digits that prevent clients from creating or guessing handles, so full access control checks need be performed only when handles are created (compare with UNIX, which checks its permissions bits at open time, but not at each read/write because file descriptors cannot be forged).

Handles的校验位, 因为仅仅在handles创建的时候进行权限检查, 具体操作的时候不会再次check权限, 所以必须有机制保证handles不是伪造, 否则有安全问题

• a sequence number that allows a master to tell whether a handle was generated by it or by a previous master.

master用于判断该handle是否由我创建, 或之前的master创建

• mode information provided at open time to allow the master to recreate its state if an old handle is presented to a newly restarted master.

用于标志是否允许新的master重建旧handle(由旧master创建的handle)的状态信息

2.4 Locks and sequencers

Each Chubby file and directory can act as a reader-writer lock:

either one client handle may hold the lock in exclusive (writer) mode, or any number of client handles may hold the lock in shared (reader) mode.

Like the mutexes known to most programmers, locks are advisory.

Advisory Lock, 相对于mandatory locks

Lock一般由file实现, advisory表示只关注取得锁这种互斥关系, 而不在乎锁文件本身是否可以被access

That is, they conflict only with other attempts to acquire the same lock: holding a lock called F neither is necessary to access the file F, nor prevents other clients from doing so.

为何使用Advisory Lock?

We rejected mandatory locks, which make locked objects inaccessible to clients not holding their locks:

a. Chubby锁经常保护由其他服务实现的资源,而不仅仅是与锁关联的文件

b. 我们不想强迫用户在他们为了调试和管理而访问这些锁定的文件时关闭应用程序

c. 我们的开发者用常规的方式来执行错误检测,例如"lock X is held”, 所以他们从强制检查中受益很少. 意思一般都会先check “lock X is held”, 不会直接访问文件通过强制检查来check lock

获取锁需要写权限

In Chubby, acquiring a lock in either mode requires write permission so that an unprivileged reader cannot prevent a writer from making progress.

在分布式环境下锁机制是非常复杂的, 由于通信的不确定性和节点失败

Locking is complex in distributed systems because communication is typically uncertain, and processes may fail independently.

例子, 要求互斥R请求和S操作(比如两者需要修改同一个文件)

进程A, 获取锁L, 并发送请求R, 然后fail

进程B, 试图获取锁L, 如果能获取, 并又没有收到请求R, 则执行S操作

正常情况下, A会保持锁L, 所以B没法获取L, 则无法执行S

即使在A fail的case下, 如果R能够及时被B收到, 也可以避免S的执行 (因为A fail, 到B获得L之间肯定有一段时间T)

但如果R请求delay(B既获得L, 又没有收到R), 就可能导致S操作发生后又接收到R 请求, 互斥失败, 导致数据被写脏

这个问题的本质是, 不同generation的lock的操作或消息混在一起

而在应用层, 只知道是否获得锁, 并不会区别锁的generation, 所以带来的问题是, 导致多个命令同时被选中

解决办法, 如下, 给每个命令带上Sequencer, 以区别来自不同generation lock的操作

Chubby选择给所有使用lock的命令进行标号, Sequencer

It is costly to introduce sequence numbers into all the interactions in an existing complex system.

Instead, Chubby provides a means by which sequence numbers can be introduced into only those interactions that make use of locks.

Sequencer, 用于保存锁状态

At any time, a lock holder may request a sequencer, an opaque byte-string that describes the state of the lock immediately after acquisition.

It contains the name of the lock, the mode in which it was acquired(exclusive or shared), and the lock generation number.

基于这个机制, A获取L的同时, 生成sequencer L1, 并将L1发送给文件服务器, 并发送带L1的请求R, 然后fail

由于A fail, B获得L, 生成sequencer L2, 并将L2发送给文件服务器, 然后执行S操作更新文件

R到达, B相应R, 去更新文件时, 文件服务器会check R带的sequencer L1, 发现这个lock已经过期, 拒绝该请求

从而完成互斥

如何check当前lock sequencer状况?

The validity of a sequencer can be checked against the server’s Chubby cache or, if the server does not wish to maintain a session with Chubby, against the most recent sequencer that the server has observed.

Chubby对于不支持Sequencer的server提供如下简单的方案,

其实就是通过lock-delay增加前面例子中的时间T(即不同generation lock之间的间隔), 通过这个方法来减少delay或reorder的风险

不完美的就是不能完全保证, 取决于lock-delay到底设多长

Chubby provides an imperfect but easier mechanism to reduce the risk of delayed or re-ordered requests to servers that do not support sequencers.

If a client releases a lock in the normal way, it is immediately available for other clients to claim, as one would expect.

However, if a lock becomes free because the holder has failed or become inaccessible, the lock server will prevent other clients from claiming the lock for a period called the lock-delay.

2.5 Events

为了避免client反复的轮询, Chubby提供event机制

Client可用订阅一系列的events, 当创建handle的时候. 这些event会异步的通过up-call传给client

具体哪些event, 参考下面, 最后两种event极少被时候, 原因参考原文

Chubby会保证在操作发生后再发送event, 所以client得到event后一定可以看到最新的状态

Chubby clients may subscribe to a range of events when they create a handle. These events are delivered to the client asynchronously via an up-call from the Chubby library.

Events include:

• file contents modified—often used to monitor the location of a service advertised via the file.

• child node added, removed, or modified—used to implement mirroring (§2.12).

(In addition to allowing new files to be discovered, returning events for child nodes makes it possible to monitor ephemeral files without affecting their reference counts.)

• Chubby master failed over—warns clients that other events may have been lost, so data must be rescanned.

• a handle (and its lock) has become invalid—this typically suggests a communications problem.

• lock acquired—can be used to determine when a primary has been elected.

• conflicting lock request from another client—allows the caching of locks.

Events are delivered after the corresponding action has taken place.

Thus, if a client is informed that file contents have changed, it is guaranteed to see the new data (or data that is yet more recent) if it subsequently reads the file.

2.6 API

Clients see a Chubby handle as a pointer to an opaque structure that supports various operations.

Handles are created only by Open(), and destroyed with Close().

Open打开类似UNIX file descriptor的handle, 只有open本身是需要node name的, 其他的所有操作可用直接基于handle

Open() opens a named file or directory to produce a handle, analogous to a UNIX file descriptor. Only this call takes a node name; all others operate on handles.

Open API需要如下参数:

• how the handle will be used (reading; writing and locking; changing the ACL); the handle is created only if the client has the appropriate permissions.

• events that should be delivered (see §2.5).

• the lock-delay (§2.4).

• whether a new file or directory should (or must) be created.

If a file is created, the caller may supply initial contents and initial ACL names.

The return value indicates whether the file was in fact created.

其他接口如Close(), SetContents(), Acquire(), GetSequencer(), 参考原论文

下面给出一个例子, 如何使用API完成primary election?

Clients can use this API to perform primary election as follows:

1. All potential primaries open the lock file and attempt to acquire the lock. One succeeds and becomes the primary, while the others act as replicas.

2. The primary writes its identity into the lock file with SetContents(), so that it can be found by clients and replicas, which read the file with GetContentsAndStat(), perhaps in response to a file-modification event (§2.5).

3a. Ideally, the primary obtains a sequencer with GetSequencer(), which it then passes to servers it communicates with; they should confirm with CheckSequencer() that it is still the primary.

3b. A lock-delay may be used with services that cannot check sequencers (§2.4).

2.7 Caching

Client cache是Chubby很重要的feature, 因为对于粗粒度锁, 为了避免反复去master读取数据, 会在client cache文件数据和node的元数据

问题是怎么样保证大量client上cache的一致性?

Chubby master维护所有有cache的client list, 并通过发送invalidation(失效通知)的方式来保证所有client cache 的一致性

具体思路是, 在数据变更时, 先发一轮invalidation, 并等所有client cache失效后(收到ack或lease超时), 再更新

除了cache文件数据和node的元数据, client还可用cache handle和lock

To reduce read traffic, Chubby clients cache file data and node meta-data (including file absence) in a consistent, write-through cache held in memory.

The cache is maintained by a lease mechanism described below, and kept consistent by invalidations sent by the master, which keeps a list of what each client may be caching.

The protocol ensures that clients see either a consistent view of Chubby state, or an error.

1. When file data or meta-data is to be changed, the modification is blocked while the master sends invalidations for the data to every client that may have cached it; this mechanism sits on top of KeepAlive RPCs, discussed more fully in the next section.

2. On receipt of an invalidation, a client flushes the invalidated state(清除失效数据) and acknowledgesby making its next KeepAlive call.

3. The modification proceeds only after the server knows that each client has invalidated its cache,

either because the client acknowledged the invalidation,

or because the client allowed its cache lease to expire.

invalidations只需要发送一轮, 对于那些没有ack的client, 只要等到lease结束, 就可用认为这些client没有cache

Only one round of invalidations is needed because the master treats the node as uncachable while cache invalidations remain unacknowledged.

当invalidation的过程中, 大部分client是没有cache的, 对于此时的读操作怎么处理?

Default的方式是不做处理, 没有cache就让读操作直接去master读, 毕竟写操作数量远远小于读操作

但是这样带来的问题, 会导致这段时间master负载过重, 所以另一种方案是, 在invalidation期间, client发现没有cache就block所有的读操作

两种方式可用配合使用...

This approach allows reads always to be processed without delay; this is useful because reads greatly outnumber writes.

An alternative would be to block calls that access the node during invalidation; this would make it less likely that over-eager clients will bombard the master(急切的client, 轰炸式访问master) with uncached accesses during invalidation, at the cost of occasional delays. If this were a problem, one could imagine adopting a hybrid scheme that switched tactics if overload were detected.

讨论如果保持cache一致性的思路, 让cache失效, 还是增量更新cache?

本文从效率考虑, 选择先让cache失效, 再重新cache

The caching protocol is simple: it invalidates cached data on a change, and never updates it.

It would be just as simple to update rather than to invalidate, but updateonly protocols can be arbitrarily inefficient; a client that accessed a file might receive updates indefinitely, causing an unbounded number of unnecessary updates.

采用保持client cache强一致性, 而非更弱的一致性的理由

Despite the overheads of providing strict consistency, we rejected weaker models because we felt that programmers would find them harder to use.

Similarly, mechanisms such as virtual synchrony that require clients to exchange sequence numbers in all messages were considered inappropriate in an environment with diverse preexisting communication protocols.

除了data和meta-data, client还cache handles, 当然是在保证不影响client观察到的语义的前提下.

In addition to caching data and meta-data, Chubby clients cache open handles.

Thus, if a client opens a file it has opened previously, only the first Open() call necessarily leads to an RPC to the master.

This caching is restricted in minor ways so that it never affects the semantics observed by the client: handles on ephemeral files cannot be held open if the application has closed them;

and handles that permit locking can be reused, but cannot be used concurrently by multiple application handles.

Client还允许长时间cache lock, 方便下次client还要使用该lock, 当其他的client需要aquire这个lock时, 发event通知它, 它才release这个lock

象2.5说的, 这种方式几乎没人这样用

Chubby’s protocol permits clients to cache locks—that is, to hold locks longer than strictly necessary in the hope that they can be used again by the same client.

An event informs a lock holder if another client has requested a conflicting lock, allowing the holder to release the lock just when it is needed elsewhere (see §2.5).

2.8 Sessions and KeepAlives

Chubby session, Chubby cell 和 a Chubby client之间的关联(relationship ). client’s handles, locks, and cached data在session中都是有效的

Session lease, session是具有期限的, 称为lease, master保证在lease内不会单方面的终止session. lease到期, 称为session lease timeout

KeepAlives, 如果要保持session, 就需要不断通过keepAlives来让master advance(延长) session lease

A Chubby session is a relationship between a Chubby cell and a Chubby client; it exists for some interval of time, and is maintained by periodic handshakes called KeepAlives.

Unless a Chubby client informs the master otherwise, the client’s handles, locks, and cached data all remain valid provided its session remains valid.

A client requests a new session on first contacting the master of a Chubby cell.

It ends the session explicitly either when it terminates, or if the session has been idle (with no open handles and no calls for a minute).

Each session has an associated lease—an interval of time extending into the future during which the master guarantees not to terminate the session unilaterally. The end of this interval is called the session lease timeout.

Master可以任意延长lease, 但不能缩短lease, 并且在下面三种情况下会延长lease

The master is free to advance(延长) this timeout further into the future, but may not move it backwards(缩短) in time. The master advances the lease timeout in three circumstances:

a. on creation of the session

b. when a master fail-over occurs (see below)

c. when it responds to a KeepAlive RPC from the client

KeepAlives的具体流程

1. Master会block住client发来的keepAlive请求, 直到client当前的lease快过期的时候, 再reply该keepAlive请求并附带新的lease timeout.

lease timeout的具体值, master可以随便定, 默认是12s, 当master认为负载过重的情况下, 可以加大timeout值, 以降低负载

On receiving a KeepAlive, the master typically blocks the RPC (doesnot allow it to return) until the client’s previous lease interval is close to expiring

The master later allows the RPC to return to the client, and thus informs the client of the new lease timeout.

The master may extend the timeout by any amount. The default extension is 12s, but an overloaded master may use higher values to reduce the number of KeepAlive calls it must process.

2. Client在收到master的返回后, 发送新的KeepAlive到master. 可见client会保证总有一个keepAlive被blocked在master

The client initiates a new KeepAlive immediately after receiving the previous reply. Thus, the client ensures that there is almost always a KeepAlive call blocked at the master.

3. KeepAlives reply除了用于extending lease外, 还用于传递event和cache invalidations, 所以当出现event或cache invalidations时, KeepAlives会被提前reply

As well as extending the client’s lease, the KeepAlive reply is used to transmit events and cache invalidations back to the client.

The master allows a KeepAlive to return early when an event or invalidation is to be delivered.

为何在KeepAlive上附带event和invalidation? 而不是分开发送?

Piggybacking(捎带) events on KeepAlive replies ensures that clients cannot maintain a session without acknowledging cache invalidations, and causes all Chubby RPCs to flow from client to master. This simplifies the client, and allows the protocol to operate through firewalls that allow initiation of connections in only one direction.

Client的local lease timeout只是近似于(略小于)master上的lease timeout, 因为考虑到KeepAlive以及响应消耗的时间, 以及master的时钟和client的不同步

The client maintains a local lease timeout that is a conservative approximation of the master’s lease timeout. It differs from the master’s lease timeout because the client must make conservative assumptions both of the time its KeepAlive reply spent in flight, and the rate at which the master’s clock is advancing; to maintain consistency, we require that the server’s clock advance no faster than a known constant factor faster than the client’s.

Client local lease过期时的处理流程

client lease超时, 无法确定master是否已经终结该session, 清空cache, 试图在宽限期内和master完成keepalive, 成功就recover, 宽限期结束仍未成功就意味session expired

在lease过期的情况下, 谁都有权力终结session, 所以宽限期结束的情况下, 仍然没有master的消息, client可以单方面终结session

并返回error给API调用, 从而避免API并无限的block…

If a client’s local lease timeout expires, it becomes unsure whether the master has terminated its session.

1. The client empties and disables its cache, and we say that its session is in ‘jeopardy’(危险境地).

2. The client waits a further interval called the grace period(宽限期), 45s by default.

3. If the client and master manage to exchange a successful KeepAlive before the end of the client’s grace period, the client enables its cache once more.

Otherwise, the client assumes that the session has expired.

This is done so that Chubby API calls do not block indefinitely when a Chubby cell becomes inaccessible; calls return with an error if the grace period ends before communication is re-established.

同时Chubby library可以通过jeopardy event, safe event, expired event来通知application

App收到jeopardy event可以暂停自身, 在收到safe event后再recover, 这样避免在出现master不可用时的app重启(尤其对有large startup overhead的app)

The Chubby library can inform the application when the grace period begins via a jeopardy event.

When the session is known to have survived the communications problem, a safe event tells the client to proceed;

if the session times out instead, an expired event is sent.

This information allows the application to quiesce(停止) itself when it is unsure of the status of its session, and to recover without restarting if the problem proves to be transient.

This can be important in avoiding outages in services with large startup overhead.

If a client holds a handle H on a node and any operation on H fails because the associated session has expired,

all subsequent operations on H (except Close() and Poison()) will fail in the same way. Clients can use this to guarantee that network and server outages cause only a suffix of a sequence of operations to be lost, rather than an arbitrary subsequence, thus allowing complex changes to be marked as committed with a final write.

2.9 Fail-overs

现在考虑当master fail的时候, session的状况

最好的情况, 在client lease没有过期的情况下, 就已经完成new master的election, 这样对client没有什么影响, 即master的fail-over对client是透明的

其次, 在client lease过期, 进入grace period, client会清空当前cache并通知app进入危险期, 并暂停运行, 如果在grace period中, client恢复了和new master之间的session, 再通知app恢复正常状况, 这种case, 对client和app有一定的影响, 但只是造成执行的暂停和的delay.

最差, 在grace period过期后, 仍然无法恢复和new master之间的session, client只能放弃该session, 并向app报告失败

When a master fails or otherwise loses mastership, it discards its in-memory state about sessions, handles, and locks.

The authoritative timer for session leases runs at the master, so until a new master is elected the session lease timer is stopped; this is legal because it is equivalent to extending the client’s lease.

If a master election occurs quickly, clients can contact the new master before their local (approximate) lease timers expire.

If the election takes a long time, clients flush their caches and wait for the grace period while trying to find the new master.

Thus the grace period allows sessions to be maintained across fail-overs that exceed the normal lease timeout.

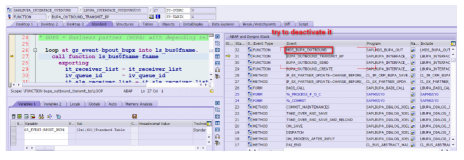

Fail-over 详细交互流程

Figure 2 shows the sequence of events in a lengthy master fail-over event in which the client must use its grace period to preserve its session.

Time increases from left to right, but times are not to scale.

Client session leases are shown as thick arrows both as viewed by both the old and new masters (M1-3, above) and the client (C1-3, below).

Upward angled arrows indicate KeepAlive requests, and downward angled arrows their replies.

横向的粗线表示session lease, 中间带箭头的细线代表keepAlive请求和回复

应该图比较清晰, 当new master被选出后, client第一次发出的Keepalive(4)会被拒绝(由于epoch number), (5)会回复client新的epoch number

第二次发送的Keepalive(6)会成功, 但仍然不能extend master lease (因为M3是保守的, 意思是执行master重建过程中的5,6操作?)

在收到(7)后, client可以通过(8)extend lease C3

(1)The original master has session lease M1 for the client, while the client has a conservative approximation C1 (C1只是M1的保守近似, 图上明显要小于M1, 因为要考虑到消息传递时间和时钟同步问题)

(2)The master commits to lease M2 before informing the client via KeepAlive reply 2; the client is able to extend its view of the lease C2.

(3)The master dies before replying to the next KeepAlive, and some time elapses before another master is elected.

Eventually the client’s approximation of its lease (C2) expires. The client then flushes its cache and starts a timer for the grace period.

During this period, the client cannot be sure whether its lease has expired at the master.

It does not tear down its session, but it blocks all application calls on its API to prevent the application from observing inconsistent data.

At the start of the grace period, the Chubby library sends a jeopardy event to the application to allow it to quiesce itself until it can be sure of the status of its session.

(4)Eventually a new master election succeeds.

The master initially uses a conservative approximation M3 of the session lease that its predecessor may have had for the client.

(5)The first KeepAlive request (4) from the client to the new master is rejected because it has the wrong master epoch number (described in detail below).

(6)The retried request (6) succeeds but typically does not extend the master lease further because M3 was conservative.

(7)However the reply (7) allows the client to extend its lease (C3) once more, and optionally inform the application that its session is no longer in jeopardy.

Because the grace period was long enough to cover the interval between the end of lease C2 and the beginning of lease C3, the client saw nothing but a delay.

Had the grace period been less than that interval, the client would have abandoned the session and reported the failure to the application.

New Master重建过程

Once a client has contacted the new master, the client library and master co-operate to provide the illusion to the application that no failure has occurred.

To achieve this, the new master must reconstruct a conservative approximation of the in-memory state that the previous master had.

It does this partly by reading data stored stably on disc (replicated via the normal database replication protocol), partly by obtaining state from clients, and partly by conservative assumptions. The database records each session, held lock, and ephemeral file.

在new master被elect出来后, 需要进行一系列的重建操作, 才能恢复并处理session相关的操作

首先生成新的epoch number, 避免老的延长的request的干扰

接着, 恢复session和保守的session lease, 并确保所有session都回应fail-over event或者已经过期

最后, 处理遗留的handle和ephemeral files

A newly elected master proceeds:

1. 生成新的epoch number, 并拒绝所有使用旧epoch number的client请求

It first picks a new client epoch number, which clients are required to present on every call.

The master rejects calls from clients using older epoch numbers, and provides the new epoch number.

This ensures that the new master will not respond to a very old packet that was sent to a previous master, even one running on the same machine.

2. 开始响应master-location requests, 但仍不能执行任何session相关的操作请求

The new master may respond to master-location requests, but does not at first process incoming session-related operations.

3. 从DB中读出之前保存的session和lock, 并在内存中恢复, 将session lease设为旧master已经使用的最大值

It builds in-memory data structures for sessions and locks that are recorded in the database.

Session leases are extended to the maximum that the previous master may have been using.

4. 开始响应KeepAlives requests, 但仍不能执行其他session相关的操作请求

The master now lets clients perform KeepAlives, but no other session-related operations.

5. 对每个session(lease没有到期的, 因为如果lease到期client会自动flush cache), 给client发送fail-over event, client会flush cache, 并通知app

It emits a fail-over event to each session; this causes clients to flush their caches (because they may have missed invalidations), and to warn applications that other events may have been lost.

6. 等每个session的client回复fail-over event或session lease到期

The master waits until each session acknowledges the fail-over event or lets its session expire.

7. 开始正常响应所有的操作

The master allows all operations to proceed.

8. 如果client使用旧的(prior to the fail-over)handle, master会在memory中重建该handle, 但是如果这个重建的handle被closed, master将记录并保证在该epoch内不被重建

If a client uses a handle created prior to the fail-over (determined from the value of a sequence number in the handle),

the master recreates the in-memory representation of the handle and honours the call.

If such a recreated handle is closed, the master records it in memory so that it cannot be recreated in this master epoch;

this ensures that a delayed or duplicated network packet cannot accidentally recreate a closed handle.

A faulty client can recreate a closed handle in a future epoch, but this is harmless given that the client is already faulty.

9. 一段时间后(比如, 一分钟), master会删除没有open file handle的临时文件

After some interval (a minute, say), the master deletes ephemeral files that have no open file handles.

Clients should refresh handles on ephemeral files during this interval after a fail-over.

This mechanism has the unfortunate effect that ephemeral files may not disappear promptly if the last client on such a file loses its session during a fail-over.

2.10 Database implementation

数据库的实现使用BerkeleyDB, 使用B树来索引sorted key(path)

BerkeleyDB使用分布式一致性协议来同步数据库log, 以实现replicate

The first version of Chubby used the replicated version of Berkeley DB [20] as its database.

Berkeley DB provides B-trees that map byte-string keys to arbitrary bytestring values. We installed a key comparison function that sorts first by the number of components in a path name; this allows nodes to by keyed by their path name, while keeping sibling nodes adjacent in the sort order.

Because Chubby does not use path-based permissions, a single lookup in the database suffices for each file access.

Berkeley DB’s uses a distributed consensus protocol to replicate its database logs over a set of servers. Once master leases were added, this matched the design of Chubby, which made implementation straightforward.

While Berkeley DB’s B-tree code is widely-used and mature, the replication code was added recently, and has fewer users. Software maintainers must give priority to maintaining and improving their most popular product features. While Berkeley DB’s maintainers solved the problems we had, we felt that use of the replication code exposed us to more risk than we wished to take. As a result,

we have written a simple database using write ahead logging and snapshotting similar to the design of Birrell et al. [2]. As before, the database log is distributed among the replicas using a distributed consensus protocol. Chubby used few of the features of Berkeley DB, and so this rewrite allowed significant simplification of the system as a whole; for example, while we needed atomic operations, we did not need general transactions.

2.11 Backup

会定期对本地chubby cell的database产生snapshot文件, 并发到异地(different building)的GFS服务器上

放在其他的building, 除了防止building damage,

有效避免循环依赖, 因为GFS本身也使用Chubby Cell

在初始化新的replica时, 避免对正在提供服务的replica造成很大的压力

Every few hours, the master of each Chubby cell writes a snapshot of its database to a GFS file server [7] in a different building. The use of a separate building ensures both that the backup will survive building damage, and that the backups introduce no cyclic dependencies in the system; a GFS cell in the same building potentially might rely on the Chubby cell for electing its master.

Backups provide both disaster recovery and a means for initializing the database of a newly replaced replica without placing load on replicas that are in service.

2.12 Mirroring

Chubby可以提供文件镜像(Mirroring)机制.

由于文件一遍比较小, 所以镜像操作一般都很快, 并且Chubby有event机制来保证, 当文件状态发生改变时通知各个镜像.

在网络正常的情况下, 1秒以内file changes可以同步到全球各个镜像cell.

当某个镜像没有响应时, 主cell会一直block changes, 保证状态不变, 直到所有镜像都有响应.

镜像机制常常被用于在全球不同的data center之间同步配置文件.

Chubby allows a collection of files to be mirrored from one cell to another.

Mirroring is fast because the files are small and the event mechanism (§2.5) informs the mirroring code immediately if a file is added, deleted, or modified.

Provided there are no network problems, changes are reflected in dozens of mirrors world-wide in well under a second.

If a mirror is unreachable, it remains unchanged until connectivity is restored.

Updated files are then identified by comparing their checksums.

Mirroring is used most commonly to copy configuration files to various computing clusters distributed around the world.

A special cell, named global, contains a subtree /ls/global/master that is mirrored to the subtree /ls/cell/slave in every other Chubby cell.

The global cell is special because its five replicas are located in widely-separated parts of the world, so it is almost always accessible from most of the organization.

Among the files mirrored from the global cell are Chubby’s own access control lists, various files in which Chubby cells and other systems advertise their presence to our monitoring services, pointers to allow clients to locate large data sets such as Bigtable cells, and many configuration files for other systems.

本文章摘自博客园,原文发布日期:2013-04-27