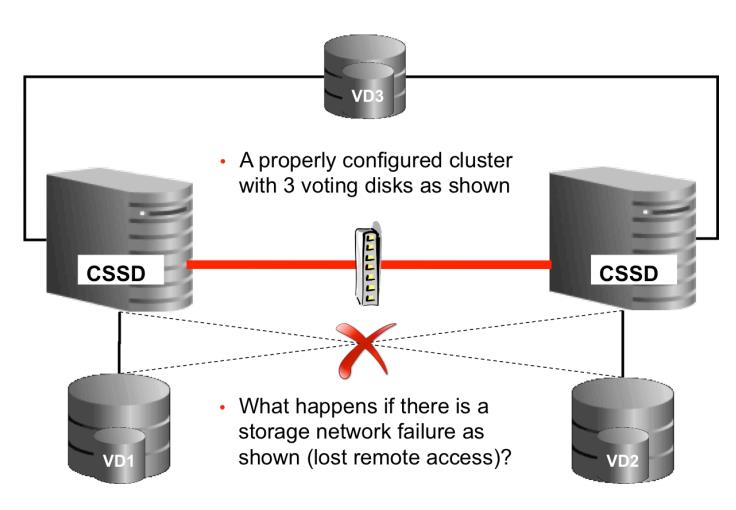

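Fault simulation: the storage network is interrupted, causing a storage split.

Architecture: a two-node RAC using two storage arrays plus a third-party quorum disk. What happens if the storage link is cut?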

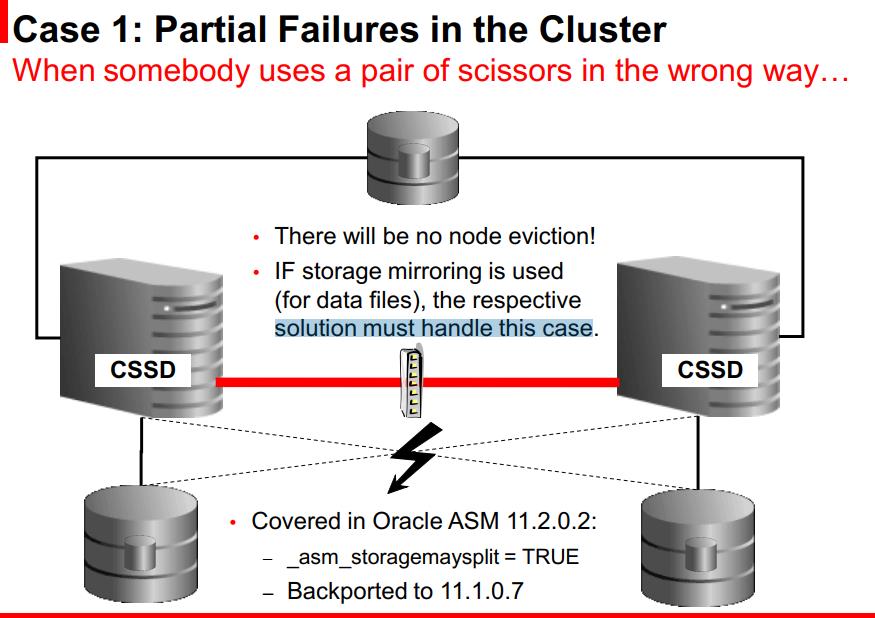

Oracle's solution:

Base environment information:

[grid@ora02 ~]$ crsctl query crs softwareversion

Oracle Clusterware version on node [ora02] is [11.2.0.4.0]

ora01: /dev/oracleasm/disks/VOTE01

ora02: /dev/oracleasm/disks/VOTE02

Quorum disk: /dev/oracleasm/disks/VOTEZC (QUORUM)

After the storage link is cut:

Host ora01 can see the VOTE01 and VOTEZC disks.

Host ora02 can see the VOTE02 and VOTEZC disks.
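Oracle Clusterware keeps a node in the cluster only while it can access a strict majority of the configured voting files. The CRS-1606 message later in this post reports that minimum as 2 for the three files used here. A minimal sketch of that rule follows; the function names `min_voting_files` and `has_voting_quorum` are hypothetical and exist only for illustration.

```python
def min_voting_files(total):
    """Strict majority: more than half of the configured voting files."""
    return total // 2 + 1


def has_voting_quorum(visible, configured):
    """True if the node can see a strict majority of the configured voting files."""
    return len(set(visible) & set(configured)) >= min_voting_files(len(configured))


# Disk visibility after the link is cut, as described above.
CONFIGURED = {"VOTE01", "VOTE02", "VOTEZC"}
VISIBLE = {
    "ora01": {"VOTE01", "VOTEZC"},
    "ora02": {"VOTE02", "VOTEZC"},
}

for node, disks in VISIBLE.items():
    print(node, len(disks), "of", len(CONFIGURED), has_voting_quorum(disks, CONFIGURED))
```

By raw disk visibility both nodes still hold 2 of 3 voting files, so visibility alone does not decide which node survives. In the logs below, ora02 is evicted only after its count of available voting files drops to 1.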

Disk group information:

DG_NAME DG_STATE TYPE DSK_NO DSK_NAME PATH MOUNT_S FAILGROUP STATE

--------------- ---------- ------ ---------- ---------- ------------------------------------------------------------ ------- -------------------- --------

CRS MOUNTED NORMAL 0 CRS_0000 /dev/oracleasm/disks/VOTE01 CACHED CRS_0000 NORMAL

CRS MOUNTED NORMAL 1 CRS_0001 /dev/oracleasm/disks/VOTE02 CACHED CRS_0001 NORMAL

CRS MOUNTED NORMAL 3 CRS_0003 /dev/oracleasm/disks/VOTEZC CACHED SYSFG3 NORMAL

DISK_NUMBER NAME PATH HEADER_STATUS OS_MB TOTAL_MB FREE_MB REPAIR_TIMER V FAILGRO

----------- ---------- ------------------------------ -------------------- ---------- ---------- ---------- ------------ - -------

1 CRS_0001 /dev/oracleasm/disks/VOTE02 MEMBER 2047 2047 1617 11861 N REGULAR

0 CRS_0000 /dev/oracleasm/disks/VOTE01 MEMBER 2055 2055 1657 0 Y REGULAR

3 CRS_0003 /dev/oracleasm/disks/VOTEZC MEMBER 2055 2055 2021 0 Y QUORUM

GROUP_NUMBER NAME COMPATIBILITY DATABASE_COMPATIBILITY V

------------ ---------- ------------------------------------------------------------ ------------------------------------------------------------ -

1 CRS 11.2.0.0.0 11.2.0.0.0

Cluster status information:

[grid@ora01 ~]$ crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.CRS.dg

ONLINE ONLINE ora01

ONLINE ONLINE ora02

ora.LISTENER.lsnr

ONLINE ONLINE ora01

ONLINE ONLINE ora02

ora.asm

ONLINE ONLINE ora01 Started

ONLINE ONLINE ora02 Started

ora.gsd

OFFLINE OFFLINE ora01

OFFLINE OFFLINE ora02

ora.net1.network

ONLINE ONLINE ora01

ONLINE ONLINE ora02

ora.ons

ONLINE ONLINE ora01

ONLINE ONLINE ora02

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE ora02

ora.cvu

1 ONLINE ONLINE ora02

ora.oc4j

1 ONLINE ONLINE ora02

ora.ora01.vip

1 ONLINE ONLINE ora01

ora.ora02.vip

1 ONLINE ONLINE ora02

ora.scan1.vip

1 ONLINE ONLINE ora02

Voting disk information:

[grid@ora01 ~]$ crsctl query css votedisk

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE 366b8fb4dcd24f70bf65e8b028be14b9 (/dev/oracleasm/disks/VOTE01) [CRS]

2. ONLINE 3f765b45e8b24f75bfb17088d03cb905 (/dev/oracleasm/disks/VOTEZC) [CRS]

3. ONLINE 87754e5e2d554fe3bf9b0f6381c2c2dc (/dev/oracleasm/disks/VOTE02) [CRS]

Located 3 voting disk(s).

Storage link cut; alert log information (Sun May 26 14:14:25 CST 2019)

Result: host ora01 stays up and host ora02 is evicted:

[grid@ora01 ~]$ crsctl query css votedisk

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE 3f765b45e8b24f75bfb17088d03cb905 (/dev/oracleasm/disks/VOTEZC) [CRS]

2. ONLINE 8ff7f13ec1fd4f36bf99451e933f03e0 (/dev/oracleasm/disks/VOTE01) [CRS]

Located 2 voting disk(s).
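To compare the two `crsctl query css votedisk` listings above without reading the IDs by eye, the rows can be parsed and diffed. This is only a sketch: the regular expression is an assumption based on the row layout shown in this post, and `parse_votedisks` is a hypothetical helper name.

```python
import re

# Row layout assumed from the output above:
#   N. STATE <32-hex File Universal Id> (<path>) [<disk group>]
VOTEDISK_ROW = re.compile(
    r"^\s*\d+\.\s+(?P<state>\S+)\s+(?P<fuid>[0-9a-f]{32})\s+"
    r"\((?P<path>[^)]+)\)\s+\[(?P<dg>[^\]]+)\]"
)


def parse_votedisks(text):
    """Return {path: (state, file_universal_id)} for every voting file row."""
    disks = {}
    for line in text.splitlines():
        m = VOTEDISK_ROW.match(line)
        if m:
            disks[m["path"]] = (m["state"], m["fuid"])
    return disks


BEFORE = """\
1. ONLINE 366b8fb4dcd24f70bf65e8b028be14b9 (/dev/oracleasm/disks/VOTE01) [CRS]
2. ONLINE 3f765b45e8b24f75bfb17088d03cb905 (/dev/oracleasm/disks/VOTEZC) [CRS]
3. ONLINE 87754e5e2d554fe3bf9b0f6381c2c2dc (/dev/oracleasm/disks/VOTE02) [CRS]
"""
AFTER = """\
1. ONLINE 3f765b45e8b24f75bfb17088d03cb905 (/dev/oracleasm/disks/VOTEZC) [CRS]
2. ONLINE 8ff7f13ec1fd4f36bf99451e933f03e0 (/dev/oracleasm/disks/VOTE01) [CRS]
"""

before, after = parse_votedisks(BEFORE), parse_votedisks(AFTER)
removed = sorted(set(before) - set(after))
changed_id = sorted(
    p for p in before.keys() & after.keys() if before[p][1] != after[p][1]
)
print("removed:", removed)
print("File Universal Id changed:", changed_id)
```

The diff shows that VOTE02 is gone from the list and that the File Universal Id of VOTE01 differs between the two listings, which appears consistent with the voting file relocation messages in the ASM alert log below.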

[grid@ora01 ~]$ crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.CRS.dg

ONLINE ONLINE ora01

ora.LISTENER.lsnr

ONLINE ONLINE ora01

ora.asm

ONLINE ONLINE ora01 Started

ora.gsd

OFFLINE OFFLINE ora01

ora.net1.network

ONLINE ONLINE ora01

ora.ons

ONLINE ONLINE ora01

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE ora01

ora.cvu

1 ONLINE ONLINE ora01

ora.oc4j

1 ONLINE ONLINE ora01

ora.ora01.vip

1 ONLINE ONLINE ora01

ora.ora02.vip

1 ONLINE INTERMEDIATE ora01 FAILED OVER

ora.scan1.vip

1 ONLINE ONLINE ora01

ORA01 ASM alert log:

Sun May 26 14:14:41 2019

WARNING: Waited 15 secs for write IO to PST disk 1 in group 1.

WARNING: Waited 15 secs for write IO to PST disk 1 in group 1.

Sun May 26 14:16:34 2019

WARNING: Read Failed. group:1 disk:1 AU:1 offset:0 size:4096

WARNING: Read Failed. group:1 disk:1 AU:1 offset:4096 size:4096

WARNING: Write Failed. group:1 disk:1 AU:1 offset:0 size:4096

WARNING: GMON has insufficient disks to maintain consensus. Minimum required is 2: updating 2 PST copies from a total of 3.

NOTE: group CRS: updated PST location: disk 0003 (PST copy 0)

NOTE: group CRS: updated PST location: disk 0000 (PST copy 1)

WARNING: Write Failed. group:1 disk:1 AU:1 offset:1044480 size:4096

WARNING: Hbeat write to PST disk 1.3915288679 in group 1 failed. [4]

Sun May 26 14:16:34 2019

NOTE: process _b000_+asm1 (61111) initiating offline of disk 1.3915288679 (CRS_0001) with mask 0x7e in group 1

NOTE: checking PST: grp = 1

GMON checking disk modes for group 1 at 14 for pid 24, osid 61111

WARNING: GMON has insufficient disks to maintain consensus. Minimum required is 2: updating 2 PST copies from a total of 3.

NOTE: group CRS: updated PST location: disk 0003 (PST copy 0)

NOTE: group CRS: updated PST location: disk 0000 (PST copy 1)

NOTE: checking PST for grp 1 done.

NOTE: sending set offline flag message 764564842 to 1 disk(s) in group 1

WARNING: Disk CRS_0001 in mode 0x7f is now being offlined

NOTE: initiating PST update: grp = 1, dsk = 1/0xe95e9067, mask = 0x6a, op = clear

WARNING: Write Failed. group:1 disk:1 AU:1 offset:0 size:4096

WARNING: GMON has insufficient disks to maintain consensus. Minimum required is 2: updating 2 PST copies from a total of 3.

NOTE: group CRS: updated PST location: disk 0003 (PST copy 0)

NOTE: group CRS: updated PST location: disk 0000 (PST copy 1)

GMON updating disk modes for group 1 at 15 for pid 24, osid 61111

Sun May 26 14:16:35 2019

NOTE: Attempting voting file refresh on diskgroup CRS

WARNING: Read Failed. group:1 disk:1 AU:0 offset:0 size:4096

NOTE: Refresh completed on diskgroup CRS

. Found 3 voting file(s).

NOTE: Voting file relocation is required in diskgroup CRS

NOTE: Attempting voting file relocation on diskgroup CRS

WARNING: Read Failed. group:1 disk:1 AU:0 offset:0 size:4096

NOTE: Successful voting file relocation on diskgroup CRS

NOTE: group CRS: updated PST location: disk 0003 (PST copy 0)

NOTE: group CRS: updated PST location: disk 0000 (PST copy 1)

Sun May 26 14:16:36 2019

Received dirty detach msg from inst 2 for dom 1

Sun May 26 14:16:36 2019

List of instances:

1 2

Dirty detach reconfiguration started (new ddet inc 1, cluster inc 4)

Global Resource Directory partially frozen for dirty detach

* dirty detach - domain 1 invalid = TRUE

130 GCS resources traversed, 0 cancelled

Dirty Detach Reconfiguration complete

Sun May 26 14:16:37 2019

NOTE: SMON starting instance recovery for group CRS domain 1 (mounted)

NOTE: F1X0 found on disk 0 au 2 fcn 0.3148

NOTE: SMON skipping disk 1 (mode=00000015)

NOTE: starting recovery of thread=2 ckpt=6.78 group=1 (CRS)

NOTE: ASM recovery sucessfully read ACD from one mirror side

Errors in file /u01/app/grid/diag/asm/+asm/+ASM1/trace/+ASM1_smon_60121.trc:

ORA-15062: ASM disk is globally closed

ORA-15062: ASM disk is globally closed

NOTE: SMON waiting for thread 2 recovery enqueue

NOTE: SMON about to begin recovery lock claims for diskgroup 1 (CRS)

NOTE: SMON successfully validated lock domain 1

Errors in file /u01/app/grid/diag/asm/+asm/+ASM1/trace/+ASM1_smon_60121.trc:

ORA-15025: could not open disk "/dev/oracleasm/disks/VOTE02"

ORA-27041: unable to open file

Linux-x86_64 Error: 6: No such device or address

Additional information: 3

NOTE: advancing ckpt for group 1 (CRS) thread=2 ckpt=6.78

NOTE: cache initiating offline of disk 1 group CRS

NOTE: PST update grp = 1 completed successfully

NOTE: initiating PST update: grp = 1, dsk = 1/0xe95e9067, mask = 0x7e, op = clear

GMON updating disk modes for group 1 at 16 for pid 24, osid 61111

NOTE: group CRS: updated PST location: disk 0003 (PST copy 0)

NOTE: group CRS: updated PST location: disk 0000 (PST copy 1)

NOTE: cache closing disk 1 of grp 1: CRS_0001

NOTE: successfully wrote at least one mirror side for diskgroup CRS

NOTE: SMON did instance recovery for group CRS domain 1

NOTE: PST update grp = 1 completed successfully

NOTE: Attempting voting file refresh on diskgroup CRS

NOTE: Refresh completed on diskgroup CRS

. Found 2 voting file(s).

NOTE: Voting file relocation is required in diskgroup CRS

NOTE: Attempting voting file relocation on diskgroup CRS

NOTE: Successful voting file relocation on diskgroup CRS

Sun May 26 14:16:41 2019

NOTE: successfully read ACD block gn=1 blk=0 via retry read

Errors in file /u01/app/grid/diag/asm/+asm/+ASM1/trace/+ASM1_lgwr_60117.trc:

ORA-15062: ASM disk is globally closed

NOTE: successfully read ACD block gn=1 blk=0 via retry read

Errors in file /u01/app/grid/diag/asm/+asm/+ASM1/trace/+ASM1_lgwr_60117.trc:

ORA-15062: ASM disk is globally closed

Sun May 26 14:17:48 2019

Reconfiguration started (old inc 4, new inc 6)

List of instances:

1 (myinst: 1)

Global Resource Directory frozen

Communication channels reestablished

Master broadcasted resource hash value bitmaps

Non-local Process blocks cleaned out

Sun May 26 14:17:48 2019

LMS 0: 0 GCS shadows cancelled, 0 closed, 0 Xw survived

Set master node info

Submitted all remote-enqueue requests

Dwn-cvts replayed, VALBLKs dubious

All grantable enqueues granted

Post SMON to start 1st pass IR

Submitted all GCS remote-cache requests

Post SMON to start 1st pass IR

Fix write in gcs resources

Reconfiguration complete

Sun May 26 14:18:22 2019

WARNING: Disk 1 (CRS_0001) in group 1 will be dropped in: (16200) secs on ASM inst 1

Sun May 26 14:18:26 2019

NOTE: successfully read ACD block gn=1 blk=0 via retry read

Errors in file /u01/app/grid/diag/asm/+asm/+ASM1/trace/+ASM1_lgwr_60117.trc:

ORA-15062: ASM disk is globally closed

NOTE: successfully read ACD block gn=1 blk=0 via retry read

Errors in file /u01/app/grid/diag/asm/+asm/+ASM1/trace/+ASM1_lgwr_60117.trc:

ORA-15062: ASM disk is globally closed

ORA01 Grid alert log:

2019-05-26 14:16:04.237:

[cssd(59878)]CRS-1615:No I/O has completed after 50% of the maximum interval. Voting file /dev/oracleasm/disks/VOTE02 will be considered not functional in 99420 milliseconds

2019-05-26 14:16:34.485:

[cssd(59878)]CRS-1649:An I/O error occured for voting file: /dev/oracleasm/disks/VOTE02; details at (:CSSNM00060:) in /u01/app/11.2.0/grid/log/ora01/cssd/ocssd.log.

2019-05-26 14:16:34.485:

[cssd(59878)]CRS-1649:An I/O error occured for voting file: /dev/oracleasm/disks/VOTE02; details at (:CSSNM00059:) in /u01/app/11.2.0/grid/log/ora01/cssd/ocssd.log.

2019-05-26 14:16:35.213:

[cssd(59878)]CRS-1626:A Configuration change request completed successfully

2019-05-26 14:16:35.293:

[cssd(59878)]CRS-1601:CSSD Reconfiguration complete. Active nodes are ora01 ora02 .

2019-05-26 14:17:47.915:

[cssd(59878)]CRS-1625:Node ora02, number 2, was manually shut down

2019-05-26 14:17:47.931:

[cssd(59878)]CRS-1601:CSSD Reconfiguration complete. Active nodes are ora01 .

2019-05-26 14:17:47.945:

[crsd(60182)]CRS-5504:Node down event reported for node 'ora02'.

2019-05-26 14:17:52.789:

[crsd(60182)]CRS-2773:Server 'ora02' has been removed from pool 'Free'.

ora01 ocssd.log:

2019-05-26 14:16:34.485: [ CSSD][2956273408](:CSSNM00060:)clssnmvReadBlocks: read failed at offset 4 of /dev/oracleasm/disks/VOTE02

2019-05-26 14:16:34.485: [ CSSD][2956273408]clssnmvDiskAvailabilityChange: voting file /dev/oracleasm/disks/VOTE02 now offline

2019-05-26 14:16:34.485: [ CSSD][2956273408]clssnmvVoteDiskValidation: Failed to perform IO on toc block for /dev/oracleasm/disks/VOTE02

2019-05-26 14:16:34.485: [ CSSD][2956273408]clssnmvWorkerThread: disk /dev/oracleasm/disks/VOTE02 corrupted

2019-05-26 14:16:34.485: [ SKGFD][2957850368]ERROR: -9(Error 27072, OS Error (Linux-x86_64 Error: 5: Input/output error

Additional information: 4

Additional information: 524305

Additional information: -1)

)

2019-05-26 14:16:34.485: [ CSSD][2957850368](:CSSNM00059:)clssnmvWriteBlocks: write failed at offset 17 of /dev/oracleasm/disks/VOTE02

2019-05-26 14:16:34.543: [ CSSD][2470409984]clssscMonitorThreads clssnmvDiskPingThread not scheduled for 129890 msecs

2019-05-26 14:16:34.543: [ CSSD][2470409984]clssscMonitorThreads clssnmvWorkerThread not scheduled for 129070 msecs

2019-05-26 14:16:34.543: [ SKGFD][2956273408]Lib :UFS:: closing handle 0x7f0c8c15a820 for disk :/dev/oracleasm/disks/VOTE02:

2019-05-26 14:16:34.544: [ CSSD][2956273408]clssnmvScanCompletions: completed 1 items

2019-05-26 14:16:34.544: [ SKGFD][2959832832]Lib :UFS:: closing handle 0x14250d0 for disk :/dev/oracleasm/disks/VOTE02:

2019-05-26 14:16:35.213: [ CSSD][2471986944]clssnmDoSyncUpdate: Sync 454365169 complete!

2019-05-26 14:16:35.213: [ CSSD][2470409984]clssnmHandleUpdate: sync[454365169] src[1], msgvers 4 icin 454365165

2019-05-26 14:16:35.213: [ CSSD][2470409984]clssnmCompleteConfigChange: Completed configuration change reconfig for CIN 0:1558876364:6 with status 1

2019-05-26 14:16:35.213: [ CSSD][2470409984]clssnmCompleteConfigChange: Committed configuration change for CIN 0:1558876364:6

2019-05-26 14:16:35.213: [ CSSD][2470409984] misscount 30 reboot latency 3

2019-05-26 14:16:35.213: [ CSSD][2470409984] long I/O timeout 200 short I/O timeout 27

2019-05-26 14:16:35.213: [ CSSD][2470409984] diagnostic wait 0 active version 11.2.0.4.0

2019-05-26 14:16:35.213: [ CSSD][2470409984] Listing unique IDs for 2 voting files:

2019-05-26 14:16:35.213: [ CSSD][2470409984] voting file 1: 3f765b45-e8b24f75-bfb17088-d03cb905

2019-05-26 14:16:35.213: [ CSSD][2470409984] voting file 2: 8ff7f13e-c1fd4f36-bf99451e-933f03e0

2019-05-26 14:16:35.213: [ CSSD][2470409984]clssnmSetParamsFromConfig: remote SIOT 27000, local SIOT 27000, LIOT 200000, misstime 30000, reboottime 3000, impending misstime 15000, voting file reopen delay 4000

2019-05-26 14:16:35.213: [ CSSD][2470409984]clssnmvDiskStateChange: state from configured to deconfigured disk /dev/oracleasm/disks/VOTE02

2019-05-26 14:16:35.213: [ CSSD][2470409984]clssnmCompleteGMReq: Completed request type 1 with status 1

2019-05-26 14:16:35.213: [ CSSD][2470409984]clssgmDoneQEle: re-queueing req 0x7f0ca8253830 status 1

2019-05-26 14:16:35.213: [ CSSD][2470409984]clssnmHandleUpdate: Using new configuration to CIN 1558876364, unique 6

2019-05-26 14:16:35.213: [ CSSD][2470409984]clssnmHandleUpdate: common properties are 1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17

2019-05-26 14:16:35.213: [ CSSD][2470409984]clssnmUpdateNodeState: node ora01, number 1, current state 3, proposed state 3, current unique 1558850975, proposed unique 1558850975, prevConuni 0, birth 454365165

2019-05-26 14:16:35.213: [ CSSD][2470409984]clssnmUpdateNodeState: node ora02, number 2, current state 3, proposed state 3, current unique 1558850985, proposed unique 1558850985, prevConuni 0, birth 454365166

2019-05-26 14:16:35.213: [ CSSD][2470409984]clssnmSendAck: node 1, ora01, syncSeqNo(454365169) type(15)

2019-05-26 14:16:35.213: [ CSSD][2470409984]clssnmQueueClientEvent: Sending Event(1), type 1, incarn 454365169

2019-05-26 14:16:35.213: [ CSSD][2470409984]clssnmQueueClientEvent: Node[1] state = 3, birth = 454365165, unique = 1558850975

2019-05-26 14:16:35.213: [ CSSD][2470409984]clssnmQueueClientEvent: Node[2] state = 3, birth = 454365166, unique = 1558850985

2019-05-26 14:16:35.213: [ CSSD][2470409984]clssnmHandleUpdate: SYNC(454365169) from node(1) completed

2019-05-26 14:16:35.213: [ CSSD][2470409984]clssnmHandleUpdate: NODE 1 (ora01) IS ACTIVE MEMBER OF CLUSTER

2019-05-26 14:16:35.213: [ CSSD][2470409984]clssnmHandleUpdate: NODE 2 (ora02) IS ACTIVE MEMBER OF CLUSTER

2019-05-26 14:16:35.213: [ CSSD][2470409984]clssscUpdateEventValue: NMReconfigInProgress val -1, changes 15

2019-05-26 14:16:35.213: [ CSSD][2470409984]clssnmHandleUpdate: local disk timeout set to 200000 ms, remote disk timeout set to 200000

2019-05-26 14:16:35.213: [ CSSD][2470409984]clssnmHandleAck: node ora01, number 1, sent ack type 15 for wrong reconfig; ack is for reconfig 454365169 and we are on reconfig 454365170
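The ocssd.log excerpt above reports a long I/O timeout of 200 seconds (`LIOT 200000`). The CRS-1615, CRS-1614 and CRS-1613 warnings in the Grid alert logs fire at 50%, 75% and 90% of "the maximum interval". Assuming that interval is the long I/O timeout (an inference from the numbers, not something the logs state), the remaining time each warning should report can be checked with a little arithmetic. The `remaining_ms` helper is hypothetical.

```python
LONG_IO_TIMEOUT_MS = 200_000  # "LIOT 200000" in the ocssd.log excerpt above


def remaining_ms(percent_elapsed, timeout_ms=LONG_IO_TIMEOUT_MS):
    """Time left before the voting file is declared not functional."""
    return timeout_ms * (100 - percent_elapsed) // 100


# (message code, percent elapsed, remaining ms reported in ora02's Grid alert log)
OBSERVED = [
    ("CRS-1615", 50, 99010),
    ("CRS-1614", 75, 49350),
    ("CRS-1613", 90, 19330),
]

for code, pct, reported in OBSERVED:
    expected = remaining_ms(pct)
    print(code, "expected", expected, "reported", reported, "lag", expected - reported)
```

Under that assumption the reported values sit a little below the computed ones (by under one second in each case), which would fit a message that is logged shortly after its threshold is crossed.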

ORA02 ASM alert log:

Sun May 26 14:16:26 2019

WARNING: Read Failed. group:1 disk:0 AU:1 offset:0 size:4096

WARNING: Read Failed. group:1 disk:0 AU:1 offset:4096 size:4096

WARNING: Read Failed. group:1 disk:0 AU:1 offset:0 size:4096

WARNING: Write Failed. group:1 disk:0 AU:1 offset:0 size:4096

WARNING: GMON has insufficient disks to maintain consensus. Minimum required is 2: updating 2 PST copies from a total of 3.

NOTE: group CRS: updated PST location: disk 0003 (PST copy 0)

NOTE: group CRS: updated PST location: disk 0001 (PST copy 1)

WARNING: GMON has insufficient disks to maintain consensus. Minimum required is 2: updating 2 PST copies from a total of 3.

NOTE: group CRS: updated PST location: disk 0003 (PST copy 0)

NOTE: group CRS: updated PST location: disk 0001 (PST copy 1)

WARNING: Disk CRS_0001 in mode 0x7f is now being offlined

WARNING: GMON failed to write a quorum of target disks in group 1 (1 of 2)

ERROR: Could not read PST for grp 1. Force dismounting the disk group.

Sun May 26 14:16:28 2019

NOTE: cache dismounting (not clean) group 1/0x26CEC90C (CRS)

NOTE: messaging CKPT to quiesce pins Unix process pid: 58335, image: oracle@ora02 (B000)

Sun May 26 14:16:28 2019

NOTE: halting all I/Os to diskgroup 1 (CRS)

Sun May 26 14:16:28 2019

NOTE: LGWR doing non-clean dismount of group 1 (CRS)

NOTE: LGWR sync ABA=6.77 last written ABA 6.77

WARNING: GMON failed to write a quorum of target disks in group 1 (1 of 2)

ERROR: Could not read PST for grp 1. Force dismounting the disk group.

Sun May 26 14:16:28 2019

kjbdomdet send to inst 1

detach from dom 1, sending detach message to inst 1

Sun May 26 14:16:28 2019

List of instances:

1 2

Dirty detach reconfiguration started (new ddet inc 1, cluster inc 4)

Global Resource Directory partially frozen for dirty detach

* dirty detach - domain 1 invalid = TRUE

Sun May 26 14:16:28 2019

NOTE: Attempting voting file refresh on diskgroup CRS

WARNING: Read Failed. group:1 disk:0 AU:0 offset:0 size:4096

WARNING: Read Failed. group:1 disk:1 AU:0 offset:0 size:4096

Sun May 26 14:16:28 2019

NOTE: Refresh completed on diskgroup CRS

. Found 2 voting file(s).

NOTE: Voting file relocation is required in diskgroup CRS

NOTE: process _b001_+asm2 (58338) initiating offline of disk 0.3915266559 (CRS_0000) with mask 0x7e in group 1

NOTE: checking PST: grp = 1

146 GCS resources traversed, 0 cancelled

WARNING: GMON failed to write a quorum of target disks in group 1 (1 of 2)

ERROR: Could not read PST for grp 1. Force dismounting the disk group.

NOTE: Attempting voting file relocation on diskgroup CRS

WARNING: Read Failed. group:1 disk:0 AU:0 offset:0 size:4096

WARNING: Read Failed. group:1 disk:1 AU:0 offset:0 size:4096

Dirty Detach Reconfiguration complete

NOTE: Failed voting file relocation on diskgroup CRS

ERROR: ORA-15130 in COD recovery for diskgroup 1/0x26cec90c (CRS)

ERROR: ORA-15130 thrown in RBAL for group number 1

GMON checking disk modes for group 1 at 5 for pid 28, osid 58338

Errors in file /u01/app/grid/diag/asm/+asm/+ASM2/trace/+ASM2_rbal_57550.trc:

ORA-15130: diskgroup "CRS" is being dismounted

WARNING: GMON failed to write a quorum of target disks in group 1 (1 of 2)

NOTE: checking PST for grp 1 done.

NOTE: initiating PST update: grp = 1, dsk = 0/0xe95e39ff, mask = 0x6a, op = clear

WARNING: Write Failed. group:1 disk:0 AU:1 offset:0 size:4096

WARNING: GMON failed to write a quorum of target disks in group 1 (1 of 2)

ERROR: Could not read PST for grp 1. Force dismounting the disk group.

GMON updating disk modes for group 1 at 6 for pid 28, osid 58338

WARNING: Write Failed. group:1 disk:0 AU:1 offset:4096 size:4096

WARNING: GMON failed to write a quorum of target disks in group 1 (1 of 2)

WARNING: Offline for disk CRS_0000 in mode 0x7f failed.

NOTE: Suppress further IO Read errors on group:1 disk:0

Sun May 26 14:16:29 2019

WARNING: dirty detached from domain 1

NOTE: cache dismounted group 1/0x26CEC90C (CRS)

NOTE: cache deleting context for group CRS 1/0x26cec90c

WARNING: GMON failed to write a quorum of target disks in group 1 (1 of 2)

ERROR: Could not read PST for grp 1. Force dismounting the disk group.

WARNING: GMON failed to write a quorum of target disks in group 1 (1 of 2)

ERROR: Could not read PST for grp 1. Force dismounting the disk group.

WARNING: GMON failed to write a quorum of target disks in group 1 (1 of 2)

ERROR: Could not read PST for grp 1. Force dismounting the disk group.

WARNING: GMON failed to write a quorum of target disks in group 1 (1 of 2)

ERROR: Could not read PST for grp 1. Force dismounting the disk group.

WARNING: GMON failed to write a quorum of target disks in group 1 (1 of 2)

ERROR: Could not read PST for grp 1. Force dismounting the disk group.

WARNING: GMON failed to write a quorum of target disks in group 1 (1 of 2)

ERROR: Could not read PST for grp 1. Force dismounting the disk group.

WARNING: GMON failed to write a quorum of target disks in group 1 (1 of 2)

ERROR: Could not read PST for grp 1. Force dismounting the disk group.

WARNING: GMON failed to write a quorum of target disks in group 1 (1 of 2)

ERROR: Could not read PST for grp 1. Force dismounting the disk group.

SQL> alter diskgroup CRS dismount force /* ASM SERVER:651086092 */

WARNING: GMON failed to write a quorum of target disks in group 1 (1 of 2)

ERROR: Could not read PST for grp 1. Force dismounting the disk group.

ERROR: no read quorum in group: required 2, found 0 disks

ERROR: Could not read PST for grp 1. Force dismounting the disk group.

ERROR: no read quorum in group: required 2, found 0 disks

ERROR: Could not read PST for grp 1. Force dismounting the disk group.

GMON dismounting group 1 at 7 for pid 23, osid 58335

NOTE: Disk CRS_0000 in mode 0x7f marked for de-assignment

NOTE: Disk CRS_0001 in mode 0x7f marked for de-assignment

NOTE: Disk CRS_0003 in mode 0x7f marked for de-assignment

SUCCESS: diskgroup CRS was dismounted

SUCCESS: alter diskgroup CRS dismount force /* ASM SERVER:651086092 */

SUCCESS: ASM-initiated MANDATORY DISMOUNT of group CRS

Sun May 26 14:16:29 2019

NOTE: diskgroup resource ora.CRS.dg is offline

Sun May 26 14:17:41 2019

Received an instance abort message from instance 1

Please check instance 1 alert and LMON trace files for detail.

Sun May 26 14:17:41 2019

NOTE: ASMB process exiting, either shutdown is in progress

NOTE: or foreground connected to ASMB was killed.

Sun May 26 14:17:41 2019

Received an instance abort message from instance 1

Please check instance 1 alert and LMON trace files for detail.

Sun May 26 14:17:41 2019

NOTE: client exited [57576]

LMD0 (ospid: 57532): terminating the instance due to error 481

Instance terminated by LMD0, pid = 57532

ORA02 Grid alert log:

2019-05-26 14:15:57.472:

[cssd(57356)]CRS-1615:No I/O has completed after 50% of the maximum interval. Voting file /dev/oracleasm/disks/VOTE01 will be considered not functional in 99010 milliseconds

2019-05-26 14:16:26.501:

[cssd(57356)]CRS-1649:An I/O error occured for voting file: /dev/oracleasm/disks/VOTE01; details at (:CSSNM00059:) in /u01/app/11.2.0/grid/log/ora02/cssd/ocssd.log.

2019-05-26 14:16:26.501:

[cssd(57356)]CRS-1649:An I/O error occured for voting file: /dev/oracleasm/disks/VOTE01; details at (:CSSNM00060:) in /u01/app/11.2.0/grid/log/ora02/cssd/ocssd.log.

2019-05-26 14:16:27.289:

[cssd(57356)]CRS-1604:CSSD voting file is offline: /dev/oracleasm/disks/VOTE02; details at (:CSSNM00069:) in /u01/app/11.2.0/grid/log/ora02/cssd/ocssd.log.

2019-05-26 14:16:27.354:

[cssd(57356)]CRS-1626:A Configuration change request completed successfully

2019-05-26 14:16:27.365:

[cssd(57356)]CRS-1601:CSSD Reconfiguration complete. Active nodes are ora01 ora02 .

2019-05-26 14:16:47.135:

[cssd(57356)]CRS-1614:No I/O has completed after 75% of the maximum interval. Voting file /dev/oracleasm/disks/VOTE01 will be considered not functional in 49350 milliseconds

2019-05-26 14:17:17.151:

[cssd(57356)]CRS-1613:No I/O has completed after 90% of the maximum interval. Voting file /dev/oracleasm/disks/VOTE01 will be considered not functional in 19330 milliseconds

2019-05-26 14:17:37.160:

[cssd(57356)]CRS-1604:CSSD voting file is offline: /dev/oracleasm/disks/VOTE01; details at (:CSSNM00058:) in /u01/app/11.2.0/grid/log/ora02/cssd/ocssd.log.

2019-05-26 14:17:37.160:

[cssd(57356)]CRS-1606:The number of voting files available, 1, is less than the minimum number of voting files required, 2, resulting in CSSD termination to ensure data integrity; details at (:CSSNM00018:) in /u01/app/11.2.0/grid/log/ora02/cssd/ocssd.log

2019-05-26 14:17:37.160:

[cssd(57356)]CRS-1656:The CSS daemon is terminating due to a fatal error; Details at (:CSSSC00012:) in /u01/app/11.2.0/grid/log/ora02/cssd/ocssd.log

2019-05-26 14:17:37.258:

[cssd(57356)]CRS-1652:Starting clean up of CRSD resources.

2019-05-26 14:17:38.969:

[/u01/app/11.2.0/grid/bin/oraagent.bin(57738)]CRS-5016:Process "/u01/app/11.2.0/grid/opmn/bin/onsctli" spawned by agent "/u01/app/11.2.0/grid/bin/oraagent.bin" for action "check" failed: details at "(:CLSN00010:)" in "/u01/app/11.2.0/grid/log/ora02/agent/crsd/oraagent_grid/oraagent_grid.log"

2019-05-26 14:17:39.783:

[/u01/app/11.2.0/grid/bin/oraagent.bin(57738)]CRS-5016:Process "/u01/app/11.2.0/grid/bin/lsnrctl" spawned by agent "/u01/app/11.2.0/grid/bin/oraagent.bin" for action "check" failed: details at "(:CLSN00010:)" in "/u01/app/11.2.0/grid/log/ora02/agent/crsd/oraagent_grid/oraagent_grid.log"

2019-05-26 14:17:39.818:

[cssd(57356)]CRS-1654:Clean up of CRSD resources finished successfully.

2019-05-26 14:17:39.820:

[cssd(57356)]CRS-1655:CSSD on node ora02 detected a problem and started to shutdown.

2019-05-26 14:17:40.021:

[/u01/app/11.2.0/grid/bin/oraagent.bin(57738)]CRS-5822:Agent '/u01/app/11.2.0/grid/bin/oraagent_grid' disconnected from server. Details at (:CRSAGF00117:) {0:3:7} in /u01/app/11.2.0/grid/log/ora02/agent/crsd/oraagent_grid/oraagent_grid.log.

2019-05-26 14:17:41.172:

[/u01/app/11.2.0/grid/bin/orarootagent.bin(57742)]CRS-5822:Agent '/u01/app/11.2.0/grid/bin/orarootagent_root' disconnected from server. Details at (:CRSAGF00117:) {0:5:27} in /u01/app/11.2.0/grid/log/ora02/agent/crsd/orarootagent_root/orarootagent_root.log.

2019-05-26 14:17:43.792:

[/u01/app/11.2.0/grid/bin/oraagent.bin(57265)]CRS-5011:Check of resource "+ASM" failed: details at "(:CLSN00006:)" in "/u01/app/11.2.0/grid/log/ora02/agent/ohasd/oraagent_grid/oraagent_grid.log"

2019-05-26 14:17:44.108:

[ohasd(57121)]CRS-2765:Resource 'ora.crsd' has failed on server 'ora02'.

2019-05-26 14:17:47.436:

[ohasd(57121)]CRS-2765:Resource 'ora.asm' has failed on server 'ora02'.

2019-05-26 14:17:47.657:

[/u01/app/11.2.0/grid/bin/oraagent.bin(57265)]CRS-5011:Check of resource "+ASM" failed: details at "(:CLSN00006:)" in "/u01/app/11.2.0/grid/log/ora02/agent/ohasd/oraagent_grid/oraagent_grid.log"

2019-05-26 14:17:55.018:

[crsd(58426)]CRS-0805:Cluster Ready Service aborted due to failure to communicate with Cluster Synchronization Service with error [3]. Details at (:CRSD00109:) in /u01/app/11.2.0/grid/log/ora02/crsd/crsd.log.

2019-05-26 14:17:56.161:

[ohasd(57121)]CRS-2765:Resource 'ora.cssdmonitor' has failed on server 'ora02'.

2019-05-26 14:17:58.439:

[ohasd(57121)]CRS-2765:Resource 'ora.crsd' has failed on server 'ora02'.

2019-05-26 14:17:58.837:

[ohasd(57121)]CRS-2765:Resource 'ora.evmd' has failed on server 'ora02'.

2019-05-26 14:17:59.573:

[ohasd(57121)]CRS-2765:Resource 'ora.ctssd' has failed on server 'ora02'.

2019-05-26 14:17:59.875:

[ohasd(57121)]CRS-2765:Resource 'ora.cssd' has failed on server 'ora02'.

2019-05-26 14:18:00.079:

[ohasd(57121)]CRS-2765:Resource 'ora.cluster_interconnect.haip' has failed on server 'ora02'.

2019-05-26 14:18:01.076:

[/u01/app/11.2.0/grid/bin/oraagent.bin(57265)]CRS-5011:Check of resource "+ASM" failed: details at "(:CLSN00006:)" in "/u01/app/11.2.0/grid/log/ora02/agent/ohasd/oraagent_grid/oraagent_grid.log"

2019-05-26 14:18:02.615:

[cssd(58475)]CRS-1713:CSSD daemon is started in clustered mode

2019-05-26 14:18:04.251:

[cssd(58475)]CRS-1656:The CSS daemon is terminating due to a fatal error; Details at (:CSSSC00012:) in /u01/app/11.2.0/grid/log/ora02/cssd/ocssd.log

2019-05-26 14:18:04.292:

[cssd(58475)]CRS-1603:CSSD on node ora02 shutdown by user.

2019-05-26 14:18:10.405:

[ohasd(57121)]CRS-2765:Resource 'ora.cssdmonitor' has failed on server 'ora02'.

2019-05-26 14:18:11.016:

[/u01/app/11.2.0/grid/bin/oraagent.bin(57265)]CRS-5011:Check of resource "+ASM" failed: details at "(:CLSN00006:)" in "/u01/app/11.2.0/grid/log/ora02/agent/ohasd/oraagent_grid/oraagent_grid.log"

2019-05-26 14:18:11.053:

[ohasd(57121)]CRS-2878:Failed to restart resource 'ora.cssd'

2019-05-26 14:18:11.126:

[ohasd(57121)]CRS-2769:Unable to failover resource 'ora.cssd'.

2019-05-26 14:18:12.493:

[cssd(58578)]CRS-1713:CSSD daemon is started in clustered mode

2019-05-26 14:18:13.179:

[cssd(58578)]CRS-1656:The CSS daemon is terminating due to a fatal error; Details at (:CSSSC00012:) in /u01/app/11.2.0/grid/log/ora02/cssd/ocssd.log

2019-05-26 14:18:13.219:

[cssd(58578)]CRS-1603:CSSD on node ora02 shutdown by user.

2019-05-26 14:18:16.396:

[/u01/app/11.2.0/grid/bin/oraagent.bin(57265)]CRS-5011:Check of resource "+ASM" failed: details at "(:CLSN00006:)" in "/u01/app/11.2.0/grid/log/ora02/agent/ohasd/oraagent_grid/oraagent_grid.log"

2019-05-26 14:18:19.274:

[ohasd(57121)]CRS-2765:Resource 'ora.cssdmonitor' has failed on server 'ora02'.

2019-05-26 14:18:21.675:

[/u01/app/11.2.0/grid/bin/oraagent.bin(57265)]CRS-5011:Check of resource "+ASM" failed: details at "(:CLSN00006:)" in "/u01/app/11.2.0/grid/log/ora02/agent/ohasd/oraagent_grid/oraagent_grid.log"

2019-05-26 14:18:26.999:

[/u01/app/11.2.0/grid/bin/oraagent.bin(57265)]CRS-5011:Check of resource "+ASM" failed: details at "(:CLSN00006:)" in "/u01/app/11.2.0/grid/log/ora02/agent/ohasd/oraagent_grid/oraagent_grid.log"

2019-05-26 14:18:32.197:

[/u01/app/11.2.0/grid/bin/oraagent.bin(57265)]CRS-5011:Check of resource "+ASM" failed: details at "(:CLSN00006:)" in "/u01/app/11.2.0/grid/log/ora02/agent/ohasd/oraagent_grid/oraagent_grid.log"

2019-05-26 14:18:32.208:

[ohasd(57121)]CRS-2878:Failed to restart resource 'ora.asm'

2019-05-26 14:18:32.209:

[ohasd(57121)]CRS-2769:Unable to failover resource 'ora.asm'.

2019-05-26 14:18:37.385:

[/u01/app/11.2.0/grid/bin/oraagent.bin(57265)]CRS-5011:Check of resource "+ASM" failed: details at "(:CLSN00006:)" in "/u01/app/11.2.0/grid/log/ora02/agent/ohasd/oraagent_grid/oraagent_grid.log"

2019-05-26 14:18:42.574:

[/u01/app/11.2.0/grid/bin/oraagent.bin(57265)]CRS-5011:Check of resource "+ASM" failed: details at "(:CLSN00006:)" in "/u01/app/11.2.0/grid/log/ora02/agent/ohasd/oraagent_grid/oraagent_grid.log"

2019-05-26 14:18:47.758:

[/u01/app/11.2.0/grid/bin/oraagent.bin(57265)]CRS-5011:Check of resource "+ASM" failed: details at "(:CLSN00006:)" in "/u01/app/11.2.0/grid/log/ora02/agent/ohasd/oraagent_grid/oraagent_grid.log"
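A Grid alert log of this length is easier to follow as a timeline of message codes. The sketch below assumes the two-line entry layout seen above (a timestamp line ending in `:` followed by a `[process(pid)]CRS-nnnn:text` line). `parse_alert` is a hypothetical helper, and the sample holds two entries copied from the ORA02 log.

```python
import re
from datetime import datetime

TS = re.compile(r"^(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3}):\s*$")
MSG = re.compile(r"^\[(?P<proc>[^\]]+)\](?P<code>CRS-\d+):(?P<text>.*)$")


def parse_alert(text):
    """Return a list of (timestamp, process, code, message) tuples."""
    events, ts = [], None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        m = TS.match(line)
        if m:
            # Remember the timestamp; it applies to the next message line.
            ts = datetime.strptime(m.group(1), "%Y-%m-%d %H:%M:%S.%f")
            continue
        m = MSG.match(line)
        if m and ts is not None:
            events.append((ts, m["proc"], m["code"], m["text"].strip()))
    return events


SAMPLE = """\
2019-05-26 14:15:57.472:

[cssd(57356)]CRS-1615:No I/O has completed after 50% of the maximum interval. Voting file /dev/oracleasm/disks/VOTE01 will be considered not functional in 99010 milliseconds

2019-05-26 14:17:37.160:

[cssd(57356)]CRS-1606:The number of voting files available, 1, is less than the minimum number of voting files required, 2, resulting in CSSD termination to ensure data integrity; details at (:CSSNM00018:) in /u01/app/11.2.0/grid/log/ora02/cssd/ocssd.log
"""

events = parse_alert(SAMPLE)
for ts, proc, code, _ in events:
    print(ts.time(), proc, code)

elapsed = (events[-1][0] - events[0][0]).total_seconds()
print("seconds from first CRS-1615 to CRS-1606:", elapsed)
```

Run against the full ora02 log, the same function gives the order of events at a glance: the I/O warnings on VOTE01, the CRS-1606 termination, and then the repeated failed restarts of `ora.cssd` and `ora.asm`.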