CDH6

目前CDH的最新版本是6.0.1,支持Hadoop 3.0.0,本文将介绍如何使CDH6的相关组件(Hadoop/Hive/Spark/Impala等)能够读写OSS。CDH5对OSS的支持在这篇文章介绍。

CDH6支持读写OSS

搭建CDH集群

首先根据官方文档搭建好CDH6集群

增加OSS配置

通过CM来增加配置(对于没有CM管理的集群,可以通过修改core-site.xml来达到)

这里以CM为例,需要增加如下配置:

| 配置项 | 值 | 说明 |

|---|---|---|

| fs.oss.endpoint | 如 oss-cn-zhangjiakou-internal.aliyuncs.com | 要连接的endpoint |

| fs.oss.accessKeyId | access key id | |

| fs.oss.accessKeySecret | access key secret | |

| fs.oss.impl | org.apache.hadoop.fs.aliyun.oss.AliyunOSSFileSystem | hadoop oss文件系统实现类,目前固定为这个 |

| fs.oss.buffer.dir | /tmp/oss | 临时文件目录 |

| fs.oss.connection.secure.enabled | false | 是否enable https, 根据需要来设置,enable https会影响性能 |

| fs.oss.connection.maximum | 2048 | 与oss的连接数,根据需要设置 |

相关参数的解释可以在这里找到

重启集群,验证读写OSS

增加配置后,根据CM提示重启集群,重启后,可以测试

# 测试写

hadoop fs -mkdir oss://{your-bucket-name}/hadoop-test

# 测试读

hadoop fs -ls oss://{your-bucket-name}/Apache Impala查询OSS的数据

关于Apache Impala的介绍可以查看官方文档,下面主要介绍使用Apache Impala查询OSS,并且运行TPC-DS的那些查询语句。

虽然CDH6天然支持OSS,但是它里面的Impala组件却默认没有将OSS的支持放到它的CLASSPATH里面去,因此我们需要在所有的Impala节点执行如下命令

下面的步骤需要在所有的Impala节点执行

进入到$CDH_HOME/lib/impala目录, 执行如下命令

[root@cdh-master impala]# cd lib/

[root@cdh-master lib]# ln -s ../../../jars/hadoop-aliyun-3.0.0-cdh6.0.1.jar hadoop-aliyun.jar

[root@cdh-master lib]# ln -s ../../../jars/aliyun-sdk-oss-2.8.3.jar aliyun-sdk-oss-2.8.3.jar

[root@cdh-master lib]# ln -s ../../../jars/jdom-1.1.jar jdom-1.1.jar进入到$CDH_HOME/bin目录,修改impalad/statestored/catalogd这三个文件,在文件最后一行exec命令前,增加如下一行

export CLASSPATH=$CLASSPATH:${IMPALA_HOME}/lib/hadoop-aliyun.jar:${IMPALA_HOME}/lib/aliyun-sdk-oss-2.8.3.jar:${IMPALA_HOME}/lib/jdom-1.1.jar重启所有节点的impala相关进程,这样impala就可以查询OSS的数据。

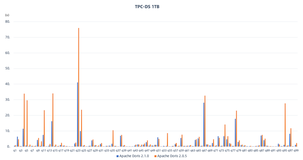

运行TPC-DS查询

TPC-DS查询在这里,https://github.com/hortonworks/hive-testbench/tree/hive14

基于Hive,因为Hive QL与Impala SQl兼容性比较高,所以我们用这个作为实验(有几个query因为兼容性问题不能运行)

执行如下命令,生成sample数据到OSS

[root@cdh-master ~]# git clone https://github.com/hortonworks/hive-testbench.git

[root@cdh-master ~]# cd hive-testbench

[root@cdh-master hive-testbench]# git checkout hive14

[root@cdh-master hive-testbench]# ./tpcds-build.sh

[root@cdh-master hive-testbench]# FORMAT=textfile ./tpcds-setup.sh 50 oss://{your-bucket-name}/这个benchmark的建表语句与Apache Impala的建表语句兼容,复制ddl-tpcds/text/alltables.sql中的建表语句,修改${LOCATION}即可,例如:

[root@cdh-master hive-testbench]# impala-shell -i cdh-slave01 -d default

Starting Impala Shell without Kerberos authentication

Connected to cdh-slave01:21000

Server version: impalad version 3.0.0-cdh6.0.1 RELEASE (build 9a74a5053de5f7b8dd983802e6d75e58d31472db)

***********************************************************************************

Welcome to the Impala shell.

(Impala Shell v3.0.0-cdh6.0.1 (9a74a50) built on Wed Sep 19 11:27:37 PDT 2018)

Want to know what version of Impala you're connected to? Run the VERSION command to

find out!

***********************************************************************************

Query: use `default`

[cdh-slave01:21000] default> create external table call_center(

> cc_call_center_sk bigint

> , cc_call_center_id string

> , cc_rec_start_date string

> , cc_rec_end_date string

> , cc_closed_date_sk bigint

> , cc_open_date_sk bigint

> , cc_name string

> , cc_class string

> , cc_employees int

> , cc_sq_ft int

> , cc_hours string

> , cc_manager string

> , cc_mkt_id int

> , cc_mkt_class string

> , cc_mkt_desc string

> , cc_market_manager string

> , cc_division int

> , cc_division_name string

> , cc_company int

> , cc_company_name string

> , cc_street_number string

> , cc_street_name string

> , cc_street_type string

> , cc_suite_number string

> , cc_city string

> , cc_county string

> , cc_state string

> , cc_zip string

> , cc_country string

> , cc_gmt_offset double

> , cc_tax_percentage double

> )

> row format delimited fields terminated by '|'

> location 'oss://{your-bucket-name}/50/call_center';

Query: create external table call_center(

cc_call_center_sk bigint

, cc_call_center_id string

, cc_rec_start_date string

, cc_rec_end_date string

, cc_closed_date_sk bigint

, cc_open_date_sk bigint

, cc_name string

, cc_class string

, cc_employees int

, cc_sq_ft int

, cc_hours string

, cc_manager string

, cc_mkt_id int

, cc_mkt_class string

, cc_mkt_desc string

, cc_market_manager string

, cc_division int

, cc_division_name string

, cc_company int

, cc_company_name string

, cc_street_number string

, cc_street_name string

, cc_street_type string

, cc_suite_number string

, cc_city string

, cc_county string

, cc_state string

, cc_zip string

, cc_country string

, cc_gmt_offset double

, cc_tax_percentage double

)

row format delimited fields terminated by '|'

location 'oss://{your-bucket-name}/50/call_center'

+-------------------------+

| summary |

+-------------------------+

| Table has been created. |

+-------------------------+

Fetched 1 row(s) in 4.10s注意将建表语句中的{your-bucket-name}替换为你自己的bucket

所有的表都建好后,我们可以查看一下

[cdh-slave01:21000] default> show tables;

Query: show tables

+------------------------+

| name |

+------------------------+

| call_center |

| catalog_page |

| catalog_returns |

| catalog_sales |

| customer |

| customer_address |

| customer_demographics |

| date_dim |

| household_demographics |

| income_band |

| inventory |

| item |

| promotion |

| reason |

| ship_mode |

| store |

| store_returns |

| store_sales |

| time_dim |

| warehouse |

| web_page |

| web_returns |

| web_sales |

| web_site |

+------------------------+

Fetched 24 row(s) in 0.03s下面我们就可以在Impala上执行TPC-DS的查询,查询的SQL文件在sample-queries-tpcds目录下

[root@cdh-master hive-testbench]# ls sample-queries-tpcds

query12.sql query20.sql query27.sql query39.sql query46.sql query54.sql query64.sql query71.sql query7.sql query87.sql query93.sql README.md

query13.sql query21.sql query28.sql query3.sql query48.sql query55.sql query65.sql query72.sql query80.sql query88.sql query94.sql testbench.settings

query15.sql query22.sql query29.sql query40.sql query49.sql query56.sql query66.sql query73.sql query82.sql query89.sql query95.sql testbench-withATS.settings

query17.sql query24.sql query31.sql query42.sql query50.sql query58.sql query67.sql query75.sql query83.sql query90.sql query96.sql

query18.sql query25.sql query32.sql query43.sql query51.sql query60.sql query68.sql query76.sql query84.sql query91.sql query97.sql

query19.sql query26.sql query34.sql query45.sql query52.sql query63.sql query70.sql query79.sql query85.sql query92.sql query98.sql我们执行query13.sql

[root@cdh-master hive-testbench]# impala-shell -i cdh-slave01 -d default -f sample-queries-tpcds/query13.sql

Starting Impala Shell without Kerberos authentication

Connected to cdh-slave01:21000

Server version: impalad version 3.0.0-cdh6.0.1 RELEASE (build 9a74a5053de5f7b8dd983802e6d75e58d31472db)

Query: use `default`

Query: select avg(ss_quantity)

,avg(ss_ext_sales_price)

,avg(ss_ext_wholesale_cost)

,sum(ss_ext_wholesale_cost)

from store_sales

,store

,customer_demographics

,household_demographics

,customer_address

,date_dim

where store.s_store_sk = store_sales.ss_store_sk

and store_sales.ss_sold_date_sk = date_dim.d_date_sk and date_dim.d_year = 2001

and((store_sales.ss_hdemo_sk=household_demographics.hd_demo_sk

and customer_demographics.cd_demo_sk = store_sales.ss_cdemo_sk

and customer_demographics.cd_marital_status = 'M'

and customer_demographics.cd_education_status = '4 yr Degree'

and store_sales.ss_sales_price between 100.00 and 150.00

and household_demographics.hd_dep_count = 3

)or

(store_sales.ss_hdemo_sk=household_demographics.hd_demo_sk

and customer_demographics.cd_demo_sk = store_sales.ss_cdemo_sk

and customer_demographics.cd_marital_status = 'D'

and customer_demographics.cd_education_status = 'Primary'

and store_sales.ss_sales_price between 50.00 and 100.00

and household_demographics.hd_dep_count = 1

) or

(store_sales.ss_hdemo_sk=household_demographics.hd_demo_sk

and customer_demographics.cd_demo_sk = ss_cdemo_sk

and customer_demographics.cd_marital_status = 'U'

and customer_demographics.cd_education_status = 'Advanced Degree'

and store_sales.ss_sales_price between 150.00 and 200.00

and household_demographics.hd_dep_count = 1

))

and((store_sales.ss_addr_sk = customer_address.ca_address_sk

and customer_address.ca_country = 'United States'

and customer_address.ca_state in ('KY', 'GA', 'NM')

and store_sales.ss_net_profit between 100 and 200

) or

(store_sales.ss_addr_sk = customer_address.ca_address_sk

and customer_address.ca_country = 'United States'

and customer_address.ca_state in ('MT', 'OR', 'IN')

and store_sales.ss_net_profit between 150 and 300

) or

(store_sales.ss_addr_sk = customer_address.ca_address_sk

and customer_address.ca_country = 'United States'

and customer_address.ca_state in ('WI', 'MO', 'WV')

and store_sales.ss_net_profit between 50 and 250

))

Query submitted at: 2018-10-30 11:44:47 (Coordinator: http://cdh-slave01:25000)

Query progress can be monitored at: http://cdh-slave01:25000/query_plan?query_id=ff4b3157eddfc3c4:8a31c6500000000

+-------------------+-------------------------+----------------------------+----------------------------+

| avg(ss_quantity) | avg(ss_ext_sales_price) | avg(ss_ext_wholesale_cost) | sum(ss_ext_wholesale_cost) |

+-------------------+-------------------------+----------------------------+----------------------------+

| 30.87106918238994 | 2352.642327044025 | 2162.600911949685 | 687707.09 |

+-------------------+-------------------------+----------------------------+----------------------------+

Fetched 1 row(s) in 353.16s这个查询涉及到6张表,store/store_sales/customer_demographics/household_demographics/customer_address/date_dim,分别看一下它们的状态

[cdh-slave01:21000] default> show table STATS store;

Query: show table STATS store

+-------+--------+---------+--------------+-------------------+--------+-------------------+-------------------------------------------+

| #Rows | #Files | Size | Bytes Cached | Cache Replication | Format | Incremental stats | Location |

+-------+--------+---------+--------------+-------------------+--------+-------------------+-------------------------------------------+

| -1 | 1 | 37.56KB | NOT CACHED | NOT CACHED | TEXT | false | oss://{your-bucket-name}/50/store |

+-------+--------+---------+--------------+-------------------+--------+-------------------+-------------------------------------------+

Fetched 1 row(s) in 0.01s

[cdh-slave01:21000] default> show table STATS store_sales;

Query: show table STATS store_sales

+-------+--------+---------+--------------+-------------------+--------+-------------------+-------------------------------------------------+

| #Rows | #Files | Size | Bytes Cached | Cache Replication | Format | Incremental stats | Location |

+-------+--------+---------+--------------+-------------------+--------+-------------------+-------------------------------------------------+

| -1 | 50 | 18.75GB | NOT CACHED | NOT CACHED | TEXT | false | oss://{your-bucket-name}/50/store_sales |

+-------+--------+---------+--------------+-------------------+--------+-------------------+-------------------------------------------------+

Fetched 1 row(s) in 0.01s

[cdh-slave01:21000] default> show table STATS customer_demographics;

Query: show table STATS customer_demographics

+-------+--------+---------+--------------+-------------------+--------+-------------------+-----------------------------------------------------------+

| #Rows | #Files | Size | Bytes Cached | Cache Replication | Format | Incremental stats | Location |

+-------+--------+---------+--------------+-------------------+--------+-------------------+-----------------------------------------------------------+

| -1 | 50 | 76.92MB | NOT CACHED | NOT CACHED | TEXT | false | oss://{your-bucket-name}/50/customer_demographics |

+-------+--------+---------+--------------+-------------------+--------+-------------------+-----------------------------------------------------------+

Fetched 1 row(s) in 0.01s

[cdh-slave01:21000] default> show table STATS household_demographics;

Query: show table STATS household_demographics

+-------+--------+----------+--------------+-------------------+--------+-------------------+------------------------------------------------------------+

| #Rows | #Files | Size | Bytes Cached | Cache Replication | Format | Incremental stats | Location |

+-------+--------+----------+--------------+-------------------+--------+-------------------+------------------------------------------------------------+

| -1 | 1 | 148.10KB | NOT CACHED | NOT CACHED | TEXT | false | oss://{your-bucket-name}/50/household_demographics |

+-------+--------+----------+--------------+-------------------+--------+-------------------+------------------------------------------------------------+

Fetched 1 row(s) in 0.01s

[cdh-slave01:21000] default> show table STATS customer_address;

Query: show table STATS customer_address

+-------+--------+---------+--------------+-------------------+--------+-------------------+------------------------------------------------------+

| #Rows | #Files | Size | Bytes Cached | Cache Replication | Format | Incremental stats | Location |

+-------+--------+---------+--------------+-------------------+--------+-------------------+------------------------------------------------------+

| -1 | 1 | 40.54MB | NOT CACHED | NOT CACHED | TEXT | false | oss://{your-bucket-name}/50/customer_address |

+-------+--------+---------+--------------+-------------------+--------+-------------------+------------------------------------------------------+

Fetched 1 row(s) in 0.01s

[cdh-slave01:21000] default> show table STATS date_dim;

Query: show table STATS date_dim

+-------+--------+--------+--------------+-------------------+--------+-------------------+----------------------------------------------+

| #Rows | #Files | Size | Bytes Cached | Cache Replication | Format | Incremental stats | Location |

+-------+--------+--------+--------------+-------------------+--------+-------------------+----------------------------------------------+

| -1 | 1 | 9.84MB | NOT CACHED | NOT CACHED | TEXT | false | oss://{your-bucket-name}/50/date_dim |

+-------+--------+--------+--------------+-------------------+--------+-------------------+----------------------------------------------+

Fetched 1 row(s) in 0.01s参考文章

https://yq.aliyun.com/articles/658473?spm=a2c4e.11155435.0.0.2f8b33125xbe9H

https://github.com/apache/hadoop/blob/trunk/hadoop-tools/hadoop-aliyun/src/site/markdown/tools/hadoop-aliyun/index.md

https://yq.aliyun.com/articles/658471?spm=a2c4e.11155435.0.0.2f8b33125xbe9H

https://yq.aliyun.com/articles/292792?spm=a2c4e.11155435.0.0.7ccba82fbDwfhK