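I. How It Works

1. HAProxy

HAProxy is a free, fast, and reliable solution that provides high availability, load balancing, and proxying for TCP- and HTTP-based applications, with support for virtual hosts. It is particularly well suited to heavily loaded web sites that also need session persistence or layer-7 processing. On current hardware HAProxy can handle tens of thousands of concurrent connections. Its operating model makes it simple and safe to integrate into an existing architecture, and it keeps the web servers from being exposed directly to the network.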

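2. Keepalived

Keepalived is a high-availability solution for LVS services built on the VRRP protocol, and it can be used to avoid a single point of failure. An LVS service typically has two servers running Keepalived, one as the primary (MASTER) and one as the standby (BACKUP), which together present a single virtual IP to the outside. The MASTER periodically sends VRRP advertisements to the BACKUP. When the BACKUP stops receiving them, that is, when the MASTER is down, it takes over the virtual IP and keeps the service running, which is what provides high availability. Keepalived is a widely used implementation of VRRP on Linux.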

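3. The VRRP protocol

In real networks, two hosts that need to communicate usually have no direct physical link between them. How, then, does a host choose the next hop toward its destination? There are two common approaches:

Run a dynamic routing protocol (RIP, OSPF, and so on) on the host

Configure static routes on the host

Running a dynamic routing protocol on every host is clearly impractical because of the management and maintenance cost and because not every host supports it. Static routes are therefore the usual choice, but the router (that is, the default gateway) then often becomes a single point of failure. VRRP was designed to remove that single point of failure: it uses an election protocol to dynamically assign the routing role of a virtual router to one of the VRRP routers on the LAN.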
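The election idea can be sketched in a few lines of shell. This is a simplified illustration, not Keepalived's implementation: the elect_master function and its name:priority argument format are hypothetical, and the sketch ignores advertisement timers, preemption settings, and the tie-break rule of the real protocol.

```shell
#!/bin/bash
# Simplified VRRP-style election: among the routers that are still
# advertising, the one with the highest priority becomes MASTER.
# elect_master and the "name:priority" format are illustrative only.
elect_master() {
    local best_name="" best_prio=-1 entry name prio
    for entry in "$@"; do
        name=${entry%%:*}
        prio=${entry##*:}
        if [ "$prio" -gt "$best_prio" ]; then
            best_name=$name
            best_prio=$prio
        fi
    done
    [ -n "$best_name" ] && echo "$best_name"
}

# Both nodes alive: the priority-100 node wins.
elect_master inode2:100 inode3:98
# inode2 has stopped advertising: inode3 takes over.
elect_master inode3:98
```

The priorities 100 and 98 are the ones used for the two load balancers later in this article.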

II. Environment

1. Operating system

CentOS Linux release 7.2.1511 (Core)

2. Software

haproxy-1.5.14-3.el7.x86_64

keepalived-1.2.13-7.el7.x86_64

httpd-2.4.6-45.el7.centos.x86_64

php-5.4.16-42.el7.x86_64

mariadb-server-5.5.52-1.el7.x86_64

mariadb.x86_64

wordpress-4.3.1-zh_CN.zip

nfs-utils-1.3.0-0.33.el7.x86_64

rpcbind-0.2.0-38.el7.x86_64

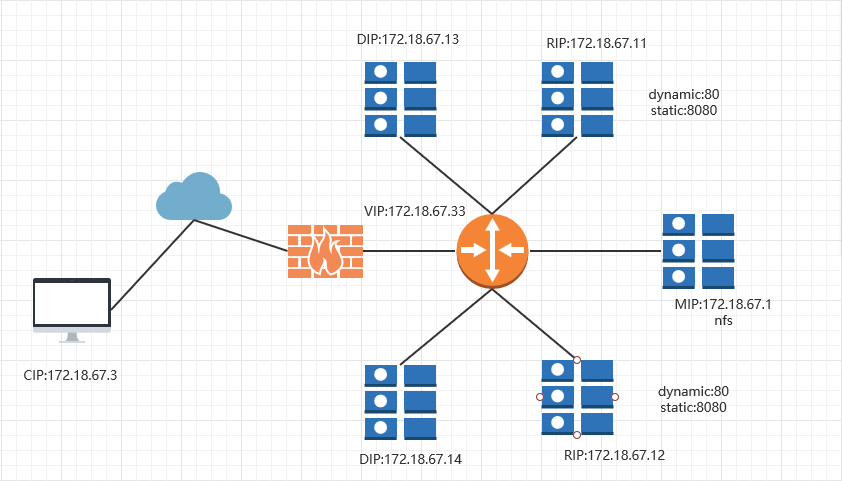

3. IP addresses

Load balancers

DIP1: 172.18.67.13

DIP2: 172.18.67.14

Back-end real servers

RIP1: 172.18.67.11

RIP2: 172.18.67.12

Database server

MIP: 172.18.67.1

Client

IP: 172.18.67.3

Virtual IP (held by the active load balancer)

VIP: 172.18.67.33
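A mistyped address in a plan like this (a stray comma, a missing octet) is easy to overlook and only shows up later as a service that will not start. The following is a minimal sketch of a sanity check; is_ipv4 is a hypothetical helper, not part of any of the tools used here.

```shell
#!/bin/bash
# Return 0 if $1 is a dotted-quad IPv4 address with each octet in 0-255.
is_ipv4() {
    local ip=$1 octet
    # Four groups of 1-3 digits separated by dots, nothing else.
    echo "$ip" | grep -Eq '^[0-9]{1,3}(\.[0-9]{1,3}){3}$' || return 1
    local IFS=.
    for octet in $ip; do
        [ "$octet" -le 255 ] || return 1
    done
    return 0
}

# Address plan from this section; prints a line only for invalid entries.
for addr in 172.18.67.13 172.18.67.14 172.18.67.11 172.18.67.12 \
            172.18.67.1 172.18.67.3 172.18.67.33; do
    is_ipv4 "$addr" || echo "invalid address: $addr"
done
```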

III. Topology and Software Installation

1. Topology diagram

2. Installing the software

Install haproxy and keepalived on the servers at 172.18.67.13 and 172.18.67.14:

    [root@inode2 ~]# yum install haproxy keepalived -y
    [root@inode3 ~]# yum install haproxy keepalived -y

Install httpd and php on the servers at 172.18.67.11 and 172.18.67.12:

    [root@inode4 ~]# yum install httpd php -y
    [root@inode5 ~]# yum install httpd php -y

Install mariadb, mariadb-server, and php-mysql on the server at 172.18.67.1:

    [root@inode6 ~]# yum install mariadb mariadb-server php-mysql -y

Because the database directory is shared as files over the network, NFS is also required. Install nfs-utils and rpcbind on both real servers and on the database server:

    [root@inode4 ~]# yum install nfs-utils rpcbind -y
    [root@inode5 ~]# yum install nfs-utils rpcbind -y
    [root@inode6 ~]# yum install nfs-utils rpcbind -y

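IV. Configuration

1. Real server configuration

Port 80 on 172.18.67.11 and 172.18.67.12 serves the dynamic site, while port 8080 on the same two hosts stands in for two further servers and serves the static site. Extract WordPress under /var/www/html/ on each host and change the owner and group of the resulting directory (note that unzip takes the target directory with -d, not -C):

    [root@inode4 ~]# unzip wordpress-4.3.1-zh_CN.zip -d /var/www/html/
    [root@inode4 ~]# chown -R apache:apache /var/www/html/wordpress
    [root@inode5 ~]# unzip wordpress-4.3.1-zh_CN.zip -d /var/www/html/
    [root@inode5 ~]# chown -R apache:apache /var/www/html/wordpress

Then edit the httpd configuration file on each back-end server to change the document root from the default /var/www/html to /var/www/html/wordpress.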
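The document root change can also be scripted instead of edited by hand. The following is a sketch under assumptions: set_docroot is a hypothetical helper, and it assumes the stock CentOS layout in which /etc/httpd/conf/httpd.conf contains a single DocumentRoot line.

```shell
#!/bin/bash
# Point DocumentRoot at a new directory in the given httpd config file.
# set_docroot is a hypothetical helper; it edits the file in place and
# keeps a .bak copy of the original next to it.
set_docroot() {
    local conf=$1 new_root=$2
    sed -i.bak "s#^DocumentRoot .*#DocumentRoot \"$new_root\"#" "$conf"
}

# On each real server (run as root), then check the syntax before restarting:
#   set_docroot /etc/httpd/conf/httpd.conf /var/www/html/wordpress
#   httpd -t && systemctl restart httpd
```

Running httpd -t first means a typo in the configuration is reported before the running service is touched.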

2. NFS configuration

On the database server, edit the exports file:

    [root@inode6 ~]# vim /etc/exports
    /data/ 172.18.67.11(rw,async)
    /data/ 172.18.67.12(rw,async)
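With more clients, the export entries can be generated rather than typed. This is a small sketch; export_lines is a hypothetical helper, and the directory, options, and client addresses are simply the ones used in this article.

```shell
#!/bin/bash
# Print one /etc/exports line per client for a shared directory.
# export_lines is a hypothetical helper, not part of nfs-utils.
export_lines() {
    local dir=$1 opts=$2 client
    shift 2
    for client in "$@"; do
        printf '%s %s(%s)\n' "$dir" "$client" "$opts"
    done
}

export_lines /data/ rw,async 172.18.67.11 172.18.67.12

# On the NFS server, after editing /etc/exports (run as root):
#   exportfs -ra                # re-read /etc/exports
#   showmount -e 172.18.67.1    # list what is actually exported
```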

After saving the file, start the NFS services on the real servers and on the database server:

    [root@inode4 ~]# systemctl start rpcbind
    [root@inode4 ~]# systemctl start nfs
    [root@inode5 ~]# systemctl start rpcbind
    [root@inode5 ~]# systemctl start nfs
    [root@inode6 ~]# systemctl start rpcbind
    [root@inode6 ~]# systemctl start nfs

Create the data directory and set its ownership:

    [root@inode6 ~]# mkdir /data
    [root@inode6 ~]# chown -R mysql:mysql /data

Point the database's data directory at it in the configuration file:

    [root@inode6 ~]# vim /etc/my.cnf
    datadir=/data/

Start the database (on CentOS 7 the systemd unit provided by the mariadb-server package is named mariadb):

    [root@inode6 ~]# systemctl start mariadb

Mount the database directory on the dynamic web servers:

    [root@inode4 ~]# mount -t nfs 172.18.67.1:/data/ /mnt
    [root@inode5 ~]# mount -t nfs 172.18.67.1:/data/ /mnt
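Before moving on it is worth confirming that the mount actually succeeded on each real server. A minimal sketch follows; is_nfs_mounted is a hypothetical helper that reads the kernel's mount table, and its optional second argument exists only so the table can be swapped out.

```shell
#!/bin/bash
# Return 0 if an NFS filesystem is mounted on the given mount point.
# is_nfs_mounted is a hypothetical helper; the mounts table defaults
# to /proc/mounts.
is_nfs_mounted() {
    local mount_point=$1 table=${2:-/proc/mounts}
    # /proc/mounts fields: device, mount point, fs type, options, ...
    awk -v mp="$mount_point" \
        '$2 == mp && $3 ~ /^nfs/ { found = 1 } END { exit !found }' "$table"
}

# On inode4 and inode5 after mounting:
#   is_nfs_mounted /mnt && echo "/mnt is backed by NFS"
```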

3. Keepalived configuration

MASTER

    ! Configuration File for keepalived

    global_defs {
        notification_email {
            root@localhost
        }
        notification_email_from keepalived@localhost
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id inode2
        vrrp_mcast_group4 224.0.67.67
    }

    vrrp_instance http {
        state MASTER
        interface eno16777736
        virtual_router_id 67
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass lKZvQVv9
        }
        virtual_ipaddress {
            172.18.67.33/16 dev eno16777736
        }
        notify_master "/etc/keepalived/notify.sh master"
        notify_backup "/etc/keepalived/notify.sh backup"
        notify_fault "/etc/keepalived/notify.sh fault"
    }

BACKUP

    ! Configuration File for keepalived

    global_defs {
        notification_email {
            root@localhost
        }
        notification_email_from keepalived@localhost
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id inode3
        vrrp_mcast_group4 224.0.67.67
    }

    vrrp_instance http {
        state BACKUP
        interface eno16777736
        virtual_router_id 67
        priority 98
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass lKZvQVv9
        }
        virtual_ipaddress {
            172.18.67.33/16 dev eno16777736
        }
        notify_master "/etc/keepalived/notify.sh master"
        notify_backup "/etc/keepalived/notify.sh backup"
        notify_fault "/etc/keepalived/notify.sh fault"
    }

Notification script (/etc/keepalived/notify.sh)

    #!/bin/bash
    # Mail a notice to $contact whenever this node's VRRP state changes.
    contact='root@localhost'

    notify() {
        mailsubject="$(hostname) to be $1, vip floating"
        mailbody="$(date +'%F %T'): vrrp transition, $(hostname) changed to be $1"
        echo "$mailbody" | mail -s "$mailsubject" "$contact"
    }

    case $1 in
    master)
        notify master
        ;;
    backup)
        notify backup
        ;;
    fault)
        notify fault
        ;;
    *)
        echo "Usage: $(basename "$0") {master|backup|fault}"
        exit 1
        ;;
    esac
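As configured, the VIP moves only when Keepalived itself stops or the node goes down. If HAProxy dies while Keepalived keeps running, the VIP stays on a node that can no longer serve traffic. One common way to cover that case is Keepalived's vrrp_script and track_script mechanism. The following is a sketch of a check script that such a block could call; the haproxy_alive function and the script location /etc/keepalived/check_haproxy.sh are assumptions and not part of the original setup, while the default pid file path is the one set by pidfile in haproxy.cfg.

```shell
#!/bin/bash
# Health check that a keepalived vrrp_script could call: succeed while the
# process recorded in HAProxy's pid file is alive, fail otherwise.
# haproxy_alive and the script location are assumptions for illustration.
haproxy_alive() {
    local pidfile=${1:-/var/run/haproxy.pid} pid
    [ -r "$pidfile" ] || return 1
    # The pid file may list several pids; checking the first is enough here.
    pid=$(head -n 1 "$pidfile")
    [ -n "$pid" ] || return 1
    # kill -0 sends no signal; it only tests whether the process exists.
    kill -0 "$pid" 2>/dev/null
}

# Saved as a standalone script, the file would end with a call to
# haproxy_alive so that its exit status becomes the script's exit status.
#
# keepalived.conf fragment that would use it (shown as a comment):
#   vrrp_script chk_haproxy {
#       script "/etc/keepalived/check_haproxy.sh"
#       interval 2
#       weight -5      # 100 - 5 = 95 < 98, so the BACKUP node takes over
#   }
#   vrrp_instance http {
#       ...
#       track_script {
#           chk_haproxy
#       }
#   }
```

The weight of -5 is chosen only so that a failing check drops the MASTER's priority of 100 below the BACKUP's 98; any value that achieves this would do.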

4. HAProxy configuration

The configuration is identical on both nodes:

    [root@inode2 haproxy]# vim haproxy.cfg
    global
        log 127.0.0.1 local2
        chroot /var/lib/haproxy
        pidfile /var/run/haproxy.pid
        maxconn 4000
        user haproxy
        group haproxy
        daemon
        stats socket /var/lib/haproxy/stats

    defaults
        mode http
        log global
        option httplog
        option dontlognull
        option http-server-close
        option forwardfor except 127.0.0.0/8
        option redispatch
        retries 3
        timeout http-request 10s
        timeout queue 1m
        timeout connect 10s
        timeout client 1m
        timeout server 1m
        timeout http-keep-alive 10s
        timeout check 10s
        maxconn 3000

    frontend web *:80
        mode http
        maxconn 2000
        acl url_static path_beg -i /static /images /javascript /stylesheets
        acl url_static path_end -i .jpg .gif .png .css .js .html .txt .htm
        use_backend staticsrvs if url_static
        default_backend appsrvs

    backend staticsrvs
        balance roundrobin
        server stcsrvs1 172.18.67.11:8080 check
        server stcsrvs2 172.18.67.12:8080 check

    backend appsrvs
        balance roundrobin
        server wp1 172.18.67.11:80 check
        server wp2 172.18.67.12:80 check

    listen stats
        bind :10086
        stats enable
        stats uri /admin?stats
        stats auth admin:admin
        stats admin if TRUE
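The routing decision made by the frontend can be mimicked in shell to see which backend a given request would reach. This is an illustration of the two url_static ACLs only: pick_backend is a hypothetical helper, not part of HAProxy, and it expects the URL path without the query string.

```shell
#!/bin/bash
# Mimic the frontend's ACL logic: requests whose path starts with a static
# prefix or ends with a static extension go to staticsrvs, everything else
# to appsrvs. Matching is case-insensitive, like the -i flag on the ACLs.
# pick_backend is an illustrative helper, not part of HAProxy.
pick_backend() {
    local path
    path=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
    case $path in
        /static*|/images*|/javascript*|/stylesheets*)
            echo staticsrvs ;;
        *.jpg|*.gif|*.png|*.css|*.js|*.html|*.txt|*.htm)
            echo staticsrvs ;;
        *)
            echo appsrvs ;;
    esac
}

pick_backend /images/logo.PNG     # staticsrvs
pick_backend /wp-login.php        # appsrvs
```

The real configuration file itself can be checked for syntax errors before a restart with haproxy -c -f /etc/haproxy/haproxy.cfg.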

V. Starting the Services and Testing

1. Start haproxy and keepalived

    [root@inode2 ~]# systemctl restart haproxy
    [root@inode2 ~]# systemctl restart keepalived
    [root@inode3 ~]# systemctl restart haproxy
    [root@inode3 ~]# systemctl restart keepalived
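The restart commands above do not make the services start at boot; the status output in the test below reports the keepalived unit as disabled. The following sketch enables and restarts both services on a load balancer node. The run and enable_lb_services helpers are hypothetical, and the dry-run default is there so that the sketch prints the commands instead of executing them.

```shell
#!/bin/bash
# Enable and restart both services on a load balancer node.
# With DRY_RUN=1 (the default here) the commands are only printed, so the
# sketch is safe to try; set DRY_RUN=0 and run as root to apply them.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

enable_lb_services() {
    local svc
    for svc in haproxy keepalived; do
        run systemctl enable "$svc"
        run systemctl restart "$svc"
    done
}

enable_lb_services
```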

2. Testing

inode2:

    [root@inode2 ~]# systemctl status -l keepalived
    ● keepalived.service - LVS and VRRP High Availability Monitor
       Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)
       Active: active (running) since Wed 2017-05-17 23:49:45 CST; 6s ago
      Process: 28940 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)
     Main PID: 28941 (keepalived)
       CGroup: /system.slice/keepalived.service
               ├─28941 /usr/sbin/keepalived -D
               ├─28942 /usr/sbin/keepalived -D
               └─28943 /usr/sbin/keepalived -D

    May 17 23:49:45 inode2 Keepalived_vrrp[28943]: Registering gratuitous ARP shared channel
    May 17 23:49:45 inode2 Keepalived_vrrp[28943]: Opening file '/etc/keepalived/keepalived.conf'.
    May 17 23:49:45 inode2 Keepalived_vrrp[28943]: Configuration is using : 63025 Bytes
    May 17 23:49:45 inode2 Keepalived_vrrp[28943]: Using LinkWatch kernel netlink reflector...
    May 17 23:49:45 inode2 Keepalived_vrrp[28943]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]
    May 17 23:49:46 inode2 Keepalived_vrrp[28943]: VRRP_Instance(http) Transition to MASTER STATE
    May 17 23:49:47 inode2 Keepalived_vrrp[28943]: VRRP_Instance(http) Entering MASTER STATE
    May 17 23:49:47 inode2 Keepalived_vrrp[28943]: VRRP_Instance(http) setting protocol VIPs.
    May 17 23:49:47 inode2 Keepalived_healthcheckers[28942]: Netlink reflector reports IP 172.18.67.33 added
    May 17 23:49:47 inode2 Keepalived_vrrp[28943]: VRRP_Instance(http) Sending gratuitous ARPs on eno16777736 for 172.18.67.33

The log shows that inode2 has entered the MASTER state. Now check inode3:

    [root@inode3 ~]# systemctl start keepalived
    [root@inode3 ~]# systemctl status -l keepalived
    ● keepalived.service - LVS and VRRP High Availability Monitor
       Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)
       Active: active (running) since Wed 2017-05-17 23:51:08 CST; 5s ago
      Process: 42610 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)
     Main PID: 42611 (keepalived)
       CGroup: /system.slice/keepalived.service
               ├─42611 /usr/sbin/keepalived -D
               ├─42612 /usr/sbin/keepalived -D
               └─42613 /usr/sbin/keepalived -D

    May 17 23:51:08 inode3 Keepalived_vrrp[42613]: Netlink reflector reports IP fe80::20c:29ff:fe78:24c3 added
    May 17 23:51:08 inode3 Keepalived_vrrp[42613]: Registering Kernel netlink reflector
    May 17 23:51:08 inode3 Keepalived_healthcheckers[42612]: Using LinkWatch kernel netlink reflector...
    May 17 23:51:08 inode3 Keepalived_vrrp[42613]: Registering Kernel netlink command channel
    May 17 23:51:08 inode3 Keepalived_vrrp[42613]: Registering gratuitous ARP shared channel
    May 17 23:51:08 inode3 Keepalived_vrrp[42613]: Opening file '/etc/keepalived/keepalived.conf'.
    May 17 23:51:08 inode3 Keepalived_vrrp[42613]: Configuration is using : 63023 Bytes
    May 17 23:51:08 inode3 Keepalived_vrrp[42613]: Using LinkWatch kernel netlink reflector...
    May 17 23:51:08 inode3 Keepalived_vrrp[42613]: VRRP_Instance(http) Entering BACKUP STATE
    May 17 23:51:08 inode3 Keepalived_vrrp[42613]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]

inode3 is in the BACKUP state. Now stop the keepalived service on inode2 and check inode3 again:

    [root@inode2 ~]# systemctl stop keepalived
    [root@inode3 ~]# systemctl status -l keepalived
    ● keepalived.service - LVS and VRRP High Availability Monitor
       Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)
       Active: active (running) since Wed 2017-05-17 23:51:08 CST; 1min 2s ago
      Process: 42610 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)
     Main PID: 42611 (keepalived)
       CGroup: /system.slice/keepalived.service
               ├─42611 /usr/sbin/keepalived -D
               ├─42612 /usr/sbin/keepalived -D
               └─42613 /usr/sbin/keepalived -D

    May 17 23:51:08 inode3 Keepalived_vrrp[42613]: Opening file '/etc/keepalived/keepalived.conf'.
    May 17 23:51:08 inode3 Keepalived_vrrp[42613]: Configuration is using : 63023 Bytes
    May 17 23:51:08 inode3 Keepalived_vrrp[42613]: Using LinkWatch kernel netlink reflector...
    May 17 23:51:08 inode3 Keepalived_vrrp[42613]: VRRP_Instance(http) Entering BACKUP STATE
    May 17 23:51:08 inode3 Keepalived_vrrp[42613]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]
    May 17 23:52:07 inode3 Keepalived_vrrp[42613]: VRRP_Instance(http) Transition to MASTER STATE
    May 17 23:52:08 inode3 Keepalived_vrrp[42613]: VRRP_Instance(http) Entering MASTER STATE
    May 17 23:52:08 inode3 Keepalived_vrrp[42613]: VRRP_Instance(http) setting protocol VIPs.
    May 17 23:52:08 inode3 Keepalived_healthcheckers[42612]: Netlink reflector reports IP 172.18.67.33 added
    May 17 23:52:08 inode3 Keepalived_vrrp[42613]: VRRP_Instance(http) Sending gratuitous ARPs on eno16777736 for 172.18.67.33

inode3 has now entered the MASTER state and taken over the VIP 172.18.67.33, which demonstrates the high-availability behavior.
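Besides reading the service log, the failover can be confirmed by checking which node currently has the VIP configured on its interface. The following is a minimal sketch; holds_vip is a hypothetical helper that reads the output of the ip command on standard input.

```shell
#!/bin/bash
# Read `ip -4 -o addr show` output on stdin and succeed if the VIP is
# configured on this node. holds_vip is a hypothetical helper.
holds_vip() {
    local vip=$1
    # Fixed-string match on "inet <vip>/" so that 172.18.67.33 is not
    # confused with 172.18.67.3.
    grep -Fq "inet $vip/"
}

# On either load balancer:
#   ip -4 -o addr show dev eno16777736 | holds_vip 172.18.67.33 \
#       && echo "this node holds the VIP"
```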

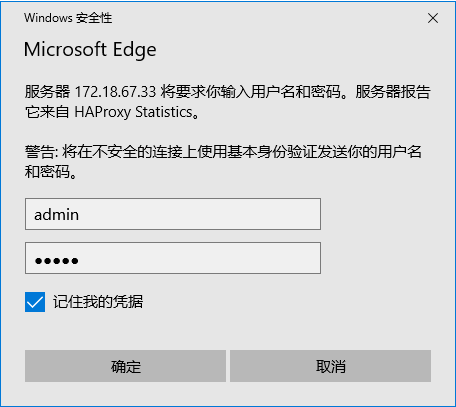

Next we test HAProxy. Its configuration file contains the following section:

    listen stats
        bind :10086
        stats enable
        stats uri /admin?stats
        stats auth admin:admin
        stats admin if TRUE

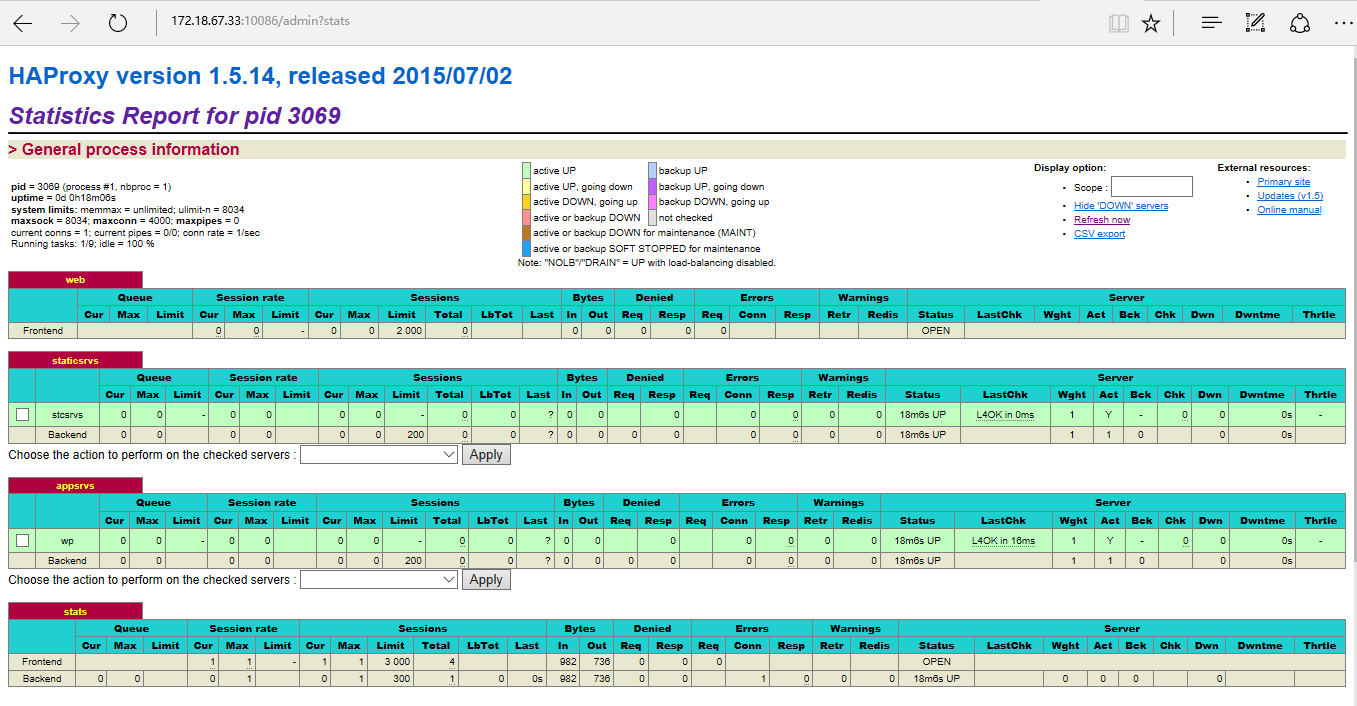

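This section enables HAProxy's statistics page, where we can view statistics and, because of the stats admin if TRUE line, change the state of back-end servers from the browser. Opening http://172.18.67.33:10086/admin?stats brings up a login prompt as shown in the figure; after entering the user name and password set by stats auth, we reach the statistics and administration page.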
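The same statistics are available in CSV form by appending ;csv to the stats URI, which is convenient for checks from the command line. The following sketch prints the status of every proxy and server row; stats_status is a hypothetical helper, and it looks the columns up by the names in the CSV header rather than by fixed position.

```shell
#!/bin/bash
# Print "proxy/server status" for every row of HAProxy's CSV statistics
# read from stdin. Column positions are taken from the header line.
# stats_status is a hypothetical helper.
stats_status() {
    awk -F, '
        NR == 1 {
            sub(/^# /, "", $1)            # header starts with "# pxname"
            for (i = 1; i <= NF; i++) col[$i] = i
            next
        }
        NF > 1 { print $col["pxname"] "/" $col["svname"], $col["status"] }
    '
}

# Fetch the CSV through the VIP with the credentials from stats auth:
#   curl -s -u admin:admin 'http://172.18.67.33:10086/admin?stats;csv' | stats_status
```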

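The figure shows the two load-balanced back-end groups: one handles dynamic content and the other serves static resources. The data for the dynamic content is stored on the back-end NFS server.

Finally, browsing to http://172.18.67.33 starts the WordPress installer. At this point a simple highly available, load-balanced service is up and running.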