Fixing the single point of failure caused by Kafka's __consumer_offsets topic being created with a replication factor of 1

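The problem:

The __consumer_offsets topic is created automatically by Kafka, with 50 partitions by default, but all of them live on a single Kafka server. Isn't that an obvious single point of failure?

In testing, killing the machine that stores __consumer_offsets made every consumer stop consuming. How can this be solved?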

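Cause analysis:

The __consumer_offsets topic, which stores consumer offsets, is created automatically by the Kafka broker. So what determines its partition count and replication factor when it is created?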

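The partition count is 50 by default (the broker setting `offsets.topic.num.partitions`), so there is nothing surprising there. What about the replication factor? It evidently ended up as 1. My reasoning was that if server.properties does not specify a replication factor for topics, the default is 1. Strictly speaking, that default belongs to `default.replication.factor`, which applies to ordinary auto-created topics. The offsets topic has its own setting, `offsets.topic.replication.factor`, which defaults to 3, but brokers older than 0.11.0 lower it to the number of brokers that are alive when the topic is first created. A topic created while only one broker was running therefore gets a single replica.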

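__consumer_offsets is a critical topic, so how can we let it have only one replica? That is a single point of failure: if the broker hosting those partitions goes down, our consumers can no longer consume data.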
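To illustrate why one dead broker can stall a consumer group: each group commits its offsets to exactly one partition of __consumer_offsets, and the broker leading that partition coordinates the group. The sketch below shows, as far as I understand the broker logic, how a group id maps to a partition (the absolute value of the Java `String.hashCode()` of the group id, modulo the partition count). The function names are hypothetical and the code is an approximation for illustration, not something taken from the cluster described here.

```python
def java_string_hashcode(s: str) -> int:
    """Reproduce Java's String.hashCode() as a signed 32-bit integer."""
    data = s.encode("utf-16-be")  # Java hashes UTF-16 code units
    h = 0
    for i in range(0, len(data), 2):
        unit = int.from_bytes(data[i:i + 2], "big")
        h = (31 * h + unit) & 0xFFFFFFFF
    # Convert the unsigned 32-bit value to a signed one.
    return h - 0x100000000 if h >= 0x80000000 else h


def offsets_partition_for(group_id: str, num_partitions: int = 50) -> int:
    """Return the __consumer_offsets partition assumed to hold this group."""
    h = java_string_hashcode(group_id)
    # Assumption: mirrors Kafka's Utils.abs, which maps Integer.MIN_VALUE to 0.
    non_negative = 0 if h == -0x80000000 else abs(h)
    return non_negative % num_partitions


if __name__ == "__main__":
    # "friend-group" is the group.id that appears in the consumer log.
    print(offsets_partition_for("friend-group"))
```

If that one partition has a single replica and its broker dies, the group has no coordinator to fall back on, which matches the behaviour observed in the test.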

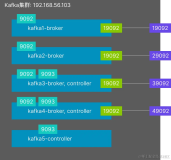

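I set up a Kafka cluster of 3 brokers on my laptop to work through this problem. Below are my notes.

Note: if your __consumer_offsets topic has already been created and lives on a single broker, deleting it with the command-line tool fails with an error saying it is an internal Kafka topic and cannot be deleted. You can delete it in ZooKeeper instead (I connected to ZooKeeper with the ZooInspector client and deleted the directories and nodes related to the __consumer_offsets topic).

Next, edit Kafka's main configuration file, server.properties. Here is the configuration file of the first broker: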
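A different technique from the ZooKeeper deletion above is to keep the existing topic and raise its replication factor with a partition reassignment. The sketch below only generates the reassignment JSON in the format that `kafka-reassign-partitions.sh` reads. The helper name `build_reassignment` and the round-robin replica order are assumptions made for illustration.

```python
import json


def build_reassignment(topic, num_partitions, broker_ids):
    """Build a reassignment plan placing every partition on all given brokers."""
    n = len(broker_ids)
    partitions = []
    for p in range(num_partitions):
        # Rotate the broker list so preferred leaders are spread evenly.
        replicas = [broker_ids[(p + i) % n] for i in range(n)]
        partitions.append({"topic": topic, "partition": p, "replicas": replicas})
    return {"version": 1, "partitions": partitions}


if __name__ == "__main__":
    # Broker ids 0, 1 and 2 match the broker.id values used in this article.
    plan = build_reassignment("__consumer_offsets", 50, [0, 1, 2])
    print(json.dumps(plan, indent=2))
```

Saved to a file, the output could be passed to `kafka-reassign-partitions.sh` with `--reassignment-json-file` and `--execute`. This has not been verified on the cluster described here.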

    [root@hadoop01 kafka-logs]# cat /opt/kafka/config/server.properties
    broker.id=0
    listeners=PLAINTEXT://:9092
    port=9092
    num.network.threads=3
    num.io.threads=8
    socket.send.buffer.bytes=102400
    socket.receive.buffer.bytes=102400
    socket.request.max.bytes=104857600
    log.dirs=/opt/logs/kafka-logs
    num.partitions=3
    num.recovery.threads.per.data.dir=1
    log.retention.hours=168
    log.segment.bytes=536870912
    log.retention.check.interval.ms=300000
    zookeeper.connect=192.168.71.11:2181,192.168.71.12:2181,192.168.71.13:2181
    zookeeper.connection.timeout.ms=6000
    delete.topic.enable=true
    host.name=192.168.71.11
    advertised.host.name=192.168.71.11
    auto.create.topics.enable=true
    default.replication.factor=3

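In the configuration above I enabled automatic topic creation and set the default partition count to 3 and the default replication factor to 3 (I have 3 brokers, and we want a replica on every machine so that the partitions stay highly available).

Here is the server.properties file of the second broker: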
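As a quick sanity check on settings like these, the following sketch parses the text of a server.properties file and flags replication factors that are either larger than the number of brokers or leave a single replica. The function names are hypothetical. The fallback values (1 for `default.replication.factor`, 3 for `offsets.topic.replication.factor`) are the broker defaults as I understand them.

```python
def parse_properties(text):
    """Parse simple key=value lines, skipping blanks and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        props[key.strip()] = value.strip()
    return props


def replication_warnings(props, broker_count):
    """Return human-readable warnings about the replication settings."""
    # Assumed broker defaults when a key is absent from the file.
    defaults = (
        ("default.replication.factor", 1),
        ("offsets.topic.replication.factor", 3),
    )
    warnings = []
    for key, default in defaults:
        value = int(props.get(key, default))
        if value > broker_count:
            warnings.append(f"{key}={value} exceeds the {broker_count} available brokers")
        elif value < 2:
            warnings.append(f"{key}={value} leaves partitions with a single replica")
    return warnings


if __name__ == "__main__":
    sample = "broker.id=0\nnum.partitions=3\ndefault.replication.factor=3\n"
    print(replication_warnings(parse_properties(sample), broker_count=3))
```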

    [root@hadoop02 kafka-logs]# cat /opt/kafka/config/server.properties
    broker.id=1
    listeners=PLAINTEXT://:9092
    port=9092
    num.network.threads=3
    num.io.threads=8
    socket.send.buffer.bytes=102400
    socket.receive.buffer.bytes=102400
    socket.request.max.bytes=104857600
    log.dirs=/opt/logs/kafka-logs
    num.partitions=3
    num.recovery.threads.per.data.dir=1
    log.retention.hours=168
    log.segment.bytes=536870912
    log.retention.check.interval.ms=300000
    zookeeper.connect=192.168.71.11:2181,192.168.71.12:2181,192.168.71.13:2181
    zookeeper.connection.timeout.ms=6000
    delete.topic.enable=true
    host.name=192.168.71.12
    advertised.host.name=192.168.71.12
    auto.create.topics.enable=true
    default.replication.factor=3

And the server.properties file of the third broker:

    [root@hadoop03 kafka-logs]# cat /opt/kafka/config/server.properties
    broker.id=2
    listeners=PLAINTEXT://:9092
    port=9092
    num.network.threads=3
    num.io.threads=8
    socket.send.buffer.bytes=102400
    socket.receive.buffer.bytes=102400
    socket.request.max.bytes=104857600
    log.dirs=/opt/logs/kafka-logs
    num.partitions=3
    num.recovery.threads.per.data.dir=1
    log.retention.hours=168
    log.segment.bytes=536870912
    log.retention.check.interval.ms=300000
    zookeeper.connect=192.168.71.11:2181,192.168.71.12:2181,192.168.71.13:2181
    zookeeper.connection.timeout.ms=6000
    delete.topic.enable=true
    host.name=192.168.71.13
    advertised.host.name=192.168.71.13
    auto.create.topics.enable=true
    default.replication.factor=3

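With the configuration in place, start the 3 brokers. The __consumer_offsets topic is not created at this point. It is created when a consumer first starts consuming data.

First start a consumer client to consume data, then start a producer client to send data to the Kafka cluster.

Listing the log directory on the first broker shows 50 __consumer_offsets partitions: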

    [root@hadoop01 kafka-logs]# ll
    total 224
    -rw-r--r--. 1 root root    0 Mar 24 13:19 cleaner-offset-checkpoint
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-0
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-1
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-10
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-11
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-12
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-13
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-14
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-15
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-16
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-17
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-18
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-19
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-2
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-20
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-21
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-22
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-23
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-24
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-25
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-26
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-27
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-28
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-29
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-3
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-30
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-31
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-32
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-33
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-34
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-35
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-36
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-37
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-38
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-39
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-4
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-40
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-41
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-42
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-43
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-44
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-45
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-46
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-47
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-48
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-49
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-5
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-6
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-7
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-8
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-9
    drwxr-xr-x. 2 root root 4096 Mar 24 13:54 friend-0
    drwxr-xr-x. 2 root root 4096 Mar 24 13:54 friend-1
    drwxr-xr-x. 2 root root 4096 Mar 24 13:54 friend-2
    -rw-r--r--. 1 root root   54 Mar 24 13:19 meta.properties
    -rw-r--r--. 1 root root 1228 Mar 24 13:54 recovery-point-offset-checkpoint
    -rw-r--r--. 1 root root 1228 Mar 24 13:54 replication-offset-checkpoint
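Counting directories by eye is error-prone, so here is a minimal sketch that tallies partition directories per topic in a broker's log directory. It assumes the `<topic>-<partition>` directory naming visible in the listing. The function name `count_partition_dirs` is hypothetical, and the path in the usage example is the `log.dirs` value from this article's configuration.

```python
import os
import re
from collections import Counter

# A partition directory is named "<topic>-<partition number>".
PARTITION_DIR = re.compile(r"^(?P<topic>.+)-(?P<partition>\d+)$")


def count_partition_dirs(log_dir):
    """Return {topic: number of partition directories} for one log directory."""
    counts = Counter()
    for name in os.listdir(log_dir):
        match = PARTITION_DIR.match(name)
        # Skip checkpoint files and anything else that is not a directory.
        if match and os.path.isdir(os.path.join(log_dir, name)):
            counts[match.group("topic")] += 1
    return dict(counts)


if __name__ == "__main__":
    log_dir = "/opt/logs/kafka-logs"
    if os.path.isdir(log_dir):
        print(count_partition_dirs(log_dir))
```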

The second broker also has 50 "__consumer_offsets" partitions:

    [root@hadoop02 kafka-logs]# ll
    total 224
    -rw-r--r--. 1 root root    0 Mar 24 13:19 cleaner-offset-checkpoint
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-0
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-1
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-10
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-11
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-12
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-13
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-14
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-15
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-16
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-17
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-18
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-19
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-2
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-20
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-21
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-22
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-23
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-24
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-25
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-26
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-27
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-28
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-29
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-3
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-30
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-31
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-32
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-33
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-34
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-35
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-36
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-37
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-38
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-39
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-4
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-40
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-41
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-42
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-43
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-44
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-45
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-46
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-47
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-48
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-49
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-5
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-6
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-7
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-8
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-9
    drwxr-xr-x. 2 root root 4096 Mar 24 13:54 friend-0
    drwxr-xr-x. 2 root root 4096 Mar 24 13:54 friend-1
    drwxr-xr-x. 2 root root 4096 Mar 24 13:54 friend-2
    -rw-r--r--. 1 root root   54 Mar 24 13:19 meta.properties
    -rw-r--r--. 1 root root 1228 Mar 24 13:54 recovery-point-offset-checkpoint
    -rw-r--r--. 1 root root 1228 Mar 24 13:54 replication-offset-checkpoint

The "__consumer_offsets" partitions on the third broker, followed by the output of describing both topics:

    [root@hadoop03 kafka-logs]# ll
    total 224
    -rw-r--r--. 1 root root    0 Mar 24 13:19 cleaner-offset-checkpoint
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-0
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-1
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-10
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-11
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-12
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-13
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-14
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-15
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-16
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-17
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-18
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-19
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-2
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-20
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-21
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-22
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-23
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-24
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-25
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-26
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-27
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-28
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-29
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-3
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-30
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-31
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-32
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-33
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-34
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-35
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-36
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-37
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-38
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-39
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-4
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-40
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-41
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-42
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-43
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-44
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-45
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-46
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-47
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-48
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-49
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-5
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-6
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-7
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-8
    drwxr-xr-x. 2 root root 4096 Mar 24 13:46 __consumer_offsets-9
    drwxr-xr-x. 2 root root 4096 Mar 24 13:54 friend-0
    drwxr-xr-x. 2 root root 4096 Mar 24 13:54 friend-1
    drwxr-xr-x. 2 root root 4096 Mar 24 13:54 friend-2
    -rw-r--r--. 1 root root   54 Mar 24 13:19 meta.properties
    -rw-r--r--. 1 root root 1228 Mar 24 13:54 recovery-point-offset-checkpoint
    -rw-r--r--. 1 root root 1228 Mar 24 13:54 replication-offset-checkpoint

    [root@hadoop03 kafka-logs]# /opt/kafka/bin/kafka-topics.sh --describe --topic __consumer_offsets --zookeeper localhost:2181
    Topic:__consumer_offsets  PartitionCount:50  ReplicationFactor:3  Configs:segment.bytes=104857600,cleanup.policy=compact,compression.type=producer
        Topic: __consumer_offsets  Partition: 0   Leader: 1  Replicas: 1,2,0  Isr: 1,2,0
        Topic: __consumer_offsets  Partition: 1   Leader: 2  Replicas: 2,0,1  Isr: 2,0,1
        Topic: __consumer_offsets  Partition: 2   Leader: 0  Replicas: 0,1,2  Isr: 1,2,0
        Topic: __consumer_offsets  Partition: 3   Leader: 1  Replicas: 1,0,2  Isr: 1,0,2
        Topic: __consumer_offsets  Partition: 4   Leader: 2  Replicas: 2,1,0  Isr: 2,1,0
        Topic: __consumer_offsets  Partition: 5   Leader: 0  Replicas: 0,2,1  Isr: 2,1,0
        Topic: __consumer_offsets  Partition: 6   Leader: 1  Replicas: 1,2,0  Isr: 1,2,0
        Topic: __consumer_offsets  Partition: 7   Leader: 2  Replicas: 2,0,1  Isr: 2,0,1
        Topic: __consumer_offsets  Partition: 8   Leader: 0  Replicas: 0,1,2  Isr: 1,2,0
        Topic: __consumer_offsets  Partition: 9   Leader: 1  Replicas: 1,0,2  Isr: 1,0,2
        Topic: __consumer_offsets  Partition: 10  Leader: 2  Replicas: 2,1,0  Isr: 2,1,0
        Topic: __consumer_offsets  Partition: 11  Leader: 0  Replicas: 0,2,1  Isr: 2,1,0
        Topic: __consumer_offsets  Partition: 12  Leader: 1  Replicas: 1,2,0  Isr: 1,0,2
        Topic: __consumer_offsets  Partition: 13  Leader: 2  Replicas: 2,0,1  Isr: 2,0,1
        Topic: __consumer_offsets  Partition: 14  Leader: 0  Replicas: 0,1,2  Isr: 1,2,0
        Topic: __consumer_offsets  Partition: 15  Leader: 1  Replicas: 1,0,2  Isr: 1,0,2
        Topic: __consumer_offsets  Partition: 16  Leader: 2  Replicas: 2,1,0  Isr: 2,1,0
        Topic: __consumer_offsets  Partition: 17  Leader: 0  Replicas: 0,2,1  Isr: 2,1,0
        Topic: __consumer_offsets  Partition: 18  Leader: 1  Replicas: 1,2,0  Isr: 1,2,0
        Topic: __consumer_offsets  Partition: 19  Leader: 2  Replicas: 2,0,1  Isr: 2,0,1
        Topic: __consumer_offsets  Partition: 20  Leader: 0  Replicas: 0,1,2  Isr: 1,2,0
        Topic: __consumer_offsets  Partition: 21  Leader: 1  Replicas: 1,0,2  Isr: 1,0,2
        Topic: __consumer_offsets  Partition: 22  Leader: 2  Replicas: 2,1,0  Isr: 2,1,0
        Topic: __consumer_offsets  Partition: 23  Leader: 0  Replicas: 0,2,1  Isr: 2,1,0
        Topic: __consumer_offsets  Partition: 24  Leader: 1  Replicas: 1,2,0  Isr: 1,0,2
        Topic: __consumer_offsets  Partition: 25  Leader: 2  Replicas: 2,0,1  Isr: 2,0,1
        Topic: __consumer_offsets  Partition: 26  Leader: 0  Replicas: 0,1,2  Isr: 1,2,0
        Topic: __consumer_offsets  Partition: 27  Leader: 1  Replicas: 1,0,2  Isr: 1,0,2
        Topic: __consumer_offsets  Partition: 28  Leader: 2  Replicas: 2,1,0  Isr: 2,1,0
        Topic: __consumer_offsets  Partition: 29  Leader: 0  Replicas: 0,2,1  Isr: 2,1,0
        Topic: __consumer_offsets  Partition: 30  Leader: 1  Replicas: 1,2,0  Isr: 1,2,0
        Topic: __consumer_offsets  Partition: 31  Leader: 2  Replicas: 2,0,1  Isr: 2,0,1
        Topic: __consumer_offsets  Partition: 32  Leader: 0  Replicas: 0,1,2  Isr: 1,2,0
        Topic: __consumer_offsets  Partition: 33  Leader: 1  Replicas: 1,0,2  Isr: 1,0,2
        Topic: __consumer_offsets  Partition: 34  Leader: 2  Replicas: 2,1,0  Isr: 2,1,0
        Topic: __consumer_offsets  Partition: 35  Leader: 0  Replicas: 0,2,1  Isr: 2,1,0
        Topic: __consumer_offsets  Partition: 36  Leader: 1  Replicas: 1,2,0  Isr: 1,2,0
        Topic: __consumer_offsets  Partition: 37  Leader: 2  Replicas: 2,0,1  Isr: 2,0,1
        Topic: __consumer_offsets  Partition: 38  Leader: 0  Replicas: 0,1,2  Isr: 1,2,0
        Topic: __consumer_offsets  Partition: 39  Leader: 1  Replicas: 1,0,2  Isr: 1,0,2
        Topic: __consumer_offsets  Partition: 40  Leader: 2  Replicas: 2,1,0  Isr: 2,1,0
        Topic: __consumer_offsets  Partition: 41  Leader: 0  Replicas: 0,2,1  Isr: 2,1,0
        Topic: __consumer_offsets  Partition: 42  Leader: 1  Replicas: 1,2,0  Isr: 1,0,2
        Topic: __consumer_offsets  Partition: 43  Leader: 2  Replicas: 2,0,1  Isr: 2,0,1
        Topic: __consumer_offsets  Partition: 44  Leader: 0  Replicas: 0,1,2  Isr: 1,2,0
        Topic: __consumer_offsets  Partition: 45  Leader: 1  Replicas: 1,0,2  Isr: 1,0,2
        Topic: __consumer_offsets  Partition: 46  Leader: 2  Replicas: 2,1,0  Isr: 2,1,0
        Topic: __consumer_offsets  Partition: 47  Leader: 0  Replicas: 0,2,1  Isr: 2,1,0
        Topic: __consumer_offsets  Partition: 48  Leader: 1  Replicas: 1,2,0  Isr: 1,2,0
        Topic: __consumer_offsets  Partition: 49  Leader: 2  Replicas: 2,0,1  Isr: 2,0,1

    [root@hadoop03 kafka-logs]# /opt/kafka/bin/kafka-topics.sh --describe --topic friend --zookeeper localhost:2181
    Topic:friend  PartitionCount:3  ReplicationFactor:3  Configs:
        Topic: friend  Partition: 0  Leader: 2  Replicas: 2,0,1  Isr: 2,0,1
        Topic: friend  Partition: 1  Leader: 0  Replicas: 0,1,2  Isr: 0,1,2
        Topic: friend  Partition: 2  Leader: 1  Replicas: 1,2,0  Isr: 1,2,0
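The describe output is what actually confirms the fix: every partition should list three replicas, all of them in the ISR, with leaders spread across the brokers. A minimal sketch of automating that check on the text printed by `kafka-topics.sh --describe` follows. The function names and the regular expression are my own, written against the line format shown in this article, and other Kafka versions may print the lines differently.

```python
import re
from collections import Counter

# Matches one per-partition line of `kafka-topics.sh --describe` output.
PARTITION_LINE = re.compile(
    r"Topic:\s*(?P<topic>\S+)\s+Partition:\s*(?P<partition>\d+)\s+"
    r"Leader:\s*(?P<leader>-?\d+)\s+Replicas:\s*(?P<replicas>[\d,]*)\s+"
    r"Isr:\s*(?P<isr>[\d,]*)"
)


def _ids(csv):
    """Turn a comma-separated broker list such as '1,2,0' into a list of ints."""
    return [int(x) for x in csv.split(",") if x]


def parse_describe(text):
    """Return one dict per partition line found in the describe output."""
    return [
        {
            "topic": m.group("topic"),
            "partition": int(m.group("partition")),
            "leader": int(m.group("leader")),
            "replicas": _ids(m.group("replicas")),
            "isr": _ids(m.group("isr")),
        }
        for m in PARTITION_LINE.finditer(text)
    ]


def under_replicated(rows, expected_rf):
    """Partitions with too few replicas or with replicas missing from the ISR."""
    return [
        r["partition"]
        for r in rows
        if len(r["replicas"]) < expected_rf or set(r["isr"]) != set(r["replicas"])
    ]


def leader_counts(rows):
    """How many partitions each broker currently leads."""
    return dict(Counter(r["leader"] for r in rows))
```

For the output above, `under_replicated(rows, 3)` should come back empty. A topic created with a single replica would list every partition.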

Below is the log produced when a consumer starts consuming data:

======启动一个消费者后的日志情况====================================================================== D:\java\jdk1.8.0_121\bin\java -Didea.launcher.port=7532 -Didea.launcher.bin.path=D:\java\IDEA-14.1.4\bin -Dfile.encoding=UTF-8 -classpath D:\java\jdk1.8.0_121\jre\lib\charsets.jar;D:\java\jdk1.8.0_121\jre\lib\deploy.jar;D:\java\jdk1.8.0_121\jre\lib\javaws.jar;D:\java\jdk1.8.0_121\jre\lib\jce.jar;D:\java\jdk1.8.0_121\jre\lib\jfr.jar;D:\java\jdk1.8.0_121\jre\lib\jfxswt.jar;D:\java\jdk1.8.0_121\jre\lib\jsse.jar;D:\java\jdk1.8.0_121\jre\lib\management-agent.jar;D:\java\jdk1.8.0_121\jre\lib\plugin.jar;D:\java\jdk1.8.0_121\jre\lib\resources.jar;D:\java\jdk1.8.0_121\jre\lib\rt.jar;D:\java\jdk1.8.0_121\jre\lib\ext\access-bridge-64.jar;D:\java\jdk1.8.0_121\jre\lib\ext\cldrdata.jar;D:\java\jdk1.8.0_121\jre\lib\ext\dnsns.jar;D:\java\jdk1.8.0_121\jre\lib\ext\jaccess.jar;D:\java\jdk1.8.0_121\jre\lib\ext\jfxrt.jar;D:\java\jdk1.8.0_121\jre\lib\ext\localedata.jar;D:\java\jdk1.8.0_121\jre\lib\ext\nashorn.jar;D:\java\jdk1.8.0_121\jre\lib\ext\sunec.jar;D:\java\jdk1.8.0_121\jre\lib\ext\sunjce_provider.jar;D:\java\jdk1.8.0_121\jre\lib\ext\sunmscapi.jar;D:\java\jdk1.8.0_121\jre\lib\ext\sunpkcs11.jar;D:\java\jdk1.8.0_121\jre\lib\ext\zipfs.jar;D:\zp\git\zp-kafka\zp-consumer-friend\build\classes\main;D:\zp\git\zp-kafka\zp-consumer-friend\build\resources\main;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.postgresql\postgresql\9.4-1201-jdbc41\870b0e689b514304461a9c1aba11920dc5de4321\postgresql-9.4-1201-jdbc41.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\joda-time\joda-time\2.5\c73038a3688525aad5cf33409df483178290cd64\joda-time-2.5.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.apache.commons\commons-lang3\3.3.2\90a3822c38ec8c996e84c16a3477ef632cbc87a3\commons-lang3-3.3.2.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.apache.commons\commons-io\1.3.2\b6dde38349ba9bb5e6ea6320531eae969985dae5\commons-io-1.3.2.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\commons-net\commo
ns-net\3.3\cd0d5510908225f76c5fe5a3f1df4fa44866f81e\commons-net-3.3.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\com.fasterxml.jackson.core\jackson-databind\2.4.2\8e31266a272ad25ac4c089734d93e8d811652c1f\jackson-databind-2.4.2.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.apache.httpcomponents\httpclient\4.3.5\9783d89b8eea20a517a4afc5f979bd2882b54c44\httpclient-4.3.5.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.mybatis\mybatis\3.2.8\7b6bf82cea13570b5290d6ed841283a1fcce170\mybatis-3.2.8.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.mybatis\mybatis-spring\1.2.2\1e40a7f5373e4242075a1d386817e7dd49b1697d\mybatis-spring-1.2.2.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\com.github.miemiedev\mybatis-paginator\1.2.15\d5d9891d2d89b13b0856a00f04ff60dd1f95ffdb\mybatis-paginator-1.2.15.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\com.github.pagehelper\pagehelper\3.4.2\173072cf0dab08b102d7420932f678d0c60138b7\pagehelper-3.4.2.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\mysql\mysql-connector-java\5.1.32\d28c9a6cf0810fd0e2180e44029c10a54ca26de8\mysql-connector-java-5.1.32.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\com.alibaba\druid\1.0.9\f91e47c9018578e5ca4d2e808cc3351505ae3ebb\druid-1.0.9.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\jstl\jstl\1.2\74aca283cd4f4b4f3e425f5820cda58f44409547\jstl-1.2.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\commons-fileupload\commons-fileupload\1.3.1\c621b54583719ac0310404463d6d99db27e1052c\commons-fileupload-1.3.1.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\redis.clients\jedis\2.7.2\f2f47f1025ea5090263820e8598e56eb47f5c88a\jedis-2.7.2.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.apache.solr\solr-solrj\4.10.3\6e5ee2f18a6615d5419b61e60e3cae274ac66085\solr-solrj-4.10.3.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\com.alibaba\fastjson\1.2.4\fbcf8415e32859b473b336e5ac6422ee69b1185b\fastjson-1.2.4.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-
2.1\org.springframework.integration\spring-integration-kafka\1.3.0.RELEASE\6d46351ea70084d51cd43f62cd80bb20af1c9d96\spring-integration-kafka-1.3.0.RELEASE.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.springframework.kafka\spring-kafka\1.1.3.RELEASE\a45832c6a155383b2a54659926c1dc325052d883\spring-kafka-1.1.3.RELEASE.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.apache.kafka\kafka_2.10\0.10.0.0\37899467b805929a2ae898d312cd789dbd902c3d\kafka_2.10-0.10.0.0.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\com.fasterxml.jackson.core\jackson-annotations\2.4.0\d6a66c7a5f01cf500377bd669507a08cfeba882a\jackson-annotations-2.4.0.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\com.fasterxml.jackson.core\jackson-core\2.4.2\ceb72830d95c512b4b300a38f29febc85bdf6e4b\jackson-core-2.4.2.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.apache.httpcomponents\httpcore\4.3.2\31fbbff1ddbf98f3aa7377c94d33b0447c646b6e\httpcore-4.3.2.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\commons-codec\commons-codec\1.6\b7f0fc8f61ecadeb3695f0b9464755eee44374d4\commons-codec-1.6.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.apache.commons\commons-pool2\2.3\62a559a025fd890c30364296ece14643ba9c8c5b\commons-pool2-2.3.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.apache.httpcomponents\httpmime\4.3.1\f7899276dddd01d8a42ecfe27e7031fcf9824422\httpmime-4.3.1.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.apache.zookeeper\zookeeper\3.4.6\1b2502e29da1ebaade2357cd1de35a855fa3755\zookeeper-3.4.6.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.codehaus.woodstox\wstx-asl\3.2.7\252c7faae9ce98cb9c9d29f02db88f7373e7f407\wstx-asl-3.2.7.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.noggit\noggit\0.5\8e6e65624d2e09a30190c6434abe23b7d4e5413c\noggit-0.5.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.springframework.integration\spring-integration-core\4.1.6.RELEASE\7b95eb7f4c08070c345a723720fd5dbccf71b140\spring-integration-core-
4.1.6.RELEASE.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\com.yammer.metrics\metrics-annotation\2.2.0\62962b54c490a95c0bb255fa93b0ddd6cc36dd4b\metrics-annotation-2.2.0.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\com.yammer.metrics\metrics-core\2.2.0\f82c035cfa786d3cbec362c38c22a5f5b1bc8724\metrics-core-2.2.0.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\io.projectreactor\reactor-core\2.0.6.RELEASE\a36e58c9d2d0ac1f47e17e8db60a7ebb9d09789c\reactor-core-2.0.6.RELEASE.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.springframework\spring-messaging\4.3.5.RELEASE\480f1116f2060107493b91e72e21359b02aca776\spring-messaging-4.3.5.RELEASE.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.springframework.retry\spring-retry\1.1.3.RELEASE\f9517754a9990194ed0daecb5653e48564d557ee\spring-retry-1.1.3.RELEASE.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.projectreactor\reactor-core\1.1.4.RELEASE\da621f1aef5f8cd5c22ae78afec53f1e8659caed\reactor-core-1.1.4.RELEASE.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.reactivestreams\reactive-streams\1.0.0\14b8c877d98005ba3941c9257cfe09f6ed0e0d74\reactive-streams-1.0.0.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\com.lmax\disruptor\3.2.1\db375f499e32c3f06549e9addf8b1647123d6426\disruptor-3.2.1.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\io.gatling\jsr166e\1.0\d1bf191a18dfe6e3157a4fbf6b527390d906ace6\jsr166e-1.0.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\commons-io\commons-io\2.3\cd8d6ffc833cc63c30d712a180f4663d8f55799b\commons-io-2.3.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\com.101tec\zkclient\0.8\c0f700a4a3b386279d7d8dd164edecbe836cbfdb\zkclient-0.8.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.scala-lang\scala-library\2.10.6\421989aa8f95a05a4f894630aad96b8c7b828732\scala-library-2.10.6.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\net.sf.jopt-simple\jopt-simple\4.9\ee9e9eaa0a35360dcfeac129ff4923215fd65904\jopt-simple-4.9.jar;C:\Users\SYJ
\.gradle\caches\modules-2\files-2.1\jline\jline\0.9.94\99a18e9a44834afdebc467294e1138364c207402\jline-0.9.94.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\io.netty\netty\3.7.0.Final\7a8c35599c68c0bf383df74469aa3e03d9aca87\netty-3.7.0.Final.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\junit\junit\3.8.1\99129f16442844f6a4a11ae22fbbee40b14d774f\junit-3.8.1.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.apache.kafka\kafka-clients\0.10.1.1\52f03b809c26f9676ddfcf130f13c80dfc929b98\kafka-clients-0.10.1.1.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\net.jpountz.lz4\lz4\1.3.0\c708bb2590c0652a642236ef45d9f99ff842a2ce\lz4-1.3.0.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.xerial.snappy\snappy-java\1.1.2.6\48d92871ca286a47f230feb375f0bbffa83b85f6\snappy-java-1.1.2.6.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\commons-logging\commons-logging\1.2\4bfc12adfe4842bf07b657f0369c4cb522955686\commons-logging-1.2.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\com.goldmansachs\gs-collections\5.1.0\7114c5349c816ea645b1ea3ffcc21fa073cbabc\gs-collections-5.1.0.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.slf4j\slf4j-log4j12\1.7.21\7238b064d1aba20da2ac03217d700d91e02460fa\slf4j-log4j12-1.7.21.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.slf4j\slf4j-api\1.7.21\139535a69a4239db087de9bab0bee568bf8e0b70\slf4j-api-1.7.21.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\log4j\log4j\1.2.17\5af35056b4d257e4b64b9e8069c0746e8b08629f\log4j-1.2.17.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.springframework\spring-beans\4.3.5.RELEASE\e12bbc3277da28e2e2608a187f83091dc6c300bf\spring-beans-4.3.5.RELEASE.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.springframework\spring-core\4.3.5.RELEASE\80299e3f80e8c6d5c076db2ba6adf44a4b52f578\spring-core-4.3.5.RELEASE.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.springframework\spring-context\4.3.5.RELEASE\ca3391c0e17d0138335ba51b51371661d20d56a8\spring-context
-4.3.5.RELEASE.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\com.goldmansachs\gs-collections-api\5.1.0\ea605cdf64cab5fc7b48c99f061d4c8db05b6ff1\gs-collections-api-5.1.0.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.springframework\spring-aop\4.3.5.RELEASE\4f113218af716bd8d174c411f19b26418b5a70f6\spring-aop-4.3.5.RELEASE.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.springframework\spring-expression\4.3.5.RELEASE\3689dc6c5b942ecde4122eac889ed87977d6f287\spring-expression-4.3.5.RELEASE.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.springframework\spring-webmvc\4.3.5.RELEASE\c624659217edab07d8279fb0f90462136f089220\spring-webmvc-4.3.5.RELEASE.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.springframework\spring-jdbc\4.3.5.RELEASE\7c09e38b6f6e0b178973dea06bb8fdc6d19aa596\spring-jdbc-4.3.5.RELEASE.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.springframework\spring-aspects\4.3.5.RELEASE\8c00c15865a1da0a1ba143a50622a8436a56e097\spring-aspects-4.3.5.RELEASE.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.springframework\spring-web\4.3.5.RELEASE\6641daccf2fddafc8358144f3a4f999130fdf144\spring-web-4.3.5.RELEASE.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.springframework\spring-tx\4.3.5.RELEASE\296d8ae21fadc0f115dae7d1e8d5c4f8c7de2c3e\spring-tx-4.3.5.RELEASE.jar;C:\Users\SYJ\.gradle\caches\modules-2\files-2.1\org.aspectj\aspectjweaver\1.8.9\db28774f477f07220eac18d5ec9c4e01f48589d7\aspectjweaver-1.8.9.jar;D:\java\IDEA-14.1.4\lib\idea_rt.jar com.intellij.rt.execution.application.AppMain com.zhaopin.AppMain
[17/03/24 20:46:36:702][org.springframework.context.support.AbstractApplicationContext-prepareRefresh] Refreshing org.springframework.context.support.ClassPathXmlApplicationContext@6bc168e5: startup date [Fri Mar 24 20:46:36 CST 2017]; root of context hierarchy
[17/03/24 20:46:36:971][org.springframework.beans.factory.xml.XmlBeanDefinitionReader-loadBeanDefinitions] Loading XML bean definitions from file [D:\zp\git\zp-kafka\zp-consumer-friend\build\resources\main\spring\applicationContext-consumer.xml]
[17/03/24 20:46:37:472][org.springframework.beans.factory.xml.XmlBeanDefinitionReader-loadBeanDefinitions] Loading XML bean definitions from file [D:\zp\git\zp-kafka\zp-consumer-friend\build\resources\main\spring\applicationContext-dao.xml]
[17/03/24 20:46:37:871][org.springframework.beans.factory.xml.XmlBeanDefinitionReader-loadBeanDefinitions] Loading XML bean definitions from file [D:\zp\git\zp-kafka\zp-consumer-friend\build\resources\main\spring\applicationContext-service.xml]
[17/03/24 20:46:39:580][org.springframework.core.io.support.PropertiesLoaderSupport-loadProperties] Loading properties file from file [D:\zp\git\zp-kafka\zp-consumer-friend\build\resources\main\resource\db.properties]
[17/03/24 20:46:39:580][org.springframework.core.io.support.PropertiesLoaderSupport-loadProperties] Loading properties file from file [D:\zp\git\zp-kafka\zp-consumer-friend\build\resources\main\resource\resource.properties]
[17/03/24 20:46:41:058][org.apache.kafka.common.config.AbstractConfig-logAll] ConsumerConfig values:
auto.commit.interval.ms = 1000
auto.offset.reset = latest
bootstrap.servers = [192.168.71.11:9092, 192.168.71.12:9092, 192.168.71.13:9092]
check.crcs = true
client.id =
connections.max.idle.ms = 540000
enable.auto.commit = true
exclude.internal.topics = true
fetch.max.bytes = 52428800
fetch.max.wait.ms = 500
fetch.min.bytes = 1
group.id = friend-group
heartbeat.interval.ms = 3000
interceptor.classes = null
key.deserializer = class org.apache.kafka.common.serialization.StringDeserializer
max.partition.fetch.bytes = 1048576
max.poll.interval.ms = 300000
max.poll.records = 1
metadata.max.age.ms = 300000
metric.reporters = []
metrics.num.samples = 2
metrics.sample.window.ms = 30000
partition.assignment.strategy = [class org.apache.kafka.clients.consumer.RangeAssignor]
receive.buffer.bytes = 65536
reconnect.backoff.ms = 50
request.timeout.ms = 305000
retry.backoff.ms = 100
sasl.kerberos.kinit.cmd = /usr/bin/kinit
sasl.kerberos.min.time.before.relogin = 60000
sasl.kerberos.service.name = null
sasl.kerberos.ticket.renew.jitter = 0.05
sasl.kerberos.ticket.renew.window.factor = 0.8
sasl.mechanism = GSSAPI
security.protocol = PLAINTEXT
send.buffer.bytes = 131072
session.timeout.ms = 15000
ssl.cipher.suites = null
ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1]
ssl.endpoint.identification.algorithm = null
ssl.key.password = null
ssl.keymanager.algorithm = SunX509
ssl.keystore.location = null
ssl.keystore.password = null
ssl.keystore.type = JKS
ssl.protocol = TLS
ssl.provider = null
ssl.secure.random.implementation = null
ssl.trustmanager.algorithm = PKIX
ssl.truststore.location = null
ssl.truststore.password = null
ssl.truststore.type = JKS
value.deserializer = class org.apache.kafka.common.serialization.StringDeserializer
[17/03/24 20:46:41:066][org.apache.kafka.common.config.AbstractConfig-logAll] ConsumerConfig values:
auto.commit.interval.ms = 1000
auto.offset.reset = latest
bootstrap.servers = [192.168.71.11:9092, 192.168.71.12:9092, 192.168.71.13:9092]
check.crcs = true
client.id = consumer-1
connections.max.idle.ms = 540000
enable.auto.commit = true
exclude.internal.topics = true
fetch.max.bytes = 52428800
fetch.max.wait.ms = 500
fetch.min.bytes = 1
group.id = friend-group
heartbeat.interval.ms = 3000
interceptor.classes = null
key.deserializer = class org.apache.kafka.common.serialization.StringDeserializer
max.partition.fetch.bytes = 1048576
max.poll.interval.ms = 300000
max.poll.records = 1
metadata.max.age.ms = 300000
metric.reporters = []
metrics.num.samples = 2
metrics.sample.window.ms = 30000
partition.assignment.strategy = [class org.apache.kafka.clients.consumer.RangeAssignor]
receive.buffer.bytes = 65536
reconnect.backoff.ms = 50
request.timeout.ms = 305000
retry.backoff.ms = 100
sasl.kerberos.kinit.cmd = /usr/bin/kinit
sasl.kerberos.min.time.before.relogin = 60000
sasl.kerberos.service.name = null
sasl.kerberos.ticket.renew.jitter = 0.05
sasl.kerberos.ticket.renew.window.factor = 0.8
sasl.mechanism = GSSAPI
security.protocol = PLAINTEXT
send.buffer.bytes = 131072
session.timeout.ms = 15000
ssl.cipher.suites = null
ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1]
ssl.endpoint.identification.algorithm = null
ssl.key.password = null
ssl.keymanager.algorithm = SunX509
ssl.keystore.location = null
ssl.keystore.password = null
ssl.keystore.type = JKS
ssl.protocol = TLS
ssl.provider = null
ssl.secure.random.implementation = null
ssl.trustmanager.algorithm = PKIX
ssl.truststore.location = null
ssl.truststore.password = null
ssl.truststore.type = JKS
value.deserializer = class org.apache.kafka.common.serialization.StringDeserializer
[17/03/24 20:46:41:480][org.apache.kafka.common.utils.AppInfoParser$AppInfo-<init>] Kafka version : 0.10.1.1
[17/03/24 20:46:41:480][org.apache.kafka.common.utils.AppInfoParser$AppInfo-<init>] Kafka commitId : f10ef2720b03b247
[17/03/24 20:46:41:625][org.springframework.context.support.DefaultLifecycleProcessor$LifecycleGroup-start] Starting beans in phase 0
[17/03/24 20:46:41:630][org.springframework.context.support.DefaultLifecycleProcessor$LifecycleGroup-start] Starting beans in phase 0
[17/03/24 20:46:58:908][org.apache.kafka.clients.consumer.internals.AbstractCoordinator$GroupCoordinatorResponseHandler-onSuccess] Discovered coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) for group friend-group.
[17/03/24 20:46:58:911][org.apache.kafka.clients.consumer.internals.ConsumerCoordinator-onJoinPrepare] Revoking previously assigned partitions [] for group friend-group
[17/03/24 20:46:58:912][org.springframework.kafka.listener.AbstractMessageListenerContainer$2-onPartitionsRevoked] partitions revoked:[]
[17/03/24 20:46:58:912][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-sendJoinGroupRequest] (Re-)joining group friend-group
[17/03/24 20:46:59:240][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-coordinatorDead] Marking the coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) dead for group friend-group
[17/03/24 20:46:59:558][org.apache.kafka.clients.consumer.internals.AbstractCoordinator$GroupCoordinatorResponseHandler-onSuccess] Discovered coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) for group friend-group.
[17/03/24 20:46:59:559][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-sendJoinGroupRequest] (Re-)joining group friend-group
[17/03/24 20:46:59:566][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-coordinatorDead] Marking the coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) dead for group friend-group
[17/03/24 20:46:59:688][org.apache.kafka.clients.consumer.internals.AbstractCoordinator$GroupCoordinatorResponseHandler-onSuccess] Discovered coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) for group friend-group.
[17/03/24 20:46:59:689][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-sendJoinGroupRequest] (Re-)joining group friend-group
[17/03/24 20:46:59:692][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-coordinatorDead] Marking the coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) dead for group friend-group
[17/03/24 20:46:59:807][org.apache.kafka.clients.consumer.internals.AbstractCoordinator$GroupCoordinatorResponseHandler-onSuccess] Discovered coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) for group friend-group.
[17/03/24 20:46:59:808][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-sendJoinGroupRequest] (Re-)joining group friend-group
[17/03/24 20:46:59:814][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-coordinatorDead] Marking the coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) dead for group friend-group
[17/03/24 20:46:59:941][org.apache.kafka.clients.consumer.internals.AbstractCoordinator$GroupCoordinatorResponseHandler-onSuccess] Discovered coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) for group friend-group.
[17/03/24 20:46:59:942][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-sendJoinGroupRequest] (Re-)joining group friend-group
[17/03/24 20:47:00:520][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-coordinatorDead] Marking the coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) dead for group friend-group
[17/03/24 20:47:00:828][org.apache.kafka.clients.consumer.internals.AbstractCoordinator$GroupCoordinatorResponseHandler-onSuccess] Discovered coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) for group friend-group.
[17/03/24 20:47:00:829][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-sendJoinGroupRequest] (Re-)joining group friend-group
[17/03/24 20:47:00:841][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-coordinatorDead] Marking the coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) dead for group friend-group
[17/03/24 20:47:01:182][org.apache.kafka.clients.consumer.internals.AbstractCoordinator$GroupCoordinatorResponseHandler-onSuccess] Discovered coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) for group friend-group.
[17/03/24 20:47:01:183][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-sendJoinGroupRequest] (Re-)joining group friend-group
[17/03/24 20:47:02:911][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-coordinatorDead] Marking the coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) dead for group friend-group
[17/03/24 20:47:03:352][org.apache.kafka.clients.consumer.internals.AbstractCoordinator$GroupCoordinatorResponseHandler-onSuccess] Discovered coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) for group friend-group.
[17/03/24 20:47:03:354][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-sendJoinGroupRequest] (Re-)joining group friend-group
[17/03/24 20:47:03:946][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-coordinatorDead] Marking the coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) dead for group friend-group
[17/03/24 20:47:04:394][org.apache.kafka.clients.consumer.internals.AbstractCoordinator$GroupCoordinatorResponseHandler-onSuccess] Discovered coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) for group friend-group.
[17/03/24 20:47:04:398][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-sendJoinGroupRequest] (Re-)joining group friend-group
[17/03/24 20:47:04:450][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-coordinatorDead] Marking the coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) dead for group friend-group
[17/03/24 20:47:04:676][org.apache.kafka.clients.consumer.internals.AbstractCoordinator$GroupCoordinatorResponseHandler-onSuccess] Discovered coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) for group friend-group.
[17/03/24 20:47:04:678][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-sendJoinGroupRequest] (Re-)joining group friend-group
[17/03/24 20:47:04:687][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-coordinatorDead] Marking the coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) dead for group friend-group
[17/03/24 20:47:05:307][org.apache.kafka.clients.consumer.internals.AbstractCoordinator$GroupCoordinatorResponseHandler-onSuccess] Discovered coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) for group friend-group.
[17/03/24 20:47:05:308][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-sendJoinGroupRequest] (Re-)joining group friend-group
[17/03/24 20:47:05:655][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-coordinatorDead] Marking the coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) dead for group friend-group
[17/03/24 20:47:06:182][org.apache.kafka.clients.consumer.internals.AbstractCoordinator$GroupCoordinatorResponseHandler-onSuccess] Discovered coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) for group friend-group.
[17/03/24 20:47:06:183][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-sendJoinGroupRequest] (Re-)joining group friend-group
[17/03/24 20:47:06:190][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-coordinatorDead] Marking the coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) dead for group friend-group
[17/03/24 20:47:08:076][org.apache.kafka.clients.consumer.internals.AbstractCoordinator$GroupCoordinatorResponseHandler-onSuccess] Discovered coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) for group friend-group.
[17/03/24 20:47:08:077][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-sendJoinGroupRequest] (Re-)joining group friend-group
[17/03/24 20:47:11:343][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-coordinatorDead] Marking the coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) dead for group friend-group
[17/03/24 20:47:11:514][org.apache.kafka.clients.consumer.internals.AbstractCoordinator$GroupCoordinatorResponseHandler-onSuccess] Discovered coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) for group friend-group.
[17/03/24 20:47:11:516][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-sendJoinGroupRequest] (Re-)joining group friend-group
[17/03/24 20:47:11:548][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-coordinatorDead] Marking the coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) dead for group friend-group
[17/03/24 20:47:11:698][org.apache.kafka.clients.consumer.internals.AbstractCoordinator$GroupCoordinatorResponseHandler-onSuccess] Discovered coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) for group friend-group.
[17/03/24 20:47:11:700][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-sendJoinGroupRequest] (Re-)joining group friend-group
[17/03/24 20:47:11:714][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-coordinatorDead] Marking the coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) dead for group friend-group
[17/03/24 20:47:13:814][org.apache.kafka.clients.consumer.internals.AbstractCoordinator$GroupCoordinatorResponseHandler-onSuccess] Discovered coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) for group friend-group.
[17/03/24 20:47:13:816][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-sendJoinGroupRequest] (Re-)joining group friend-group
[17/03/24 20:47:17:025][org.apache.kafka.clients.consumer.internals.AbstractCoordinator$1-onSuccess] Successfully joined group friend-group with generation 1
[17/03/24 20:47:17:026][org.apache.kafka.clients.consumer.internals.ConsumerCoordinator-onJoinComplete] Setting newly assigned partitions [] for group friend-group
[17/03/24 20:47:17:027][org.springframework.kafka.listener.AbstractMessageListenerContainer$2-onPartitionsAssigned] partitions assigned:[]
[17/03/24 20:47:23:055][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-coordinatorDead] Marking the coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) dead for group friend-group
[17/03/24 20:47:23:237][org.apache.kafka.clients.consumer.internals.AbstractCoordinator$GroupCoordinatorResponseHandler-onSuccess] Discovered coordinator 192.168.71.12:9092 (id: 2147483646 rack: null) for group friend-group.
[17/03/24 20:47:35:384][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-coordinatorDead] Marking the coordinator 192.168.71.12:9092 (id: 2147483646 rack: null) dead for group friend-group
[17/03/24 20:47:35:407][org.apache.kafka.clients.consumer.internals.AbstractCoordinator$GroupCoordinatorResponseHandler-onSuccess] Discovered coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) for group friend-group.
[17/03/24 20:47:39:366][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-coordinatorDead] Marking the coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) dead for group friend-group
[17/03/24 20:47:39:408][org.apache.kafka.clients.consumer.internals.AbstractCoordinator$GroupCoordinatorResponseHandler-onSuccess] Discovered coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) for group friend-group.
[17/03/24 20:55:43:272][org.apache.kafka.clients.consumer.internals.ConsumerCoordinator-onJoinPrepare] Revoking previously assigned partitions [] for group friend-group
==== After the producer sent the first message, the consumer logged the following ====
[17/03/24 20:55:43:273][org.springframework.kafka.listener.AbstractMessageListenerContainer$2-onPartitionsRevoked] partitions revoked:[]
[17/03/24 20:55:43:273][org.apache.kafka.clients.consumer.internals.AbstractCoordinator-sendJoinGroupRequest] (Re-)joining group friend-group
[17/03/24 20:55:43:420][org.apache.kafka.clients.consumer.internals.AbstractCoordinator$1-onSuccess] Successfully joined group friend-group with generation 2
[17/03/24 20:55:43:424][org.apache.kafka.clients.consumer.internals.ConsumerCoordinator-onJoinComplete] Setting newly assigned partitions [friend-1, friend-0, friend-2] for group friend-group
[17/03/24 20:55:43:425][org.springframework.kafka.listener.AbstractMessageListenerContainer$2-onPartitionsAssigned] partitions assigned:[friend-1, friend-0, friend-2]
==== After the producer sent the second message, the consumer logged the following ====
[17/03/24 21:05:05:408][com.zhaopin.consumer.ConsumerService-onMessage] ====onMessage====ConsumerRecord(topic = friend, partition = 0, offset = 0, CreateTime = 1490360705152, checksum = 2832597311, serialized key size = 48, serialized value size = 172, key = 5f974fa3-676c-4474-a260-b4417c36bd34||zhaopin123, value = {"data":{"friends":[{"friendName":"55445","friendPhone":"55445"},{"friendName":"55445","friendPhone":"55445"}],"userId":55555,"userPhone":"55445"},"requestId":"zhaopin123"})
[17/03/24 21:05:06:137][com.alibaba.druid.pool.DruidDataSource-validationQueryCheck] testWhileIdle is true, validationQuery not set
[17/03/24 21:05:06:181][com.alibaba.druid.pool.DruidDataSource-init] {dataSource-1} inited
==== Two rows were added to the friend table in the database ====
287835 55555 55445 1 0 55445 0 55445
287836 55555 55445 1 0 55445 0 55445
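
A side note on the odd-looking coordinator ids in the log above (2147483647 and 2147483646). As far as I can tell from the Kafka Java client source, the client labels its coordinator connection with Integer.MAX_VALUE minus the broker id, so these map back to broker.id=0 (192.168.71.11) and broker.id=1 (192.168.71.12). The small Python sketch below decodes them from log text. The helper names `broker_id` and `coordinators` and the regular expression are my own, written only for illustration; they are not part of Kafka.

```python
import re

INT_MAX = 2**31 - 1  # Java's Integer.MAX_VALUE

# Matches the coordinator description printed by the Kafka client, e.g.
# "coordinator 192.168.71.11:9092 (id: 2147483647 rack: null)"
COORDINATOR = re.compile(r"coordinator (\S+):(\d+) \(id: (\d+) rack: \S+\)")


def broker_id(coordinator_id: int) -> int:
    """Recover the broker id, assuming the client used MAX_VALUE - broker id."""
    return INT_MAX - coordinator_id


def coordinators(log_text: str) -> dict:
    """Map each host:port seen as coordinator to its decoded broker id."""
    found = {}
    for host, port, cid in COORDINATOR.findall(log_text):
        found[f"{host}:{port}"] = broker_id(int(cid))
    return found


# Three entries copied from the consumer log above.
sample = (
    "Discovered coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) for group friend-group. "
    "Marking the coordinator 192.168.71.11:9092 (id: 2147483647 rack: null) dead for group friend-group "
    "Discovered coordinator 192.168.71.12:9092 (id: 2147483646 rack: null) for group friend-group."
)

print(coordinators(sample))
```

Running this over the full consumer log is a quick way to see which brokers took over as group coordinator while the other brokers were down.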