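HBase clusters in E-MapReduce versions earlier than emr-2.0.0 do not include Phoenix and do not start YARN. This section describes how to deploy Phoenix on such a cluster by adding bootstrap actions when the cluster is created.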

- HBase+Phoenix deployment

- HBase+YARN+Phoenix deployment

HBase+Phoenix deployment

1. Bootstrap action shell script (phoenix_bootstrap.sh)

Input parameters:

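| Parameter | Value | Description |
|---|---|---|
| regionId | cn-hangzhou/cn-beijing | ID of the region in which the cluster is created (default: cn-hangzhou) |
| isVpc | 0/1 | Whether the cluster is a VPC cluster: 1 = VPC, 0 = classic network (default: 0) |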
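The two parameters only decide which OSS endpoint the Phoenix package is downloaded from. The sketch below restates the URL selection from the script as a standalone function. It assumes the same bucket names and URL patterns. The function name `phoenix_pack_url` is hypothetical and does not appear in the original script.

```shell
#!/bin/bash
# Hypothetical helper that mirrors the URL selection in phoenix_bootstrap.sh.
# $1 = regionId (default cn-hangzhou), $2 = isVpc (default 0)
phoenix_pack_url() {
  local region="${1:-cn-hangzhou}"
  local vpc="${2:-0}"
  local bucket
  case "$region" in
    cn-hangzhou) bucket="emr-agent-pack" ;;
    cn-beijing)  bucket="emr-bj" ;;
    *) echo "unsupported region: $region" >&2; return 1 ;;
  esac
  local pkg="phoenix/phoenix-4.7.0-HBase-1.1-bin.tar.gz"
  if [ "$vpc" -eq 1 ]; then
    # VPC clusters use the vpc100 OSS endpoint
    echo "http://$bucket.vpc100-oss-$region.aliyuncs.com/$pkg"
  else
    # Classic-network clusters use the internal OSS endpoint
    echo "http://$bucket.oss-$region-internal.aliyuncs.com/$pkg"
  fi
}

phoenix_pack_url                 # defaults: cn-hangzhou, classic network
phoenix_pack_url cn-beijing 1    # Beijing, VPC
```

Any other region makes the function fail instead of producing a URL with an empty bucket name.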

```shell
#!/bin/bash
# Bootstrap action: install Phoenix 4.7.0 on every node of the HBase cluster.
# Usage: phoenix_bootstrap.sh [regionId] [isVpc]

regionId="$1"
isVpc="$2"

echo "$regionId"
echo "$isVpc"

# Defaults when the optional parameters are omitted
if [ -z "$regionId" ]; then
  regionId="cn-hangzhou"
fi

if [ -z "$isVpc" ]; then
  isVpc=0
fi

# Non-empty only on the master node (emr-header-1)
isMaster=$(hostname --fqdn | grep emr-header-1)
masterIp=$(grep emr-header-1 /etc/hosts | awk '{print $1}')

# OSS bucket that hosts the Phoenix package in each region
bucket=""
if [[ $regionId == "cn-hangzhou" ]]; then
  bucket="emr-agent-pack"
elif [[ $regionId == "cn-beijing" ]]; then
  bucket="emr-bj"
else
  echo "Unsupported regionId: $regionId" >&2
  exit 1
fi

# VPC clusters use the vpc100 endpoint, classic clusters the internal endpoint
if [[ "$isVpc" -eq 1 ]]; then
  phoenixpackUrl="http://$bucket.vpc100-oss-$regionId.aliyuncs.com/phoenix/phoenix-4.7.0-HBase-1.1-bin.tar.gz"
else
  phoenixpackUrl="http://$bucket.oss-$regionId-internal.aliyuncs.com/phoenix/phoenix-4.7.0-HBase-1.1-bin.tar.gz"
fi

# Download and unpack Phoenix, then put the server jar on the HBase classpath
cd /opt/apps || exit 1
wget "$phoenixpackUrl" || exit 1
tar xvf phoenix-4.7.0-HBase-1.1-bin.tar.gz
rm -rf /opt/apps/phoenix-4.7.0-HBase-1.1-bin.tar.gz
chown -R hadoop:hadoop /opt/apps/phoenix-4.7.0-HBase-1.1-bin
cp phoenix-4.7.0-HBase-1.1-bin/phoenix-4.7.0-HBase-1.1-server.jar /opt/apps/hbase-1.1.1/lib/
chown hadoop:hadoop /opt/apps/hbase-1.1.1/lib/phoenix-4.7.0-HBase-1.1-server.jar

if [[ -n "$isMaster" ]]; then
  echo "$masterIp emr-cluster" >>/etc/hosts
  # Master: index load balancer and index master observer
  sed -i '$i<property>\n<name>hbase.master.loadbalancer.class</name>\n<value>org.apache.phoenix.hbase.index.balancer.IndexLoadBalancer</value>\n</property>\n<!-- Phoenix custom index observer -->\n<property>\n<name>hbase.coprocessor.master.classes</name>\n<value>org.apache.phoenix.hbase.index.master.IndexMasterObserver</value>\n</property>' /etc/emr/hbase-conf/hbase-site.xml
else
  # RegionServers: indexed WAL codec, Phoenix RPC scheduler/controller and local index merger
  sed -i '$i<!-- Enables custom WAL edits to be written, ensuring proper writing/replay of the index updates. This codec supports the usual host of WALEdit options, most notably WALEdit compression. -->\n<property>\n<name>hbase.regionserver.wal.codec</name>\n<value>org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec</value>\n</property>\n<!-- Prevent deadlocks from occurring during index maintenance for global indexes (HBase 0.98.4+ and Phoenix 4.3.1+ only) by ensuring index updates are processed with a higher priority than data updates. It also prevents deadlocks by ensuring metadata rpc calls are processed with a higher priority than data rpc calls -->\n<property>\n<name>hbase.region.server.rpc.scheduler.factory.class</name>\n<value>org.apache.hadoop.hbase.ipc.PhoenixRpcSchedulerFactory</value>\n<description>Factory to create the Phoenix RPC Scheduler that uses separate queues for index and metadata updates</description>\n</property>\n<property>\n<name>hbase.rpc.controllerfactory.class</name>\n<value>org.apache.hadoop.hbase.ipc.controller.ServerRpcControllerFactory</value>\n<description>Factory to create the Phoenix RPC Scheduler that uses separate queues for index and metadata updates</description>\n</property>\n<!-- To support local index regions merge on data regions merge you will need to add the following parameter to hbase-site.xml in all the region servers and restart. (It’s applicable for Phoenix 4.3+ versions) -->\n<property>\n<name>hbase.coprocessor.regionserver.classes</name>\n<value>org.apache.hadoop.hbase.regionserver.LocalIndexMerger</value>\n</property>' /etc/emr/hbase-conf/hbase-site.xml
fi

# Send Phoenix tracing metrics to the Phoenix metrics sink
sed -i '$a# ensure that we receive traces on the server\nhbase.sink.tracing.class=org.apache.phoenix.trace.PhoenixMetricsSink\n# Tell the sink where to write the metrics\nhbase.sink.tracing.writer-class=org.apache.phoenix.trace.PhoenixTableMetricsWriter\n# Only handle traces with a context of "tracing"\nhbase.sink.tracing.context=tracing' /etc/emr/hbase-conf/hadoop-metrics2-hbase.properties

# Put the Phoenix tools on PATH and the Phoenix jars on HADOOP_CLASSPATH
echo "export PATH=/opt/apps/phoenix-4.7.0-HBase-1.1-bin/bin:\$PATH" >>/etc/profile.d/hadoop.sh
echo "export HADOOP_CLASSPATH=/opt/apps/phoenix-4.7.0-HBase-1.1-bin/*:\$HADOOP_CLASSPATH" >>/etc/profile.d/hadoop.sh
```
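Both `sed -i '$i...'` calls assume that `</configuration>` is the last line of hbase-site.xml. `$` addresses the last line and `i` inserts the text before it, so the new `<property>` elements end up inside `<configuration>`. The sketch below reproduces this on a throwaway file. It assumes GNU sed, which turns `\n` in the inserted text into line breaks. The two-line scratch file is a hypothetical stand-in for the real configuration.

```shell
#!/bin/bash
# Scratch file standing in for /etc/emr/hbase-conf/hbase-site.xml (assumes GNU sed)
conf=$(mktemp)
trap 'rm -f "$conf"' EXIT   # clean up when the shell exits
printf '<configuration>\n</configuration>\n' >"$conf"

# Same pattern as the bootstrap script: insert the property before the last line
sed -i '$i<property>\n<name>hbase.coprocessor.master.classes</name>\n<value>org.apache.phoenix.hbase.index.master.IndexMasterObserver</value>\n</property>' "$conf"

cat "$conf"
```

If the real file has trailing content after `</configuration>`, such as a blank line, the properties would be inserted in the wrong place. Check that before running the bootstrap action on a customized image.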

2. Store phoenix_bootstrap.sh in OSS

Upload the phoenix_bootstrap.sh script from step 1 to OSS. You will select it from OSS when you create the cluster.

3. Create the HBase cluster (with a bootstrap action)

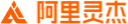

在E-MapReduce中创建HBase集群,在创建集群的基本信息页面,点击添加引导操作,选择2中OSS的phoenix_bootstrap.sh脚本,并且根据需求填写可选参数(即1中介绍的脚本入参),如下图所示(在杭州region创建classic集群)

4. Verification

After the cluster has been created and its status shows Idle, log on to the master node of the cluster to verify the deployment.
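Before running the examples, you may want to confirm that the bootstrap action actually ran on a node. The sketch below is one way to check, assuming the paths used in phoenix_bootstrap.sh. `check_phoenix_install` is a hypothetical helper that takes the HBase lib directory and the hbase-site.xml path as arguments, so you can also try it on a copy of the files.

```shell
#!/bin/bash
# Hypothetical helper: succeeds if the Phoenix server jar is in the HBase lib
# directory and hbase-site.xml contains at least one Phoenix-related class.
check_phoenix_install() {
  local lib_dir="$1" site_xml="$2"
  local jar="$lib_dir/phoenix-4.7.0-HBase-1.1-server.jar"
  if [ ! -f "$jar" ]; then
    echo "missing: $jar" >&2
    return 1
  fi
  # Master nodes get org.apache.phoenix.* classes, RegionServers get IndexedWALEditCodec
  if ! grep -qE 'org\.apache\.phoenix|IndexedWALEditCodec' "$site_xml"; then
    echo "no Phoenix properties in $site_xml" >&2
    return 1
  fi
  echo "Phoenix install looks OK"
}

# On a cluster node (paths taken from phoenix_bootstrap.sh):
# check_phoenix_install /opt/apps/hbase-1.1.1/lib /etc/emr/hbase-conf/hbase-site.xml
```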

1) Example 1

Run:

```shell
sudo su hadoop
performance.py localhost 1000
```

Output (excerpt):

```
CSV Upsert complete. 1000 rows upserted
Time: 1.298 sec(s)

COUNT(1)
----------------------------------------
2000
Time: 0.437 sec(s)

HO
--
CS
EU
NA
Time: 0.2 sec(s)

DOMAIN
----------------------------------------
Apple.com
Google.com
Salesforce.com
Time: 0.079 sec(s)

DAY
-----------------------
2016-06-13 00:00:00.000
Time: 0.076 sec(s)

COUNT(1)
----------------------------------------
45
Time: 0.068 sec(s)
```

2) Example 2

Run:

```
sudo su hadoop
cd ~
echo '100,Jack,Doe' >>example.csv
echo '200,Tony,Poppins' >>example.csv
sqlline.py localhost
0: jdbc:phoenix:localhost> CREATE TABLE example (
    my_pk bigint not null,
    m.first_name varchar(50),
    m.last_name varchar(50)
    CONSTRAINT pk PRIMARY KEY (my_pk));
0: jdbc:phoenix:localhost> !quit
psql.py -t EXAMPLE localhost example.csv
```
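Without a header option, psql.py maps CSV fields to the table's columns by position (MY_PK, FIRST_NAME, LAST_NAME). Each line therefore needs a numeric key followed by two names. If you want to try the load with more than two rows, a sketch like the following can generate the file. `gen_example_csv` and the generated names are made up for illustration.

```shell
#!/bin/bash
# Hypothetical helper: write N rows of "pk,first_name,last_name" to a CSV file.
# Unlike the echo >> commands above, it truncates the file first.
gen_example_csv() {
  local rows="$1" out="$2"
  : >"$out"
  local i
  for ((i = 1; i <= rows; i++)); do
    echo "$((i * 100)),First$i,Last$i" >>"$out"
  done
}

# Usage on the master node (as the hadoop user):
# gen_example_csv 1000 example.csv
# psql.py -t EXAMPLE localhost example.csv
```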

Verify:

```
sqlline.py localhost
0: jdbc:phoenix:localhost> select * from example;
+--------+-------------+------------+
| MY_PK  | FIRST_NAME  | LAST_NAME  |
+--------+-------------+------------+
| 100    | Jack        | Doe        |
| 200    | Tony        | Poppins    |
+--------+-------------+------------+
```

HBase+YARN+Phoenix deployment

Phoenix can also be used with MapReduce, which requires YARN to be deployed as well.

1. Bootstrap action shell script (yarn_bootstrap.sh)

HBase clusters in E-MapReduce currently do not start YARN. You can start it with a bootstrap action that runs the following script:

```shell
#!/bin/bash
# Bootstrap action: start YARN (ResourceManager on the master, NodeManagers on the workers).

HADOOP_SOFT_LINK_CONF_DIR="/etc/emr/hadoop-conf"
HDFS_HBASE_CONF_DIR="/etc/emr/hadoop-conf-2.6.0.hbase"

# Point the default Hadoop configuration directory at the HBase cluster's configuration
if test -d "${HADOOP_SOFT_LINK_CONF_DIR}"; then
  rm -rf "${HADOOP_SOFT_LINK_CONF_DIR}"
fi
ln -s "${HDFS_HBASE_CONF_DIR}" "${HADOOP_SOFT_LINK_CONF_DIR}"

# Non-empty only on the master node (emr-header-1)
isMaster=$(hostname --fqdn | grep emr-header-1)
masterIp=$(grep emr-header-1 /etc/hosts | awk '{print $1}')
headerecsid=$(grep 'emr-header-1' /etc/hosts | awk '{print $4}')

# Register the master host name in core-site.xml
sed -i "/<configuration>/a <property>\n<name>master_hostname</name>\n<value>$headerecsid</value>\n</property>" "$HDFS_HBASE_CONF_DIR/core-site.xml"

# Fill in the YARN resource placeholders
sed -i 's/#yarn_nodemanager_resource_cpu_vcores#/6/g' "$HDFS_HBASE_CONF_DIR/yarn-site.xml"
sed -i 's/#yarn_nodemanager_resource_memory_mb#/13107/g' "$HDFS_HBASE_CONF_DIR/yarn-site.xml"
sed -i 's/#yarn_scheduler_minimum_allocation_mb#/2184/g' "$HDFS_HBASE_CONF_DIR/yarn-site.xml"
# Append the Phoenix directory after the extra-jars classpath entry
sed -i 's/\/opt\/apps\/extra-jars\/*/\/opt\/apps\/extra-jars\/*,\n\/opt\/apps\/phoenix-4.7.0-HBase-1.1-bin\//g' "$HDFS_HBASE_CONF_DIR/yarn-site.xml"

# MapReduce container memory and JVM heap sizes
sed -i 's/#mapreduce_reduce_java_opts#/-Xmx2184m/g' "$HDFS_HBASE_CONF_DIR/mapred-site.xml"
sed -i 's/#mapreduce_map_memory_mb#/3208/g' "$HDFS_HBASE_CONF_DIR/mapred-site.xml"
sed -i 's/#mapreduce_reduce_memory_mb#/3208/g' "$HDFS_HBASE_CONF_DIR/mapred-site.xml"
sed -i 's/#mapreduce_map_java_opts#/-Xmx2184m/g' "$HDFS_HBASE_CONF_DIR/mapred-site.xml"

if [[ -n "$isMaster" ]]; then
  # Master: local directories on disk1 only, runs the ResourceManager
  sed -i 's/#loop#{disk_dirs}\/mapred#,#/file:\/\/\/mnt\/disk1\/mapred/g' "$HDFS_HBASE_CONF_DIR/mapred-site.xml"
  sed -i 's/#loop#{disk_dirs}\/yarn#,#/file:\/\/\/mnt\/disk1\/yarn/g' "$HDFS_HBASE_CONF_DIR/yarn-site.xml"
  sed -i 's/#loop#{disk_dirs}\/log\/hadoop-yarn\/containers#,#/file:\/\/\/mnt\/disk1\/log\/hadoop-yarn\/containers/g' "$HDFS_HBASE_CONF_DIR/yarn-site.xml"
  chmod 777 /mnt/disk1/log/
  su -l hadoop -c '/opt/apps/hadoop-2.6.0/sbin/yarn-daemon.sh start resourcemanager'
else
  # Workers: local directories on four data disks, run a NodeManager
  sed -i 's/#loop#{disk_dirs}\/mapred#,#/file:\/\/\/mnt\/disk4\/mapred,file:\/\/\/mnt\/disk1\/mapred,file:\/\/\/mnt\/disk3\/mapred,file:\/\/\/mnt\/disk2\/mapred/g' "$HDFS_HBASE_CONF_DIR/mapred-site.xml"
  sed -i 's/#loop#{disk_dirs}\/yarn#,#/file:\/\/\/mnt\/disk4\/yarn,file:\/\/\/mnt\/disk1\/yarn,file:\/\/\/mnt\/disk3\/yarn,file:\/\/\/mnt\/disk2\/yarn/g' "$HDFS_HBASE_CONF_DIR/yarn-site.xml"
  sed -i 's/#loop#{disk_dirs}\/log\/hadoop-yarn\/containers#,#/file:\/\/\/mnt\/disk4\/log\/hadoop-yarn\/containers,file:\/\/\/mnt\/disk1\/log\/hadoop-yarn\/containers,file:\/\/\/mnt\/disk3\/log\/hadoop-yarn\/containers,file:\/\/\/mnt\/disk2\/log\/hadoop-yarn\/containers/g' "$HDFS_HBASE_CONF_DIR/yarn-site.xml"
  for disk in /mnt/disk1 /mnt/disk2 /mnt/disk3 /mnt/disk4; do
    chmod 770 "$disk"
  done
  chmod 777 /mnt/disk1/log/
  su -l hadoop -c '/opt/apps/hadoop-2.6.0/sbin/yarn-daemon.sh start nodemanager'
  # Append the same permission fixes to the EMR HDFS start script so they are re-applied when it runs
  sed -i '$aif test -d "/mnt/disk1";then\nchmod 770 /mnt/disk1\nfi\nif test -d "/mnt/disk2";then\nchmod 770 /mnt/disk2\nfi\nif test -d "/mnt/disk3";then\nchmod 770 /mnt/disk3\nfi\nif test -d "/mnt/disk4";then\nchmod 770 /mnt/disk4\nfi\nif test -d "/mnt/disk1/log";then\nchmod 777 /mnt/disk1/log\nfi' /usr/local/emr/emr-bin/script/hbase/sbin/4_start_hdfs.sh
fi
```
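The template placeholders such as `#loop#{disk_dirs}/yarn#,#` appear to stand for a comma-separated list with one `file:///mnt/diskN/...` entry per data disk. The script hard-codes that list for one disk on the master and four disks (in the order 4, 1, 3, 2) on the workers. The sketch below generates the same lists for any disk order, which can help if your nodes have a different number of disks. It assumes the disks are mounted as /mnt/diskN. `disk_dir_list` is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical helper: build "file:///mnt/diskA/<suffix>,file:///mnt/diskB/<suffix>,..."
# $1 = path suffix (e.g. yarn, mapred), remaining args = disk numbers in order
disk_dir_list() {
  local suffix="$1"; shift
  local out="" n
  for n in "$@"; do
    # ${out:+,} adds a comma only after the first entry
    out+="${out:+,}file:///mnt/disk$n/$suffix"
  done
  echo "$out"
}

disk_dir_list yarn 1            # master node, as in the script
disk_dir_list yarn 4 1 3 2      # worker nodes, same order as the script
```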

2. Bootstrap action shell script (phoenix_bootstrap.sh)

Identical to phoenix_bootstrap.sh in the HBase+Phoenix deployment.

3. Store yarn_bootstrap.sh in OSS

Upload the yarn_bootstrap.sh script from step 1 to OSS. You will select it from OSS when you create the cluster.

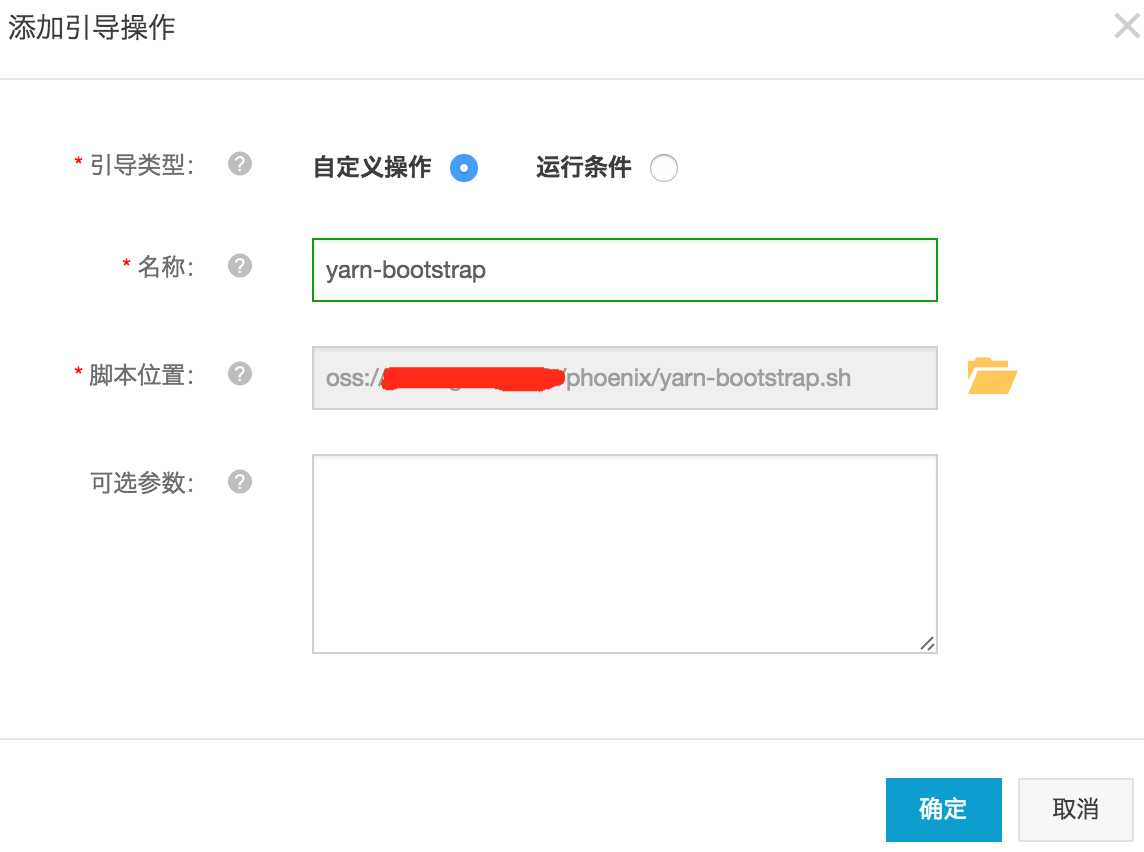

4.创建HBase集群(添加引导操作)

在E-MapReduce中创建HBase集群,在创建集群的基本信息页面,点击添加引导操作先添加yarn_bootstrap.sh脚本,然后再点击添加引导操作来添加phoenix_bootstrap.sh,如下所示:

5. Verification

After the cluster has been created and its status shows Idle, log on to the master node of the cluster to verify the deployment.

1) Example 1

Run:

```
sudo su hadoop
cd ~
echo '100,Jack,Doe' >>example.csv
echo '200,Tony,Poppins' >>example.csv
hadoop fs -put example.csv /
sqlline.py localhost
0: jdbc:phoenix:localhost> CREATE TABLE example (
    my_pk bigint not null,
    m.first_name varchar(50),
    m.last_name varchar(50)
    CONSTRAINT pk PRIMARY KEY (my_pk));
0: jdbc:phoenix:localhost> !quit
hadoop jar /opt/apps/phoenix-4.7.0-HBase-1.1-bin/phoenix-4.7.0-HBase-1.1-client.jar org.apache.phoenix.mapreduce.CsvBulkLoadTool --input /example.csv -z localhost -t EXAMPLE
```
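CsvBulkLoadTool runs as a MapReduce job over the file in HDFS, so a malformed row only shows up after the job has started. If the load fails, a quick local check of the column count can help narrow down the problem before you upload the file again. The sketch below assumes a plain comma-separated file without quoted fields. `check_csv_columns` is hypothetical and is not part of Phoenix.

```shell
#!/bin/bash
# Hypothetical pre-check: print offending lines and return 1 if any line of the
# CSV does not have exactly the expected number of comma-separated fields.
# Assumes no quoted fields that contain commas.
check_csv_columns() {
  local file="$1" expected="$2"
  awk -F',' -v n="$expected" \
    'NF != n { print FILENAME ":" NR ": " NF " fields"; bad = 1 } END { exit bad }' "$file"
}

# EXAMPLE has three columns: MY_PK, FIRST_NAME, LAST_NAME
# check_csv_columns example.csv 3 && hadoop fs -put example.csv /
```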

Verify:

```
sqlline.py localhost
0: jdbc:phoenix:localhost> select * from example;
+--------+-------------+------------+
| MY_PK  | FIRST_NAME  | LAST_NAME  |
+--------+-------------+------------+
| 100    | Jack        | Doe        |
| 200    | Tony        | Poppins    |
+--------+-------------+------------+
```