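LVS/DR + keepalived Application Test and Implementation Document

Original: http://beacon.blog.51cto.com/442731/94723

Disclaimer: this test document is aimed only at enthusiasts who have never used lvs+keepalived. If you find mistakes, corrections are welcome. The document is long because it includes many details, some of which I explain at length even though that may not be necessary. Experienced readers may wish to skip it. Unfinished, to be continued.

I. Test name

Testing keepalived in LVS/DR mode

II. Test purpose

Currently one lvs/dr director forwards user requests to the back-end web servers, and forwarding is controlled by a script. Two problems need to be solved:

⑴ When a back-end web server fails and can no longer serve pages, lvs/dr still forwards requests to it.

⑵ When the lvs/dr director itself fails, the web service is interrupted completely. We therefore need software that automatically removes failed web nodes and adds them back, and that also provides an active/standby pair of lvs/dr directors.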

III. Test environment

1. The test uses four servers: two lvs/dr directors and two web servers. Details are in the table below.

| Server name | Network configuration |
| --- | --- |
| Real IP address (RIP) | |
| lvs-dr1 (dr1) | 192.168.1.210 |
| lvs-dr2 (dr2) | 192.168.1.211 |
| lvs-web1 (web1) | 192.168.1.216 |
| lvs-web2 (web2) | 192.168.1.217 |
| Virtual IP address (VIP) | |
| web VIP1 | 192.168.1.215 |
| web VIP2 | 192.168.1.220 |

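2. The current setup is shown in the figure below.

⑴ User requests for the website first reach lvs-dr1, which decides how to forward them according to the script settings.

⑵ The forwarded requests are answered by lvs-web1 and lvs-web2, which reply directly to the client. This arrangement can run into the following problems:

⑴ If lvs-dr1 fails, it can no longer accept user requests and forward them to the real web servers, even when those servers are healthy. The whole web service goes down; in other words, the LVS director is a single point of failure.

⑵ If lvs-dr1 is healthy but a real web server such as lvs-web1 fails, lvs-dr1 has no way of knowing whether that server is still serving pages, so it keeps forwarding requests to the failed lvs-web1. Those requests get no response, so some users can reach the site while others cannot.

Note: server failure here includes the server going down, the web service stopping, a loose network cable, and so on.

3. Given these problems, we need health monitoring for both the LVS director and the web servers, so that a fault can be isolated without interrupting the service. That means adding a second LVS director in master/backup mode to remove the single point of failure, and automatically removing a failed web node and adding it back to the cluster once it recovers. The expected setup is shown in the figure below.

The setup in the figure provides the following:

⑴ lvs-dr1 and lvs-dr2 are configured as master and backup. The backup director monitors the master through heartbeats and, when it detects that the master is gone, takes over the virtual IPs and forwards user requests to the web servers.

⑵ The LVS director monitors the web servers. When a server fails (goes down, service stops, cable loose), the failed node is removed automatically; when it comes back and serves pages again, it is added back to the cluster automatically.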

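4. Based on the analysis of the current and expected setups above, we prepared the following for the test.

| Server name | Installed software |
| --- | --- |
| lvs-dr1 | ipvsadm-1.24, keepalived-1.1.15 |
| lvs-dr2 | ipvsadm-1.24, keepalived-1.1.15 |
| lvs-web1 | cronolog-1.6.2, httpd-2.2.9 |
| lvs-web2 | cronolog-1.6.2, httpd-2.2.9 |

Notes:

⑴ Because the whole test was carried out in a non-production environment, VMware Workstation 5.5.1 is used as the platform for all of the nodes above.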

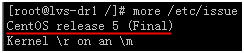

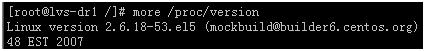

⑵ All nodes in the test environment run CentOS 5.1 / CentOS release 5 (Final) with kernel 2.6.18-53.el5. The system and kernel versions can be checked in three ways:

① the /etc/issue file

② uname -a

③ the /proc/version file

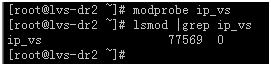
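The three checks above can be run together. A minimal sketch, assuming a POSIX shell on any of the nodes; the `show_versions` function name is hypothetical and used only for illustration, and files that are absent are simply skipped:

```shell
#!/bin/sh
# Print distribution and kernel version information.
# show_versions is a hypothetical helper name, not part of any package.
show_versions() {
    # Distribution banner (skipped if the file is missing)
    [ -r /etc/issue ] && cat /etc/issue
    # Kernel release only, e.g. 2.6.18-53.el5 on the test nodes described above
    uname -r
    # Kernel build string, including the compiler that built it
    [ -r /proc/version ] && cat /proc/version
    return 0
}

show_versions
```

`uname -a` prints the same kernel release together with the hostname and architecture; `uname -r` is used here only to keep the output short.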

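⑶ The two key components of an LVS cluster are the ipvs kernel module and the ipvsadm tool package. The kernel used here already includes the ipvs module, but it is not loaded by default. It can be loaded by hand, or it will be loaded once ipvsadm is installed and used. Use modprobe to load the ipvs module manually, then check that it is loaded.

ipvsadm download: http://www.linuxvirtualserver.org/software/kernel-2.6/ipvsadm-1.24.tar.gz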
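The load-and-check step can be sketched as follows. This is an illustration rather than the commands from the original screenshot: `module_loaded` is a hypothetical helper that looks for a module name in the first column of `/proc/modules`, and the optional second argument exists only so the function can be pointed at a saved copy of that file.

```shell
#!/bin/sh
# module_loaded NAME [FILE]
# Succeeds if NAME appears in the first column of FILE, which is expected to
# use the /proc/modules format. FILE defaults to /proc/modules.
# The function name is hypothetical; it is not part of ipvsadm or module-init-tools.
module_loaded() {
    awk -v m="$1" '$1 == m { found = 1 } END { exit !found }' "${2:-/proc/modules}"
}

# Typical use on a director (needs root, so it is left commented out here):
#   modprobe ip_vs
#   module_loaded ip_vs && echo "ip_vs is loaded"
```

`lsmod | grep ip_vs` gives the same information interactively; the function form is convenient inside a startup script where only the exit status matters.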

⑷ keepalived is software that monitors the state of the LVS directors and the web servers. Below is a short description quoted from the official website, http://www.keepalived.org

What is Keepalived ?

The main goal of the keepalived project is to add a strong & robust keepalive facility to the Linux Virtual Server project. This project is written in C with multilayer TCP/IP stack checks. Keepalived implements a framework based on three family checks : Layer3, Layer4 & Layer5/7. This framework gives the daemon the ability of checking a LVS server pool states. When one of the server of the LVS server pool is down, keepalived informs the linux kernel via a setsockopt call to remove this server entrie from the LVS topology. In addition keepalived implements an independent VRRPv2 stack to handle director failover. So in short keepalived is a userspace daemon for LVS cluster nodes healthchecks and LVS directors failover.

Why using Keepalived ?

If your are using a LVS director to loadbalance a server pool in a production environnement, you may want to have a robust solution for healthcheck & failover.

keepalived download: http://www.keepalived.org/software/keepalived-1.1.15.tar.gz

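⑸ The web servers need apache2. cronolog is optional; it controls the format of the log files, which makes Apache logs easier to manage and analyze.

Apache2 download: http://apache.mirror.phpchina.com/httpd/httpd-2.2.9.tar.gz

Cronolog download: http://cronolog.org/download/cronolog-1.6.2.tar.gz

IV. Test steps

1. Configure the hostname and IP address of each node. The configuration procedure is omitted for now; the resulting settings are as follows.

| Server name | Network configuration |
| --- | --- |
| lvs-dr1 (dr1) | 192.168.1.210 |
| lvs-dr2 (dr2) | 192.168.1.211 |
| lvs-web1 (web1) | 192.168.1.216 |
| lvs-web2 (web2) | 192.168.1.217 |

Note: changing the hostname requires editing both /etc/sysconfig/network and /etc/hosts, then rebooting for the change to take effect.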

2. Install ipvsadm and keepalived on lvs-dr1 and lvs-dr2 as follows:

⑴ Install ipvsadm. After downloading ipvsadm-1.24.tar.gz from the official site:

[root@lvs-dr1 ~]# tar zxvf ipvsadm-1.24.tar.gz # extract the ipvsadm package #

[root@lvs-dr1 ~]# ln -s /usr/src/kernels/2.6.18-53.el5-i686 /usr/src/linux # create a symlink to the kernel source directory #

[root@lvs-dr1 ~]# ls -l /usr/src/ # check the symlink #

total 16

drwxr-xr-x 3 root root 4096 Jun 24 17:28 kernels

lrwxrwxrwx 1 root root 35 Aug 3 18:35 linux -> /usr/src/kernels/2.6.18-53.el5-i686

drwxr-xr-x 7 root root 4096 Jun 24 17:27 redhat

[root@lvs-dr1 ~]# cd ipvsadm-1.24

[root@lvs-dr1 ipvsadm-1.24]# make && make install # compile and install #

[root@lvs-dr1 ipvsadm-1.24]# find / -name ipvsadm # locate ipvsadm #

/etc/rc.d/init.d/ipvsadm

/root/ipvsadm-1.24/ipvsadm

/sbin/ipvsadm

⑵ Install keepalived. After downloading keepalived-1.1.15.tar.gz from the official site:

[root@lvs-dr1 ~]# tar zxvf keepalived-1.1.15.tar.gz # extract the keepalived package #

[root@lvs-dr1 ~]# cd keepalived-1.1.15

[root@lvs-dr1 keepalived-1.1.15]# ./configure # best to keep the default options and not set a prefix, which may cause problems #

…………… (omitted) ……………

Keepalived configuration

------------------------

Keepalived version : 1.1.15

Compiler : gcc

Compiler flags : -g -O2

Extra Lib : -lpopt -lssl -lcrypto

Use IPVS Framework : Yes

IPVS sync daemon support : Yes

Use VRRP Framework : Yes

Use LinkWatch : No

Use Debug flags : No

…………… (end) ……………

[root@lvs-dr1 keepalived-1.1.15]# make && make install # compile and install #

[root@lvs-dr1 keepalived-1.1.15]# find / -name keepalived # locate keepalived #

/usr/local/etc/sysconfig/keepalived

/usr/local/etc/keepalived

/usr/local/etc/rc.d/init.d/keepalived

/usr/local/sbin/keepalived

/root/keepalived-1.1.15/bin/keepalived

/root/keepalived-1.1.15/keepalived

/root/keepalived-1.1.15/keepalived/etc/keepalived

3. Install and configure Apache and cronolog on lvs-web1 and lvs-web2.

⑴ Install cronolog. After downloading cronolog-1.6.2.tar.gz from the official site:

[root@lvs-web1 ~]# tar zxvf cronolog-1.6.2.tar.gz

[root@lvs-web1 ~]# cd cronolog-1.6.2

[root@lvs-web1 cronolog-1.6.2]# ./configure

[root@lvs-web1 cronolog-1.6.2]# make

[root@lvs-web1 cronolog-1.6.2]# make install

[root@lvs-web1 cronolog-1.6.2]# find / -name cronolog

/usr/local/sbin/cronolog

⑵ Install Apache. After downloading httpd-2.2.9.tar.gz from the official site:

① Add the user and group that will run the httpd service

[root@lvs-web1 ~]# groupadd -g 500 hjw

[root@lvs-web1 ~]# useradd -g 500 -u 500 hjw

[root@lvs-web1 ~]# grep hjw /etc/passwd # check the user file #

hjw:x:500:500::/home/hjw:/bin/bash

# The shell is not set to /sbin/nologin, but since no password has been set the account cannot be used to log in; hjw serves only as a service account #

[root@lvs-web1 ~]# grep hjw /etc/group # check the group file #

hjw:x:500:

② Install Apache:

[root@lvs-web1 ~]# tar zxvf httpd-2.2.9.tar.gz

[root@lvs-web1 ~]# cd httpd-2.2.9

[root@lvs-web1 httpd-2.2.9]# ./configure --prefix=/usr/local/apache2 --disable-option-checking --enable-cache --enable-disk-cache --enable-mem-cache --enable-rewrite=shared

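# Brief explanation of the configure options

# --prefix=/usr/local/apache2 sets the Apache installation path

# --disable-option-checking does not report errors for unrecognized --enable/--with options

# --enable-cache enables caching

# --enable-disk-cache enables the disk cache

# --enable-mem-cache enables the memory cache

# --enable-rewrite=shared builds the URL rewriting module (mod_rewrite) as a shared module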

[root@lvs-web1 httpd-2.2.9]# make && make install

[root@lvs-web1 ~]# apachectl -t -D DUMP_MODULES # list the modules Apache has loaded #

Loaded Modules:

…………… (omitted) ……………

cache_module (static) # static: compiled into the httpd binary rather than loaded dynamically #

disk_cache_module (static)

mem_cache_module (static)

…………… (omitted) ……………

so_module (static) # allows DSO modules to be loaded when Apache starts or restarts, without recompiling. Apache 2.2.9 compiles the so module into httpd by default #

rewrite_module (shared) # shared: DSO (Dynamic Shared Object), the module is loaded dynamically. For more on DSO see http://beacon.blog.51cto.com/442731/94711 #

Syntax OK

…………… (end) ……………

[root@lvs-web1 ~]# vi /usr/local/apache2/conf/httpd.conf # edit the configuration file #

…………… (omitted) ……………

User daemon # change to hjw

Group daemon # change to hjw

…………… (omitted) ……………

#ServerName www.example.com:80

ServerName 127.0.0.1 # add this line

…………… (omitted) ……………

<Directory />

Options FollowSymLinks

AllowOverride None

Order deny,allow

Deny from all # change to Allow from all

</Directory>

…………… (omitted) ……………

# Virtual hosts

#Include conf/extra/httpd-vhosts.conf # uncomment this line

…………… (end) ……………

[root@lvs-web1 ~]# vi /usr/local/apache2/conf/extra/httpd-vhosts.conf # edit the virtual host file #

…………… (omitted) ……………

# Add the following virtual hosts

<VirtualHost *:80>

# ServerAdmin webmaster@dummy-host2.example.com

DocumentRoot "/usr/local/apache2/webapps"

DirectoryIndex index.html

# ServerName dummy-host2.example.com

# ErrorLog "logs/dummy-host2.example.com-error_log"

# CustomLog "logs/dummy-host2.example.com-access_log" common

</VirtualHost>

<VirtualHost *:8090>

# ServerAdmin webmaster@dummy-host2.example.com

DocumentRoot "/usr/local/apache2/webapps"

DirectoryIndex index1.html

# ServerName dummy-host2.example.com

# ErrorLog "logs/dummy-host2.example.com-error_log"

# CustomLog "logs/dummy-host2.example.com-access_log" common

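</VirtualHost>

# Note: the :8090 virtual host only answers if httpd.conf also contains a matching "Listen 8090" directive, which the excerpts here do not show

…………… (end) ……………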

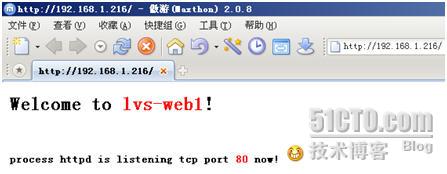

From a client, access http://192.168.1.216 directly

Then access http://192.168.1.216:8090

Add the following to /etc/rc.local so that Apache starts at boot

[root@lvs-web1 extra]# more /etc/rc.local

#!/bin/sh

#

# This script will be executed *after* all the other init scripts.

# You can put your own initialization stuff in here if you don't

# want to do the full Sys V style init stuff.

touch /var/lock/subsys/local

/usr/local/apache2/bin/apachectl start

# shell end

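4. Use an ipvs script so that a single LVS director forwards requests to the two web servers

⑴ lvs-dr1 (LVS director)

① ipvs script

[root@lvs-dr1 bin]# pwd

/usr/local/bin # path of the ipvs script on the director #

[root@lvs-dr1 bin]# ll lvsdr

-rwxr-xr-x 1 root root 1163 Aug 15 22:37 lvsdr

# The script must be executable; use chmod 755 lvsdr to grant execute permission #

[root@lvs-dr1 bin]# more lvsdr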

#!/bin/bash

# 2008-08-19 by hjw

RIP1=192.168.1.216

RIP2=192.168.1.217

VIP1=192.168.1.215

VIP2=192.168.1.220

. /etc/rc.d/init.d/functions

case "$1" in

start)

echo " start LVS of DirectorServer"

# set the Virtual IP Address and sysctl parameter

/sbin/ifconfig eth0:0 $VIP1 broadcast $VIP1 netmask 255.255.255.255 up

/sbin/ifconfig eth0:1 $VIP2 broadcast $VIP2 netmask 255.255.255.255 up

/sbin/route add -host $VIP1 dev eth0:0

/sbin/route add -host $VIP2 dev eth0:1

echo "1" >/proc/sys/net/ipv4/ip_forward

#Clear IPVS table

/sbin/ipvsadm -C

#set LVS

#Web Apache

/sbin/ipvsadm -A -t $VIP1:80 -s wlc -p 800

/sbin/ipvsadm -a -t $VIP1:80 -r $RIP1:80 -g

/sbin/ipvsadm -a -t $VIP1:80 -r $RIP2:80 -w 3 -g


/sbin/ipvsadm -A -t $VIP2:8090 -s wlc -p 1800

/sbin/ipvsadm -a -t $VIP2:8090 -r $RIP1:8090 -g

/sbin/ipvsadm -a -t $VIP2:8090 -r $RIP2:8090 -w 3 -g

#Run LVS

/sbin/ipvsadm

;;

stop)

echo "close LVS Directorserver"

echo "0" >/proc/sys/net/ipv4/ip_forward

/sbin/ipvsadm -C

/sbin/ifconfig eth0:0 down

#/sbin/ifconfig eth0:1 down

;;

*)

echo "Usage: $0 {start|stop}"

exit 1

;;

esac

# shell end
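The rule section of the script follows a fixed pattern: one `-A` line per virtual service, then one `-a` line per real server. A minimal sketch that prints those commands instead of running them, so the rule set can be reviewed first. `print_ipvs_rules` and its `RIP:WEIGHT` argument format are hypothetical; the ipvsadm options are the ones already used in the script above.

```shell
#!/bin/sh
# print_ipvs_rules VIP PORT PERSIST RIP:WEIGHT...
# Prints (does not execute) the ipvsadm commands for one DR-mode virtual
# service that uses the wlc scheduler. Hypothetical helper for illustration.
print_ipvs_rules() {
    vip=$1
    port=$2
    persist=$3
    shift 3
    # Virtual service: TCP, wlc scheduling, persistence timeout in seconds
    echo "/sbin/ipvsadm -A -t $vip:$port -s wlc -p $persist"
    for entry in "$@"; do
        rip=${entry%%:*}      # text before the colon
        weight=${entry##*:}   # text after the colon
        # Real server: -g selects direct routing (gatewaying)
        echo "/sbin/ipvsadm -a -t $vip:$port -r $rip:$port -g -w $weight"
    done
}

print_ipvs_rules 192.168.1.215 80 800 192.168.1.216:1 192.168.1.217:3
print_ipvs_rules 192.168.1.220 8090 1800 192.168.1.216:1 192.168.1.217:3
```

Once the printed commands look right, piping the output to `sh` on the director (as root) would apply them. Writing weight 1 explicitly for lvs-web1 matches ipvsadm's default weight.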

② Network configuration of lvs-dr1

[root@lvs-dr1 bin]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:F4:5B:1C

inet addr:192.168.1.210 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fef4:5b1c/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:3148 errors:0 dropped:0 overruns:0 frame:0

TX packets:430 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:257945 (251.8 KiB) TX bytes:59872 (58.4 KiB)

Interrupt:177 Base address:0x1080

eth0:0 Link encap:Ethernet HWaddr 00:0C:29:F4:5B:1C

inet addr:192.168.1.215 Bcast:192.168.1.215 Mask:255.255.255.255

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

Interrupt:177 Base address:0x1080

eth0:1 Link encap:Ethernet HWaddr 00:0C:29:F4:5B:1C

inet addr:192.168.1.220 Bcast:192.168.1.220 Mask:255.255.255.255

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

Interrupt:177 Base address:0x1080

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:48 errors:0 dropped:0 overruns:0 frame:0

TX packets:48 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:4000 (3.9 KiB) TX bytes:4000 (3.9 KiB)

③ Add the script to /etc/rc.local so that it starts at boot

[root@lvs-dr1 ~]# more /etc/rc.local

#!/bin/sh

#

# This script will be executed *after* all the other init scripts.

# You can put your own initialization stuff in here if you don't

# want to do the full Sys V style init stuff.

touch /var/lock/subsys/local

/usr/local/bin/lvsdr start

# shell end

⑵ Web servers (lvs-web1 and lvs-web2)

① ipvs script

[root@lvs-web1 bin]# pwd

/usr/local/bin # path of the ipvs script on the web server #

[root@lvs-web1 bin]# ll lvs

-rwxr-xr-x 1 root root 874 Aug 15 05:18 lvs

# The script must be executable; use chmod 755 lvs to grant execute permission #

[root@lvs-web1 bin]# more lvs

#!/bin/bash

# description: start realserver

# chkconfig: 235 26 26

# 2008-08-19 by hjw

VIP1=192.168.1.215

VIP2=192.168.1.220

. /etc/rc.d/init.d/functions

case "$1" in

start)

echo " start LVS of REALServer"

/sbin/ifconfig lo:0 $VIP1 broadcast $VIP1 netmask 255.255.255.255 up

/sbin/ifconfig lo:1 $VIP2 broadcast $VIP2 netmask 255.255.255.255 up

echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce

;;

stop)

/sbin/ifconfig lo:0 down

/sbin/ifconfig lo:1 down

echo "close LVS of REALServer"

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce

;;

*)

echo "Usage: $0 {start|stop}"

exit 1

;;

esac

# shell end
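To confirm that the script took effect on a real server, the four kernel parameters it writes can be read back. A minimal sketch; `show_arp_settings` is a hypothetical helper, and its optional argument exists only so the function can be pointed at a copy of the sysctl tree instead of the live one.

```shell
#!/bin/sh
# show_arp_settings [ROOT]
# Prints arp_ignore and arp_announce for the "lo" and "all" scopes.
# ROOT defaults to /proc/sys/net/ipv4/conf. Hypothetical helper name.
show_arp_settings() {
    root=${1:-/proc/sys/net/ipv4/conf}
    for scope in lo all; do
        for key in arp_ignore arp_announce; do
            if [ -r "$root/$scope/$key" ]; then
                echo "$scope/$key=$(cat "$root/$scope/$key")"
            else
                echo "$scope/$key=unreadable"
            fi
        done
    done
}

show_arp_settings
```

After `lvs start`, the script above implies that both arp_ignore lines should read 1 and both arp_announce lines 2; after `lvs stop`, all four should read 0.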

② Network configuration of lvs-web1 (lvs-web2 omitted)

[root@lvs-web1 bin]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:8C:52:7E

inet addr:192.168.1.216 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe8c:527e/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:3671 errors:0 dropped:0 overruns:0 frame:0

TX packets:475 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:301398 (294.3 KiB) TX bytes:65170 (63.6 KiB)

Interrupt:177 Base address:0x1080

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:12 errors:0 dropped:0 overruns:0 frame:0

TX packets:12 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:904 (904.0 b) TX bytes:904 (904.0 b)

lo:0 Link encap:Local Loopback

inet addr:192.168.1.215 Mask:255.255.255.255

UP LOOPBACK RUNNING MTU:16436 Metric:1

lo:1 Link encap:Local Loopback

inet addr:192.168.1.220 Mask:255.255.255.255

UP LOOPBACK RUNNING MTU:16436 Metric:1

③ Add the script to /etc/rc.local so that it starts at boot

[root@lvs-web1 ~]# more /etc/rc.local

#!/bin/sh

#

# This script will be executed *after* all the other init scripts.

# You can put your own initialization stuff in here if you don't

# want to do the full Sys V style init stuff.

touch /var/lock/subsys/local

/usr/local/apache2/bin/apachectl start

/usr/local/bin/lvs start&

# shell end

⑶、测试转发

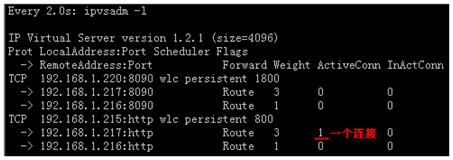

①、在客户端浏览器访问[url]http://192.168.1.215[/url]使用ipvsadm -l查看控制器转发状态。[root@lvs-dr1 bin]# watch ipvsadm -l

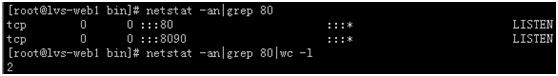

查看lvs-web1状态

[root@lvs-web1 bin]# netstat -an|grep 80

[root@lvs-web1 bin]# netstat -an|grep 80|wc -l

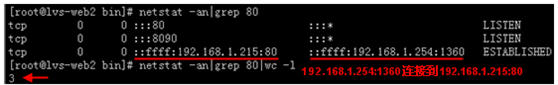

查看lvs-web2状态

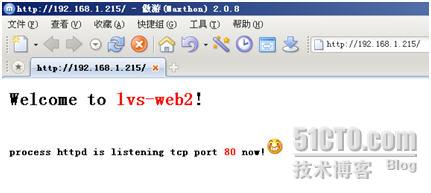

客户端返回的页面

由于lvs-web2的权重值大,客户端从lvs-web2得到请求的页面,说明整个系统运行正常。

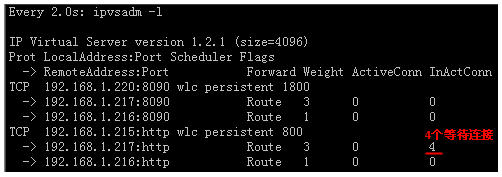

②、模拟web服务器故障,将lvs-web2网络中断。用ipvsadm –l查看状态,有4个等待转发的不活动连接

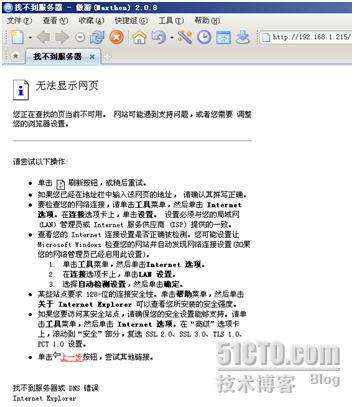

最终因为lvs-web2的web服务不可用无法相应客户端请求,返回无法显示的页面

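5. Use keepalived so that a single LVS director forwards requests to the two web servers, monitors their state, and adds or removes web nodes automatically.

⑴ After installing keepalived on the LVS director, stop the original lvsdr script, then adjust the keepalived configuration file as needed. The script and network configuration on the web servers need no changes.

① keepalived configuration file

[root@lvs-dr1 keepalived]# pwd

/usr/local/etc/keepalived # path of the keepalived configuration file #

[root@lvs-dr1 keepalived]# ll keepalived.conf

-rw-r--r-- 1 root root 1627 Aug 16 09:13 keepalived.conf # name of the keepalived configuration file #

[root@lvs-dr1 keepalived]# more keepalived.conf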

! Configuration File for keepalived

global_defs {

# notification_email {

# }

# notification_email_from Alexandre.Cassen@firewall.loc

# smtp_server 192.168.200.1

# smtp_connect_timeout 30

router_id LVS_DEVEL

}

# 2008-08-19 by hjw

# VIP1

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 51

priority 200

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.1.215

192.168.1.220

}

}

virtual_server 192.168.1.215 80 {

delay_loop 6

lb_algo wlc

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 192.168.1.216 80 {

weight 1

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

real_server 192.168.1.217 80 {

weight 3

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}

virtual_server 192.168.1.220 8090 {

delay_loop 6

lb_algo wlc

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 192.168.1.216 8090 {

weight 1

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

connect_port 8090

}

}

real_server 192.168.1.217 8090 {

weight 3

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

connect_port 8090

}

}

}

# shell end

② Add keepalived to /etc/rc.local so that it starts at boot

[root@lvs-dr1 ~]# more /etc/rc.local

#!/bin/sh

#

# This script will be executed *after* all the other init scripts.

# You can put your own initialization stuff in here if you don't

# want to do the full Sys V style init stuff.

touch /var/lock/subsys/local

#/usr/local/bin/lvsdr start

/usr/local/sbin/keepalived -D -f /usr/local/etc/keepalived/keepalived.conf

# shell end

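⑵ Request forwarding test

Forwarding was already tested in step 4, so it is omitted here.

⑶ Automatic node removal and re-adding test

Testing removal and re-adding requires simulating a web server failure, both by stopping the service manually and by cutting the network (equivalent to the server going down). lvs-web2 is used as the example.

① Manually stop the httpd service on lvs-web2

Monitor the forwarding state on director lvs-dr1. Roughly 2-3 seconds after the service on lvs-web2 is detected as stopped, keepalived automatically removes the node from the forwarding table.

At this point user requests are forwarded only to lvs-web1.

Within 5 seconds of httpd being started again, lvs-web2 is added back to the forwarding table. Requests can then go to either lvs-web1 or lvs-web2, but because web2 has the larger weight, the next request for http://192.168.1.215 is forwarded to lvs-web2.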

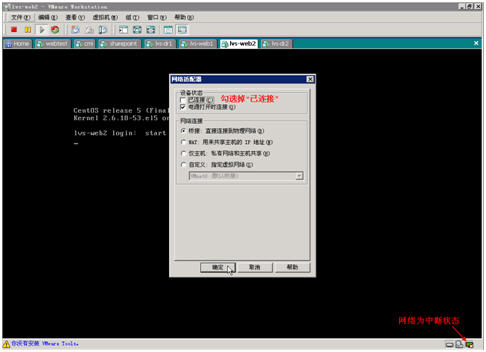
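The removal and re-adding seen here are driven by the TCP_CHECK blocks in keepalived.conf: the director tries to open a TCP connection to each real server's port and gives up after connect_timeout seconds. A similar probe can be run by hand when diagnosing a node. A minimal sketch, assuming bash and the coreutils `timeout` command are available (older systems such as the CentOS 5 used in this test may not ship `timeout`); `tcp_check` and `probe_real_servers` are hypothetical helpers and do not reflect how keepalived is implemented internally.

```shell
#!/bin/sh
# tcp_check HOST PORT [TIMEOUT_SECONDS]
# Succeeds if a TCP connection to HOST:PORT can be opened within the timeout.
# Hypothetical helper; relies on bash's /dev/tcp redirection and on timeout(1).
tcp_check() {
    host=$1
    port=$2
    secs=${3:-3}
    # bash performs the connect when it opens /dev/tcp/HOST/PORT;
    # timeout bounds how long we wait for it
    timeout "$secs" bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}

# Probe both real servers on port 80, as the first virtual_server block does.
# Defined but not called here, because it only makes sense on the test network.
probe_real_servers() {
    for rip in 192.168.1.216 192.168.1.217; do
        if tcp_check "$rip" 80 3; then
            echo "$rip:80 up"
        else
            echo "$rip:80 down"
        fi
    done
}
```

Running `probe_real_servers` on the director while httpd on lvs-web2 is stopped should report that node as down, which is the condition under which keepalived removes it from the table.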

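② Cut the network

The director's health checks against lvs-web2 now fail (with the TCP_CHECK blocks configured above, these are TCP connection attempts to the service port rather than ICMP pings), so the director concludes that lvs-web2 is gone and removes it from the forwarding table. lvs-dr1 keeps checking each real_server according to the configuration file, and once lvs-web2 is back online and serving pages, dr1 adds web2 back to the forwarding table. The removal and re-adding proceed exactly as in the httpd-stop case above, so they are not repeated here. All of this happens on the server side and is transparent to users, who do not notice the changes taking place.

6. To avoid the LVS director being a single point of failure, add lvs-dr2 to the cluster and use keepalived to run the two directors as master and backup.

⑴ keepalived configuration file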

[root@lvs-dr1 keepalived]# pwd

/usr/local/etc/keepalived # location of the keepalived configuration file #

[root@lvs-dr1 keepalived]# ll keepalived.conf

-rw-r--r-- 1 root root 1624 Aug 16 23:19 keepalived.conf

[root@lvs-dr1 keepalived]# more keepalived.conf

! Configuration File for keepalived

global_defs {

# notification_email {

# acassen@firewall.loc

# failover@firewall.loc

# sysadmin@firewall.loc

# }

# notification_email_from Alexandre.Cassen@firewall.loc

# smtp_server 192.168.200.1

# smtp_connect_timeout 30

router_id LVS_DEVEL

}

# 2008-08-19 by hjw

vrrp_instance VI_1 {

state MASTER # BACKUP on lvs-dr2 #

interface eth0

virtual_router_id 51

priority 200 # 100 on lvs-dr2 #

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.1.215

192.168.1.220

}

}

virtual_server 192.168.1.215 80 {

delay_loop 6

lb_algo wlc

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 192.168.1.216 80 {

weight 1

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

real_server 192.168.1.217 80 {

weight 3

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}

virtual_server 192.168.1.220 8090 {

delay_loop 6

lb_algo wlc

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 192.168.1.216 8090 {

weight 1

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

connect_port 8090

}

}

real_server 192.168.1.217 8090 {

weight 3

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

connect_port 8090

}

}

}

# shell end

⑵ lvs-dr1 starting, lvs-dr2 powered off

| Server | State | Log |
| --- | --- | --- |
| lvs-dr1 | Starting | √ |
| lvs-dr2 | Powered off | |
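The logs in these failover cases are long, and the lines that matter most are the VRRP state changes. A minimal sketch for filtering them out of a syslog file; `vrrp_transitions` is a hypothetical helper, and the default path `/var/log/messages` is an assumption (the usual syslog destination on CentOS), since the document does not say which file the logs were read from.

```shell
#!/bin/sh
# vrrp_transitions [FILE]
# Prints only the VRRP state-change lines ("Transition to ... STATE" and
# "Entering ... STATE") from a syslog file. FILE defaults to /var/log/messages,
# which is an assumed location. Hypothetical helper name.
vrrp_transitions() {
    grep -E 'VRRP_Instance\([^)]*\) (Transition to|Entering) [A-Z]+ STATE' "${1:-/var/log/messages}"
}

# Typical use on a director (left commented out; the log is usually root-only):
#   vrrp_transitions /var/log/messages | tail -n 5
```

Comparing the timestamps of these lines on lvs-dr1 and lvs-dr2 gives a rough picture of how long each takeover took.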

Log on lvs-dr1 after startup

…………… (omitted) ……………

Aug 17 00:53:40 lvs-dr1 Keepalived: Starting Keepalived v1.1.15 (08/04,2008)

Aug 17 00:53:40 lvs-dr1 Keepalived: Starting Healthcheck child process, pid=1671 # start the Healthcheck process #

Aug 17 00:53:40 lvs-dr1 Keepalived: Starting VRRP child process, pid=1681 # start the VRRP child process #

Aug 17 00:53:40 lvs-dr1 Keepalived_vrrp: Using MII-BMSR NIC polling thread...

Aug 17 00:53:40 lvs-dr1 Keepalived_vrrp: Netlink reflector reports IP 192.168.1.210 added

Aug 17 00:53:40 lvs-dr1 Keepalived_vrrp: Registering Kernel netlink reflector

Aug 17 00:53:40 lvs-dr1 Keepalived_vrrp: Registering Kernel netlink command channel

Aug 17 00:53:40 lvs-dr1 Keepalived_vrrp: Registering gratutious ARP shared channel

Aug 17 00:53:41 lvs-dr1 kernel: IPVS: Registered protocols (TCP, UDP, AH, ESP)

Aug 17 00:53:41 lvs-dr1 kernel: IPVS: Connection hash table configured (size=4096, memory=32Kbytes)

Aug 17 00:53:41 lvs-dr1 kernel: IPVS: ipvs loaded.

Aug 17 00:53:41 lvs-dr1 Keepalived_healthcheckers: Using MII-BMSR NIC polling thread...

Aug 17 00:53:41 lvs-dr1 Keepalived_healthcheckers: Netlink reflector reports IP 192.168.1.210 added

Aug 17 00:53:41 lvs-dr1 Keepalived_healthcheckers: Registering Kernel netlink reflector

Aug 17 00:53:41 lvs-dr1 Keepalived_healthcheckers: Registering Kernel netlink command channel

Aug 17 00:53:41 lvs-dr1 Keepalived_healthcheckers: Opening file '/usr/local/etc/keepalived/keepalived.conf'.

Aug 17 00:53:41 lvs-dr1 Keepalived_vrrp: Opening file '/usr/local/etc/keepalived/keepalived.conf'.

Aug 17 00:53:41 lvs-dr1 Keepalived_healthcheckers: Configuration is using : 16607 Bytes

Aug 17 00:53:41 lvs-dr1 Keepalived_vrrp: Configuration is using : 35534 Bytes

Aug 17 00:53:41 lvs-dr1 kernel: IPVS: [wlc] scheduler registered.

Aug 17 00:53:41 lvs-dr1 Keepalived_healthcheckers: Activating healtchecker for service [192.168.1.216:80]

Aug 17 00:53:41 lvs-dr1 Keepalived_healthcheckers: Activating healtchecker for service [192.168.1.217:80]

Aug 17 00:53:41 lvs-dr1 Keepalived_healthcheckers: Activating healtchecker for service [192.168.1.216:8090]

Aug 17 00:53:41 lvs-dr1 Keepalived_healthcheckers: Activating healtchecker for service [192.168.1.217:8090]

Aug 17 00:53:41 lvs-dr1 Keepalived_vrrp: VRRP sockpool: [ifindex(2), proto(112), fd(9,10)]

Aug 17 00:53:42 lvs-dr1 Keepalived_vrrp: VRRP_Instance(VI_1) Transition to MASTER STATE

Aug 17 00:53:43 lvs-dr1 Keepalived_vrrp: VRRP_Instance(VI_1) Entering MASTER STATE # dr1 enters the master state #

Aug 17 00:53:43 lvs-dr1 Keepalived_vrrp: VRRP_Instance(VI_1) setting protocol VIPs.

Aug 17 00:53:43 lvs-dr1 Keepalived_healthcheckers: Netlink reflector reports IP 192.168.1.215 added

Aug 17 00:53:43 lvs-dr1 Keepalived_healthcheckers: Netlink reflector reports IP 192.168.1.220 added

Aug 17 00:53:43 lvs-dr1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.1.215 # announce IP 192.168.1.215 on eth0 via gratuitous ARP #

Aug 17 00:53:43 lvs-dr1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.1.220

Aug 17 00:53:43 lvs-dr1 Keepalived_vrrp: Netlink reflector reports IP 192.168.1.215 added

Aug 17 00:53:43 lvs-dr1 Keepalived_vrrp: Netlink reflector reports IP 192.168.1.220 added

Aug 17 00:53:48 lvs-dr1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.1.215

Aug 17 00:53:48 lvs-dr1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.1.220

…………… (end) ……………

⑶ lvs-dr1 running, lvs-dr2 starting (lvs-dr2 log)

| Server | State | Log |
| --- | --- | --- |
| lvs-dr1 | Running | |
| lvs-dr2 | Starting | √ |

Startup log on lvs-dr2

…………… (omitted) ……………

Aug 17 03:49:21 lvs-dr2 Keepalived: Starting Keepalived v1.1.15 (08/04,2008)

Aug 17 03:49:21 lvs-dr2 Keepalived: Starting Healthcheck child process, pid=1656

Aug 17 03:49:21 lvs-dr2 Keepalived_vrrp: Using MII-BMSR NIC polling thread...

Aug 17 03:49:21 lvs-dr2 Keepalived_vrrp: Netlink reflector reports IP 192.168.1.211 added

Aug 17 03:49:21 lvs-dr2 Keepalived_vrrp: Registering Kernel netlink reflector

Aug 17 03:49:21 lvs-dr2 Keepalived_vrrp: Registering Kernel netlink command channel

Aug 17 03:49:21 lvs-dr2 Keepalived_vrrp: Registering gratutious ARP shared channel

Aug 17 03:49:21 lvs-dr2 Keepalived: Starting VRRP child process, pid=1658

Aug 17 03:49:22 lvs-dr2 kernel: IPVS: Registered protocols (TCP, UDP, AH, ESP)

Aug 17 03:49:22 lvs-dr2 kernel: IPVS: Connection hash table configured (size=4096, memory=32Kbytes)

Aug 17 03:49:22 lvs-dr2 kernel: IPVS: ipvs loaded.

Aug 17 03:49:22 lvs-dr2 Keepalived_healthcheckers: Using MII-BMSR NIC polling thread...

Aug 17 03:49:22 lvs-dr2 Keepalived_healthcheckers: Netlink reflector reports IP 192.168.1.211 added

Aug 17 03:49:22 lvs-dr2 Keepalived_healthcheckers: Registering Kernel netlink reflector

Aug 17 03:49:22 lvs-dr2 Keepalived_healthcheckers: Registering Kernel netlink command channel

Aug 17 03:49:22 lvs-dr2 Keepalived_healthcheckers: Opening file '/usr/local/etc/keepalived/keepalived.conf'.

Aug 17 03:49:22 lvs-dr2 Keepalived_vrrp: Opening file '/usr/local/etc/keepalived/keepalived.conf'.

Aug 17 03:49:22 lvs-dr2 Keepalived_healthcheckers: Configuration is using : 16607 Bytes

Aug 17 03:49:22 lvs-dr2 Keepalived_vrrp: Configuration is using : 35534 Bytes

Aug 17 03:49:23 lvs-dr2 Keepalived_vrrp: VRRP_Instance(VI_1) Entering BACKUP STATE # dr2 enters the backup state #

Aug 17 03:49:23 lvs-dr2 Keepalived_vrrp: VRRP sockpool: [ifindex(2), proto(112), fd(9,10)]

Aug 17 03:49:23 lvs-dr2 kernel: IPVS: [wlc] scheduler registered.

Aug 17 03:49:23 lvs-dr2 Keepalived_healthcheckers: Activating healtchecker for service [192.168.1.216:80]

Aug 17 03:49:23 lvs-dr2 Keepalived_healthcheckers: Activating healtchecker for service [192.168.1.217:80]

Aug 17 03:49:23 lvs-dr2 Keepalived_healthcheckers: Activating healtchecker for service [192.168.1.216:8090]

Aug 17 03:49:23 lvs-dr2 Keepalived_healthcheckers: Activating healtchecker for service [192.168.1.217:8090]

…………… (end) ……………

⑷ lvs-dr1 powered off, lvs-dr2 running (lvs-dr2 log)

| Server | State | Log |
| --- | --- | --- |
| lvs-dr1 | Powered off | |
| lvs-dr2 | Running | √ |

lvs-dr2 log

…………… (omitted) ……………

Aug 17 03:52:11 lvs-dr2 Keepalived_vrrp: VRRP_Instance(VI_1) Transition to MASTER STATE

Aug 17 03:52:12 lvs-dr2 Keepalived_vrrp: VRRP_Instance(VI_1) Entering MASTER STATE # dr2 enters the master state #

Aug 17 03:52:12 lvs-dr2 Keepalived_vrrp: VRRP_Instance(VI_1) setting protocol VIPs.

Aug 17 03:52:12 lvs-dr2 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.1.215

Aug 17 03:52:12 lvs-dr2 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.1.220

Aug 17 03:52:12 lvs-dr2 Keepalived_vrrp: Netlink reflector reports IP 192.168.1.215 added

Aug 17 03:52:12 lvs-dr2 Keepalived_vrrp: Netlink reflector reports IP 192.168.1.220 added

Aug 17 03:52:12 lvs-dr2 Keepalived_healthcheckers: Netlink reflector reports IP 192.168.1.215 added

Aug 17 03:52:12 lvs-dr2 Keepalived_healthcheckers: Netlink reflector reports IP 192.168.1.220 added

Aug 17 03:52:17 lvs-dr2 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.1.215

Aug 17 03:52:17 lvs-dr2 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.1.220

…………… (end) ……………

⑸ lvs-dr1 starting, lvs-dr2 running (lvs-dr1 and lvs-dr2 logs)

| Server | State | Log |
| --- | --- | --- |
| lvs-dr1 | Starting | √ |
| lvs-dr2 | Running | √ |

Startup log on lvs-dr1

…………… (omitted) ……………

Aug 17 01:01:32 lvs-dr1 Keepalived: Starting Keepalived v1.1.15 (08/04,2008)

Aug 17 01:01:32 lvs-dr1 Keepalived: Starting Healthcheck child process, pid=1688

Aug 17 01:01:32 lvs-dr1 Keepalived: Starting VRRP child process, pid=1690

Aug 17 01:01:32 lvs-dr1 Keepalived_vrrp: Using MII-BMSR NIC polling thread...

Aug 17 01:01:32 lvs-dr1 Keepalived_vrrp: Netlink reflector reports IP 192.168.1.210 added

Aug 17 01:01:32 lvs-dr1 Keepalived_vrrp: Registering Kernel netlink reflector

Aug 17 01:01:32 lvs-dr1 Keepalived_vrrp: Registering Kernel netlink command channel

Aug 17 01:01:32 lvs-dr1 Keepalived_vrrp: Registering gratutious ARP shared channel

Aug 17 01:01:33 lvs-dr1 kernel: IPVS: Registered protocols (TCP, UDP, AH, ESP)

Aug 17 01:01:33 lvs-dr1 kernel: IPVS: Connection hash table configured (size=4096, memory=32Kbytes)

Aug 17 01:01:33 lvs-dr1 kernel: IPVS: ipvs loaded.

Aug 17 01:01:33 lvs-dr1 Keepalived_healthcheckers: Using MII-BMSR NIC polling thread...

Aug 17 01:01:33 lvs-dr1 Keepalived_healthcheckers: Netlink reflector reports IP 192.168.1.210 added

Aug 17 01:01:33 lvs-dr1 Keepalived_healthcheckers: Registering Kernel netlink reflector

Aug 17 01:01:33 lvs-dr1 Keepalived_healthcheckers: Registering Kernel netlink command channel

Aug 17 01:01:33 lvs-dr1 Keepalived_healthcheckers: Opening file '/usr/local/etc/keepalived/keepalived.conf'.

Aug 17 01:01:33 lvs-dr1 Keepalived_vrrp: Opening file '/usr/local/etc/keepalived/keepalived.conf'.

Aug 17 01:01:33 lvs-dr1 Keepalived_healthcheckers: Configuration is using : 16607 Bytes

Aug 17 01:01:33 lvs-dr1 Keepalived_vrrp: Configuration is using : 35534 Bytes

Aug 17 01:01:33 lvs-dr1 kernel: IPVS: [wlc] scheduler registered.

Aug 17 01:01:33 lvs-dr1 Keepalived_healthcheckers: Activating healtchecker for service [192.168.1.216:80]

Aug 17 01:01:33 lvs-dr1 Keepalived_healthcheckers: Activating healtchecker for service [192.168.1.217:80]

Aug 17 01:01:33 lvs-dr1 Keepalived_healthcheckers: Activating healtchecker for service [192.168.1.216:8090]

Aug 17 01:01:33 lvs-dr1 Keepalived_healthcheckers: Activating healtchecker for service [192.168.1.217:8090]

Aug 17 01:01:33 lvs-dr1 Keepalived_vrrp: VRRP sockpool: [ifindex(2), proto(112), fd(9,10)]

Aug 17 01:01:34 lvs-dr1 Keepalived_vrrp: VRRP_Instance(VI_1) Transition to MASTER STATE

Aug 17 01:01:35 lvs-dr1 Keepalived_vrrp: VRRP_Instance(VI_1) Entering MASTER STATE # dr1 enters the master state #

Aug 17 01:01:35 lvs-dr1 Keepalived_vrrp: VRRP_Instance(VI_1) setting protocol VIPs.

Aug 17 01:01:35 lvs-dr1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.1.215

Aug 17 01:01:35 lvs-dr1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.1.220

Aug 17 01:01:35 lvs-dr1 Keepalived_vrrp: Netlink reflector reports IP 192.168.1.215 added

Aug 17 01:01:35 lvs-dr1 Keepalived_vrrp: Netlink reflector reports IP 192.168.1.220 added

Aug 17 01:01:35 lvs-dr1 Keepalived_healthcheckers: Netlink reflector reports IP 192.168.1.215 added

Aug 17 01:01:35 lvs-dr1 Keepalived_healthcheckers: Netlink reflector reports IP 192.168.1.220 added

Aug 17 01:01:40 lvs-dr1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.1.215

Aug 17 01:01:40 lvs-dr1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.1.220

…………… (end) ……………

lvs-dr2 log

…………… (omitted) ……………

Aug 17 03:54:11 lvs-dr2 Keepalived_vrrp: VRRP_Instance(VI_1) Received higher prio advert # received an advertisement with a higher priority #

Aug 17 03:54:11 lvs-dr2 Keepalived_vrrp: VRRP_Instance(VI_1) Entering BACKUP STATE # dr2 enters the backup state #

Aug 17 03:54:11 lvs-dr2 Keepalived_vrrp: VRRP_Instance(VI_1) removing protocol VIPs. # remove the VIP bindings #

Aug 17 03:54:11 lvs-dr2 Keepalived_vrrp: Netlink reflector reports IP 192.168.1.215 removed

Aug 17 03:54:11 lvs-dr2 Keepalived_vrrp: Netlink reflector reports IP 192.168.1.220 removed

Aug 17 03:54:11 lvs-dr2 Keepalived_healthcheckers: Netlink reflector reports IP 192.168.1.215 removed

Aug 17 03:54:11 lvs-dr2 Keepalived_healthcheckers: Netlink reflector reports IP 192.168.1.220 removed

…………… (end) ……………

The discussion, conclusions, and references will be added after the overall solution has been implemented. To be continued.

V. Test discussion

VI. Test conclusions

VII. References

This article is reposted from rshare's 51CTO blog. Original link: http://blog.51cto.com/1364952/1965468. To repost it, please contact the original author.