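Lab objectives:

1. Use a reverse proxy server to load-balance user requests across the back-end Tomcat nodes;

2. Configure load balancing with nginx, with session binding;

3. Configure load balancing with mod_jk, with session binding;

4. Configure load balancing with mod_proxy, with session binding;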

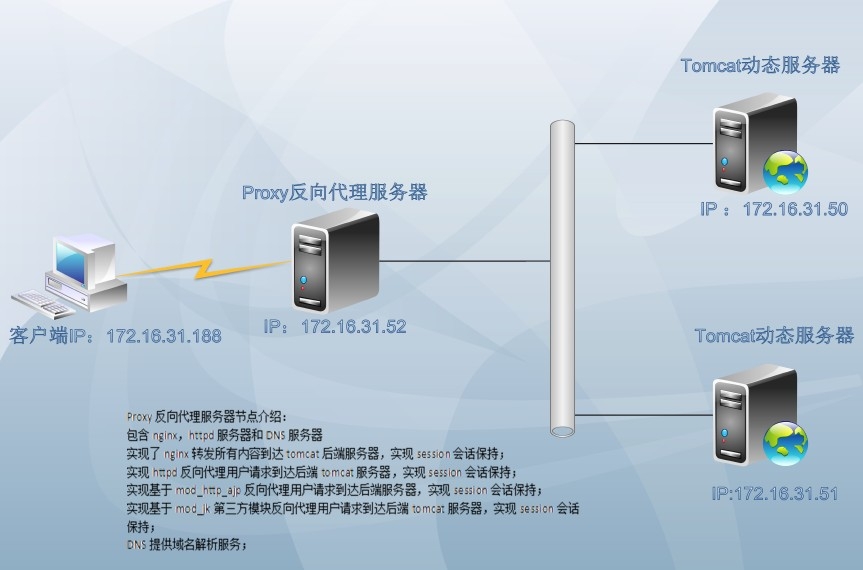

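Network architecture diagram:

Host plan:

Lab procedure:

Prerequisites:

1. The clocks of tom1 and tom2 must be synchronized;

Using NTP is recommended;

Reference blog: http://sohudrgon.blog.51cto.com/3088108/1598314

2. The nodes must be able to reach each other by host name;

Using the hosts file is recommended;

The names used must match the output of "uname -n" on each node;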

# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.16.0.1 server.magelinux.com server

172.16.31.52 www.stu31.com proxy.stu31.com proxy

172.16.31.50 tom1.stu31.com tom1

172.16.31.51 tom2.stu31.com tom2
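A minimal sketch for checking the name requirement above on each node, assuming bash and awk are available. It succeeds only if the given name appears as a host name or alias in a hosts file, and prints the address it maps to. The function name `hosts_has_name` is hypothetical.

```bash
# hosts_has_name FILE NAME
# Hypothetical helper: succeeds if NAME appears as a host name or alias
# (field 2 onward) on a non-comment line of FILE, and prints its address.
hosts_has_name() {
  local file=$1 name=$2
  awk -v n="$name" '
    $1 !~ /^#/ {
      for (i = 2; i <= NF; i++)
        if ($i == n) { print $1; found = 1; exit }
    }
    END { exit !found }
  ' "$file"
}
```

On a node, `hosts_has_name /etc/hosts "$(uname -n)" || echo "$(uname -n) is missing from /etc/hosts"` reports a node whose own name is not in the file.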

3. The root users on the nodes must be able to log in to each other over SSH with key-based authentication;

Node tom1:

# ssh-keygen -t rsa -P ""

# ssh-copy-id -i .ssh/id_rsa.pub tom2

Node tom2:

# ssh-keygen -t rsa -P ""

# ssh-copy-id -i .ssh/id_rsa.pub tom1

Test password-less SSH:

[root@tom1 ~]# date ;ssh tom2 date

Wed Jan 14 21:52:46 CST 2015

Wed Jan 14 21:52:46 CST 2015
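Comparing two `date` lines by eye works, but the skew can also be checked numerically. A sketch, assuming both nodes print epoch seconds with `date +%s`; the helper names and the 1-second default tolerance are illustrative choices, and SSH latency adds a little error to the measurement.

```bash
# clock_skew EPOCH1 EPOCH2: absolute difference in seconds
clock_skew() {
  local d=$(( $1 - $2 ))
  echo $(( d < 0 ? -d : d ))
}

# skew_ok EPOCH1 EPOCH2 [MAX]: succeeds when the skew is at most MAX seconds
# (MAX defaults to 1, an illustrative tolerance, not an NTP requirement)
skew_ok() {
  (( $(clock_skew "$1" "$2") <= ${3:-1} ))
}
```

Usage from tom1: `skew_ok "$(date +%s)" "$(ssh tom2 date +%s)" || echo "tom1 and tom2 clocks differ"`.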

4. Make proxy the management node for the other nodes:

# ssh-keygen -t rsa -P ""

# ssh-copy-id -i .ssh/id_rsa.pub root@172.16.31.50

# ssh-copy-id -i .ssh/id_rsa.pub root@172.16.31.51

From proxy, copy the package that both tom1 and tom2 need:

# for i in {1..2}; do scp jdk-7u67-linux-x64.rpm tom$i:/root ; done

I. Configure the back-end Tomcat nodes

Perform the following on both nodes.

1. Install the JDK; the package is already on tom1 and tom2:

jdk-7u67-linux-x64.rpm

[root@proxy ~]# for i in {1..2}; do ssh tom$i "rpm -ivh jdk-7u67-linux-x64.rpm" ;done

Check the installation:

[root@tom1 ~]# ls /usr/java/

default jdk1.7.0_67 latest

[root@tom1 ~]# cd /usr/java/jdk1.7.0_67/

[root@tom1 jdk1.7.0_67]# ls

bin lib src.zip

COPYRIGHT LICENSE THIRDPARTYLICENSEREADME-JAVAFX.txt

db man THIRDPARTYLICENSEREADME.txt

include README.html

jre release

2. Configure the JDK environment variables

# vim /etc/profile.d/java.sh

export JAVA_HOME=/usr/java/latest

export PATH=$JAVA_HOME/bin:$PATH

Load the variables:

# source /etc/profile.d/java.sh

Show the Java version and the JRE runtime:

# java -version

java version "1.7.0_67"

Java(TM) SE Runtime Environment (build 1.7.0_67-b01)

Java HotSpot(TM) 64-Bit Server VM (build 24.65-b04, mixed mode)

3. Install Tomcat

Tomcat package:

apache-tomcat-7.0.55.tar.gz

Install Tomcat:

[root@proxy ~]# for i in {1..2}; do ssh tom$i "tar xf apache-tomcat-7.0.55.tar.gz -C /usr/local" ; done

Create a symbolic link (shown on tom1; repeat on tom2):

[root@tom1 ~]# cd /usr/local/

[root@tom1 local]# ln -sv apache-tomcat-7.0.55/ tomcat

`tomcat' -> `apache-tomcat-7.0.55/'

[root@tom1 local]# cd tomcat

[root@tom1 tomcat]# ls

bin lib logs RELEASE-NOTES temp work

conf LICENSE NOTICE RUNNING.txt webapps

4. Configure the Tomcat environment variables:

# vim /etc/profile.d/tomcat.sh

export CATALINA_HOME=/usr/local/tomcat

export PATH=$CATALINA_HOME/bin:$PATH

Load the environment variables:

# source /etc/profile.d/tomcat.sh

5. Tomcat started by root runs as root, which is insecure, so create an unprivileged user for it

Note: avoid running Tomcat as root in production

[root@proxy ~]# for i in {1..2}; do ssh tom$i "groupadd -g 280 tomcat;useradd -g 280 -u 280 tomcat" ; done

Check the tomcat user's IDs on tom1 and tom2:

[root@proxy ~]# for i in {1..2}; do ssh tom$i "id tomcat" ; done

uid=280(tomcat) gid=280(tomcat) groups=280(tomcat)

uid=280(tomcat) gid=280(tomcat) groups=280(tomcat)

6. Give the tomcat user ownership of the Tomcat installation directory

[root@proxy ~]# for i in {1..2}; do ssh tom$i "chown -R tomcat:tomcat /usr/local/tomcat/" ; done

7. Build the Tomcat service script

Create the tomcat script on the proxy management node:

#!/bin/bash

#chkconfig: - 95 5

#description: Tomcat servlet container.

JAVA_HOME=/usr/java/latest

CATALINA_HOME=/usr/local/tomcat

export JAVA_HOME CATALINA_HOME

case $1 in

start)

exec $CATALINA_HOME/bin/catalina.sh start

;;

stop)

exec $CATALINA_HOME/bin/catalina.sh stop

;;

restart)

$CATALINA_HOME/bin/catalina.sh stop

sleep 1

exec $CATALINA_HOME/bin/catalina.sh start

;;

*)

exec $CATALINA_HOME/bin/catalina.sh $*

;;

esac

Make it executable:

[root@proxy ~]# chmod +x tomcat

Copy it to the Tomcat nodes:

[root@proxy ~]# for i in {1..2}; do scp tomcat tom$i:/etc/init.d/ ; done

Register it as a system service:

[root@proxy ~]# for i in {1..2}; do ssh tom$i "chkconfig --add tomcat" ; done

[root@proxy ~]# for i in {1..2}; do ssh tom$i "chkconfig --list tomcat" ; done

tomcat 0:off 1:off 2:off 3:off 4:off 5:off 6:off

tomcat 0:off 1:off 2:off 3:off 4:off 5:off 6:off

8. Start the Tomcat service and test it

Tomcat can also be started after switching to the tomcat user, but for convenience we start it directly as root here.

[root@proxy ~]# for i in {1..2}; do ssh tom$i "service tomcat start" ; done

Tomcat started.

Tomcat started.

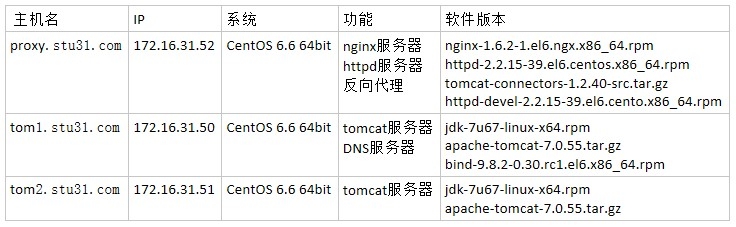

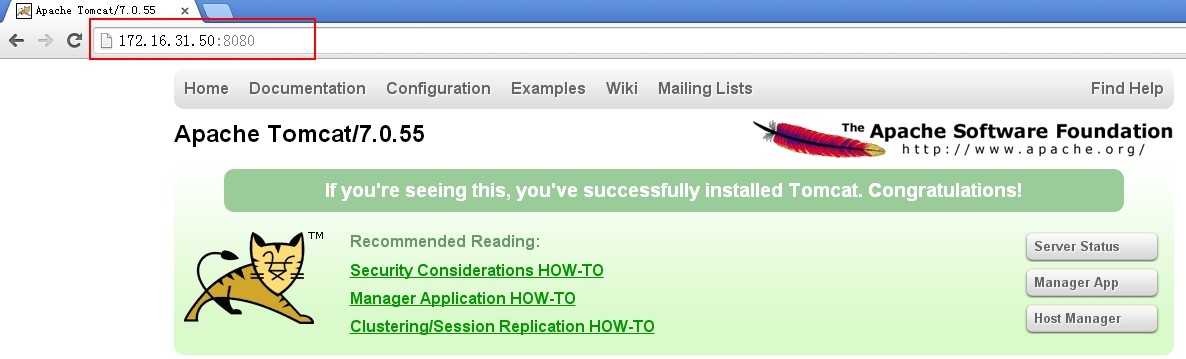

Visit the Tomcat test pages on tom1 and tom2:

9. Deploy a webapp

From the management node, create the webapp directory and the WEB-INF/classes and WEB-INF/lib directories it needs:

[root@proxy ~]# for i in {1..2}; do ssh tom$i "mkdir -p /usr/local/tomcat/webapps/testapp/WEB-INF/{classes,lib}" ; done

On tom1 and tom2, create the Tomcat test page:

Node tom1:

[root@tom1 testapp]# pwd

/usr/local/tomcat/webapps/testapp

[root@tom1 testapp]# vim index.jsp

<%@ page language="java" %>

<html>

<head><title>TomcatA</title></head>

<body>

<h1><font color="red">TomcatA.stu31.com</font></h1>

<table align="center" border="1">

<tr>

<td>Session ID</td>

<% session.setAttribute("stu31.com","stu31.com"); %>

<td><%= session.getId() %></td>

</tr>

<tr>

<td>Created on</td>

<td><%= session.getCreationTime() %></td>

</tr>

</table>

</body>

</html>

Node tom2:

[root@tom2 testapp]# pwd

/usr/local/tomcat/webapps/testapp

[root@tom2 testapp]# vim index.jsp

<%@ page language="java" %>

<html>

<head><title>TomcatB </title></head>

<body>

<h1><font color="red">TomcatB.stu31.com</font></h1>

<table align="center" border="1">

<tr>

<td>Session ID</td>

<% session.setAttribute("stu31.com","stu31.com"); %>

<td><%= session.getId() %></td>

</tr>

<tr>

<td>Created on</td>

<td><%= session.getCreationTime() %></td>

</tr>

</table>

</body>

</html>

Configure the virtual host settings on tom1 and tom2:

Node tom1:

[root@tom1 ~]# cd /usr/local/tomcat

[root@tom1 tomcat]# vim conf/server.xml

[root@tom1 tomcat]# cat conf/server.xml

<?xml version='1.0' encoding='utf-8'?>

<!--

Licensed to the Apache Software Foundation (ASF) under one or more

contributor license agreements. See the NOTICE file distributed with

this work for additional information regarding copyright ownership.

The ASF licenses this file to You under the Apache License, Version 2.0

(the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

<!-- Note: A "Server" is not itself a "Container", so you may not

define subcomponents such as "Valves" at this level.

Documentation at /docs/config/server.html

-->

<Server port="8005" shutdown="SHUTDOWN">

<!-- Security listener. Documentation at /docs/config/listeners.html

<Listener className="org.apache.catalina.security.SecurityListener" />

-->

<!--APR library loader. Documentation at /docs/apr.html -->

<Listener className="org.apache.catalina.core.AprLifecycleListener" SSLEngine="on" />

<!--Initialize Jasper prior to webapps are loaded. Documentation at /docs/jasper-howto.html -->

<Listener className="org.apache.catalina.core.JasperListener" />

<!-- Prevent memory leaks due to use of particular java/javax APIs-->

<Listener className="org.apache.catalina.core.JreMemoryLeakPreventionListener" />

<Listener className="org.apache.catalina.mbeans.GlobalResourcesLifecycleListener" />

<Listener className="org.apache.catalina.core.ThreadLocalLeakPreventionListener" />

<!-- Global JNDI resources

Documentation at /docs/jndi-resources-howto.html

-->

<GlobalNamingResources>

<!-- Editable user database that can also be used by

UserDatabaseRealm to authenticate users

-->

<Resource name="UserDatabase" auth="Container"

type="org.apache.catalina.UserDatabase"

description="User database that can be updated and saved"

factory="org.apache.catalina.users.MemoryUserDatabaseFactory"

pathname="conf/tomcat-users.xml" />

</GlobalNamingResources>

<!-- A "Service" is a collection of one or more "Connectors" that share

a single "Container" Note: A "Service" is not itself a "Container",

so you may not define subcomponents such as "Valves" at this level.

Documentation at /docs/config/service.html

-->

<Service name="Catalina">

<!--The connectors can use a shared executor, you can define one or more named thread pools-->

<!--

<Executor name="tomcatThreadPool" namePrefix="catalina-exec-"

maxThreads="150" minSpareThreads="4"/>

-->

<!-- A "Connector" represents an endpoint by which requests are received

and responses are returned. Documentation at :

Java HTTP Connector: /docs/config/http.html (blocking & non-blocking)

Java AJP Connector: /docs/config/ajp.html

APR (HTTP/AJP) Connector: /docs/apr.html

Define a non-SSL HTTP/1.1 Connector on port 8080

-->

<!-- The HTTP port stays at 8080; the reverse proxy forwards requests directly to it -->

<Connector port="8080" protocol="HTTP/1.1"

connectionTimeout="20000"

redirectPort="8443" />

<!-- A "Connector" using the shared thread pool-->

<!--

<Connector executor="tomcatThreadPool"

port="8080" protocol="HTTP/1.1"

connectionTimeout="20000"

redirectPort="8443" />

-->

<!-- Define a SSL HTTP/1.1 Connector on port 8443

This connector uses the BIO implementation that requires the JSSE

style configuration. When using the APR/native implementation, the

OpenSSL style configuration is required as described in the APR/native

documentation -->

<!--

<Connector port="8443" protocol="org.apache.coyote.http11.Http11Protocol"

maxThreads="150" SSLEnabled="true" scheme="https" secure="true"

clientAuth="false" sslProtocol="TLS" />

-->

<!-- Define an AJP 1.3 Connector on port 8009 -->

<Connector port="8009" protocol="AJP/1.3" redirectPort="8443" />

<!-- An Engine represents the entry point (within Catalina) that processes

every request. The Engine implementation for Tomcat stand alone

analyzes the HTTP headers included with the request, and passes them

on to the appropriate Host (virtual host).

Documentation at /docs/config/engine.html -->

<!-- You should set jvmRoute to support load-balancing via AJP ie :

<Engine name="Catalina" defaultHost="localhost" jvmRoute="jvm1">

-->

<!-- Change the default host name -->

<Engine name="Catalina" defaultHost="tom1.stu31.com">

<!--For clustering, please take a look at documentation at:

/docs/cluster-howto.html (simple how to)

/docs/config/cluster.html (reference documentation) -->

<!--

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"/>

-->

<!-- Use the LockOutRealm to prevent attempts to guess user passwords

via a brute-force attack -->

<Realm className="org.apache.catalina.realm.LockOutRealm">

<!-- This Realm uses the UserDatabase configured in the global JNDI

resources under the key "UserDatabase". Any edits

that are performed against this UserDatabase are immediately

available for use by the Realm. -->

<Realm className="org.apache.catalina.realm.UserDatabaseRealm"

resourceName="UserDatabase"/>

</Realm>

<!-- Change the host name and add a Context for the new webapp -->

<Host name="tom1.stu31.com" appBase="webapps"

unpackWARs="true" autoDeploy="true">

<Context path="" docBase="testapp" reloadable="true" />

<!-- SingleSignOn valve, share authentication between web applications

Documentation at: /docs/config/valve.html -->

<!--

<Valve className="org.apache.catalina.authenticator.SingleSignOn" />

-->

<!-- Access log processes all example.

Documentation at: /docs/config/valve.html

Note: The pattern used is equivalent to using pattern="common" -->

<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"

prefix="localhost_access_log." suffix=".txt"

pattern="%h %l %u %t &quot;%r&quot; %s %b" />

</Host>

</Engine>

</Service>

</Server>

Configuration of node tom2:

[root@tom2 ~]# cd /usr/local/tomcat

[root@tom2 tomcat]# vim conf/server.xml

[root@tom2 tomcat]# cat conf/server.xml

<?xml version='1.0' encoding='utf-8'?>

<!--

Licensed to the Apache Software Foundation (ASF) under one or more

contributor license agreements. See the NOTICE file distributed with

this work for additional information regarding copyright ownership.

The ASF licenses this file to You under the Apache License, Version 2.0

(the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

<!-- Note: A "Server" is not itself a "Container", so you may not

define subcomponents such as "Valves" at this level.

Documentation at /docs/config/server.html

-->

<Server port="8005" shutdown="SHUTDOWN">

<!-- Security listener. Documentation at /docs/config/listeners.html

<Listener className="org.apache.catalina.security.SecurityListener" />

-->

<!--APR library loader. Documentation at /docs/apr.html -->

<Listener className="org.apache.catalina.core.AprLifecycleListener" SSLEngine="on" />

<!--Initialize Jasper prior to webapps are loaded. Documentation at /docs/jasper-howto.html -->

<Listener className="org.apache.catalina.core.JasperListener" />

<!-- Prevent memory leaks due to use of particular java/javax APIs-->

<Listener className="org.apache.catalina.core.JreMemoryLeakPreventionListener" />

<Listener className="org.apache.catalina.mbeans.GlobalResourcesLifecycleListener" />

<Listener className="org.apache.catalina.core.ThreadLocalLeakPreventionListener" />

<!-- Global JNDI resources

Documentation at /docs/jndi-resources-howto.html

-->

<GlobalNamingResources>

<!-- Editable user database that can also be used by

UserDatabaseRealm to authenticate users

-->

<Resource name="UserDatabase" auth="Container"

type="org.apache.catalina.UserDatabase"

description="User database that can be updated and saved"

factory="org.apache.catalina.users.MemoryUserDatabaseFactory"

pathname="conf/tomcat-users.xml" />

</GlobalNamingResources>

<!-- A "Service" is a collection of one or more "Connectors" that share

a single "Container" Note: A "Service" is not itself a "Container",

so you may not define subcomponents such as "Valves" at this level.

Documentation at /docs/config/service.html

-->

<Service name="Catalina">

<!--The connectors can use a shared executor, you can define one or more named thread pools-->

<!--

<Executor name="tomcatThreadPool" namePrefix="catalina-exec-"

maxThreads="150" minSpareThreads="4"/>

-->

<!-- A "Connector" represents an endpoint by which requests are received

and responses are returned. Documentation at :

Java HTTP Connector: /docs/config/http.html (blocking & non-blocking)

Java AJP Connector: /docs/config/ajp.html

APR (HTTP/AJP) Connector: /docs/apr.html

Define a non-SSL HTTP/1.1 Connector on port 8080

-->

<!-- The HTTP port stays at 8080; the reverse proxy forwards requests directly to it -->

<Connector port="8080" protocol="HTTP/1.1"

connectionTimeout="20000"

redirectPort="8443" />

<!-- A "Connector" using the shared thread pool-->

<!--

<Connector executor="tomcatThreadPool"

port="8080" protocol="HTTP/1.1"

connectionTimeout="20000"

redirectPort="8443" />

-->

<!-- Define a SSL HTTP/1.1 Connector on port 8443

This connector uses the BIO implementation that requires the JSSE

style configuration. When using the APR/native implementation, the

OpenSSL style configuration is required as described in the APR/native

documentation -->

<!--

<Connector port="8443" protocol="org.apache.coyote.http11.Http11Protocol"

maxThreads="150" SSLEnabled="true" scheme="https" secure="true"

clientAuth="false" sslProtocol="TLS" />

-->

<!-- Define an AJP 1.3 Connector on port 8009 -->

<Connector port="8009" protocol="AJP/1.3" redirectPort="8443" />

<!-- An Engine represents the entry point (within Catalina) that processes

every request. The Engine implementation for Tomcat stand alone

analyzes the HTTP headers included with the request, and passes them

on to the appropriate Host (virtual host).

Documentation at /docs/config/engine.html -->

<!-- You should set jvmRoute to support load-balancing via AJP ie :

<Engine name="Catalina" defaultHost="localhost" jvmRoute="jvm1">

-->

<!-- Change the default host name -->

<Engine name="Catalina" defaultHost="tom2.stu31.com">

<!--For clustering, please take a look at documentation at:

/docs/cluster-howto.html (simple how to)

/docs/config/cluster.html (reference documentation) -->

<!--

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"/>

-->

<!-- Use the LockOutRealm to prevent attempts to guess user passwords

via a brute-force attack -->

<Realm className="org.apache.catalina.realm.LockOutRealm">

<!-- This Realm uses the UserDatabase configured in the global JNDI

resources under the key "UserDatabase". Any edits

that are performed against this UserDatabase are immediately

available for use by the Realm. -->

<Realm className="org.apache.catalina.realm.UserDatabaseRealm"

resourceName="UserDatabase"/>

</Realm>

<!-- Change the host name and add a Context for the new webapp -->

<Host name="tom2.stu31.com" appBase="webapps"

unpackWARs="true" autoDeploy="true">

<Context path="" docBase="testapp" reloadable="true" />

<!-- SingleSignOn valve, share authentication between web applications

Documentation at: /docs/config/valve.html -->

<!--

<Valve className="org.apache.catalina.authenticator.SingleSignOn" />

-->

<!-- Access log processes all example.

Documentation at: /docs/config/valve.html

Note: The pattern used is equivalent to using pattern="common" -->

<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"

prefix="localhost_access_log." suffix=".txt"

pattern="%h %l %u %t &quot;%r&quot; %s %b" />

</Host>

</Engine>

</Service>

</Server>

10. Once everything is deployed on the nodes, start Tomcat and test access:

Check the configuration files from the management node:

[root@proxy ~]# for i in {1..2} ; do ssh tom$i "catalina.sh configtest" ; done

Jan 15, 2015 8:37:57 AM org.apache.catalina.core.AprLifecycleListener init

INFO: The APR based Apache Tomcat Native library which allows optimal performance in production environments was not found on the java.library.path: /usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

Jan 15, 2015 8:37:59 AM org.apache.coyote.AbstractProtocol init

INFO: Initializing ProtocolHandler ["http-bio-8080"]

Jan 15, 2015 8:37:59 AM org.apache.coyote.AbstractProtocol init

INFO: Initializing ProtocolHandler ["ajp-bio-8009"]

Jan 15, 2015 8:37:59 AM org.apache.catalina.startup.Catalina load

INFO: Initialization processed in 3987 ms

Jan 15, 2015 8:38:05 AM org.apache.catalina.core.AprLifecycleListener init

INFO: The APR based Apache Tomcat Native library which allows optimal performance in production environments was not found on the java.library.path: /usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

Jan 15, 2015 8:38:07 AM org.apache.coyote.AbstractProtocol init

INFO: Initializing ProtocolHandler ["http-bio-8080"]

Jan 15, 2015 8:38:07 AM org.apache.coyote.AbstractProtocol init

INFO: Initializing ProtocolHandler ["ajp-bio-8009"]

Jan 15, 2015 8:38:07 AM org.apache.catalina.startup.Catalina load

INFO: Initialization processed in 4235 ms

Start Tomcat from the management node:

[root@proxy ~]# for i in {1..2} ; do ssh tom$i "catalina.sh start" ; done

Tomcat started.

Tomcat started.

Check the ports Tomcat listens on from the management node:

[root@proxy ~]# for i in {1..2} ; do ssh tom$i "ss -tnul | grep -E ':80(05|09|80)'" ; done

tcp LISTEN 0 1 ::ffff:127.0.0.1:8005 :::*

tcp LISTEN 0 100 :::8009 :::*

tcp LISTEN 0 100 :::8080 :::*

tcp LISTEN 0 1 ::ffff:127.0.0.1:8005 :::*

tcp LISTEN 0 100 :::8009 :::*

tcp LISTEN 0 100 :::8080 :::*
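Instead of reading the table by eye, the `ss` output can be checked for the three ports Tomcat opens here (8005 shutdown, 8009 AJP, 8080 HTTP). A minimal sketch; `missing_ports` is a hypothetical helper that prints each expected port that has no LISTEN line.

```bash
# missing_ports PORT...  (reads `ss` output on stdin)
# Hypothetical helper: prints every expected port that has no LISTEN line.
missing_ports() {
  local out port
  out=$(cat)
  for port in "$@"; do
    grep -Eq "LISTEN.*:${port}[[:space:]]" <<<"$out" || echo "$port"
  done
}
```

For example, `ssh tom1 "ss -tnl" | missing_ports 8005 8009 8080` prints nothing when all three ports are listening.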

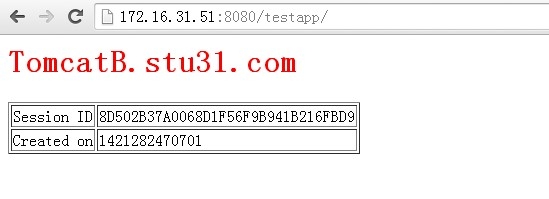

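Test access from a Windows XP client;

The Tomcat environment on tom1 and tom2 is now ready. Next come the load-balancing experiments.

In this lab the tom2 node also provides DNS resolution; for DNS server configuration see: http://sohudrgon.blog.51cto.com/3088108/1588344

II. Reverse proxy configuration on the proxy node (1): load-balance requests to the back-end Tomcat nodes with nginx

1. Install nginx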

[root@proxy ~]# yum install nginx-1.6.2-1.el6.ngx.x86_64.rpm

2. Configure nginx as a reverse proxy

[root@proxy nginx]# pwd

/etc/nginx

Add the back-end Tomcat nodes to the main configuration file:

[root@proxy nginx]# vim nginx.conf

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

#tcp_nopush on;

keepalive_timeout 65;

#gzip on;

upstream tcsrvs {

server 172.16.31.50:8080;

server 172.16.31.51:8080;

}

include /etc/nginx/conf.d/*.conf;

}

Configure the default server to reverse-proxy user requests to the back-end Tomcat nodes:

[root@proxy nginx]# vim conf.d/default.conf

server {

listen 80;

server_name localhost;

#charset koi8-r;

#access_log /var/log/nginx/log/host.access.log main;

location / {

proxy_pass http://tcsrvs;

}

#error_page 404 /404.html;

# redirect server error pages to the static page /50x.html

#

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /usr/share/nginx/html;

}

}

3. Check the configuration syntax, start nginx, and test access

Check the syntax:

[root@proxy nginx]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

Start nginx:

[root@proxy nginx]# service nginx start

Starting nginx: [ OK ]

4. Bind sessions with ip_hash-based load balancing

[root@proxy nginx]# vim nginx.conf

upstream tcsrvs {

ip_hash;

server 172.16.31.50:8080;

server 172.16.31.51:8080;

}
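To see why `ip_hash` binds a client to one back end, the selection can be approximated in bash. This sketch assumes equal weights and no failed peers: it hashes the first three octets of the client's IPv4 address with the constants used in nginx's ip_hash module (89, 113, 6271) and takes the result modulo the number of servers. The real module also handles weights and retries, so treat the mapping as illustrative rather than a prediction; `ip_hash_pick` is a hypothetical name.

```bash
# ip_hash_pick CLIENT_IPV4 BACKEND...
# Approximation of nginx ip_hash for equal-weight, healthy peers:
# hash the first three octets, then take the hash modulo the peer count.
ip_hash_pick() {
  local ip=$1; shift
  local -a octets backends=("$@")
  IFS=. read -r -a octets <<<"$ip"
  local hash=89 i
  for i in 0 1 2; do
    hash=$(( (hash * 113 + octets[i]) % 6271 ))
  done
  echo "${backends[hash % ${#backends[@]}]}"
}
```

Because only the first three octets are hashed, every client in one /24 network (for example all test machines in 172.16.31.0/24) lands on the same Tomcat node. When testing from a single lab subnet, this can look like no load balancing at all.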

Check the syntax:

[root@proxy nginx]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

5. Restart nginx and test access;

[root@proxy nginx]# service nginx restart

Stopping nginx: [ OK ]

Starting nginx: [ OK ]

Load balancing of requests from nginx to the back-end Tomcat nodes is now in place!

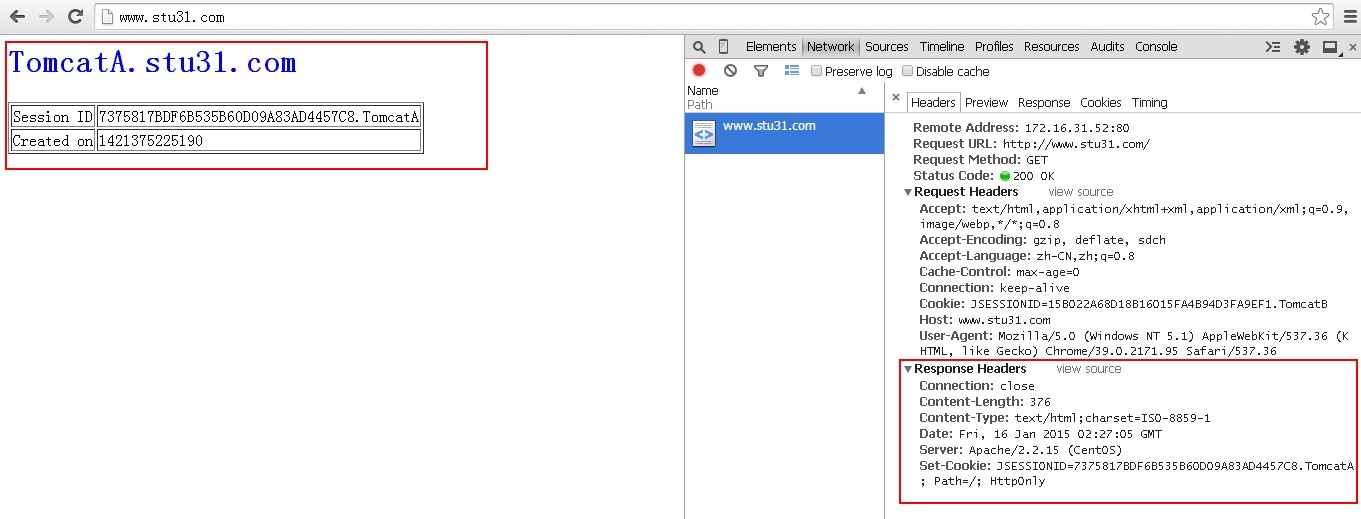
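The round-robin and ip_hash behavior can also be checked from a shell instead of a browser. A sketch, assuming curl on a client that resolves www.stu31.com to the proxy. `page_title` extracts the `<title>` of the test page (TomcatA or TomcatB), and `tally_backends` counts how many responses each node served. Both helper names are hypothetical.

```bash
# page_title: print the <title> text of an HTML page read on stdin,
# with whitespace removed (tom2's title is "TomcatB " with a trailing space)
page_title() {
  sed -n 's:.*<title>\([^<]*\)</title>.*:\1:p' | tr -d '[:space:]'
  echo
}

# tally_backends: count identical input lines, printing "name count"
tally_backends() {
  sort | uniq -c | awk '{ print $2, $1 }'
}
```

Usage: `for i in $(seq 10); do curl -s http://www.stu31.com/ | page_title; done | tally_backends`. Without ip_hash, the counts should be roughly even; with ip_hash, every request from the same client should show a single name.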

III. Reverse proxy configuration on the proxy node (2): load-balance requests to the back-end Tomcat nodes with httpd

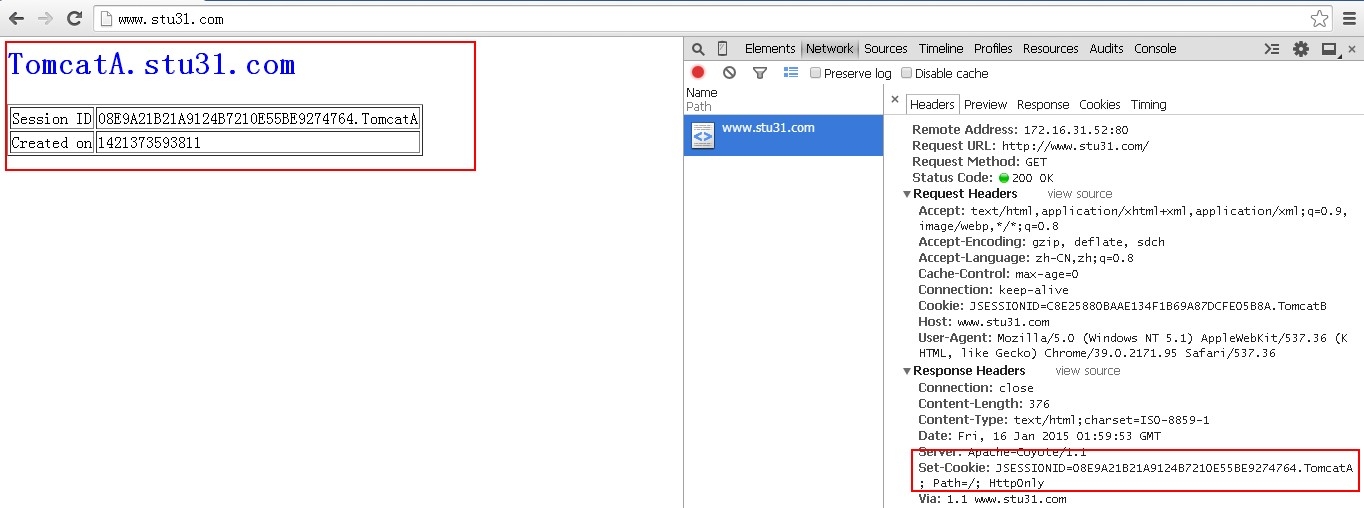

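Prerequisite: edit server.xml on the back-end nodes tom1 and tom2

Make sure the Engine element has a jvmRoute attribute; its value must match the route= of the corresponding BalancerMember (and, for mod_jk, the worker name);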

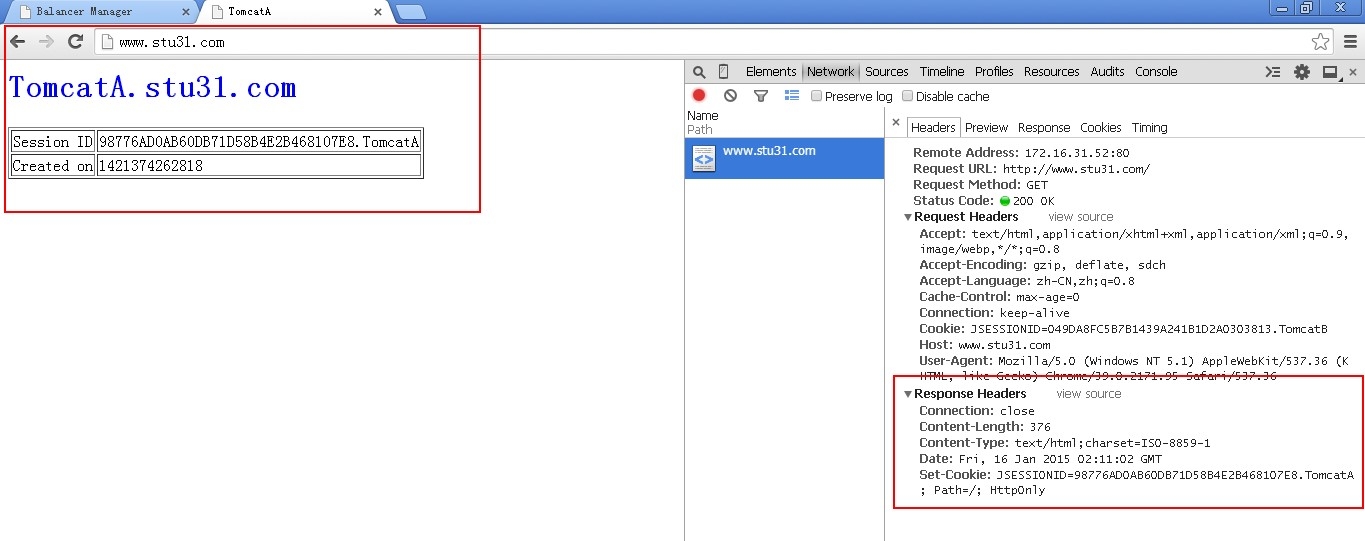

tom1:

<Engine name="Catalina" defaultHost="tom1.stu31.com" jvmRoute="TomcatA">

tom2:

<Engine name="Catalina" defaultHost="tom2.stu31.com" jvmRoute="TomcatB">
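Once jvmRoute is set, Tomcat appends "." plus the jvmRoute value to every session ID it issues, so the Session ID shown on the test page ends in .TomcatA or .TomcatB. A sticky balancer reads this suffix to route requests. A small bash helper that extracts it; `route_of` is a hypothetical name and the session ID in the usage line is made up.

```bash
# route_of SESSION_ID: print the jvmRoute suffix (text after the last '.'),
# or fail if the ID carries no route
route_of() {
  local sid=$1
  [[ $sid == *.* ]] || return 1
  printf '%s\n' "${sid##*.}"
}
```

Usage: `route_of 4A7C1E0B9D2F3A5B6C7D8E9F0A1B2C3D.TomcatA` prints `TomcatA`.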

To tell the two Tomcat nodes apart more easily, change the heading color of tom1's test page to blue:

[root@tom1 testapp]# pwd

/usr/local/tomcat/webapps/testapp

[root@tom1 testapp]# vim index.jsp

<%@ page language="java" %>

<html>

<head><title>TomcatA</title></head>

<body>

<h1><font color="blue">TomcatA.stu31.com</font></h1>

<table align="center" border="1">

<tr>

<td>Session ID</td>

<% session.setAttribute("stu31.com","stu31.com"); %>

<td><%= session.getId() %></td>

</tr>

<tr>

<td>Created on</td>

<td><%= session.getCreationTime() %></td>

</tr>

</table>

</body>

</html>

Restart Tomcat after making the changes.

1. Stop nginx so that it does not occupy port 80.

[root@proxy nginx]# service nginx stop

Stopping nginx: [ OK ]

2. Configure Apache to connect to Tomcat through proxy_http_module:

Check that the installed Apache provides the mod_proxy, mod_proxy_http, mod_proxy_ajp and proxy_balancer_module modules:

# httpd -M

……………………

proxy_module (shared)

proxy_balancer_module (shared)

proxy_ftp_module (shared)

proxy_http_module (shared)

proxy_ajp_module (shared)

proxy_connect_module (shared)

……………………

3. Edit the global section of httpd.conf:

[root@proxy ~]# vim /etc/httpd/conf/httpd.conf

#Comment out the following line

#DocumentRoot "/var/www/html"

4. Create a virtual host configuration file that load-balances user requests across the back-end Tomcat servers;

[root@proxy ~]# vim /etc/httpd/conf.d/mod_proxy_http.conf

NameVirtualHost *:80

<VirtualHost *:80>

ServerName www.stu31.com

ProxyVia on

ProxyRequests off

ProxyPreserveHost on

<proxy balancer://tcsrvs>

BalancerMember http://172.16.31.50:8080 loadfactor=1 route=TomcatA

BalancerMember http://172.16.31.51:8080 loadfactor=1 route=TomcatB

</proxy>

ProxyPass / balancer://tcsrvs/

ProxyPassReverse / balancer://tcsrvs/

<Proxy *>

Order deny,allow

Allow from all

</Proxy>

<Location />

Order deny,allow

Allow from all

</Location>

</VirtualHost>

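Notes on the Apache directives above:

ProxyPreserveHost {On|Off}: when enabled, the proxy passes the Host: line from the client's request to the back-end server instead of the server address given in ProxyPass. Enable it if the back end relies on name-based virtual hosts; otherwise it is not needed.

ProxyVia {On|Off|Full|Block}: controls the use of the Via: HTTP header, mainly to track the flow of requests through a chain of proxies. The default is Off, which disables the feature; On adds a Via: line to every request and response; Full additionally includes the Apache server version in each Via: line; Block removes all Via: lines from proxied requests.

ProxyRequests {On|Off}: whether to enable Apache's forward-proxy function; proxying HTTP in that mode requires mod_proxy_http. When Apache is used as a reverse proxy with ProxyPass, ProxyRequests should be set to Off.

ProxyPass [path] !|url [key=value [key=value ...]]: maps a URL on the back-end server into a virtual path on the local server; path is the local virtual path and url is the URL on the back-end server. Using ! instead of a url excludes path from proxying. ProxyRequests should be Off when this directive is used. Note that if path ends with "/", url must also end with "/", and vice versa.

In addition, since httpd 2.1 mod_proxy supports connection pooling to back-end servers: connections are created on demand and can then be kept in the pool for reuse. The pool size and other settings are defined with key=value pairs on the ProxyPass line. Common keys:

◇ min: the minimum size of the connection pool; it is unrelated to the actual number of connections and only sets how much pool space is initialized.

◇ max: the maximum size of the connection pool. The pool belongs to each child process, so the default depends on the MPM: with prefork it is always 1, and with the other MPMs it depends on the ThreadsPerChild value.

◇ loadfactor: used in load-balancing configurations to set the weight of a back-end server; valid values are 1-100.

◇ retry: if a back-end server is in the error state, the number of seconds Apache waits before sending requests to it again.

If the Proxy URL starts with balancer://, i.e. it defines a load-balancing cluster, it also accepts the following special parameters:

◇ lbmethod: the scheduling method Apache uses for load balancing. The default is byrequests, which counts requests weighted by loadfactor; bytraffic counts traffic in bytes, weighted by loadfactor; bybusyness schedules according to the current load of each back-end server.

◇ maxattempts: the number of failover attempts before giving up on a request; the default is 1, and it should not exceed the total number of nodes.

◇ nofailover: On or Off. When On, the user's session breaks if its back-end server fails; set it to On when the back-end servers do not support session replication.

◇ stickysession: the name of the balancer's sticky-session identifier; depending on the web application language it is typically JSESSIONID (Java) or PHPSESSID (PHP).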

Besides being set in balancer:// or on ProxyPass, these parameters can also be set directly with the ProxySet directive, for example:

<Proxy balancer://tcsrvs>

BalancerMember http://172.16.31.50:8080 loadfactor=1

BalancerMember http://172.16.31.51:8080 loadfactor=2

ProxySet lbmethod=byrequests

</Proxy>
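The default byrequests method can be illustrated with a short simulation. The sketch follows the request-counting pseudocode in the Apache httpd documentation for byrequests. On every request, each member's lbstatus grows by its loadfactor and the member with the highest lbstatus is chosen. The sum of all loadfactors is then subtracted from the winner. The function name and its NAME=FACTOR argument format are hypothetical.

```bash
# byrequests_sim N NAME=FACTOR...
# Simulates N requests with the byrequests counting scheme and prints the
# chosen member for each request, separated by spaces.
byrequests_sim() {
  local n=$1; shift
  local -a names=() factors=() status=() picks=()
  local pair req i total cand
  for pair in "$@"; do
    names+=("${pair%%=*}")
    factors+=("${pair#*=}")
    status+=(0)
  done
  for (( req = 0; req < n; req++ )); do
    total=0
    cand=0
    for i in "${!names[@]}"; do
      status[i]=$(( status[i] + factors[i] ))   # every member earns its loadfactor
      total=$(( total + factors[i] ))
      if (( status[i] > status[cand] )); then cand=$i; fi  # highest lbstatus wins
    done
    status[cand]=$(( status[cand] - total ))    # the winner pays back the total
    picks+=("${names[cand]}")
  done
  echo "${picks[*]}"
}
```

With loadfactor 1 and 2 as in the ProxySet example, `byrequests_sim 6 TomcatA=1 TomcatB=2` prints `TomcatB TomcatA TomcatB TomcatB TomcatA TomcatB`, which is a 1:2 split.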

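ProxyPassReverse: makes Apache rewrite the URL in the Location, Content-Location and URI headers of HTTP redirect responses. It is required in a reverse-proxy setup so that redirects do not bypass the proxy server.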
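At its core, ProxyPassReverse performs a prefix substitution on those response headers. This minimal bash sketch applies that substitution to a single Location value, assuming the back-end prefix is a member URL such as http://172.16.31.50:8080/ (an illustrative value). The real module covers more headers and cases, and `rewrite_location` is a hypothetical name.

```bash
# rewrite_location LOCATION BACKEND_PREFIX FRONTEND_PREFIX
# Replaces a leading back-end prefix with the front-end prefix; other
# values pass through unchanged.
rewrite_location() {
  local loc=$1 backend=$2 frontend=$3
  if [[ $loc == "$backend"* ]]; then
    printf '%s\n' "${frontend}${loc#"$backend"}"
  else
    printf '%s\n' "$loc"
  fi
}
```

Usage: `rewrite_location http://172.16.31.50:8080/login.jsp http://172.16.31.50:8080/ http://www.stu31.com/` prints `http://www.stu31.com/login.jsp`.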

5. Check the configuration syntax and start httpd;

[root@proxy conf.d]# httpd -t

Syntax OK

[root@proxy conf.d]# service httpd start

Starting httpd: [ OK ]

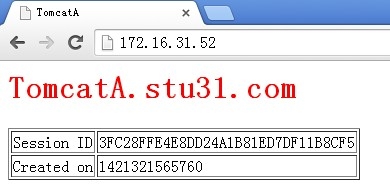

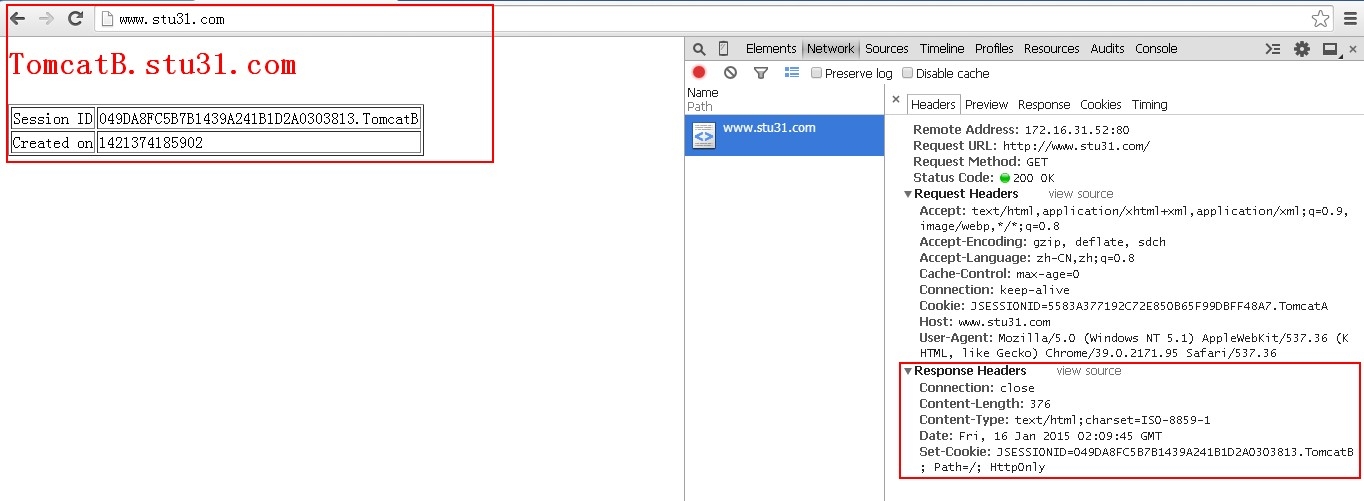

6. Test access:

Load balancing from httpd to the back-end Tomcat nodes is now working.

7. Bind sessions and enable the load-balancer manager page:

[root@proxy conf.d]# pwd

/etc/httpd/conf.d

[root@proxy conf.d]# vim mod_proxy_http.conf

NameVirtualHost *:80

<VirtualHost *:80>

ServerName www.stu31.com

ProxyVia on

ProxyRequests off

ProxyPreserveHost on

<proxy balancer://tcsrvs>

BalancerMember http://172.16.31.50:8080 loadfactor=1 route=TomcatA

BalancerMember http://172.16.31.51:8080 loadfactor=1 route=TomcatB

</proxy>

# Define the load-balancer manager page

<Location /lbmanager>

SetHandler balancer-manager

</Location>

# Do not proxy the manager page itself

ProxyPass /lbmanager !

# Enable session binding

ProxyPass / balancer://tcsrvs/ stickysession=JSESSIONID

ProxyPassReverse / balancer://tcsrvs/

<Proxy *>

Order deny,allow

Allow from all

</Proxy>

<Location />

Order deny,allow

Allow from all

</Location>

</VirtualHost>
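With stickysession=JSESSIONID, the balancer reads the route suffix of the JSESSIONID value. If that suffix matches a BalancerMember's route=, the request goes to that member; otherwise the balancer falls back to the normal lbmethod. The sketch below models only the cookie part of that decision (mod_proxy_balancer also checks a ;jsessionid= path parameter in the URL). `sticky_member` is a hypothetical helper.

```bash
# sticky_member COOKIE_HEADER ROUTE...
# Hypothetical sketch of cookie-based sticky routing: find JSESSIONID,
# take its route suffix, and print it if it names a configured member.
# Exit status 1 means "no sticky target" (the balancer would use lbmethod).
sticky_member() {
  local header=$1; shift
  local -a cookies
  local cookie sid="" route r
  IFS=';' read -r -a cookies <<<"$header"
  for cookie in "${cookies[@]}"; do
    cookie=${cookie#"${cookie%%[! ]*}"}   # trim leading spaces
    [[ $cookie == JSESSIONID=* ]] && sid=${cookie#JSESSIONID=}
  done
  [[ $sid == *.* ]] || return 1
  route=${sid##*.}
  for r in "$@"; do
    if [[ $r == "$route" ]]; then
      echo "$r"
      return 0
    fi
  done
  return 1
}
```

Usage: `sticky_member 'JSESSIONID=ABC123.TomcatB' TomcatA TomcatB` prints `TomcatB`.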

8. Restart httpd and test access:

[root@proxy conf.d]# service httpd restart

Stopping httpd: [ OK ]

Starting httpd: [ OK ]

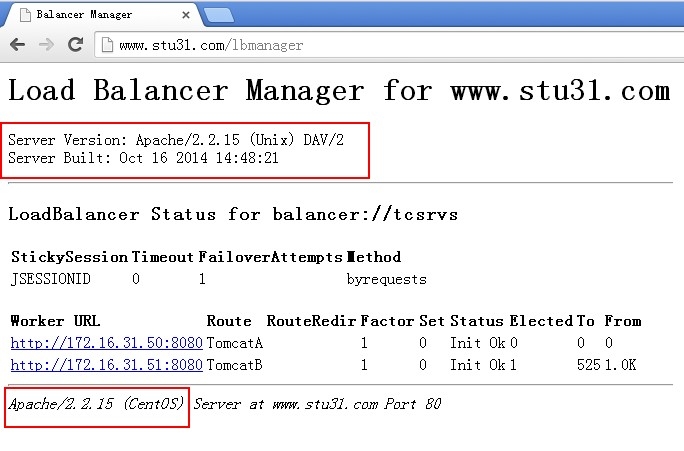

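The load-balancer manager page works as well, and simple management operations can be done from it.

The captured requests only reached the tom2 node, so session binding works;

9. Configure Apache to connect to Tomcat through mod_proxy_ajp

Copy the HTTP proxy configuration file as a starting point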

[root@proxy conf.d]# cp mod_proxy_http.conf mod_proxy_ajp.conf

[root@proxy conf.d]# ls

mod_dnssd.conf mod_proxy_ajp.conf mod_proxy_http.conf README welcome.conf

#Rename the earlier HTTP connector configuration; otherwise httpd will still load it.

[root@proxy conf.d]# mv mod_proxy_http.conf mod_proxy_http.conf.bak

Edit the AJP configuration file: just change the protocol from http to ajp (and the port to 8009):

[root@proxy conf.d]# vim mod_proxy_ajp.conf

NameVirtualHost *:80

<VirtualHost *:80>

ServerName www.stu31.com

ProxyVia on

ProxyRequests off

ProxyPreserveHost on

<proxy balancer://tcsrvs>

BalancerMember ajp://172.16.31.50:8009 loadfactor=1 route=TomcatA

BalancerMember ajp://172.16.31.51:8009 loadfactor=1 route=TomcatB

</proxy>

<Location /lbmanager>

SetHandler balancer-manager

</Location>

ProxyPass /lbmanager !

ProxyPass / balancer://tcsrvs/

ProxyPassReverse / balancer://tcsrvs/

<Proxy *>

Order deny,allow

Allow from all

</Proxy>

<Location />

Order deny,allow

Allow from all

</Location>

</VirtualHost>

10. Check the syntax, restart the service, and test access;

#httpd -t

#service httpd restart

11. Capture packets on port 8009 of a back-end Tomcat node with tcpdump

[root@tom1 ~]# tcpdump -nn -i eth0 port 8009

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth0, link-type EN10MB (Ethernet), capture size 65535 bytes

10:12:50.441165 IP 172.16.31.52.43213 > 172.16.31.50.8009: Flags [S], seq 3134086892, win 14600, options [mss 1460,sackOK,TS val 4063808 ecr 0,nop,wscale 5], length 0

10:12:50.441201 IP 172.16.31.50.8009 > 172.16.31.52.43213: Flags [S.], seq 1267158001, ack 3134086893, win 14480, options [mss 1460,sackOK,TS val 49992174 ecr 4063808,nop,wscale 6], length 0

10:12:50.441481 IP 172.16.31.52.43213 > 172.16.31.50.8009: Flags [.], ack 1, win 457, options [nop,nop,TS val 4063809 ecr 49992174], length 0

10:12:50.441676 IP 172.16.31.52.43213 > 172.16.31.50.8009: Flags [P.], seq 1:433, ack 1, win 457, options [nop,nop,TS val 4063809 ecr 49992174], length 432

10:12:50.441688 IP 172.16.31.50.8009 > 172.16.31.52.43213: Flags [.], ack 433, win 243, options [nop,nop,TS val 49992175 ecr 4063809], length 0

10:12:50.444041 IP 172.16.31.50.8009 > 172.16.31.52.43213: Flags [P.], seq 1:130, ack 433, win 243, options [nop,nop,TS val 49992177 ecr 4063809], length 129

10:12:50.444581 IP 172.16.31.50.8009 > 172.16.31.52.43213: Flags [P.], seq 130:514, ack 433, win 243, options [nop,nop,TS val 49992178 ecr 4063809], length 384

10:12:50.444718 IP 172.16.31.50.8009 > 172.16.31.52.43213: Flags [P.], seq 514:520, ack 433, win 243, options [nop,nop,TS val 49992178 ecr 4063809], length 6

10:12:50.446793 IP 172.16.31.52.43213 > 172.16.31.50.8009: Flags [.], ack 130, win 490, options [nop,nop,TS val 4063813 ecr 49992177], length 0

10:12:50.446807 IP 172.16.31.52.43213 > 172.16.31.50.8009: Flags [.], ack 514, win 524, options [nop,nop,TS val 4063814 ecr 49992178], length 0

10:12:50.446810 IP 172.16.31.52.43213 > 172.16.31.50.8009: Flags [.], ack 520, win 524, options [nop,nop,TS val 4063814 ecr 49992178], length 0
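The capture above can also be summarized per direction by adding up the `length` field of each packet. A sketch, assuming tcpdump's default one-line TCP output format shown here; `ajp_payload_bytes` is a hypothetical helper.

```bash
# ajp_payload_bytes [PORT]  (reads tcpdump -nn output on stdin)
# Sums the trailing "length N" field of each TCP line, split by direction
# relative to the back-end PORT (default 8009).
ajp_payload_bytes() {
  local port=${1:-8009}
  awk -v p=".$port" '
    function ends_with(s, suf) {
      return substr(s, length(s) - length(suf) + 1) == suf
    }
    / IP / {
      src = $3; dst = $5
      sub(/:$/, "", dst)                        # drop the colon after the destination
      if (ends_with(dst, p)) to += $NF          # client -> Tomcat
      else if (ends_with(src, p)) from += $NF   # Tomcat -> client
    }
    END { printf "to_backend %d\nfrom_backend %d\n", to, from }
  '
}
```

For the capture above, it reports 432 bytes sent to Tomcat (the forwarded request) and 519 bytes returned (129 + 384 + 6, matching the final sequence number 520). A live capture can be piped in the same way, for example `tcpdump -nn -l -c 20 -i eth0 port 8009 | ajp_payload_bytes 8009`.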

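Load balancing of client requests to the back-end servers through mod_proxy_ajp is now in place.

12. Bind sessions

Session binding is configured the same way as for mod_proxy_http above: just append stickysession=JSESSIONID to the ProxyPass line for the tcsrvs balancer.

The configuration is as follows: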

NameVirtualHost *:80

<VirtualHost *:80>

ServerName www.stu31.com

ProxyVia on

ProxyRequests off

ProxyPreserveHost on

<proxy balancer://tcsrvs>

BalancerMember ajp://172.16.31.50:8009 loadfactor=1 route=TomcatA

BalancerMember ajp://172.16.31.51:8009 loadfactor=1 route=TomcatB

</proxy>

<Location /lbmanager>

SetHandler balancer-manager

</Location>

ProxyPass /lbmanager !

ProxyPass / balancer://tcsrvs/ stickysession=JSESSIONID

ProxyPassReverse / balancer://tcsrvs/

<Proxy *>

Order deny,allow

Allow from all

</Proxy>

<Location />

Order deny,allow

Allow from all

</Location>

</VirtualHost>

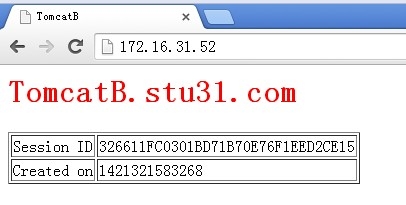

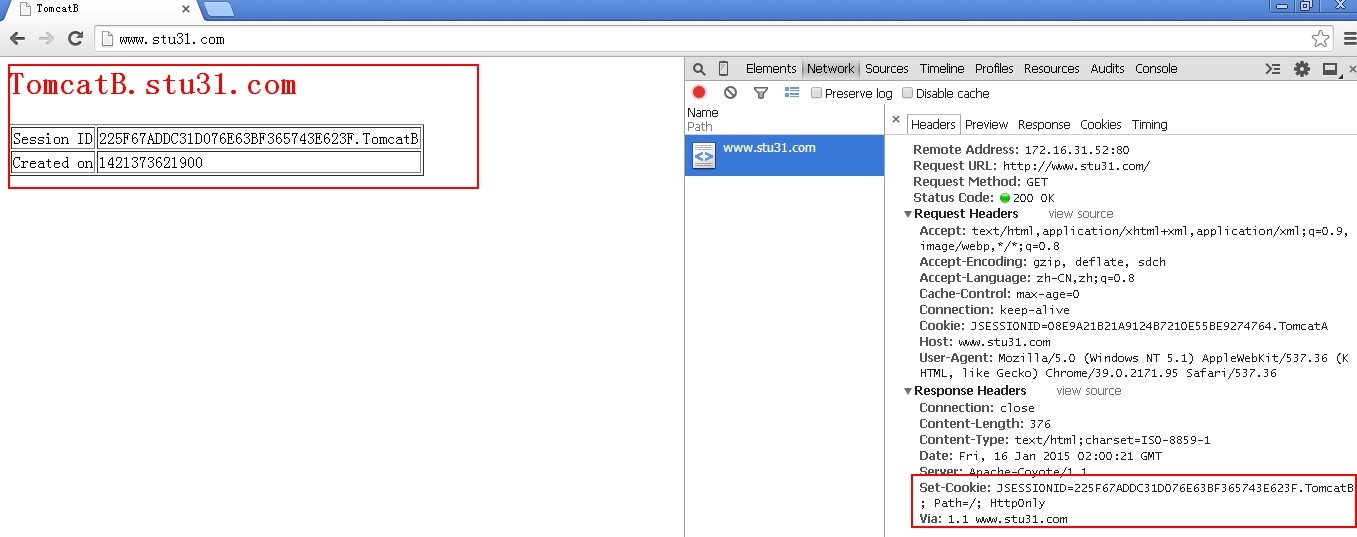

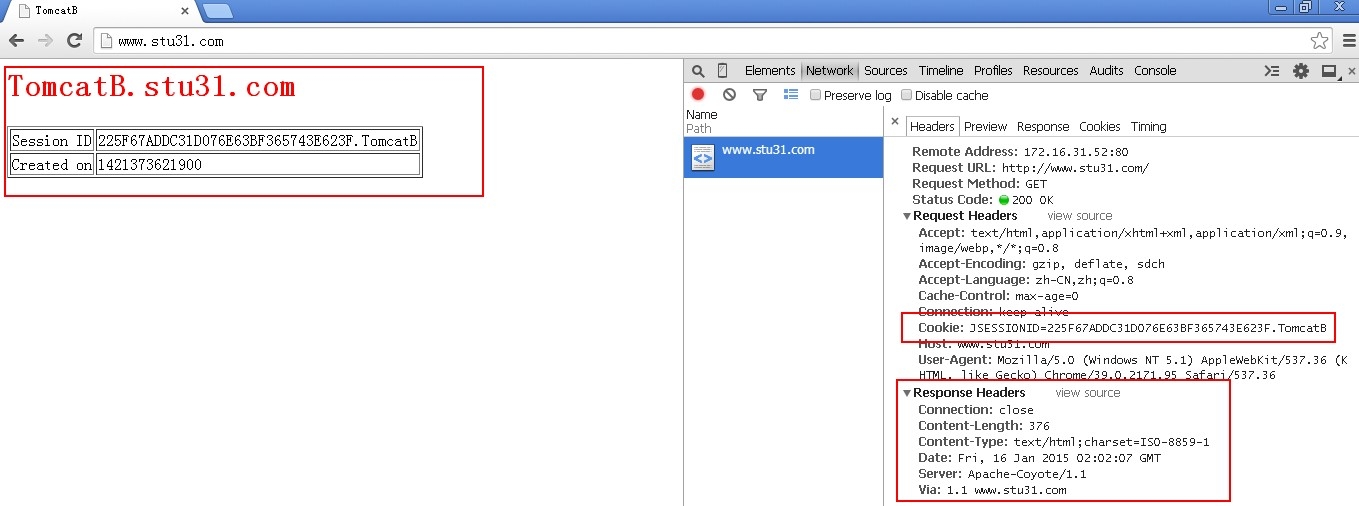

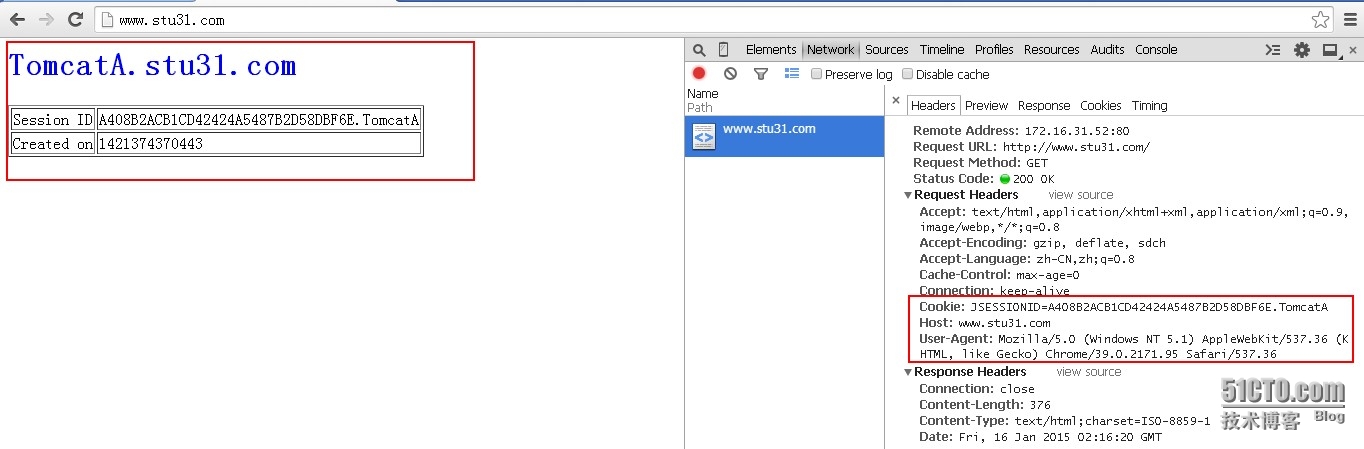

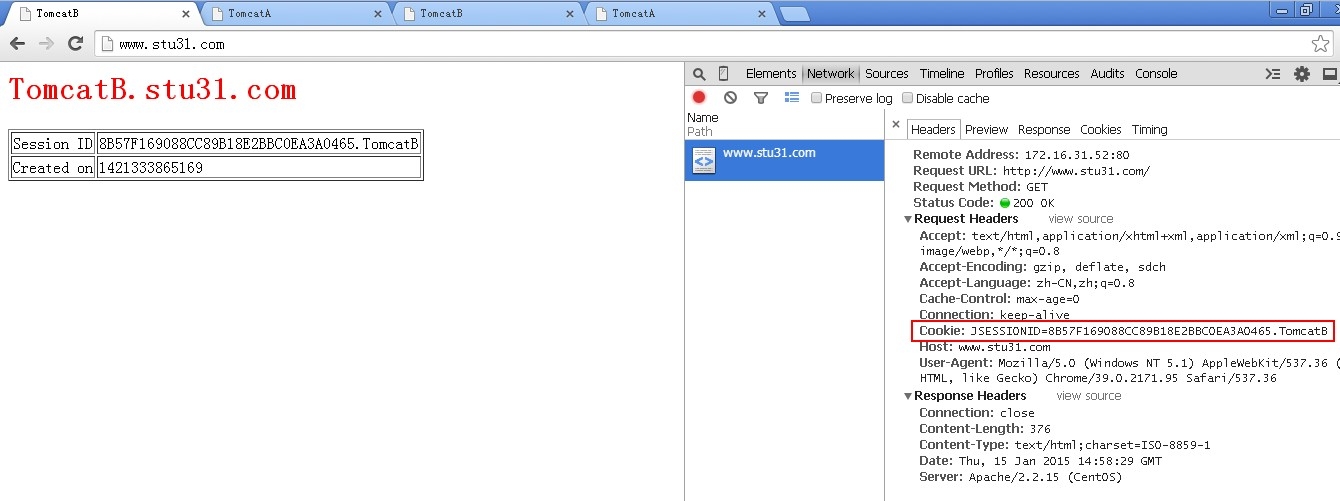

13. Restart httpd and test access; every refresh now lands on TomcatA:

#httpd -t

#service httpd restart

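You can also check the load-balancer manager page:

Session persistence for client requests proxied to the back-end servers through mod_proxy_ajp is now in place.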

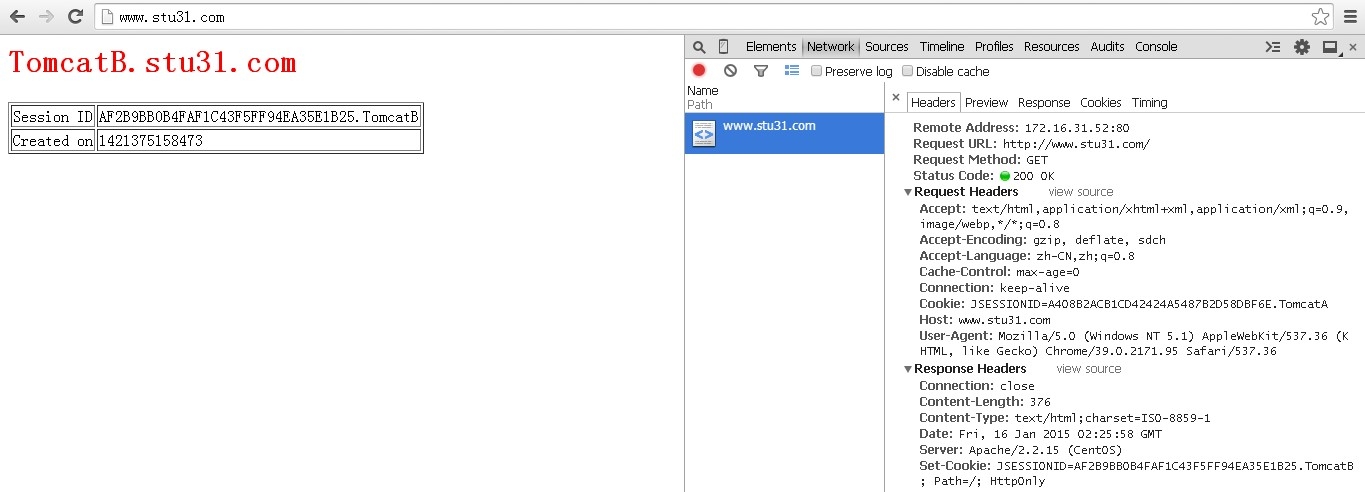

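IV. Reverse proxy configuration on the proxy node (3): load-balance requests to the back-end Tomcat nodes with mod_jk, with session binding

mod_jk is an ASF project: a connector that runs on the Apache side and talks to Tomcat over the AJP protocol. It is an Apache module and acts as the AJP client (the server side is Tomcat's AJP connector).

1. Set up the build environment

# yum groupinstall "Development Tools"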

# yum install -y httpd-devel

2. Build and install the mod_jk module (distributed separately from httpd)

[root@proxy ~]# tar xf tomcat-connectors-1.2.40-src.tar.gz

[root@proxy ~]# cd tomcat-connectors-1.2.40-src

[root@proxy tomcat-connectors-1.2.40-src]# cd native/

[root@proxy native]# ./configure --with-apxs=`which apxs`

[root@proxy native]# make && make install

3. Check the installed mod_jk module

# ls /usr/lib64/httpd/modules/ | grep mod_jk

mod_jk.so

4. Load the mod_jk module into httpd

First disable the previous mod_proxy_ajp configuration file by renaming it;

[root@proxy conf.d]# pwd

/etc/httpd/conf.d

[root@proxy conf.d]# mv mod_proxy_ajp.conf mod_proxy_ajp.conf.bak

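For Apache to use the mod_jk connector, the module must be loaded at startup. To keep the mod_jk settings easy to manage, a dedicated configuration file, /etc/httpd/conf.d/mod_jk.conf, holds the related directives. Its contents are as follows: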

#vim /etc/httpd/conf.d/mod_jk.conf

LoadModule jk_module modules/mod_jk.so

JkWorkersFile /etc/httpd/conf.d/workers.properties

JkLogFile logs/mod_jk.log

JkLogLevel debug

JkMount /jkstatus StatA

JkMount /* tcsrvs

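Use /etc/httpd/conf.d/workers.properties to define a load-balancer worker named tcsrvs, its member workers TomcatA and TomcatB, and a status worker StatA, together with their properties. The file contents: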

#vim /etc/httpd/conf.d/workers.properties

worker.list=tcsrvs,StatA

worker.TomcatA.type=ajp13

worker.TomcatA.host=172.16.31.50

worker.TomcatA.port=8009

worker.TomcatA.lbfactor=1

worker.TomcatB.type=ajp13

worker.TomcatB.host=172.16.31.51

worker.TomcatB.port=8009

worker.TomcatB.lbfactor=1

worker.tcsrvs.type=lb

worker.tcsrvs.sticky_session=0

worker.tcsrvs.balance_workers=TomcatA,TomcatB

worker.StatA.type=status
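With more nodes, workers.properties can be generated instead of typed by hand. This sketch reproduces the file above for any list of ROUTE=HOST:PORT pairs, and the route names must equal each node's jvmRoute. It writes sticky_session=1 (the setting used in step 8) and lbfactor=1 for every member. The function name and argument format are hypothetical.

```bash
# gen_workers LB_NAME STATUS_NAME ROUTE=HOST:PORT...
# Prints a workers.properties with one ajp13 worker per ROUTE, an lb worker
# balancing across them, and a status worker.
gen_workers() {
  local lb=$1 status=$2; shift 2
  local spec route host port joined
  local -a members=()
  echo "worker.list=${lb},${status}"
  for spec in "$@"; do
    route=${spec%%=*}
    host=${spec#*=}; port=${host##*:}; host=${host%:*}
    members+=("$route")
    echo "worker.${route}.type=ajp13"
    echo "worker.${route}.host=${host}"
    echo "worker.${route}.port=${port}"
    echo "worker.${route}.lbfactor=1"
  done
  joined=$(IFS=,; echo "${members[*]}")   # TomcatA,TomcatB
  echo "worker.${lb}.type=lb"
  echo "worker.${lb}.sticky_session=1"
  echo "worker.${lb}.balance_workers=${joined}"
  echo "worker.${status}.type=status"
}
```

Usage: `gen_workers tcsrvs StatA TomcatA=172.16.31.50:8009 TomcatB=172.16.31.51:8009 > /etc/httpd/conf.d/workers.properties`.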

5. Edit server.xml on the back-end nodes tom1 and tom2

Make sure the Engine element has a jvmRoute attribute whose value matches the worker name used in the mod_jk configuration;

Node tom1:

<Engine name="Catalina" defaultHost="tom1.stu31.com" jvmRoute="TomcatA">

Node tom2:

<Engine name="Catalina" defaultHost="tom2.stu31.com" jvmRoute="TomcatB">

Restart Tomcat after making the changes.

#service tomcat stop

#service tomcat start

6. Check the syntax, restart httpd, and test access:

Check the syntax:

[root@proxy conf.d]# httpd -t

Syntax OK

Restart httpd:

[root@proxy conf.d]# service httpd restart

Stopping httpd: [ OK ]

Starting httpd: [ OK ]

Test access:

7. Capture packets on port 8009 of a back-end Tomcat node with tcpdump

[root@tom2 tomcat]# tcpdump -nn -i eth0 port 8009

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth0, link-type EN10MB (Ethernet), capture size 65535 bytes

22:09:29.951504 IP 172.16.31.52.47390 > 172.16.31.51.8009: Flags [S], seq 90832382, win 14600, options [mss 1460,sackOK,TS val 52439866 ecr 0,nop,wscale 5], length 0

22:09:29.951553 IP 172.16.31.51.8009 > 172.16.31.52.47390: Flags [S.], seq 99107550, ack 90832383, win 14480, options [mss 1460,sackOK,TS val 43542063 ecr 52439866,nop,wscale 6], length 0

22:09:29.951826 IP 172.16.31.52.47390 > 172.16.31.51.8009: Flags [.], ack 1, win 457, options [nop,nop,TS val 52439866 ecr 43542063], length 0

22:09:29.952466 IP 172.16.31.52.47390 > 172.16.31.51.8009: Flags [P.], seq 1:500, ack 1, win 457, options [nop,nop,TS val 52439867 ecr 43542063], length 499

22:09:29.952478 IP 172.16.31.51.8009 > 172.16.31.52.47390: Flags [.], ack 500, win 243, options [nop,nop,TS val 43542064 ecr 52439867], length 0

22:09:29.954260 IP 172.16.31.51.8009 > 172.16.31.52.47390: Flags [P.], seq 1:122, ack 500, win 243, options [nop,nop,TS val 43542066 ecr 52439867], length 121

22:09:29.954573 IP 172.16.31.52.47390 > 172.16.31.51.8009: Flags [.], ack 122, win 457, options [nop,nop,TS val 52439869 ecr 43542066], length 0

22:09:29.954965 IP 172.16.31.51.8009 > 172.16.31.52.47390: Flags [P.], seq 122:498, ack 500, win 243, options [nop,nop,TS val 43542067 ecr 52439869], length 376

22:09:29.954991 IP 172.16.31.51.8009 > 172.16.31.52.47390: Flags [P.], seq 498:504, ack 500, win 243, options [nop,nop,TS val 43542067 ecr 52439869], length 6

22:09:29.956549 IP 172.16.31.52.47390 > 172.16.31.51.8009: Flags [.], ack 498, win 490, options [nop,nop,TS val 52439871 ecr 43542067], length 0

22:09:29.956558 IP 172.16.31.52.47390 > 172.16.31.51.8009: Flags [.], ack 504, win 490, options [nop,nop,TS val 52439871 ecr 43542067], length 0

Load balancing of client requests to the back-end servers through mod_jk is now in place.

8. Session binding for mod_jk load balancing;

Just enable session stickiness in workers.properties:

worker.tcsrvs.sticky_session=1

Restart httpd;

[root@proxy conf.d]# service httpd restart

Stopping httpd: [ OK ]

Starting httpd: [ OK ]

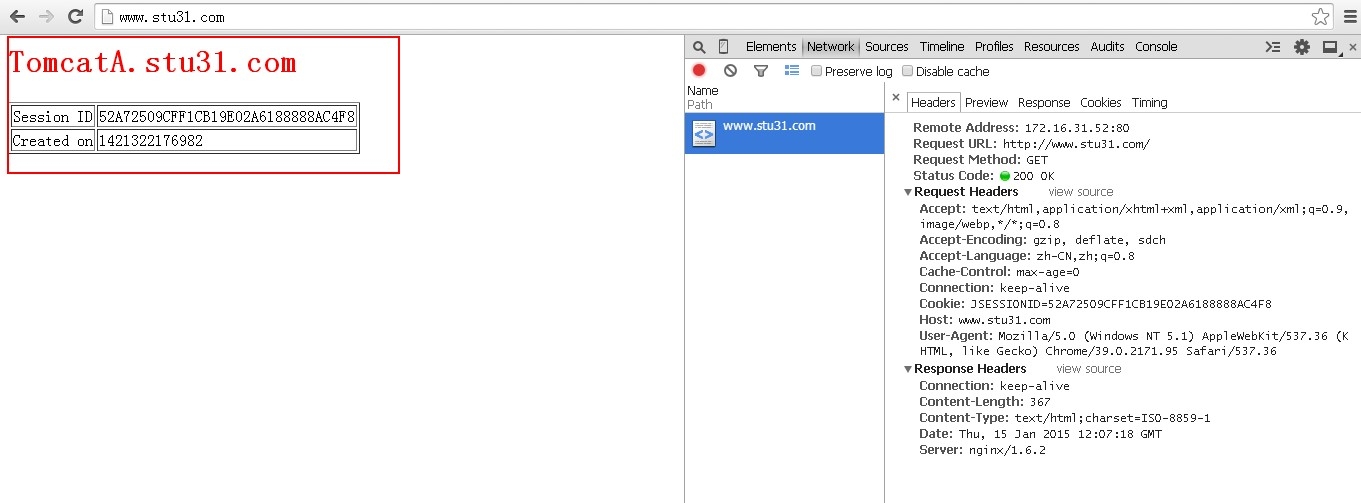

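9. Test access

The sessions are now bound.

This completes the lab: load balancing and session persistence for requests reverse-proxied to the back-end Tomcat nodes, using Apache's proxy_http_module and proxy_ajp_module, the separately distributed mod_jk module, and nginx.

This article is reposted from dengaosky's 51CTO blog; original link: http://blog.51cto.com/dengaosky/1964893. Please contact the original author before republishing.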