I. Prepare the environment:

Three machines:

VIP: 192.168.203.89

fastdfs1: 192.168.203.91 tracker1 storage1 keepalived lvs nginx

fastdfs2: 192.168.203.92 tracker2 storage2 keepalived lvs nginx

fastdfs3: 192.168.203.93 tracker3 storage3 keepalived lvs nginx

Operating system:

CentOS release 6.5 (Final)

Software:

fastdfs-5.05 GraphicsMagick-1.3.23 ngx_cache_purge-master lua-nginx-module-master LuaJIT-2.0.4

ngx_devel_kit-master fastdfs-nginx-module libfastcommon-master nginx-1.9.7

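Software location: under /root (the commands below work in /root/modules)

II. Installation and configuration (perform on all three machines):

1. Configure the /etc/hosts file: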

echo "192.168.203.91 tracker1 storage1" >> /etc/hosts

echo "192.168.203.92 tracker2 storage2" >> /etc/hosts

echo "192.168.203.93 tracker3 storage3" >> /etc/hosts
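Running the three echo commands a second time appends duplicate lines. A minimal sketch of an idempotent variant follows; the `add_host_entry` helper and the `HOSTS_FILE` variable are illustrative names, not part of FastDFS, and the default target is a scratch file so the sketch can be tried without touching /etc/hosts.

```shell
#!/bin/bash
# HOSTS_FILE is an assumed variable; it defaults to a scratch file.
# Set HOSTS_FILE=/etc/hosts (as root) to apply the entries for real.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

# Append "IP names" only when no existing line starts with that IP.
add_host_entry() {
    local ip="$1" names="$2"
    if ! awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$HOSTS_FILE"; then
        echo "$ip $names" >> "$HOSTS_FILE"
    fi
}

add_host_entry 192.168.203.91 "tracker1 storage1"
add_host_entry 192.168.203.92 "tracker2 storage2"
add_host_entry 192.168.203.93 "tracker3 storage3"
```

Calling the helper again with an IP that is already listed leaves the file unchanged.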

2. Unpack and install libfastcommon-master

cd /root/modules

unzip libfastcommon-master.zip

cd libfastcommon-master

sh make.sh

./make.sh install && echo $?

If it prints 0, the installation succeeded

3. Unpack and install fastdfs-5.05

cd /root/modules

tar -xvf fastdfs-5.05.tar.gz

cd fastdfs-5.05

./make.sh && ./make.sh install && echo $?

If it prints 0, the installation succeeded

4. Create all required directories and the symbolic link
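Because `&& echo $?` only runs when the preceding command succeeds, it can only ever print 0; a failed build prints nothing at all. A small sketch that reports both outcomes (the `run_step` name is made up for this example):

```shell
#!/bin/bash
# Run a command, report success or failure, and pass its exit status on.
run_step() {
    "$@"
    local rc=$?
    if [ "$rc" -eq 0 ]; then
        echo "OK: $*"
    else
        echo "FAILED (exit $rc): $*" >&2
    fi
    return "$rc"
}

# Demo with harmless commands. For the build one could write, for example:
#   run_step ./make.sh && run_step ./make.sh install
run_step true
run_step false || echo "the second step failed, stopping here"
```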

mkdir -pv /www/fastdfs/data/storage/data

mkdir -pv /www/fastdfs/data/storage/logs

mkdir -pv /www/fastdfs/data/tracker/data

mkdir -pv /www/fastdfs/data/tracker/logs

mkdir -pv /www/fastdfs/data/storage/M00

cd /www/fastdfs/data/storage

Note: the symbolic link must be created with absolute paths, not relative ones

ln -s /www/fastdfs/data/storage/data/ /www/fastdfs/data/storage/M00/

Copy the FastDFS configuration files to /etc/fdfs/

cp /root/modules/fastdfs-5.05/conf/{client.conf,http.conf} /etc/fdfs/ -ar
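To see where the `ln -s` command above actually places the link, the layout can be rebuilt under a scratch directory. This is only a sketch: `BASE` is an assumed variable standing in for /www/fastdfs/data.

```shell
#!/bin/bash
# BASE stands in for /www/fastdfs/data; a temporary directory is used by default.
BASE="${BASE:-$(mktemp -d)/www/fastdfs/data}"

mkdir -p "$BASE/storage/data" "$BASE/storage/logs" \
         "$BASE/tracker/data" "$BASE/tracker/logs" \
         "$BASE/storage/M00"

# Same form as the command in the text: because M00 already exists as a
# directory, the link is created inside it, named after the source (data).
ln -s "$BASE/storage/data/" "$BASE/storage/M00/"

# Print the link and its target.
ls -ld "$BASE/storage/M00/data"
```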

III. Configure the services:

1. Configure the Tracker service

cd /etc/fdfs/

cp tracker.conf.sample tracker.conf

[root@fastdfs1 fdfs]# cat tracker.conf|grep -Ev "^#|^$"   # exclude comments and blank lines

disabled=false

bind_addr=

port=22122

connect_timeout=30

network_timeout=60

base_path=/www/fastdfs/data/tracker

max_connections=256

accept_threads=1

work_threads=4

store_lookup=2

store_group=group2

store_server=0

store_path=0

download_server=0

reserved_storage_space = 10%

log_level=info

run_by_group=

run_by_user=

allow_hosts=*

sync_log_buff_interval = 10

check_active_interval = 120

thread_stack_size = 64KB

storage_ip_changed_auto_adjust = true

storage_sync_file_max_delay = 86400

storage_sync_file_max_time = 300

use_trunk_file = false

slot_min_size = 256

slot_max_size = 16MB

trunk_file_size = 64MB

trunk_create_file_advance = false

trunk_create_file_time_base = 02:00

trunk_create_file_interval = 86400

trunk_create_file_space_threshold = 20G

trunk_init_check_occupying = false

trunk_init_reload_from_binlog = false

trunk_compress_binlog_min_interval = 0

use_storage_id = false

storage_ids_filename = storage_ids.conf

id_type_in_filename = ip

store_slave_file_use_link = false

rotate_error_log = false

error_log_rotate_time=00:00

rotate_error_log_size = 0

log_file_keep_days = 0

use_connection_pool = false

connection_pool_max_idle_time = 3600

http.server_port=80

http.check_alive_interval=30

http.check_alive_type=tcp

http.check_alive_uri=/status.html
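The listing mixes `key=value` and `key = value` forms. When scripting against these files, a tolerant reader helps. The following is a hedged sketch: the `conf_get` helper is hypothetical, and the sample file only repeats a few values from the listing above.

```shell
#!/bin/bash
# Print the value(s) of KEY from a FastDFS-style conf file.
# Handles optional spaces around "=" and skips comment lines.
conf_get() {
    local file="$1" key="$2"
    awk -v k="$key" '
        /^[[:space:]]*#/ || index($0, "=") == 0 { next }
        {
            pos  = index($0, "=")
            name = substr($0, 1, pos - 1)
            val  = substr($0, pos + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", name)
            gsub(/^[ \t]+|[ \t]+$/, "", val)
            if (name == k) print val
        }' "$file"
}

# Demo against a scratch copy of a few lines from the listing.
sample="$(mktemp)"
cat > "$sample" <<'EOF'
# comment line
port=22122
base_path=/www/fastdfs/data/tracker
reserved_storage_space = 10%
EOF

conf_get "$sample" port
conf_get "$sample" reserved_storage_space
```

A key that appears several times, such as tracker_server in storage.conf, is printed once per occurrence.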

2. Start fdfs_trackerd

fdfs_trackerd /etc/fdfs/tracker.conf

3. Configure and start the Storage service

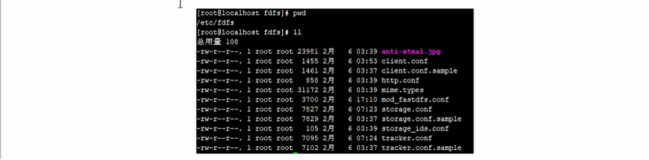

[root@fastdfs1 fdfs]# pwd

/etc/fdfs

[root@fastdfs1 fdfs]# cp storage.conf.sample storage.conf

[root@fastdfs1 fdfs]# cat storage.conf|grep -Ev "^#|^$"   # exclude comments and blank lines

disabled=false

group_name=group1

bind_addr=

client_bind=true

port=23000

connect_timeout=30

network_timeout=60

heart_beat_interval=30

stat_report_interval=60

base_path=/www/fastdfs/data/storage

max_connections=256

buff_size = 256KB

accept_threads=1

work_threads=4

disk_rw_separated = true

disk_reader_threads = 1

disk_writer_threads = 1

sync_wait_msec=50

sync_interval=0

sync_start_time=00:00

sync_end_time=23:59

write_mark_file_freq=500

store_path_count=1

store_path0=/www/fastdfs/data/storage

subdir_count_per_path=256

tracker_server=192.168.203.91:22122

tracker_server=192.168.203.92:22122

tracker_server=192.168.203.93:22122

log_level=info

run_by_group=

run_by_user=

allow_hosts=*

file_distribute_path_mode=0

file_distribute_rotate_count=100

fsync_after_written_bytes=0

sync_log_buff_interval=10

sync_binlog_buff_interval=10

sync_stat_file_interval=300

thread_stack_size=512KB

upload_priority=10

if_alias_prefix=

check_file_duplicate=0

file_signature_method=hash

key_namespace=FastDFS

keep_alive=0

use_access_log = false

rotate_access_log = false

access_log_rotate_time=00:00

rotate_error_log = false

error_log_rotate_time=00:00

rotate_access_log_size = 0

rotate_error_log_size = 0

log_file_keep_days = 0

file_sync_skip_invalid_record=false

use_connection_pool = false

connection_pool_max_idle_time = 3600

http.domain_name=

http.server_port=80

[root@Slave fdfs]# fdfs_storaged /etc/fdfs/storage.conf &

4. Configure the client:

[root@fastdfs1 fdfs]# pwd

/etc/fdfs

[root@fastdfs1 fdfs]# cp client.conf.sample client.conf

[root@fastdfs1 fdfs]# cat client.conf|grep -Ev "^#|^$"

connect_timeout=30

network_timeout=60

base_path=/www/fastdfs/data/tracker

tracker_server=192.168.203.91:22122

tracker_server=192.168.203.92:22122

tracker_server=192.168.203.93:22122

log_level=info

use_connection_pool = false

connection_pool_max_idle_time = 3600

load_fdfs_parameters_from_tracker=false

use_storage_id = false

storage_ids_filename = storage_ids.conf

http.tracker_server_port=80

5. Check that the configuration is correct; output like the following means it is working

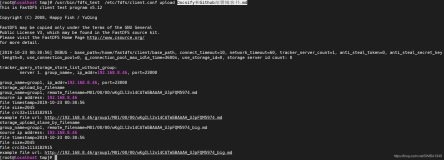

[root@fastdfs1 fdfs]# fdfs_monitor client.conf

[2016-09-09 14:23:38] DEBUG - base_path=/www/fastdfs/data/tracker, connect_timeout=30, network_timeout=60, tracker_server_count=3, anti_steal_token=0, anti_steal_secret_key length=0, use_connection_pool=0, g_connection_pool_max_idle_time=3600s, use_storage_id=0, storage server id count: 0

server_count=3, server_index=2

tracker server is 192.168.203.93:22122

group count: 1

Group 1:

group name = group1

disk total space = 35851 MB

disk free space = 31014 MB

trunk free space = 0 MB

storage server count = 3

active server count = 3

storage server port = 23000

storage HTTP port = 80

store path count = 1

subdir count per path = 256

current write server index = 0

current trunk file id = 0

Storage 1:

id = 192.168.203.91

ip_addr = 192.168.203.91 (tracker1) ACTIVE

http domain =

version = 5.05

join time = 2016-09-09 08:55:59

up time = 2016-09-09 08:55:59

total storage = 35851 MB

free storage = 31014 MB

upload priority = 10

store_path_count = 1

subdir_count_per_path = 256

storage_port = 23000

storage_http_port = 80

current_write_path = 0

source storage id = 192.168.203.92

if_trunk_server = 0

connection.alloc_count = 256

connection.current_count = 2

connection.max_count = 3

total_upload_count = 1

success_upload_count = 1

total_append_count = 0

success_append_count = 0

total_modify_count = 0

success_modify_count = 0

total_truncate_count = 0

success_truncate_count = 0

total_set_meta_count = 0

success_set_meta_count = 0

total_delete_count = 0

success_delete_count = 0

total_download_count = 0

success_download_count = 0

total_get_meta_count = 0

success_get_meta_count = 0

total_create_link_count = 0

success_create_link_count = 0

total_delete_link_count = 0

success_delete_link_count = 0

total_upload_bytes = 390009

success_upload_bytes = 390009

total_append_bytes = 0

success_append_bytes = 0

total_modify_bytes = 0

success_modify_bytes = 0

stotal_download_bytes = 0

success_download_bytes = 0

total_sync_in_bytes = 0

success_sync_in_bytes = 0

total_sync_out_bytes = 0

success_sync_out_bytes = 0

total_file_open_count = 1

success_file_open_count = 1

total_file_read_count = 0

success_file_read_count = 0

total_file_write_count = 2

success_file_write_count = 2

last_heart_beat_time = 2016-09-09 14:23:36

last_source_update = 2016-09-09 14:00:49

last_sync_update = 1970-01-01 08:00:00

last_synced_timestamp = 1970-01-01 08:00:00

Storage 2:

id = 192.168.203.92

ip_addr = 192.168.203.92 (tracker2) ACTIVE

http domain =

version = 5.05

join time = 2016-09-09 13:23:22

up time = 2016-09-09 13:23:22

total storage = 35851 MB

free storage = 31015 MB

upload priority = 10

store_path_count = 1

subdir_count_per_path = 256

storage_port = 23000

storage_http_port = 80

current_write_path = 0

source storage id =

if_trunk_server = 0

connection.alloc_count = 256

connection.current_count = 2

connection.max_count = 2

total_upload_count = 0

success_upload_count = 0

total_append_count = 0

success_append_count = 0

total_modify_count = 0

success_modify_count = 0

total_truncate_count = 0

success_truncate_count = 0

total_set_meta_count = 0

success_set_meta_count = 0

total_delete_count = 0

success_delete_count = 0

total_download_count = 0

success_download_count = 0

total_get_meta_count = 0

success_get_meta_count = 0

total_create_link_count = 0

success_create_link_count = 0

total_delete_link_count = 0

success_delete_link_count = 0

total_upload_bytes = 0

success_upload_bytes = 0

total_append_bytes = 0

success_append_bytes = 0

total_modify_bytes = 0

success_modify_bytes = 0

stotal_download_bytes = 0

success_download_bytes = 0

total_sync_in_bytes = 390009

success_sync_in_bytes = 390009

total_sync_out_bytes = 0

success_sync_out_bytes = 0

total_file_open_count = 1

success_file_open_count = 1

total_file_read_count = 0

success_file_read_count = 0

total_file_write_count = 2

success_file_write_count = 2

last_heart_beat_time = 2016-09-09 14:23:35

last_source_update = 1970-01-01 08:00:00

last_sync_update = 2016-09-09 14:00:58

last_synced_timestamp = 2016-09-09 14:00:49 (0s delay)

Storage 3:

id = 192.168.203.93

ip_addr = 192.168.203.93 (tracker3) ACTIVE

http domain =

version = 5.05

join time = 2016-09-09 13:23:25

up time = 2016-09-09 13:23:25

total storage = 35851 MB

free storage = 31016 MB

upload priority = 10

store_path_count = 1

subdir_count_per_path = 256

storage_port = 23000

storage_http_port = 80

current_write_path = 0

source storage id = 192.168.203.92

if_trunk_server = 0

connection.alloc_count = 256

connection.current_count = 2

connection.max_count = 2

total_upload_count = 0

success_upload_count = 0

total_append_count = 0

success_append_count = 0

total_modify_count = 0

success_modify_count = 0

total_truncate_count = 0

success_truncate_count = 0

total_set_meta_count = 0

success_set_meta_count = 0

total_delete_count = 0

success_delete_count = 0

total_download_count = 0

success_download_count = 0

total_get_meta_count = 0

success_get_meta_count = 0

total_create_link_count = 0

success_create_link_count = 0

total_delete_link_count = 0

success_delete_link_count = 0

total_upload_bytes = 0

success_upload_bytes = 0

total_append_bytes = 0

success_append_bytes = 0

total_modify_bytes = 0

success_modify_bytes = 0

stotal_download_bytes = 0

success_download_bytes = 0

total_sync_in_bytes = 390009

success_sync_in_bytes = 390009

total_sync_out_bytes = 0

success_sync_out_bytes = 0

total_file_open_count = 1

success_file_open_count = 1

total_file_read_count = 0

success_file_read_count = 0

total_file_write_count = 2

success_file_write_count = 2

last_heart_beat_time = 2016-09-09 14:23:10

last_source_update = 1970-01-01 08:00:00

last_sync_update = 2016-09-09 14:00:57

last_synced_timestamp = 2016-09-09 14:00:49 (0s delay)
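Rather than reading the full report by eye, the two numbers that matter (the group's `storage server count` and how many `ip_addr` lines end in ACTIVE) can be extracted. A sketch, assuming the output keeps the shape shown above and contains a single group; `check_monitor` is an illustrative name.

```shell
#!/bin/bash
# Read fdfs_monitor output on stdin; succeed only if every storage
# server announced by the group is reported as ACTIVE.
check_monitor() {
    awk '
        /storage server count =/       { expected = $NF }
        /ip_addr =/ && $NF == "ACTIVE" { active++ }
        END {
            printf "expected=%d active=%d\n", expected, active
            exit !(expected > 0 && active == expected)
        }'
}

# Intended use on a node (not run here):
#   fdfs_monitor /etc/fdfs/client.conf | check_monitor
# Demo with a shortened excerpt of the output above:
check_monitor <<'EOF'
storage server count = 3
ip_addr = 192.168.203.91 (tracker1) ACTIVE
ip_addr = 192.168.203.92 (tracker2) ACTIVE
ip_addr = 192.168.203.93 (tracker3) ACTIVE
EOF
```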

IV. Install the dependency modules:

[root@fastdfs1 fdfs]# cd /root/modules/fastdfs-nginx-module/src/ && cp mod_fastdfs.conf /etc/fdfs

Edit it to match the following configuration:

[root@fastdfs1 fdfs]# cat /etc/fdfs/mod_fastdfs.conf |grep -vE "^$|^#"

connect_timeout=2

network_timeout=30

base_path=/www/fastdfs/data/storage

load_fdfs_parameters_from_tracker=true

storage_sync_file_max_delay = 86400

use_storage_id = false

storage_ids_filename = storage_ids.conf

tracker_server=192.168.203.91:22122

tracker_server=192.168.203.92:22122

tracker_server=192.168.203.93:22122

storage_server_port=23000

group_name=group1

url_have_group_name = true

store_path_count=1

store_path0=/www/fastdfs/data/storage

log_level=info

log_filename=

response_mode=proxy

if_alias_prefix=

flv_support = true

flv_extension = flv

group_count = 0

V. Install the remaining software:

1. Install LuaJIT

cd /root/modules

tar -xvf LuaJIT-2.0.4.tar.gz

cd LuaJIT-2.0.4

make PREFIX=/usr/local/lj2 && make install PREFIX=/usr/local/lj2

cat >>/etc/profile<<'EOF'

export LUAJIT_LIB=/usr/local/lj2/lib

export LUAJIT_INC=/usr/local/lj2/include/luajit-2.0

export LD_LIBRARY_PATH=/usr/local/lj2/lib:$LD_LIBRARY_PATH

export PKG_CONFIG_PATH=/usr/local/lj2/lib/pkgconfig:$PKG_CONFIG_PATH

export GM_HOME=/usr/local/gm

export PATH=$GM_HOME/bin:$PATH

export LD_LIBRARY_PATH=$GM_HOME/lib:$LD_LIBRARY_PATH

EOF

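Remember: you must source the profile to load the environment variables; otherwise the build reports many dependencies as not installed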

[root@Slave LuaJIT-2.0.4]# source /etc/profile
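When appending to /etc/profile through a here-document, the quoting of the delimiter matters: with an unquoted delimiter the shell expands `$VAR` references while writing the file, whereas a quoted delimiter writes them literally so they are expanded later, when the profile is sourced. The sketch below shows the difference on a scratch file; `DEMO_HOME`, `PATH_A` and `PATH_B` are placeholder names.

```shell
#!/bin/bash
out="$(mktemp)"
DEMO_HOME=""   # placeholder, empty at write time like a not-yet-defined variable

# Unquoted delimiter: $DEMO_HOME is expanded now (to an empty string).
cat >> "$out" <<EOF
export PATH_A=$DEMO_HOME/bin
EOF

# Quoted delimiter: the text is written exactly as typed.
cat >> "$out" <<'EOF'
export PATH_B=$DEMO_HOME/bin
EOF

cat "$out"
# Prints:
#   export PATH_A=/bin
#   export PATH_B=$DEMO_HOME/bin
```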

2. Install GraphicsMagick

yum install -y libjpeg libjpeg-devel libpng libpng-devel libtool libtool-devel libtool-ltdl libtool-ltdl-devel

cd /root/modules

tar -xvf GraphicsMagick-1.3.23.tar.gz

cd GraphicsMagick-1.3.23

./configure --prefix=/usr/local/gm --enable-libtool-verbose --with-included-ltdl --enable-shared --disable-static --with-modules --with-frozenpaths --without-perl --without-magick-plus-plus --with-quantum-depth=8 --enable-symbol-prefix

After configure finishes, check that these three items are yes:

JPEG v1 yes

PNG yes

ZLIB yes

If they are, run make && make install to install

3. Install the three packages that nginx depends on
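The three-line check can also be scripted. The sketch assumes the summary lines begin with the feature name and end with yes or no, as in the excerpt above; `check_gm_features` is an illustrative helper, not a GraphicsMagick tool.

```shell
#!/bin/bash
# Read a configure summary on stdin; succeed only if the JPEG v1, PNG
# and ZLIB lines all end in "yes".
check_gm_features() {
    awk '
        /^[[:space:]]*JPEG v1/ { jpeg = ($NF == "yes") }
        /^[[:space:]]*PNG/     { png  = ($NF == "yes") }
        /^[[:space:]]*ZLIB/    { zlib = ($NF == "yes") }
        END {
            printf "JPEG=%d PNG=%d ZLIB=%d\n", jpeg, png, zlib
            exit !(jpeg && png && zlib)
        }'
}

# Intended use (not run here):
#   ./configure [options] | tee configure.out
#   check_gm_features < configure.out
# Demo with the three lines from the text:
check_gm_features <<'EOF'
JPEG v1 yes
PNG yes
ZLIB yes
EOF
```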

yum install zlib-devel pcre-devel openssl-devel -y

In the directory where the packages were unpacked, edit the fastdfs-nginx-module config file

vim fastdfs-nginx-module/src/config

CORE_INCS="$CORE_INCS /usr/local/include/fastdfs/ /usr/local/include/fastcommon/"

change it to

CORE_INCS="$CORE_INCS /usr/include/fastdfs/ /usr/include/fastcommon/"
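The same edit can be made without opening an editor. A sketch using sed, assuming the file contains the /usr/local/include paths exactly as shown; `patch_core_incs` is a made-up name and the demo runs on a scratch copy rather than the real config file.

```shell
#!/bin/bash
# Rewrite the two include directories in place, keeping a .bak copy.
patch_core_incs() {
    local cfg="$1"
    sed -i.bak \
        -e 's#/usr/local/include/fastdfs#/usr/include/fastdfs#g' \
        -e 's#/usr/local/include/fastcommon#/usr/include/fastcommon#g' \
        "$cfg"
}

# Demo on a scratch file holding the original line.
cfg="$(mktemp)"
cat > "$cfg" <<'EOF'
CORE_INCS="$CORE_INCS /usr/local/include/fastdfs/ /usr/local/include/fastcommon/"
EOF
patch_core_incs "$cfg"
cat "$cfg"
```

On the real tree the call would be `patch_core_incs fastdfs-nginx-module/src/config`.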

4. Install nginx

4.1 unzip ngx_cache_purge-master.zip

4.2 unzip lua-nginx-module-master.zip

4.3 unzip ngx_devel_kit-master.zip

4.4 tar xf nginx-1.9.7.tar.gz

Build nginx:

cd nginx-1.9.7

./configure --prefix=/usr/local/nginx --with-ld-opt="-Wl,-rpath,$LUAJIT_LIB" --with-http_ssl_module --with-http_realip_module --with-http_addition_module --with-http_sub_module --with-http_gzip_static_module --with-http_stub_status_module --with-pcre --add-module=../fastdfs-nginx-module/src/ --add-module=../lua-nginx-module-master --add-module=../ngx_cache_purge-master --add-module=../ngx_devel_kit-master

make && make install

The nginx configuration file must be edited:

vim /usr/local/nginx/conf/nginx.conf

location /group1/M00 {

root /www/fastdfs/data/;

ngx_fastdfs_module;

}

Copy two files from the nginx configuration directory (/usr/local/nginx/conf) to /etc/fdfs

cp mime.types nginx.conf /etc/fdfs/

Start nginx:

/usr/local/nginx/sbin/nginx

VI. Test:

[root@fastdfs1 ~]# fdfs_upload_file /etc/fdfs/client.conf 1_2.jpg   # upload a file named 1_2.jpg

group1/M00/00/00/wKjLXFfSV9-AOZ9dAAXzedIMkpQ580.jpg

Open it in a browser using the node's IP address:

192.168.203.91/group1/M00/00/00/wKjLXFfSV9-AOZ9dAAXzedIMkpQ580.jpg

If the image is displayed, the single-node setup works

VII. Set up keepalived and LVS on the two machines .91 and .92:
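The browser check can be approximated from the command line. In this sketch `file_url` and `http_status` are hypothetical helper names; `http_status` relies on curl being installed and is only defined here, not called, since it needs network access to a running node.

```shell
#!/bin/bash
# Build the download URL for a file ID returned by fdfs_upload_file.
file_url() {
    local host="$1" file_id="$2"
    echo "http://$host/$file_id"
}

# Print only the HTTP status code for a URL (200 means the file was served).
http_status() {
    curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$1"
}

file_url 192.168.203.91 group1/M00/00/00/wKjLXFfSV9-AOZ9dAAXzedIMkpQ580.jpg
# On a machine that can reach the node:
#   http_status "$(file_url 192.168.203.91 "$file_id")"
```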

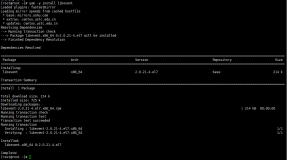

yum install keepalived ipvsadm -y

Edit keepalived.conf on the master:

[root@fastdfs1 ~]# cat /etc/keepalived/keepalived.conf

global_defs {

notification_email {

jimo291@gmail.com # notification email

}

notification_email_from jimo291@gmail.com

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS1 # LVS id; should be unique within the network

}

vrrp_sync_group test { # VRRP sync group

group {

loadbalance

}

}

vrrp_instance loadbalance {

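state MASTER # LVS state: MASTER or BACKUP, must be uppercase

interface eth0 # interface that serves external traffic

lvs_sync_daemon_interface eth0 # interface used by the LVS sync daemon

virtual_router_id 51 # virtual router ID

priority 180 # priority; a higher value means higher priority

advert_int 5 # advertisement (sync) interval

authentication { # authentication type and password

auth_type PASS

auth_pass 1111

}

virtual_ipaddress { # LVS VIP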

192.168.203.89

}

}

virtual_server 192.168.203.89 80 {

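delay_loop 6 # health check interval

lb_algo rr # load-balancing scheduling algorithm

lb_kind DR # load-balancing forwarding mode

#persistence_timeout 20 # session persistence time; useful for sites such as BBS

protocol TCP # protocol

real_server 192.168.203.91 80 {

weight 3 # weight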

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

real_server 192.168.203.92 80 {

weight 3

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

real_server 192.168.203.93 80 {

weight 3

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}
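The three real_server stanzas differ only in the IP address, so they can be generated instead of typed. A sketch, using only the options that appear in the listing above; `real_server_block` is an illustrative name and the output is meant to be pasted inside the virtual_server block.

```shell
#!/bin/bash
# Emit one real_server stanza; port and weight default to the values
# used in the listing (80 and 3).
real_server_block() {
    local ip="$1" port="${2:-80}" weight="${3:-3}"
    cat <<EOF
    real_server $ip $port {
        weight $weight
        TCP_CHECK {
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
            connect_port $port
        }
    }
EOF
}

for ip in 192.168.203.91 192.168.203.92 192.168.203.93; do
    real_server_block "$ip"
done
```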

Edit keepalived.conf on the backup:

[root@fastdfs2 conf]# cat /etc/keepalived/keepalived.conf

global_defs {

notification_email {

jimo291@gmail.com

}

notification_email_from jimo291@gmail.com

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS2

}

vrrp_sync_group test {

group {

loadbalance

}

}

vrrp_instance loadbalance {

state BACKUP

interface eth0

lvs_sync_daemon_interface eth0

virtual_router_id 51

priority 150

advert_int 5

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.203.89

}

}

virtual_server 192.168.203.89 80 {

delay_loop 6

lb_algo rr

lb_kind DR

#persistence_timeout 20

protocol TCP

real_server 192.168.203.91 80 {

weight 3

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

real_server 192.168.203.92 80 {

weight 3

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

real_server 192.168.203.93 80 {

weight 3

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}

Script to deploy on all three machines:

[root@fastdfs2 conf]# cat /etc/rc.d/init.d/realserver.sh

#!/bin/bash

# description: Config realserver lo and apply noarp

SNS_VIP=192.168.203.89

. /etc/rc.d/init.d/functions

case "$1" in

start)

ifconfig lo:0 $SNS_VIP netmask 255.255.255.255 broadcast $SNS_VIP

/sbin/route add -host $SNS_VIP dev lo:0

echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce

sysctl -p >/dev/null 2>&1

echo "RealServer Start OK"

;;

stop)

ifconfig lo:0 down

route del $SNS_VIP >/dev/null 2>&1

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce

echo "RealServer Stopped"

;;

*)

echo "Usage: $0 {start|stop}"

exit 1

esac

exit 0

[root@fastdfs2 conf]# chmod +x /etc/rc.d/init.d/realserver.sh

Run this script on all three machines:

[root@fastdfs2 conf]# /etc/rc.d/init.d/realserver.sh start

Verify: if the loopback interface on every machine carries this IP, the script worked

lo:0 Link encap:Local Loopback

inet addr:192.168.203.89 Mask:255.255.255.255

UP LOOPBACK RUNNING MTU:16436 Metric:1
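This check is easy to automate on each node. The sketch below reads an interface listing on stdin and looks for the VIP; `has_vip` is a made-up helper, and the demo feeds it the excerpt above rather than calling ifconfig.

```shell
#!/bin/bash
# Succeed if the interface listing on stdin shows the given address.
# Accepts both the "inet addr:IP" form shown above and a plain "inet IP" form.
has_vip() {
    local vip="$1"
    awk -v vip="$vip" '
        {
            for (i = 1; i <= NF; i++) {
                field = $i
                sub(/^addr:/, "", field)   # strip the "addr:" prefix
                sub(/\/.*$/, "", field)    # drop a /prefix length if present
                if (field == vip) found = 1
            }
        }
        END { exit !found }'
}

# Intended use on each node (not run here):
#   ifconfig lo:0 | has_vip 192.168.203.89
has_vip 192.168.203.89 <<'EOF' && echo "VIP present on lo:0"
lo:0 Link encap:Local Loopback
inet addr:192.168.203.89 Mask:255.255.255.255
UP LOOPBACK RUNNING MTU:16436 Metric:1
EOF
```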

Verify the cluster:

If the image is displayed when you open the following URL on the VIP, the cluster works

http://192.168.203.89/group1/M00/00/00/wKjLXFfSV9-AOZ9dAAXzedIMkpQ580.jpg

You can stop one or two machines and repeat the test; if the image stays accessible throughout, the cluster setup works