I. Introduction to GFS2

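GFS2 is an advanced cluster file system derived from GFS. It keeps the file system metadata synchronized across all hosts in the cluster and manages file locking, and it requires Red Hat Cluster Suite. A GFS2 file system can be grown (its capacity increased), provided the underlying disk supports dynamic resizing. This article provides that with CLVM.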

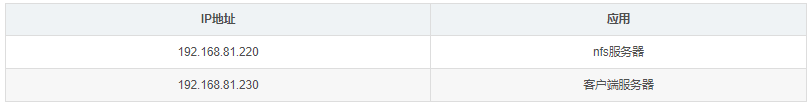

Lab environment:

192.168.30.119 tgtd.luojianlong.com OS: CentOS 6.4 x86_64, management server and iSCSI target server

192.168.30.115 node1.luojianlong.com OS: CentOS 6.4 x86_64, iSCSI initiator

192.168.30.116 node2.luojianlong.com OS: CentOS 6.4 x86_64, iSCSI initiator

192.168.30.117 node3.luojianlong.com OS: CentOS 6.4 x86_64, iSCSI initiator

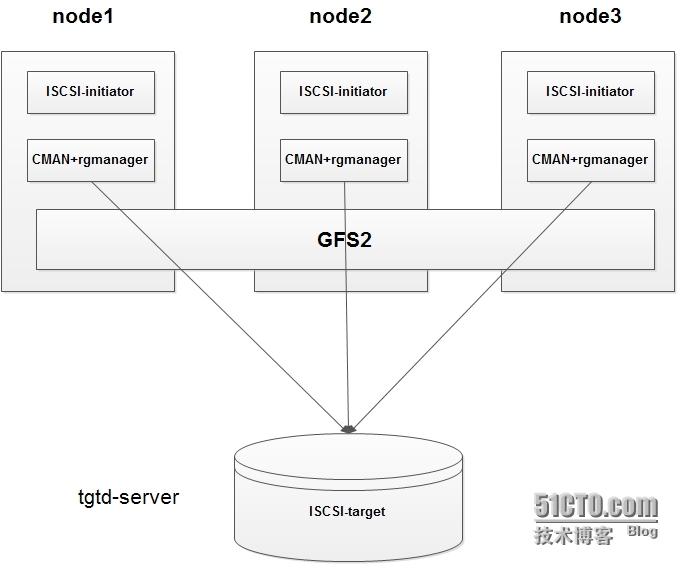

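How it works:

node1, node2 and node3 each use an iSCSI initiator to log in to the storage device exported by the tgtd server. RHCS is then used to build a highly available GFS2 cluster file system, so that all three nodes can read and write the shared storage at the same time.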

The topology diagram is shown above.

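II. Preparation

Complete these steps on the four servers:

- Configure the hosts file on each server so that the node names resolve.
- Set up passwordless SSH key login from the management node (tgtd) to each cluster node. ssh-copy-id assumes a key pair already exists on tgtd, for example one created with ssh-keygen.
- Stop NetworkManager and disable it at boot.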

```shell
[root@tgtd ~]# cat /etc/hosts
192.168.30.115 node1.luojianlong.com node1
192.168.30.116 node2.luojianlong.com node2
192.168.30.117 node3.luojianlong.com node3
[root@tgtd ~]# ssh-copy-id -i node1
The authenticity of host 'node1 (192.168.30.115)' can't be established.
RSA key fingerprint is 66:2e:28:75:ba:34:5e:b1:40:66:af:ba:37:80:20:3f.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'node1,192.168.30.115' (RSA) to the list of known hosts.
root@node1's password:
Now try logging into the machine, with "ssh 'node1'", and check in:

  .ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

[root@tgtd ~]# ssh-copy-id -i node2
The authenticity of host 'node2 (192.168.30.116)' can't be established.
RSA key fingerprint is 66:2e:28:75:ba:34:5e:b1:40:66:af:ba:37:80:20:3f.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'node2,192.168.30.116' (RSA) to the list of known hosts.
root@node2's password:
Now try logging into the machine, with "ssh 'node2'", and check in:

  .ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

[root@tgtd ~]# ssh-copy-id -i node3
The authenticity of host 'node3 (192.168.30.117)' can't be established.
RSA key fingerprint is 66:2e:28:75:ba:34:5e:b1:40:66:af:ba:37:80:20:3f.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'node3,192.168.30.117' (RSA) to the list of known hosts.
root@node3's password:
Now try logging into the machine, with "ssh 'node3'", and check in:

  .ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

[root@tgtd ~]# for I in {1..3}; do scp /etc/hosts node$I:/etc/; done
hosts 100% 129 0.1KB/s 00:00
hosts 100% 129 0.1KB/s 00:00
hosts
```

```shell
[root@tgtd ~]# for I in {1..3}; do ssh node$I 'service NetworkManager stop'; done
Stopping NetworkManager daemon: [ OK ]
Stopping NetworkManager daemon: [ OK ]
Stopping NetworkManager daemon: [ OK ]
[root@tgtd ~]# for I in {1..3}; do ssh node$I 'chkconfig NetworkManager off'; done
```
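The article runs the same "for I in {1..3}; do ssh node$I '...'; done" loop from tgtd many times. Below is a minimal sketch of that pattern as a reusable bash function. The names for_each_node, NODES and RUNNER are hypothetical. RUNNER defaults to ssh and can be set to echo for a dry run.

```shell
#!/usr/bin/env bash
# Hypothetical helper generalising the "for I in {1..3}; do ssh node$I ...; done"
# loops in this article. NODES and RUNNER are assumptions: RUNNER defaults to
# ssh; set RUNNER=echo to print the commands instead of running them.
NODES=${NODES:-"node1 node2 node3"}
RUNNER=${RUNNER:-ssh}

for_each_node() {
    local node rc=0
    for node in $NODES; do
        echo "== $node =="
        # Keep going if one node fails, but remember the failure.
        "$RUNNER" "$node" "$@" || rc=1
    done
    return "$rc"
}
```

For example, for_each_node 'chkconfig NetworkManager off' repeats the last command above. Unlike the plain loop, it prints which node each result came from and returns non-zero if any node failed.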

Stop iptables and switch SELinux to permissive mode (setenforce 0) on each node:

```shell
[root@tgtd ~]# for I in {1..3}; do ssh node$I 'service iptables stop'; done
[root@tgtd ~]# for I in {1..3}; do ssh node$I 'setenforce 0'; done
```
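service iptables stop and setenforce 0 only last until the next reboot, and the nodes are rebooted later in this article. The firewall side can be made persistent with chkconfig iptables off. The sketch below handles SELinux. It assumes the stock /etc/selinux/config layout with a SELINUX= line, and set_selinux_mode is a hypothetical helper.

```shell
# Sketch: persist the SELinux mode by rewriting the SELINUX= line of a
# config file (normally /etc/selinux/config). The SELINUXTYPE= line is
# left alone because the pattern requires "=" right after "SELINUX".
set_selinux_mode() {
    local file=$1 mode=$2
    case $mode in
        enforcing|permissive|disabled) ;;
        *) echo "invalid SELinux mode: $mode" >&2; return 1 ;;
    esac
    sed -i "s/^SELINUX=.*/SELINUX=$mode/" "$file"
}

# Example, on each node:
#   chkconfig iptables off
#   set_selinux_mode /etc/selinux/config permissive
```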

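Cluster installation

The core components of RHCS are cman and rgmanager. cman is the openais-based cluster infrastructure layer, and rgmanager is the resource group manager. Cluster resources are configured by editing the main configuration file, /etc/cluster/cluster.conf. cman and rgmanager must be installed on every node in the cluster, so both packages are installed on all three nodes here: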

```shell
[root@tgtd ~]# for I in {1..3}; do ssh node$I 'yum -y install cman rgmanager'; done
```

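Creating the cluster configuration file

Every node must have a copy of the RHCS configuration file /etc/cluster/cluster.conf, and all copies must be identical. The file does not exist by default, so it has to be created first; the ccs_tool command does this. Each cluster identifies itself by a cluster ID, so a cluster name must be chosen when the file is created. Here it is tcluster.

Running the command on one node is enough. The loop below runs it on all three nodes, which is harmless because node1's file is later copied over the others.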

```shell
[root@tgtd ~]# for I in {1..3}; do ssh node$I 'ccs_tool create tcluster'; done
```

View the generated configuration file:

```shell
[root@node1 cluster]# cat cluster.conf
<?xml version="1.0"?>
<cluster name="tcluster" config_version="1">
  <clusternodes>
  </clusternodes>
  <fencedevices>
  </fencedevices>
  <rm>
    <failoverdomains/>
    <resources/>
  </rm>
</cluster>
```

ccs_tool is used to update the CCS configuration file online.
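Every node must carry an identical cluster.conf, so it helps to compare the name and config_version attributes across nodes. Below is a rough sketch. It assumes the attributes sit on the <cluster ...> line, as in the generated file above. cluster_attr is a hypothetical helper that uses sed rather than a real XML parser.

```shell
# Sketch: print one attribute (e.g. name, config_version) of the <cluster>
# element in a cluster.conf file. Plain sed, not an XML parser: it assumes
# the attributes are on the "<cluster ..." line, as in the file above.
# "<clusternode" lines do not match because a space must follow "cluster".
cluster_attr() {
    local file=$1 attr=$2
    sed -n "s/.*<cluster .*${attr}=\"\([^\"]*\)\".*/\1/p" "$file" | head -n 1
}

# Example, on each node after the file has been copied:
#   cluster_attr /etc/cluster/cluster.conf config_version
```

Run on each node, it should print the same number once the file has been copied. According to the ccs_tool lsnode output, that number is 4 after the three addnode calls.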

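Adding nodes to the cluster

An RHCS cluster expects its nodes and the related fence devices to be configured before it is started. Here only the nodes are added; the Fencetype column in the ccs_tool lsnode output stays empty, so this lab configures no fencing. Each node needs at least a node ID, and the ID must be unique. The addnode subcommand of ccs_tool adds a node. The three planned nodes are added as follows: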

```shell
[root@node1 ~]# ccs_tool addnode -n 1 node1.luojianlong.com
[root@node1 ~]# ccs_tool addnode -n 2 node2.luojianlong.com
[root@node1 ~]# ccs_tool addnode -n 3 node3.luojianlong.com
```

List the nodes that were added and their details:

```shell
[root@node1 ~]# ccs_tool lsnode

Cluster name: tcluster, config_version: 4

Nodename Votes Nodeid Fencetype
node1.luojianlong.com 1 1
node2.luojianlong.com 1 2
node3.luojianlong.com 1 3
```

Copy the configuration file to the other two nodes:

```shell
[root@node1 ~]# scp /etc/cluster/cluster.conf node2:/etc/cluster/
[root@node1 ~]# scp /etc/cluster/cluster.conf node3:/etc/cluster/
```

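Starting the cluster

The RHCS cluster only starts working normally once the nodes have joined, so cman should be started on all cluster nodes at about the same time. Here a loop run from tgtd starts it on each node in turn: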

```shell
[root@tgtd ~]# for I in {1..3}; do ssh node$I 'service cman start'; done
Starting cluster:
   Checking if cluster has been disabled at boot... [ OK ]
   Checking Network Manager... [ OK ]
   Global setup... [ OK ]
   Loading kernel modules... [ OK ]
   Mounting configfs... [ OK ]
   Starting cman... [ OK ]
   Waiting for quorum... [ OK ]
   Starting fenced... [ OK ]
   Starting dlm_controld... [ OK ]
   Tuning DLM kernel config... [ OK ]
   Starting gfs_controld... [ OK ]
   Unfencing self... [ OK ]
   Joining fence domain... [ OK ]
Starting cluster:
   Checking if cluster has been disabled at boot... [ OK ]
   Checking Network Manager... [ OK ]
   Global setup... [ OK ]
   Loading kernel modules... [ OK ]
   Mounting configfs... [ OK ]
   Starting cman... [ OK ]
   Waiting for quorum... [ OK ]
   Starting fenced... [ OK ]
   Starting dlm_controld... [ OK ]
   Tuning DLM kernel config... [ OK ]
   Starting gfs_controld... [ OK ]
   Unfencing self... [ OK ]
   Joining fence domain... [ OK ]
Starting cluster:
   Checking if cluster has been disabled at boot... [ OK ]
   Checking Network Manager... [ OK ]
   Global setup... [ OK ]
   Loading kernel modules... [ OK ]
   Mounting configfs... [ OK ]
   Starting cman... [ OK ]
   Waiting for quorum... [ OK ]
   Starting fenced... [ OK ]
   Starting dlm_controld... [ OK ]
   Tuning DLM kernel config... [ OK ]
   Starting gfs_controld... [ OK ]
   Unfencing self... [ OK ]
   Joining fence domain... [ OK ]
```

```shell
[root@tgtd ~]# for I in {1..3}; do ssh node$I 'service rgmanager start'; done
Starting Cluster Service Manager: [ OK ]
Starting Cluster Service Manager: [ OK ]
Starting Cluster Service Manager: [ OK ]
```

```shell
[root@tgtd ~]# for I in {1..3}; do ssh node$I 'chkconfig rgmanager on'; done
[root@tgtd ~]# for I in {1..3}; do ssh node$I 'chkconfig cman on'; done
```

Check the cluster status:

```shell
[root@node1 ~]# clustat
Cluster Status for tcluster @ Tue Apr 1 16:45:23 2014
Member Status: Quorate

 Member Name ID Status
 ------ ---- ---- ------
 node1.luojianlong.com 1 Online, Local
 node2.luojianlong.com 2 Online
 node3.luojianlong.com 3 Online
```
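The check above can be scripted by counting the member lines that clustat reports as online. Below is a sketch that assumes the column layout shown above (name, ID, status). count_online is a hypothetical name.

```shell
# Sketch: count members whose Status column starts with "Online" in
# clustat output read from stdin (layout as in the output above:
# name in column 1, ID in column 2, status in column 3).
count_online() {
    awk '$3 ~ /^Online/ { n++ } END { print n + 0 }'
}

# Example:
#   clustat | count_online
```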

The status subcommand of cman_tool shows cluster information from the current node's point of view:

```shell
[root@node1 ~]# cman_tool status
Version: 6.2.0
Config Version: 4
Cluster Name: tcluster
Cluster Id: 10646
Cluster Member: Yes
Cluster Generation: 28
Membership state: Cluster-Member
Nodes: 3
Expected votes: 3
Total votes: 3
Node votes: 1
Quorum: 2
Active subsystems: 8
Flags:
Ports Bound: 0 177
Node name: node1.luojianlong.com
Node ID: 1
Multicast addresses: 239.192.41.191
Node addresses: 192.168.30.115
```
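The Quorum value of 2 follows from the three expected votes. cman treats the cluster as quorate when a majority of the expected votes is present, that is expected_votes / 2 + 1 with integer division.

Below is a sketch of that arithmetic. It also includes a hypothetical is_quorate helper that reads cman_tool status output from stdin and compares the Total votes and Quorum fields shown above.

```shell
# Majority quorum: more than half of the expected votes (integer division).
cman_quorum() {
    local expected=$1
    echo $(( expected / 2 + 1 ))
}

# Hypothetical helper: read "cman_tool status" output on stdin and succeed
# if the "Total votes" value reaches the "Quorum" value.
is_quorate() {
    awk -F': *' '
        $1 == "Total votes" { total = $2 }
        $1 == "Quorum"      { quorum = $2 }
        END { exit !(total != "" && quorum != "" && total + 0 >= quorum + 0) }'
}

# Example:
#   cman_tool status | is_quorate && echo "cluster is quorate"
```

With three one-vote nodes, the cluster stays quorate if any single node fails (2 votes remain), but not if two fail.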

The nodes subcommand of cman_tool lists information about each node in the cluster:

```shell
[root@node1 ~]# cman_tool nodes
Node Sts Inc Joined Name
   1 M 24 2014-04-01 16:37:57 node1.luojianlong.com
   2 M 24 2014-04-01 16:37:57 node2.luojianlong.com
   3 M 28 2014-04-01 16:38:11 node3.luojianlong.com
```

The services subcommand of cman_tool lists information about each cluster service:

```shell
[root@node1 ~]# cman_tool services
fence domain
member count 3
victim count 0
victim now 0
master nodeid 1
wait state none
members 1 2 3

dlm lockspaces
name rgmanager
id 0x5231f3eb
flags 0x00000000
change member 3 joined 1 remove 0 failed 0 seq 3,3
members 1 2 3
```

Install scsi-target-utils on the tgtd server and back up the default configuration file:

```shell
[root@tgtd ~]# yum -y install scsi-target-utils
[root@tgtd ~]# cp /etc/tgt/targets.conf /etc/tgt/targets.conf.bak
```

Edit the target configuration file to define the target:

```shell
[root@tgtd ~]# vi /etc/tgt/targets.conf
# Add the following:
<target iqn.2014-04.com.luojianlong:target1>
    backing-store /dev/sdb
    initiator-address 192.168.30.0/24
</target>
[root@tgtd ~]# service tgtd restart
```

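- backing-store: the backend device to share (here /dev/sdb on the tgtd server)
- initiator-address: the network address of the clients allowed to access the target
- incominguser: the account and password initiators must log in with (not used in this lab)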
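The target stanza above can also be generated from a script. Below is a hedged sketch; make_target_stanza is a hypothetical helper. Its IQN check only tests the general iqn.YYYY-MM.reversed-domain[:identifier] shape, not every rule of the iSCSI naming standard.

```shell
# Hypothetical helper: print a <target> stanza for /etc/tgt/targets.conf,
# refusing names that do not look like iqn.YYYY-MM.reversed.domain[:id].
make_target_stanza() {
    local iqn=$1 dev=$2 net=$3
    local re='^iqn\.[0-9]{4}-[0-9]{2}\.[a-z0-9.-]+(:.+)?$'
    if ! [[ $iqn =~ $re ]]; then
        echo "not an IQN: $iqn" >&2
        return 1
    fi
    printf '<target %s>\n    backing-store %s\n    initiator-address %s\n</target>\n' \
        "$iqn" "$dev" "$net"
}

# Example:
#   make_target_stanza iqn.2014-04.com.luojianlong:target1 /dev/sdb 192.168.30.0/24 \
#       >> /etc/tgt/targets.conf
```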

With tgtd running, check the target:

```shell
[root@tgtd ~]# tgtadm -L iscsi -m target -o show
Target 1: iqn.2014-04.com.luojianlong:target1
    System information:
        Driver: iscsi
        State: ready
    I_T nexus information:
    LUN information:
        LUN: 0
            Type: controller
            SCSI ID: IET 00010000
            SCSI SN: beaf10
            Size: 0 MB, Block size: 1
            Online: Yes
            Removable media: No
            Prevent removal: No
            Readonly: No
            Backing store type: null
            Backing store path: None
            Backing store flags:
        LUN: 1
            Type: disk
            SCSI ID: IET 00010001
            SCSI SN: beaf11
            Size: 10737 MB, Block size: 512
            Online: Yes
            Removable media: No
            Prevent removal: No
            Readonly: No
            Backing store type: rdwr
            Backing store path: /dev/sdb
            Backing store flags:
    Account information:
    ACL information:
        192.168.30.0/24
```
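The ACL information shows that only initiators in 192.168.30.0/24 may connect. Below is a small sketch of the CIDR test behind such a rule, assuming IPv4 dotted-quad input. ip_to_int and in_subnet are illustrative helpers, not part of tgtd.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local IFS=. a b c d
    read -r a b c d <<< "$1"
    echo "$(( (a << 24) | (b << 16) | (c << 8) | d ))"
}

# in_subnet IP NETWORK/PREFIX: succeed if IP lies inside the CIDR block.
# Bash arithmetic is 64-bit, so the mask is trimmed back to 32 bits.
in_subnet() {
    local ip=$1 net=${2%/*} bits=${2#*/}
    local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

# Example:
#   in_subnet 192.168.30.115 192.168.30.0/24 && echo "node1 is allowed"
```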

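Configure the three nodes to log in to the storage device on the tgtd server with the iSCSI initiator. iscsiadm is provided by the iscsi-initiator-utils package, which must be installed on each node.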

```shell
[root@tgtd ~]# for I in {1..3}; do ssh node$I 'iscsiadm -m discovery -t st -p 192.168.30.119'; done
192.168.30.119:3260,1 iqn.2014-04.com.luojianlong:target1
192.168.30.119:3260,1 iqn.2014-04.com.luojianlong:target1
192.168.30.119:3260,1 iqn.2014-04.com.luojianlong:target1
[root@tgtd ~]# for I in {1..3}; do ssh node$I 'iscsiadm -m node -T iqn.2014-04.com.luojianlong:target1 -p 192.168.30.119:3260 -l'; done
Logging in to [iface: default, target: iqn.2014-04.com.luojianlong:target1, portal: 192.168.30.119,3260] (multiple)
Login to [iface: default, target: iqn.2014-04.com.luojianlong:target1, portal: 192.168.30.119,3260] successful.
Logging in to [iface: default, target: iqn.2014-04.com.luojianlong:target1, portal: 192.168.30.119,3260] (multiple)
Login to [iface: default, target: iqn.2014-04.com.luojianlong:target1, portal: 192.168.30.119,3260] successful.
Logging in to [iface: default, target: iqn.2014-04.com.luojianlong:target1, portal: 192.168.30.119,3260] (multiple)
Login to [iface: default, target: iqn.2014-04.com.luojianlong:target1, portal: 192.168.30.119,3260] successful.
[root@tgtd ~]# for I in {1..3}; do ssh node$I 'fdisk -l /dev/sdb'; done

Disk /dev/sdb: 10.7 GB, 10737418240 bytes
64 heads, 32 sectors/track, 10240 cylinders
Units = cylinders of 2048 * 512 = 1048576 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0x00000000

Disk /dev/sdb: 10.7 GB, 10737418240 bytes
64 heads, 32 sectors/track, 10240 cylinders
Units = cylinders of 2048 * 512 = 1048576 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0x00000000

Disk /dev/sdb: 10.7 GB, 10737418240 bytes
64 heads, 32 sectors/track, 10240 cylinders
Units = cylinders of 2048 * 512 = 1048576 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0x00000000
```
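Discovery prints one "portal,tpgt target" line per target. Below is a sketch that turns those lines into arguments for the login command, so the IQN does not have to be retyped. parse_discovery is a hypothetical helper.

```shell
# Sketch: turn "portal,tpgt target" lines from
# "iscsiadm -m discovery -t st" (read on stdin) into "portal target" pairs.
parse_discovery() {
    awk 'NF >= 2 { split($1, p, ","); print p[1], $2 }'
}

# Example, on a node: log in to every target the portal advertises.
#   iscsiadm -m discovery -t st -p 192.168.30.119 | parse_discovery |
#   while read -r portal target; do
#       iscsiadm -m node -T "$target" -p "$portal" -l
#   done
```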

Partition the shared disk on one of the nodes:

```shell
[root@node1 ~]# fdisk /dev/sdb
Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel
Building a new DOS disklabel with disk identifier 0x7ac42a91.
Changes will remain in memory only, until you decide to write them.
After that, of course, the previous content won't be recoverable.

Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

WARNING: DOS-compatible mode is deprecated. It's strongly recommended to
         switch off the mode (command 'c') and change display units to
         sectors (command 'u').

Command (m for help): n
Command action
   e   extended
   p   primary partition (1-4)
p
Partition number (1-4): 1
First cylinder (1-10240, default 1):
Using default value 1
Last cylinder, +cylinders or +size{K,M,G} (1-10240, default 10240): +5G

Command (m for help): w
The partition table has been altered!

Calling ioctl() to re-read partition table.
Syncing disks.
[root@node1 ~]# fdisk /dev/sdb

WARNING: DOS-compatible mode is deprecated. It's strongly recommended to
         switch off the mode (command 'c') and change display units to
         sectors (command 'u').

Command (m for help): n
Command action
   e   extended
   p   primary partition (1-4)
p
Partition number (1-4): 2
First cylinder (5122-10240, default 5122):
Using default value 5122
Last cylinder, +cylinders or +size{K,M,G} (5122-10240, default 10240): +5G
Value out of range.
Last cylinder, +cylinders or +size{K,M,G} (5122-10240, default 10240): +4G

Command (m for help): w
The partition table has been altered!

Calling ioctl() to re-read partition table.
Syncing disks.
[root@node1 ~]# fdisk -l /dev/sdb

Disk /dev/sdb: 10.7 GB, 10737418240 bytes
64 heads, 32 sectors/track, 10240 cylinders
Units = cylinders of 2048 * 512 = 1048576 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0x7ac42a91

   Device Boot Start End Blocks Id System
/dev/sdb1 1 5121 5243888 83 Linux
/dev/sdb2 5122 9218 4195328 83 Linux
```
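The numbers in this session can be checked by hand:

- One cylinder is 2048 * 512 = 1048576 bytes (1 MiB), so +5G is 5120 cylinders and +4G is 4096 cylinders.
- In this session fdisk ended each partition at start + size in cylinders: 1 + 5120 = 5121 and 5122 + 4096 = 9218. That rule is read off the output above, not taken from fdisk documentation.
- By the same rule, +5G from cylinder 5122 would end at 10242. That is beyond the last cylinder, 10240, hence "Value out of range".

Below is a sketch of that arithmetic. end_cylinder and fits are hypothetical helpers.

```shell
# Values taken from the fdisk header above.
CYL_BYTES=$(( 2048 * 512 ))   # "Units = cylinders of 2048 * 512 = 1048576 bytes"
TOTAL_CYL=10240               # "10240 cylinders"

# end_cylinder START SIZE_GIB: end cylinder for a "+<SIZE>G" answer,
# following the start + size/unit pattern observed in this session.
end_cylinder() {
    echo $(( $1 + $2 * 1024 * 1024 * 1024 / CYL_BYTES ))
}

# fits START SIZE_GIB: succeed if that end cylinder is still on the disk.
fits() {
    [ "$(end_cylinder "$1" "$2")" -le "$TOTAL_CYL" ]
}
```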

Setting up the GFS2 file system (install gfs2-utils on every node):

```shell
[root@tgtd ~]# for I in {1..3}; do ssh node$I 'yum -y install gfs2-utils'; done
```

Create a GFS2 cluster file system on the /dev/sdb1 partition created earlier with the following command:

```shell
[root@node1 ~]# mkfs.gfs2 -j 3 -p lock_dlm -t tcluster:sdb1 /dev/sdb1
This will destroy any data on /dev/sdb1.
It appears to contain: Linux GFS2 Filesystem (blocksize 4096, lockproto lock_dlm)

Are you sure you want to proceed? [y/n] y

Device: /dev/sdb1
Blocksize: 4096
Device Size 5.00 GB (1310972 blocks)
Filesystem Size: 5.00 GB (1310970 blocks)
Journals: 3
Resource Groups: 21
Locking Protocol: "lock_dlm"
Lock Table: "tcluster:sdb1"
UUID: 478dac97-c25f-5bc8-a719-0d385fea23e3
```

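mkfs.gfs2 is the tool for creating GFS2 file systems. Its commonly used options are:

- -b BlockSize: the file system block size. The minimum is 512 and the default is 4096.
- -J MegaBytes: the size of each GFS2 journal. The default is 128 MB and the minimum is 8 MB.
- -j Number: the number of journals to create. In general, one journal is needed for each node that mounts the file system.
- -p LockProtoName: the locking protocol, normally either lock_dlm or lock_nolock.
- -t LockTableName: the lock table name, in the form clustername:fsname.
  - A cluster file system needs a lock table name so that cluster nodes know which file system a file lock belongs to.
  - clustername must match the cluster name in the cluster configuration file, so only nodes of that cluster can access the file system.
  - Within one cluster, every file system name must be unique.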
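A mistyped cluster name in -t only shows up when the file system is mounted, so a pre-check can help. Below is a sketch that assumes the clustername:fsname form described above. It also assumes a file system name of 1 to 16 characters; that limit comes from Red Hat's GFS2 documentation for this release and is treated here as an assumption. check_lock_table is hypothetical.

```shell
# Hypothetical pre-check for a mkfs.gfs2 -t argument.
# $1 = lock table name (clustername:fsname), $2 = cluster name from cluster.conf
check_lock_table() {
    local table=$1 cluster=$2
    local cname=${table%%:*} fsname=${table#*:}
    if [ "$table" = "$cname" ]; then
        echo "no ':' in lock table name: $table" >&2
        return 1
    fi
    if [ "$cname" != "$cluster" ]; then
        echo "cluster part '$cname' does not match cluster '$cluster'" >&2
        return 1
    fi
    # Assumed limit: file system name of 1 to 16 characters.
    if [ -z "$fsname" ] || [ "${#fsname}" -gt 16 ]; then
        echo "file system name must be 1-16 characters: '$fsname'" >&2
        return 1
    fi
}

# Example:
#   check_lock_table tcluster:sdb1 tcluster && mkfs.gfs2 -j 3 -p lock_dlm -t tcluster:sdb1 /dev/sdb1
```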

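After formatting, reboot node1, node2 and node3; otherwise the new GFS2 partition cannot be mounted. The likely reason is that node2 and node3 have not yet re-read the partition table that was changed on node1. Running partprobe /dev/sdb on those nodes may be enough instead of a reboot.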

```shell
[root@node1 ~]# mount /dev/sdb1 /mnt/
[root@node1 ~]# cp /etc/fstab /mnt/
# Mount /dev/sdb1 on node2 and node3 at the same time
[root@node2 ~]# mount /dev/sdb1 /mnt/
[root@node3 ~]# mount /dev/sdb1 /mnt/
# Write data into the mounted directory on node1, then check it on node2 and node3
[root@node2 mnt]# tail -f fstab
# Accessible filesystems, by reference, are maintained under '/dev/disk'
# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
#
/dev/mapper/VolGroup-lv_root / ext4 defaults 1 1
UUID=db4bad23-32a8-44a6-bdee-1585ce9e13ac /boot ext4 defaults 1 2
/dev/mapper/VolGroup-lv_swap swap swap defaults 0 0
tmpfs /dev/shm tmpfs defaults 0 0
devpts /dev/pts devpts gid=5,mode=620 0 0
sysfs /sys sysfs defaults 0 0
proc /proc proc defaults 0 0
[root@node3 mnt]# tail -f fstab
# Accessible filesystems, by reference, are maintained under '/dev/disk'
# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
#
/dev/mapper/VolGroup-lv_root / ext4 defaults 1 1
UUID=db4bad23-32a8-44a6-bdee-1585ce9e13ac /boot ext4 defaults 1 2
/dev/mapper/VolGroup-lv_swap swap swap defaults 0 0
tmpfs /dev/shm tmpfs defaults 0 0
devpts /dev/pts devpts gid=5,mode=620 0 0
sysfs /sys sysfs defaults 0 0
proc /proc proc defaults 0 0
[root@node1 mnt]# echo "hello" >> fstab
[root@node2 mnt]# tail -f fstab
# Accessible filesystems, by reference, are maintained under '/dev/disk'
# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
#
/dev/mapper/VolGroup-lv_root / ext4 defaults 1 1
UUID=db4bad23-32a8-44a6-bdee-1585ce9e13ac /boot ext4 defaults 1 2
/dev/mapper/VolGroup-lv_swap swap swap defaults 0 0
tmpfs /dev/shm tmpfs defaults 0 0
devpts /dev/pts devpts gid=5,mode=620 0 0
sysfs /sys sysfs defaults 0 0
proc /proc proc defaults 0 0
hello
[root@node3 mnt]# tail -f fstab
# Accessible filesystems, by reference, are maintained under '/dev/disk'
# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
#
/dev/mapper/VolGroup-lv_root / ext4 defaults 1 1
UUID=db4bad23-32a8-44a6-bdee-1585ce9e13ac /boot ext4 defaults 1 2
/dev/mapper/VolGroup-lv_swap swap swap defaults 0 0
tmpfs /dev/shm tmpfs defaults 0 0
devpts /dev/pts devpts gid=5,mode=620 0 0
sysfs /sys sysfs defaults 0 0
proc /proc proc defaults 0 0
hello
```
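Instead of watching tail -f on each node, the copies can be compared by checksum. Below is a sketch that assumes md5sum output collected from the nodes over SSH, as elsewhere in this article. all_same_checksum is a hypothetical helper.

```shell
# Sketch: read "checksum  filename" lines (md5sum output, one per node)
# from stdin and succeed only if there is exactly one distinct checksum.
all_same_checksum() {
    awk '{ print $1 }' | sort -u | awk 'END { exit (NR == 1 ? 0 : 1) }'
}

# Example, from tgtd:
#   for I in {1..3}; do ssh node$I 'md5sum /mnt/fstab'; done | all_same_checksum \
#       && echo "all nodes see the same file"
```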

The output shows that node2 and node3 immediately see the data written on node1.

III. Configuring CLVM (clustered logical volumes)

Install lvm2-cluster on the RHCS cluster nodes:

```shell
[root@tgtd ~]# for I in {1..3}; do ssh node$I 'yum -y install lvm2-cluster'; done
```

Enable the cluster features of LVM on each RHCS node:

```shell
[root@tgtd ~]# for I in {1..3}; do ssh node$I 'lvmconf --enable-cluster'; done
```
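lvmconf --enable-cluster switches LVM to clustered locking, which appears in /etc/lvm/lvm.conf as locking_type = 3. Below is a sketch for confirming that on each node. lvm_locking_type is a hypothetical helper that prints the first uncommented locking_type value.

```shell
# Sketch: print the first uncommented locking_type value in an
# lvm.conf-style file. Lines starting with "#" (after indentation) are skipped.
lvm_locking_type() {
    awk '/^[ \t]*locking_type[ \t]*=/ {
             sub(/^[^=]*=[ \t]*/, "")
             print
             exit
         }' "$1"
}

# Example, on each node (3 means clustered locking via clvmd):
#   lvm_locking_type /etc/lvm/lvm.conf
```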

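Start the clvmd service on each RHCS node. Because the loop starts clvmd on one node after another, the earlier nodes report that clvmd is not yet running on the nodes that follow. This is expected.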

```shell
[root@tgtd ~]# for I in {1..3}; do ssh node$I 'service clvmd start'; done
Starting clvmd:
Activating VG(s): 2 logical volume(s) in volume group "VolGroup" now active
  clvmd not running on node node3.luojianlong.com
  clvmd not running on node node2.luojianlong.com
[ OK ]
Starting clvmd:
Activating VG(s): 2 logical volume(s) in volume group "VolGroup" now active
  clvmd not running on node node3.luojianlong.com
[ OK ]
Starting clvmd:
Activating VG(s): 2 logical volume(s) in volume group "VolGroup" now active
[ OK ]
```

Create the physical volume, volume group and logical volume with the same commands used for standalone LVM:

```shell
[root@node1 ~]# pvcreate /dev/sdb2
  Physical volume "/dev/sdb2" successfully created
[root@node1 ~]# pvs
  PV VG Fmt Attr PSize PFree
  /dev/sda2 VolGroup lvm2 a-- 29.51g 0
  /dev/sdb2 lvm2 a-- 4.00g 4.00g
# The new physical volume is now also visible on the other nodes
```

Create the volume group and the logical volume:

```shell
[root@node1 ~]# vgcreate clustervg /dev/sdb2
  Clustered volume group "clustervg" successfully created
[root@node1 ~]# lvcreate -L 2G -n clusterlv clustervg
  Logical volume "clusterlv" created
[root@node1 ~]# lvs
  LV VG Attr LSize Pool Origin Data% Move Log Cpy%Sync Convert
  lv_root VolGroup -wi-ao---- 25.63g
  lv_swap VolGroup -wi-ao---- 3.88g
  clusterlv clustervg -wi-a----- 2.00g
```
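vgcreate reported a "Clustered volume group" because clustered locking is active. This can be confirmed from the sixth character of the vg_attr field, which is c for clustered volume groups. Below is a sketch; vg_is_clustered is a hypothetical helper.

```shell
# Sketch: succeed if a vg_attr string marks the VG as clustered
# (6th character "c", e.g. "wz--nc").
vg_is_clustered() {
    local attr=${1//[[:space:]]/}   # vgs pads its columns with spaces
    [ "${attr:5:1}" = c ]
}

# Example:
#   vg_is_clustered "$(vgs --noheadings -o vg_attr clustervg)" && echo "clustervg is clustered"
```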

The logical volume is visible on the other nodes as well:

```shell
[root@tgtd ~]# for I in {1..3}; do ssh node$I 'lvs'; done
  LV VG Attr LSize Pool Origin Data% Move Log Cpy%Sync Convert
  lv_root VolGroup -wi-ao---- 25.63g
  lv_swap VolGroup -wi-ao---- 3.88g
  clusterlv clustervg -wi-a----- 2.00g
  LV VG Attr LSize Pool Origin Data% Move Log Cpy%Sync Convert
  lv_root VolGroup -wi-ao---- 25.63g
  lv_swap VolGroup -wi-ao---- 3.88g
  clusterlv clustervg -wi-a----- 2.00g
  LV VG Attr LSize Pool Origin Data% Move Log Cpy%Sync Convert
  lv_root VolGroup -wi-ao---- 25.63g
  lv_swap VolGroup -wi-ao---- 3.88g
  clusterlv clustervg -wi-a----- 2.00g
```

Format the logical volume:

```shell
[root@node1 ~]# mkfs.gfs2 -p lock_dlm -j 2 -t tcluster:clusterlv /dev/clustervg/clusterlv
This will destroy any data on /dev/clustervg/clusterlv.
It appears to contain: symbolic link to `../dm-2'

Are you sure you want to proceed? [y/n] y

Device: /dev/clustervg/clusterlv
Blocksize: 4096
Device Size 2.00 GB (524288 blocks)
Filesystem Size: 2.00 GB (524288 blocks)
Journals: 2
Resource Groups: 8
Locking Protocol: "lock_dlm"
Lock Table: "tcluster:clusterlv"
UUID: c8fbef88-970d-92c4-7b66-72499406fa9c
```

Mount the logical volume:

```shell
[root@node1 ~]# mount /dev/clustervg/clusterlv /media/
[root@node2 ~]# mount /dev/clustervg/clusterlv /media/
[root@node3 ~]# mount /dev/clustervg/clusterlv /media/
Too many nodes mounting filesystem, no free journals
# node3 cannot mount it: only two journals were created, so one more has to be added
[root@node1 ~]# gfs2_jadd -j 1 /dev/clustervg/clusterlv
Filesystem: /media
Old Journals 2
New Journals 3
# Then mount it on node3
[root@node3 ~]# mount /dev/clustervg/clusterlv /media/
[root@node1 ~]# df -hT
Filesystem Type Size Used Avail Use% Mounted on
/dev/mapper/VolGroup-lv_root
              ext4 26G 6.1G 18G 26% /
tmpfs tmpfs 1.9G 38M 1.9G 2% /dev/shm
/dev/sda1 ext4 485M 65M 395M 15% /boot
/dev/sdb1 gfs2 5.1G 388M 4.7G 8% /mnt
/dev/mapper/clustervg-clusterlv
              gfs2 2.0G 388M 1.7G 19% /media
```
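The journal arithmetic behind this step is simple. Three nodes mount the file system and mkfs.gfs2 created two journals, so one has to be added.

Three 128 MB journals (the default size) come to 384 MB. That may explain most of the 388M reported as used on both freshly created GFS2 file systems, but this link is an inference, not something measured in the article.

Below is a sketch; journals_to_add and journal_space_mb are hypothetical helpers.

```shell
# Sketch: how many journals gfs2_jadd -j must add so that every node
# that mounts the file system has one (one journal per mounting node).
journals_to_add() {  # $1 = nodes that will mount, $2 = journals already present
    local need=$(( $1 - $2 ))
    echo $(( need > 0 ? need : 0 ))
}

# Space taken by the journals, given a per-journal size in MB
# (mkfs.gfs2 -J; 128 MB by default).
journal_space_mb() {  # $1 = journal count, $2 = MB per journal (optional)
    echo $(( $1 * ${2:-128} ))
}

# Example:
#   gfs2_jadd -j "$(journals_to_add 3 2)" /dev/clustervg/clusterlv
```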

Extend the logical volume:

```shell
[root@node1 ~]# lvextend -L +2G /dev/clustervg/clusterlv
  Extending logical volume clusterlv to 4.00 GiB
  Logical volume clusterlv successfully resized
[root@node1 ~]# gfs2_grow /dev/clustervg/clusterlv
FS: Mount Point: /media
FS: Device: /dev/dm-2
FS: Size: 524288 (0x80000)
FS: RG size: 65533 (0xfffd)
DEV: Size: 1048576 (0x100000)
The file system grew by 2048MB.
gfs2_grow complete.
[root@node1 ~]# df -hT
Filesystem Type Size Used Avail Use% Mounted on
/dev/mapper/VolGroup-lv_root
              ext4 26G 6.1G 18G 26% /
tmpfs tmpfs 1.9G 38M 1.9G 2% /dev/shm
/dev/sda1 ext4 485M 65M 395M 15% /boot
/dev/sdb1 gfs2 5.1G 388M 4.7G 8% /mnt
/dev/mapper/clustervg-clusterlv
              gfs2 4.0G 388M 3.7G 10% /media
```
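The gfs2_grow figures can be cross-checked. With 4096-byte blocks, 524288 blocks is 2048 MB and 1048576 blocks is 4096 MB. The difference of 2048 MB matches "grew by 2048MB". gfs2_grow works on a mounted file system and only needs to run on one node, after lvextend has enlarged the logical volume. Below is a sketch of the conversion; blocks_to_mb is a hypothetical helper.

```shell
# Sketch: convert GFS2 block counts (as printed by gfs2_grow) to MB,
# assuming the 4096-byte block size reported by mkfs.gfs2 above.
blocks_to_mb() {  # $1 = blocks, $2 = block size in bytes (default 4096)
    echo $(( $1 * ${2:-4096} / 1024 / 1024 ))
}

# Example: growth reported by gfs2_grow (DEV size minus FS size)
#   echo $(( $(blocks_to_mb 1048576) - $(blocks_to_mb 524288) ))
```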

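The logical volume, and the GFS2 file system on it, has grown from 2.0G to 4.0G.

This completes the shared storage setup with RHCS, GFS2, iSCSI and CLVM.

This article was reposted from ljl_19880709's 51CTO blog. Original link: http://blog.51cto.com/luojianlong/1388556. Please contact the original author for permission to repost.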