Use of NFS Considered Harmful

Table of Contents

| I. | The Problem | |

| II. | Introduction to NFS | |

| III. | List of Concerns | |

| a. | Time Synchronization | |

| b. | File Locking Semantics | |

| c. | File Locking API | |

| d. | Exclusive File Creation | |

| e. | Delayed Write Caching | |

| f. | Read Caching and File Access Time | |

| g. | Indestructable Files | |

| h. | User and Group Names and Numbers | |

| i. | Superuser Account | |

| j. | Security | |

| k. | Unkillable Processes | |

| l. | Timeouts on Mount and Unmount | |

| m. | Overlapping Exports | |

| n. | Automount | |

| IV. | Other Issues to Address | |

| a. | Scalability | |

| b. | Server Replication | |

| V. | Typical (Mis)Uses of NFS | |

| a. | Logging | |

| b. | Data Exchange | |

| VI. | Reasonable NFS Use | |

| a. | Package Maintenance | |

| b. | User Home Directories | |

| VII. | Alternatives | |

| a. | ||

| b. | Home Directories | |

| c. | Data Migration | |

| VIII. | Conclusion | |

| IX. | References |

I. The Problem

NFS fails at the goal of allowing a computer to access files over a network as if they were on a local disk. In many ways, NFS comes close to the objective, and in certain circumstances (detailed later), this is acceptable. However, the subtle differences can cause subtle bugs and greater system issues. The widespread misconception about the compatibility and transparency of NFS means that it is often used inappropriately, and often put into production when better, more acceptable solutions exist.

II. Introduction to NFS

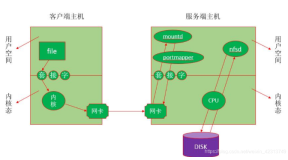

The Network File System (NFS) is a protocol developed by Sun Microsystems. There are currently two versions (versions 2 and 3), which are documented in various IETF RFC's (these are not IETF standards, merely informational documents), and another version currently being standardized (version 4). NFS is intended to allow a computer to access files over a network as if they were on a local disk. It achieves this via a client-server interface, where one machine exports a drive or portion of a drive, and another machine mounts the export locally. Any combination of imports and mounts is possible, with clients able to mount multiple exports from multiple servers, servers exporting multiple directories to multiple clients, and hosts that act as both clients and servers.

NFS is built on the Remote Procedure Call (RPC) mechanism, making it a stateless protocol. In fact, NFS is not stateless from the client point of view, but it is from the server point of view. This means that the server does not keep any information about the clients using it. The advantage of this is that there is no additional burden placed on the server for additional clients (except for any load incurred by actual data transfer). The idea is that while clients will probably use only a few servers, servers will need to support large numbers of clients. In addition to scalability, using RPC allows NFS to be extremely robust in the event of failure on either the client or server. If a client fails, the server will typically not notice. If a server fails, any pending client operations will typically resume without observable interruption, except for a temporary pause in operations until the server has resumed normal operation.

As protocols go, NFS has been widely accepted. Every network operating system has had NFS ported to it in one form or another, and it is used in almost every Unix environment worldwide. It provides a convenient mechanism for sharing data across platforms, and is a relatively robust, nearly ubiquitous solution to centralized data storage problems. Engineers are familiar with it, users accustomed to it, and developers continue to improve it.

III. List of Concerns

Following are a few known problems with NFS and suggested workarounds.

a. Time Synchronization

NFS does not synchronize time between client and server, and offers no mechanism for the client to determine what time the server thinks it is. What this means is that a client can update a file, and have the timestamp on the file be either some time long in the past, or even in the future, from its point of view.

While this is generally not an issue if clocks are a few seconds or even a few minutes off, it can be confusing and misleading to humans. Of even greater importance is the affect on programs. Programs often do not expect time difference like this, and may end abnormally or behave strangely, as various tasks timeout instantly, or take extraordinarily long while to timeout.

Poor time synchronization also makes debugging problems difficult, because there is no easy way to establish a chronology of events. This is especially problematic when investigating security issues, such as break in attempts.

Workaround: Use the Network Time Protocol (NTP) religiously. Use of NTP can result in machines that have extremely small time differences.

Note: The NFS protocol version 3 does have support for the client specifying the time when updating a file, but this is not widely implemented. Additionally, it does not help in the case where two clients are accessing the same file from machines with drifting clocks.

b. File Locking Semantics

Programs use file locking to insure that concurrent access to files does not occur except when guaranteed to be safe. This prevents data corruption, and allows handshaking between cooperative processes.

In Unix, the kernel handles file locking. This is required so that if a program is terminated, any locks that it has are released. It also allows the operations to be atomic, meaning that a lock cannot be obtained by multiple processes.

Because NFS is stateless, there is no way for the server to keep track of file locks - it simply does not know what clients there are or what files they are using. In an effort to solve this, a separate server, the lock daemon, was added. Typically, each NFS server will run a lock daemon.

The combination of lock daemon and NFS server yields a solution that is almost like Unix file locking. Unfortunately, file locking is extremely slow, compared to NFS traffic without file locking (or file locking on a local Unix disk). Of greater concern is the behaviour of NFS locking on failure.

In the event of server failure (e.g. server reboot or lock daemon restart), all client locks are lost. However, the clients are not informed of this, and because the other operations (read, write, and so on) are not visibly interrupted, they have no reliable way to prevent other clients from obtaining a lock on a file they think they have locked.

In the event of client failure, the locks are not immediately freed. Nor is there a timeout. If the client process terminates, the client OS kernel will notify the server, and the lock will be freed. However, if the client system shuts down abnormally (e.g. power failure or kernel panic), then the server will not be notified. When the client reboots and remounts the NFS exports, the server is notified and any client locks are freed.

If the client does not reboot, for example if a frustrated user hits the power switch and goes home for the weekend, or if a computer has had a hardware failure and must wait for replacement parts, then the locks are never freed! In this unfortunate scenario, the server lock daemon must be restarted, with the same effects as a server failure.

Workaround: If possible (given program source and skill with code modification), remove locking and insure no inconsistency occurs via other mechanisms, possibly using atomic file creation (see below) or some other mechanism for synchronization. Otherwise, build platforms never fail and have a staff trained on the implications of NFS file locking failure. If NFS is used only for files that are never accessed by more than a single client, locking is not an issue.

Note: A status monitor mechanism exists to monitor client status, and free client locks if a client is unavailable. However, clients may chose not to use this mechanism, and in many implementations do not.

c. File Locking API

In Unix, there are two flavours of file locking, flock() from BSD and lockf() from System V. It varies from system to system which of these mechanisms work with NFS. In Solaris, Sun's Unix variant, lockf() works with NFS, and flock() is implemented via lockf(). On other systems, the results are less consistent. For example, on some systems, lockf() is not implemented at all, and flock() does not support NFS; while on other systems, lockf() supports NFS but flock() does not.

Regardless of the system specifics, programs often assume that if they are unable to obtain a lock, it is because another program has the lock. This can cause problems as programs wait for the lock to be freed. Since the reason the lock fails is because locking is unsupported, the attempt to obtain a lock will never work. This results in either the applications waiting forever, or aborting their operation.

These results will also vary with the support of the server. While typically the NFS server runs an accompanying lock daemon, this is not guaranteed.

Workaround: Upgrade to the latest versions of all operating systems, as they usually have improved and more consistent locking support. Also, use the lock daemon. Additionally, try to use only programs written to handle NFS locking properly, veified either by code review or a vendor compliance statement.

d. Exclusive File Creation

In Unix, when a program creates a file, it may ask for the operation to fail if the file already exists (as opposed to the default behaviour of using the existing file). This allows programs to know that, for example, they have a unique file name for a temporary file. It is also used by various daemons for locking various operations, e.g. modifying mail folders or print queues.

Unfortunately, NFS does not properly implement this behaviour. A file creation will sometimes return success even if the file already exists. Programs written to work on a local file system will experience strange results when they attempt to update a file after using file creation to lock it, only to discover another file is modifying it (I have personally seen mailboxes with hundreds of mail messages corrupted because of this), because it also "locked" the file via the same mechanism.

Workaround: If possible (given program source and skill with code modification), use the following method, as documented in the Linux open() manual page:

The solution for performing atomic file locking using a lockfile is to create a unique file on the same fs (e.g., incorporating hostname and pid), use link(2) to make a link to the lockfile and use stat(2) on the unique file to check if its link count has increased to 2. Do not use the return value of the link() call.This still leaves the issue of client failure unanswered. The suggested solution for this is to pick a timeout value and assume if a lock is older than a certain application-specific age that it has been abandoned.

e. Delayed Write Caching

In an effort to improve efficiency, many NFS clients cache writes. This means that they delay sending small writes to the server, with the idea that if the client makes another small write in a short amount of time, the client need only send a single message to the server.Unix servers typically cache disk writes to local disks the same way. The difference is that Unix servers also keep track of the state of the file in the cache memory versus the state on disk, so programs are all presented with a single view of the file.

In NFS caching, all applications on a single client will typically see the same file contents. However, applications accessing the file from different clients will not see the same file for several seconds.

Workaround: It is often possible to disable client write caching. Unfortunately, this frequently causes unacceptably slow performance, depending on the application. (Applications that perform I/O of large chunks of data should be unaffected, but applications that perform lots of small I/O operations will be severely punished.) If locking is employed, applications can explicitly cooperate and flush files from the local cache to the server, but see the previous sections on locking when employing this solution.

f. Read Caching and File Access Time

Unix file systems typically have three times associated with a file: the time of last modification (file creation or write), the time of last "change" (write or change of inode information), or the time of last access (file execution or read). NFS file systems also report this information.NFS clients perform attribute caching for efficiency reasons. Reading small amounts of data does not update the access time on the server. This means a server may report a file has been unaccessed for a much longer time than is accurate.

This can cause problems as administrators and automatic cleanup software may delete files that have remained unused for a long time, expecting them to be stale lock files, abandoned temporary files and so on.

Workaround: Attribute caching may be disabled on the client, but this is usually not a good idea for performance reasons. Administrators should be trained to understand the behaviour of NFS regarding file access time. Any programs that rely on access time information should be modified to use another mechanism.

g. Indestructible Files

In Unix, when a file is opened, the data of that file is accessible to the process that opened it, even if the file is deleted. The disk blocks the file uses are freed only when the last process which has it open has closed it.An NFS server, being stateless, has no way to know what clients have a file open. Indeed, in NFS clients never really "open" or "close" files. So when a file is deleted, the server merely frees the space. Woe be unto any client that was expecting the file contents to be accessible as before, as in the Unix world!

In an effort to minimize this as much as possible, when a client deletes a file, the operating systems checks if any process on the same client box has it open. If it does, the client renames the file to a "hidden" file. Any read or write requests from processes on the client that were to the now-deleted file go to the new file.

This file is named in the form .nfsXXXX, where the XXXX value is determined by the inode of the deleted file - basically a random value. If a process (such as rm) attempts to delete this new file from the client, it is replaced by a new .nfsXXXX file, until the process with the file open closes it.

These files are difficult to get rid of, as the process with the file open needs to be killed, and it is not easy to determine what that process is. These files may have unpleasant side effects such as preventing directories from being removed.

If the server or client crashes while a .nfsXXXX file is in use, they will never be deleted. There is no way for the server or a client to know whether a .nfsXXXX file is currently being used by a client or not.

Workaround: One should be able to delete .nfsXXXX files from another client, however if a process writes to the file, it will be created at that time. It would be best to exit or kill processes using an NFS file before deleting it. Unfortunately, there is no way to know if an uncooperative process has a file open.

h. User and Group Names and Numbers

NFS uses user and group numbers, rather than names. This means that each machine that accesses an NFS export needs (or at least should) have the same user and group identifiers as the NFS export has. Note that this problem is not unique to NFS, and also applies, for instance, to removable media and archives. It is most frequently an issue with NFS, however.Workaround: Either the /etc/passwd and /etc/group files must be synchronized, or something like NIS+ needs to be used for this purpose.

i. Superuser Account

NFS has special handling of the superuser account (also known as the root account). By default, the root user may not update files on an NFS mount.Normally on a Unix system, root may do anything to any file. When an NFS drive has been mounted, this is no longer the case. This can confuse scripts and administrators alike.

To clarify: a normal user (for example "shane" or "billg") can update files that the superuser ("root") cannot.

Workaround: Enable root access to specific clients for NFS exports, but only in a trusted environment since NFS is insecure. Therefore, this does not guarantee that unauthorized client will be unable to access the mount as root.

j. Security

NFS is inherently unsecure. While certain methods of encrypted authentication exist, all data is transmitted in the clear, and accounts may be spoofed by user programs.Workaround: Only use NFS within a highly trusted environment, behind the appropriate firewalls and with careful attention to host security. Visit the NFS (in)security administration and information clearinghouse for suggestions.

SunWorld offers the following advice in the Solaris Security FAQ:

Disable NFS if possible. NFS traffic flows in clear-text (even when using "AUTH_DES" or "AUTH_KERB" for authentication) so any files transported via NFS are susceptible to snooping.

Sound advice, indeed.

k. Unkillable Processes

When an NFS server is unavailable, the client will typically not return an error to the process attempting to use it. Rather the client will retry the operation. At some point, it will eventually give up and return an error to the process.In Unix there are two kinds of devices, slow and fast. The semantics of I/O operations vary depending on the type of device. For example, a read on a fast device will always fill a buffer, whereas a read on a slow device will return any data ready, even if the buffer is not filled. Disks (even floppy disks or CD-ROM's) are considered fast devices.

The Unix kernel typically does not allow fast I/O operations to be interrupted. The idea is to avoid the overhead of putting a process into a suspended state until data is available, because the data is always either available or not. For disk reads, this is not a problem, because a delay of even hundreds of milliseconds waiting for I/O to be interrupted is not often harmful to system operation.

NFS mounts, since they are intended to mimic disks, are also considered fast devices. However, in the event of a server failure, an NFS disk can take minutes to eventually return success or failure to the application. A program using data on an NFS mount, however, can remain in an uninterruptable state until a final timeout occurs.

Workaround: Don't panic when a process will not terminate from repeated kill -9 commands. If ps reports the process is in state D, there is a good chance that it is waiting on an NFS mount. Wait 10 minutes, and if the process has still not terminated, then panic.

l. Timeouts on Mount and Unmount

If the Unix mount or umount command attempts to operate on a NFS mount, it will retry the operation until success or timeout, which is several minutes. Normally, this is not a great problem when mounting and unmounting manually. However, when added to the file system table for a host, it can slow booting and shutdown considerably. This can impact service negatively, as machines can take much longer to reboot than they would otherwise.A special case of this is circular mounts. It is possible for a machine to mount a directory from a machine that it is also exporting to. This is not normally a problem in service, but during system boots it can cause problems. During power failures, for instance, systems will often hang waiting to mount each other, until eventually timing out.

Any mounting scenario that eventually leads back to the originating host can cause this problem.

Workaround: File system tables should be audited to make sure that old NFS servers have not been left.

Circular mounts should be avoided. (This is sometimes more difficult than it sounds, for instance in environments where mail folders are exported from a single host, and home directories are exported from a single host. Both of these hosts need the mounts from the other. In this case, try automount for these file systems - but see below for automount concerns.)

m. Overlapping Exports

Unlike mounts from secondary storage, NFS exports are not tied to distinct physical media, but are determined by system administrator configuration. Because of this, it is possible to export a directory that refers to a single file system multiple times.

# export of printer spool directory /var/spool/lpr *.ripe.net(rw) # export of mail spool, from the same file system /var/spool/mail *.ripe.net(rw)The problem here is that it is not immediately obvious to users and applications on clients who have mounted both exports that these reside on the same physical media. When df reports each partition has 200 Mbyte free, for instance, they may assume there is actually 400 Mbyte free, when in fact there is only the same 200 Mbyte reported twice.

Workaround: Either be careful not to export directories from a single file system separately, or train administrators and possibly users as to the implications of this style of export. If the free space and inode count for several mounts is identical, check if they are actually from the same physical storage.

Note: Certain NFS servers, such as the Network Appliance servers, allow exports to have size restrictions lower than the amount of free space on the physical storage. In this case, there is no way for a client to know if they actually use the same disk(s). Use this "feature" with caution.

n. Automount

Automounting is a method where Unix mounts file systems on an as-needed basis. This is useful for removable media, which may change frequently. It is also useful NFS, which may not be accessible all the time, and which would require a lot of time to mount large numbers of file systems.One minor concern is the transitive nature of automounted mounts, which deviates from standard Unix practice. Not a large concern, but it is yet another way in which NFS mounts deviate in operation from normal local mounts.

Another issue is the invisibility of automount mount points. Normally, a Unix filesystem requires that a directory (hopefully empty) exists where a mount is attached. For automount directories this is not the case. An example:

$ cd /home $ ls -F $ cat shane/hello.pl #!/usr/bin/perl print "Hello, world (Perl rules)./n"; $ ls -F shane/ $As you see here, the /home directory was empty, then on access of /home/shane/hello.pl the directory /home/shane was automounted. At this point, the /home directory contained a directory. Most users would not expect to have a directory created by the cat command.

Workaround: Train administrators on the affects of automounting so they will not be surprised.

IV. Other Issues to Address

The concerns discussed in the previous section are just few examples of the problems with NFS. Additional issues that should be mentioned, and will hopefully be researched and discussed in more detail in future, are:

a. Scalability

NFS is designed the way it is for scalability NFS is typically not used on the scale of more than a few dozen machines. While it is possible that larger configurations exist, it is doubtful that NFS can reasonably support them. The problem is not so much software or system support, but rather bottlenecks on the media. Consider the performance implications of sharing even an extremely fast hard disk with hundreds of other users.

b. Server Replication

Some NFS implementations support server replication. The implications on performance, administration, and data consistency for these configurations need to be seriously examined and evaluated.

V. Typical (Mis)Uses of NFS

NFS is often used in ways that guarantees that its weaknesses will cause problems. Following are a few examples and specifics on how NFS causes problems.

a. Logging

The idea of using NFS for logging is that it allows a single location where an entire site's records can be maintained. This allows for more efficient use of disk resources and centralization of administrative tasks like backup. However, using a centralized log file system makes all applications using it vulnerable to any of them filling the available space. This can happen by a single task on the network running away.Also, if a host is having network problems, it may stop logging, making it difficult for the administrator to determine what chain of events led up to the current state. At the very least, the administrator will not be able to access these logs from that host.

Security is always a primary concern with NFS, but with centralized logging potentially sensitive information may be broadcast to the LAN. For example, "from" and "to" information on e-mail is often logged by e-mail servers. Malicious users can also take advantage of the previously mentioned concerns to confuse administrators and cover their tracks.

Any use of the network runs a higher risk of data loss than local disks. With two hosts, the chance of hardware error is higher than with a single host. Additionally, any switches or cables between the hosts may fail. Hosts are also vulnerable to DNS failures, DHCP failures, as well as security risks like UDP spoofs and the previously mentioned denial of service attacks.

b. Data Exchange

NFS is often used to exchange data between cooperating tasks running on different machines. Typically it involves a well-known directory where one or more machines add files and one or more machines remove the files for processing.This can work, but coordination is difficult. Developers and administrators need to be aware of the issues documented in the list of concerns above.

Synchronization is often difficult when using NFS for data exchange, especially when using tools not intended for this task. A common backup procedure is for hosts to place archive files in an NFS mount that a different host copies to backup media. This can lead to disaster as file systems fill and the backup procedure takes longer, resulting in the copy to tape starting before the backup has completed. (The real disaster occurs when a restoration from this tape is attempted.)

NFS is generally considered an easy means of solving data sharing problems because it is often already configured and installed. The problems with this incorrect assumption are often not discovered until a great deal of work is required to re-engineer a solution. As a result, the mostly working, but not best, solution is left in place.

VI. Reasonable NFS Use

There are situations where NFS is fine solution for an administration problem. These always involve data that has a single point of update.

a. Package Maintenance

Administrators often have to maintain packages across a large number of machines. In this case, they desire to reduce the work and chance for misconfiguration by centralizing package installation and configuration.This is an excellent application for NFS. Executables and their configuration files can be exported in a read-only fashion. Users will automatically have the latest versions of their software available.

Changes should still occur during off-peak times to avoid user confusion as much as possible.

b. User Home Directories

NFS is often used to present a consistent environment to users. By using NFS to mount users' home directories, machines can be treated as equivalent by users.In this scenario, care must be taken to present users with a consistent environment in other regards. This means identical package installation and so on. It is also difficult to do this in a heterogeneous environment, where different operating systems and/or hardware are used. In this case, users should be helped to adapt their scripts or configurations for the different environments where they need to.

Also, users must be informed that their home directories are NFS mounted, and made aware of the implications of that. At the very least, their cron jobs should only operate from a single host, and they will have to be educated on the appropriate mechanism for password changes and such.

VI. Alternatives

Clearly, alternatives to NFS are needed. Unfortunately, the wide availability of NFS means that there are no equivalent technologies currently on the market or under development. In examining alternatives, care must be taken not to look at current specific configurations and ask how to duplicate them without using NFS.

Simply asking for a XYZFS to replace NFS is not the solution. Like kicking any bad habit, moving away from NFS use requires some dedication and flexibility. Possible alternatives include:

a. E-mail

Rather than exporting /var/mail or /var/spool/mail, administrators should configure clients to use SMTP and IMAP (or POP3 if for some reason IMAP is not available). This has the added advantage of being easy for users to use outside of the corporate environment, such as when telecommuting or travelling.

b. Home directories

As noted above, using NFS for home directories is sometimes acceptable. I suggest that for many cases a radical new idea be employed: using workstation local hard drives for a users home directory.Modern workstations (often PC's in today's environments) tend to have extremely fast and large hard disks. Being local, they also have full speed access, far beyond anything but the fastest network controllers. The main concern is obtaining proper backups, but this should not be any more work than administering a typical NFS configuration.

A further concern is migrating user data.

c. Data Migration

Processes often run on different platforms for any number of reasons. They often need to share data.Rather than using NFS for this task, use explicit data duplication, via one of the long-established mechanisms designed for this purpose.

One easy solution is to use FTP to move this data. An even easier solution, once installed, is to use the scp program that is included with SSH to move data. This has an added advantage of being optionally encrypted and/or compressed. Do not use rcp for this purpose as it is inherently insecure, and often introduces security holes.

Other applications will require other solutions, but by and large these are neither especially complicated nor hard to configure and administer.

VIII. Conclusion

While NFS is a reasonable approach to the problem of remote access to disks, it fails to do so in a way that preserves Unix file semantics. Using NFS for complex networking issues is often a poorly thought out attempt to use a simple solution to a complex problem.

NFS suffers from a fundamental design flaw, attempting to find a compromise between the desire to deal with the network environment efficiently and preserve existing access semantics. The advertised ability of NFS to treat network resources as if they were local plainly does not measure up under close scrutiny.

There are two potential solutions to the problem of remote access that do not make this compromise.

One potential solution is to abandon Unix file semantics for remote access. This is the solution taken by many of the advanced file systems researched by the acedemicacademic community. This allows improved control over affects of working in a network environment, and also allows for use of special features only available in thise environment, such as disconnected activity or using data across multiple hosts. Unfortunately, for full advantage it requires software be rewritten with understanding of the different semantics.

Another solution would be to pursue Unix semantics to their full extent, without compromising for performance or reliability reasons, which has never been seriously attempted. It may be that this would be a poor solution, but I suggest that in a modern environment, with processor speed and memory availability greatly outstripping increases in number of clients, that this would not be the case.

Until a "plug-in" alternative to NFS is developed, administrators, developers, managers, and all others who are in positions to recommend networking and storage solutions would do well to avoid use of NFS whenever possible. If a decision to use NFS is finally reached, at the very least the issues discussed here should be addressed in the early phases of installation, to avoid painful changes late in implementation or delivery.

IX. References

NFS: Network File System Protocol Specification, RFC1094

NFS Version 3 Protocol Specification, RFC1813

NFS version 4 Protocol, draft-ietf-nfsv4-07.txt