Introduction

Interspeech 2017 was held during August 20-24, 2017 in Stockholm, Sweden. Participants from various research institutes, universities and renowned companies used this platform to share their newest technologies, systems, and products. A high-profile team from Alibaba Group, a Diamond Sponsor of the conference, also joined the event. It was announced that from October 25 on, Alibaba iDST Voice Team and Alibaba Cloud Community will work together on a series of information sharing meetings regarding voice technology, in an effort to share the technological progress reported in Interspeech 2017. This article covers the session on Self-adaptive Speech Recognition Technology.

1. Self-adaptive Speech Recognition Technology

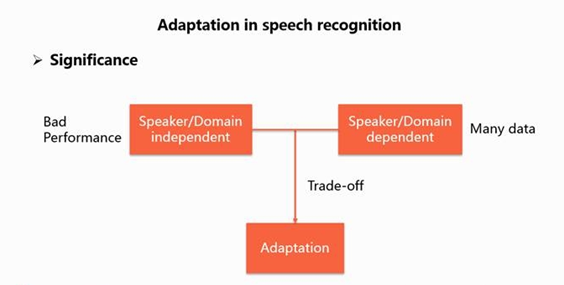

Self-adaptive speech recognition technology aims to improve the performance of speech recognition system for a certain speaker or domain. The purpose of this technology is to eliminate the decline in speech recognition performance due to variations in speakers or domains in the training and test sets. This variation mainly includes phonetics difference and those arising from pronunciation habits. Self-adaptive technology is used for products related to speech recognition technology and speech recognition of VIP customers.

The variability may decrease the performance of speaker/domain-independent recognition systems. If a related recognition system is trained for such speaker or domain, huge data collection is required, which is costly. Self-adaptive speech recognition technology offers a good trade-off, requires less data and offers good performance.

The self-adaptive speech recognition technologies can be classified into two categories according to their self-adaption spaces: self-adaptions in feature space and model space. The self-adaption in feature space tries to convert the relevant feature into irrelevant feature, thus matching with the irrelevant model. The self-adaption in model space tries to convert the irrelevant model into relevant model and thus match with the relevant feature. In short, the purpose of these two types of algorithms is to match the relevant feature with the irrelevant model.

2. Interspeech 2017 Paper Reading

2.1 Paper 1

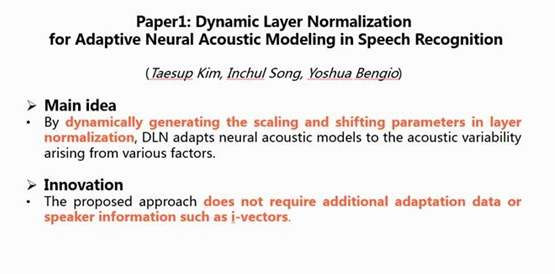

This article titled "Dynamic Layer Normalization for Adaptive Neural Acoustic Modeling in Speech Recognition" was presented by the University of Montréal. The main idea of this article is to convert the context-independent scaling and shifting parameters in layer normalization into context-dependent parameters and dynamically generate scaling and shifting parameters from the contextual information. This is called self-adaption in model space. The main innovative aspect lies in the fact that it does not require a self-adaptive stage (i.e., the self-adaption using the target domain enables learning knowledge on the target domain) or a relevant feature that includes speaker information, such as i-Vector.

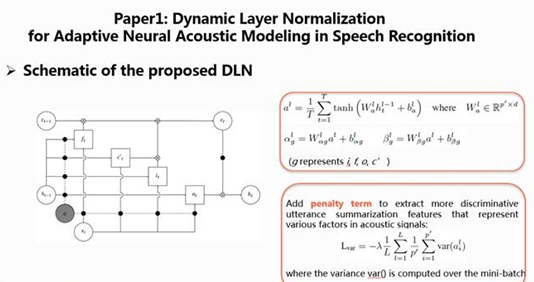

The formula for the proposed DLN is shown in the diagram on the upper right. First, use the hidden layer or input vector hl−1thtl−1 of the same size as the minibatch (TT) of the previous layer for summarization and get alal. After that, use the matrix of a linear transformation and bias to dynamically control scaling (alphalgalphagl) and shifting (betalgbetagl) parameters.

Meanwhile, on the basis of the previous CE training, add a penalty term (LvarLvar in the diagram above) to increase the variance in the sentence and extract a more discriminative summarization.

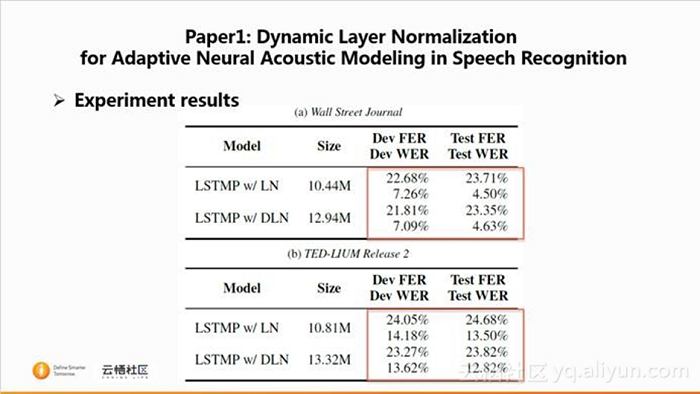

This paper further summarizes the experiments on 81-hour WSJ and 212-hour TED datasets including 283 and 5,076 speakers, respectively.

At first, the performances of LN and DLN are compared for the WSJ dataset, mainly including FER (frame error rate) and WER (word error rate) of the development and test sets. The result shows that the performance of DLN is better than that of LN, except for the WER of the test set. This paper argues that the number of speakers in the WSJ dataset is less and therefore the variability among sentences is unobvious, and that the WSJ dataset is recorded in quiet environment and therefore the sentences are smooth and steady and the DLN cannot take effect.

As illustrated in the second diagram, the result of the TED dataset shows that the four performance parameters of DLN are better than those of LN. The comparison between the WSJ and TED datasets shows that the TED dataset has better performance because the TED dataset has more speakers and sentences than the WSJ dataset and therefore the variability is more obvious. In this paper, we see that the dynamical LN is related to the variability of sentences. And on the whole, DLN is better than LN.

2.2 Paper 2

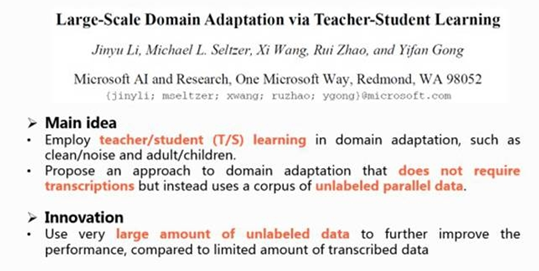

This paper titled "Large-Scale Domain Adaptation via Teacher-Student Learning" is from Microsoft. The main idea of this paper is to employ teacher/student structure in domain adaptation. This method does not require labeled data of target the domain, but requires parallel data of the training set. The innovative and valuable aspect of this method is the fact that it uses very large amount of unlabeled data and the output of teacher network to further improve the performance of student model.

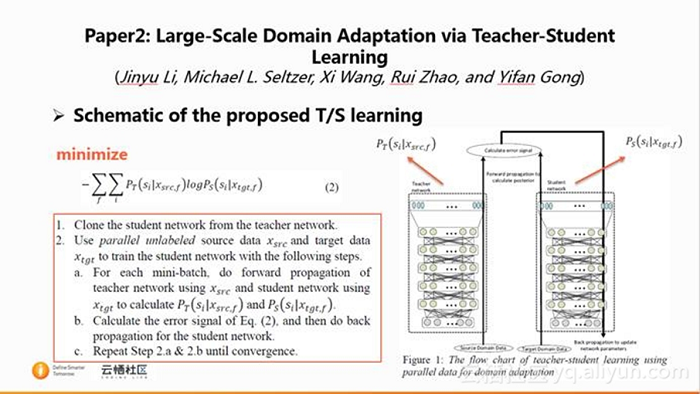

In the above diagram, "Teacher/student" is abbreviated as T/S. The flow chart of T/S training is illustrated on the upper right. In Figure 1, the teacher network is on the left and the student network is on the right, and the posterior probabilities of their output are set as PTPT and PSPS respectively.

The training process of student network is as follows:

- First, clone the initial student network from the teacher network.

- After that, use student domain data and teacher domain data to calculate posterior probabilities of the corresponding networks (PTPT and PSPS).

- Finally, use these two posterior probabilities to calculate the error signal and do back propagation for the student network.

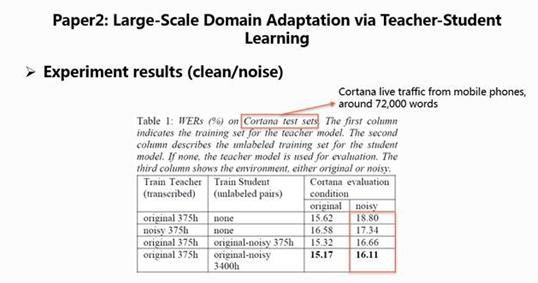

The experiment in this paper is based on the 375-hour Cortana data in English language. Different test sets are used for different domains.

For original/noisy environment, the experiment is conducted on the Cortana test set. At first, the teacher network is tested, and the test result shows that the performance of noisy speech is much worse than that of noise-free speech (18.8% vs. 15.62%). If a simulation approach is employed to train the teacher network, the test result shows improvement in the performance of noisy speech (17.34%), which is equivalent to the training on the student network using hard label. The T/S algorithm is used for Line 4 and Line 5; for the same quantity of data, the soft label is better than hard label (16.66% vs. 17.34%). Increase in the data of student network training to 3,400 hours would further enhance the performance (16.11%).

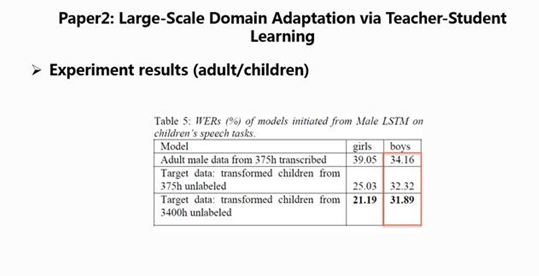

For adult/child experiment, first wipe off the 375-hour female and child data to generate an adult male model. The experiment result shows that the recognition performance of children is much worse, at 39.05 and 34.16, respectively. The usage of T/S algorithm in an original/noisy environment can further enhance the performance, and the expansion of data is beneficial to the performance.

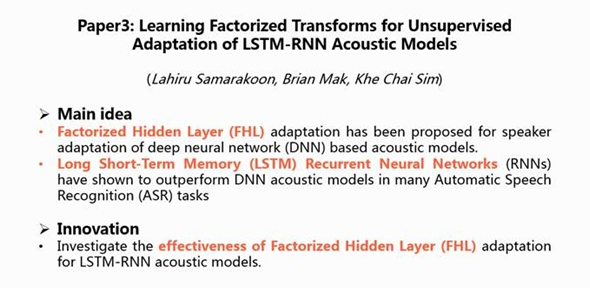

2.3 Paper 3

This paper is provided by the Hong Kong University of Science and Technology and Google. The main idea and innovative aspect behind this article is application of self-adaptive approach of the Factorized Hidden Layer (FHL) to LSTM-RNN.

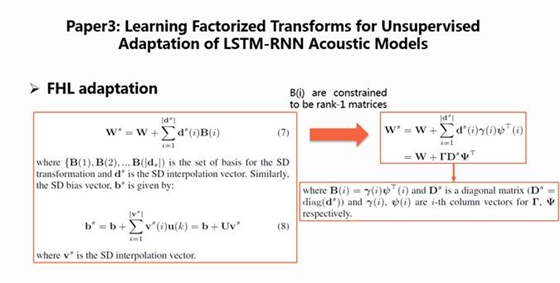

For the FHL adaptation algorithm, add a speaker-dependent web weight (WW) to the speaker-independent WW to generate the speaker-dependent web weight (WsWs). In formula (7), we can see that (B(1),B(2),...,B(i))(B(1),B(2),...,B(i)) is the set of the basis matrix for the SD transformation obtained through linear interpolation. Similarly, the neural network bias (bb) can be changed according to the speaker.

However, as the basis matrix brings in a large amount of parameters during the actual experiment, the basis matrix is constrained to be rank-1 and formula (7) is subject to alternation as shown on the upper right. As the basis matrix is rank-1, it can be expressed as a column vector (gamma(i)gamma(i)) multiplied by a row vector (psi(i)Tpsi(i)T). Meanwhile, the interpolation vector is expressed in the form of diagonal matrix (DsDs). The model training becomes easier with continued multiplication of GammaGamma, DsDs and PsiTPsiT.

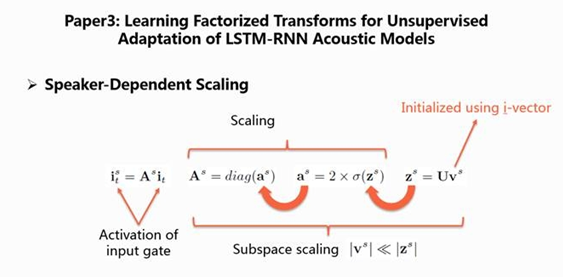

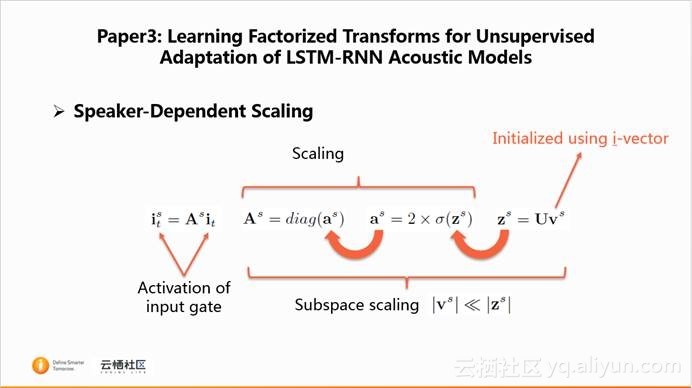

This article also introduces the speaker-dependent scaling. The activation value of the LSTM memory unit is used for speaker-dependent scaling. As shown in the formula, the speaker-dependent scaling can be done with zszs learning for such speaker. But there is a problem in this algorithm: the zszs dimension is related with the layer width of the network, and many parameters are required. Therefore, a subspace scaling approach is presented, whereby the zszs is controlled using a low-dimensional vector vsvs at a fixed dimension, and the vsvs dimension is much less than zszs, significantly reducing the amount of speaker-dependent parameters.

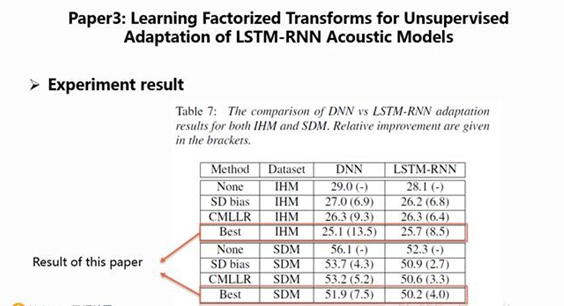

This paper is based on a 78-hour dataset. The diagram above shows the final WER generated using the algorithm in the article. In the diagram, "none" stands for no usage of self-adaptive algorithm, and "SD bias" for no usage of SD weighted matrix in the FHL other than SD bias. CMLLR is a self-adaptive algorithm. The algorithm in the article has achieved the best performance compared with SD bias and CMLLR. The improvement in performance of LSTM-RNN is less than that of DNN, indicating that the adaption on the LSTM-RNN is more difficult.

3. Conclusion

These papers from Interspeech 2017 present many interesting ideas from various researchers on self-adaptive technology. It has personally benefitted me a lot and I hope this article can educate you more on the tenets of self-adaptive technology.

4. References

[1] Kim T, Song I, Bengio Y. Dynamic Layer Normalization for Adaptive Neural Acoustic Modeling in Speech Recognition[J]. 2017.

[2] Li J, Seltzer M L, Wang X, et al. Large-Scale Domain Adaptation via Teacher-Student Learning[J]. 2017.

[3] Samarakoon L, Mak B, Sim K C. Learning Factorized Transforms for Unsupervised Adaptation of LSTM-RNN Acoustic Models[C]// INTERSPEECH. 2017:744-748.