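Without further ado, let's get straight to the practical part.

First, please read the earlier post of mine listed below. It gives a fresh overview of how Oozie is installed.

Notes on installing Oozie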

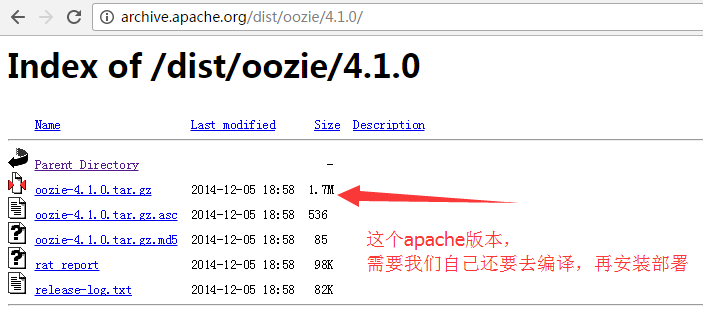

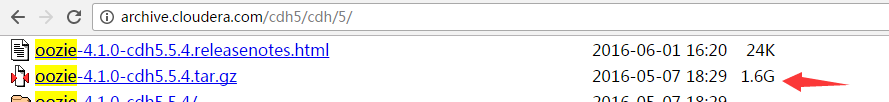

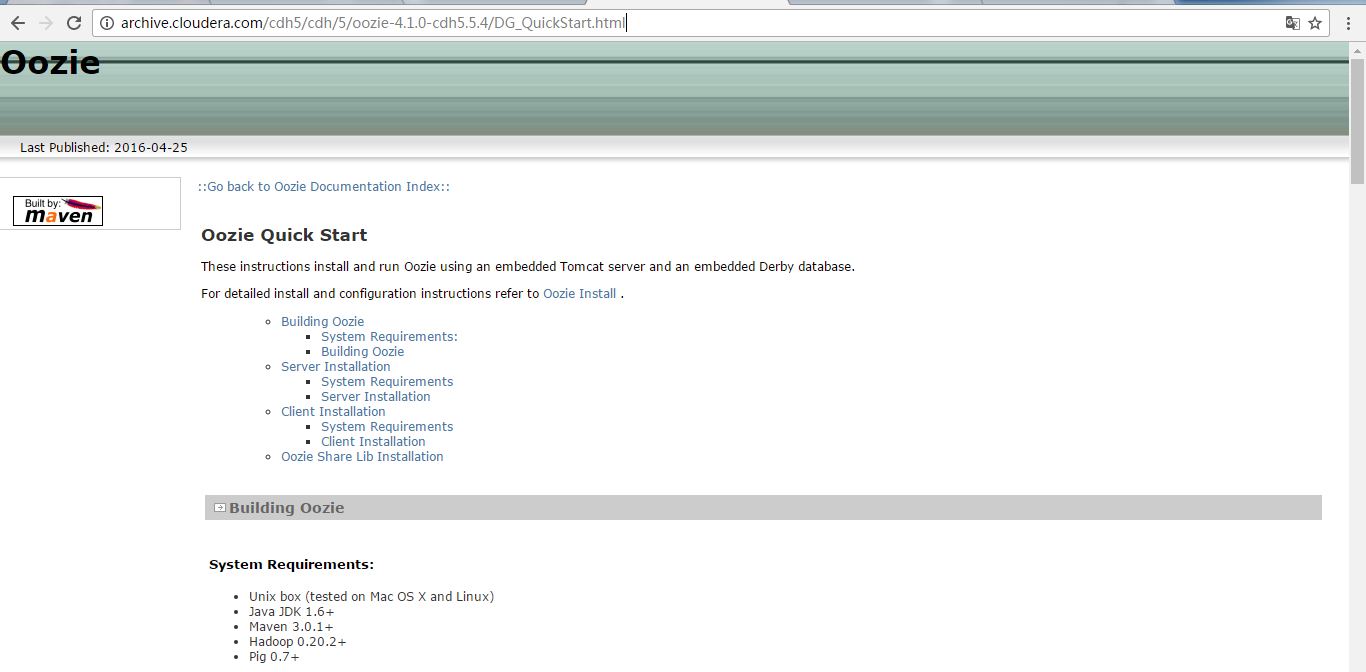

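This post covers installing Oozie by hand. To avoid the tedious build that the Apache release requires, I use the CDH release directly, which ships prebuilt as oozie-4.1.0-cdh5.5.4.tar.gz.

If you want to use the Apache release, you have to build it yourself.

The Apache release is only about 1.7 MB. The CDH tarball, which is already built for us, is over 1 GB (the directory listing further down shows 1737004796 bytes).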

Step 1: Download oozie-4.1.0-cdh5.5.4.tar.gz

http://archive.cloudera.com/cdh5/cdh/5/

You do not have to download it locally and upload it as I do here; you can also download it directly on the server.
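For the download-on-the-server route, a minimal sketch might look like the following. It assumes wget is installed and that the tarball sits directly under the archive directory above with the file name used in this post; the full URL and the function names are my assumptions, so check the directory listing first.

```shell
# Sketch: download the CDH Oozie tarball directly on the server.
# ASSUMPTION: the file is published directly under the archive directory from this post.
BASE_URL="http://archive.cloudera.com/cdh5/cdh/5"
TARBALL="oozie-4.1.0-cdh5.5.4.tar.gz"
DEST_DIR="${DEST_DIR:-/home/hadoop/app}"

# Build the full URL from the directory and the file name.
oozie_url() {
  printf '%s/%s\n' "$BASE_URL" "$TARBALL"
}

# Download into DEST_DIR (-P) and resume a partial download if one exists (-c).
download_oozie() {
  wget -c -P "$DEST_DIR" "$(oozie_url)"
}

# Usage:
#   download_oozie
```

Nothing is downloaded until download_oozie is called, so the URL can be printed and checked with oozie_url first.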

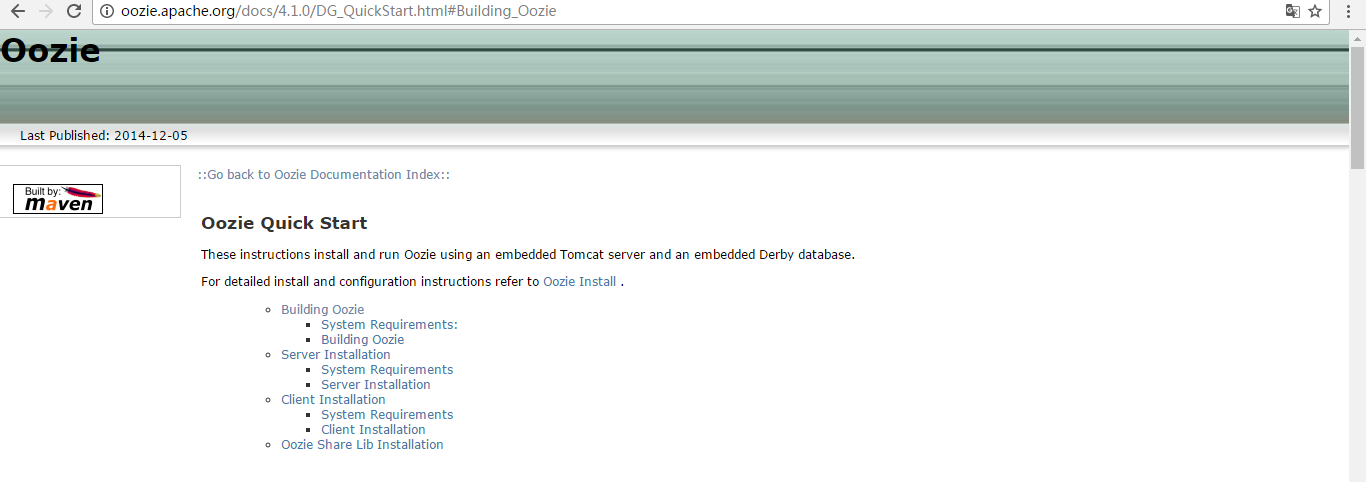

Step 2: Building Apache Oozie 4.1.0, following the official documentation (left for you to try)

http://oozie.apache.org/docs/4.1.0/DG_QuickStart.html#Building_Oozie

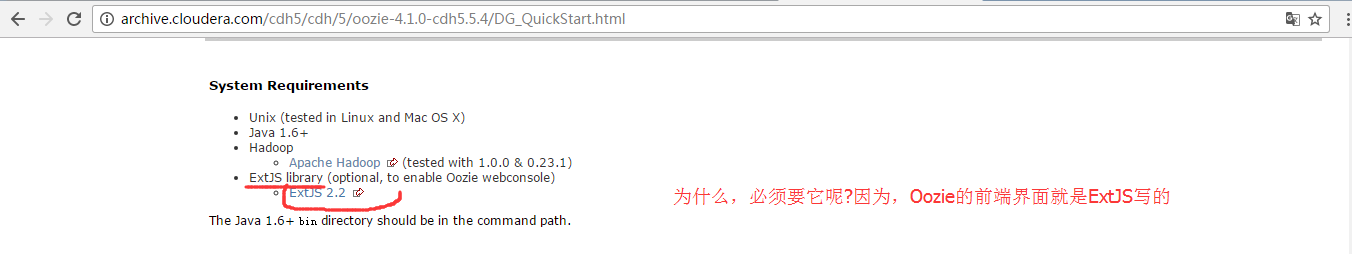

The above are the minimum requirements stated on the official site.

That said, this is the environment I currently recommend:

Hadoop 2.6.0, Oozie 4.2.0, JDK 1.7, Maven 3.3.9, Pig 0.15.0, Hive 1.2.1, Sqoop 1.99.6

Step 2 (alternative): Building Cloudera Oozie 4.1.0, following the official documentation (the focus of this post)

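You can of course also build and install from the CDH source, which I will not cover in detail here. The main thing to watch is the pom.xml file inside the unpacked source package.

This file matters: change the versions of Hive, HBase, and the other components in it to the versions installed on your own machine instead of keeping the defaults. Apart from that detail there is not much to it, so try it yourself if you are interested.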
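To see which versions a pom.xml currently declares before editing it, a small sketch like this could help. It assumes the versions are written as `<name.version>value</name.version>` properties on a single line; the property names in the pattern and the helper name list_pom_versions are my assumptions, not taken from the post.

```shell
# Sketch: list component version properties declared in an Oozie pom.xml
# so they can be compared with what is installed on the machine.
# ASSUMPTION: each version is declared on one line as <name.version>value</name.version>.
list_pom_versions() {
  pom="$1"
  grep -E '<(hadoop|hive|hbase|pig|sqoop)\.version>' "$pom" \
    | sed -E 's/^[[:space:]]*<([a-z]+)\.version>([^<]*)<.*/\1 \2/'
}

# Usage (the path is a placeholder for wherever the source was unpacked):
#   list_pom_versions /path/to/oozie-source/pom.xml
```

Each output line is a component name followed by its declared version, which can then be compared with the versions under /home/hadoop/app.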

Note: the Apache release of Oozie has to be built by yourself. Because my environment is CDH 5, I can use the prebuilt CDH release directly (that is, oozie-4.1.0-cdh5.5.4.tar.gz).

Oozie Server Architecture

As you can see, the Oozie server runs inside Tomcat.

Installing the Oozie Server

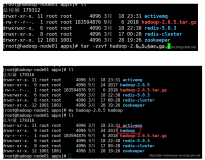

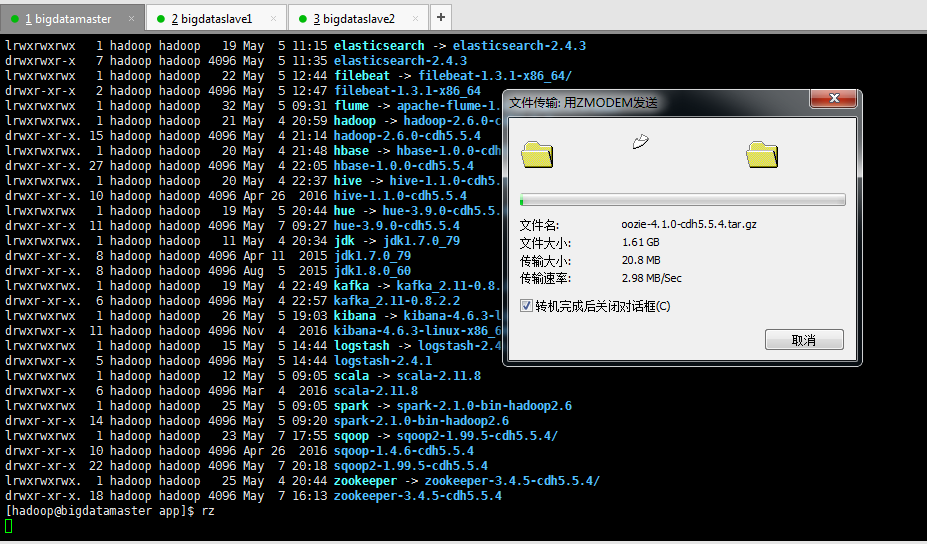

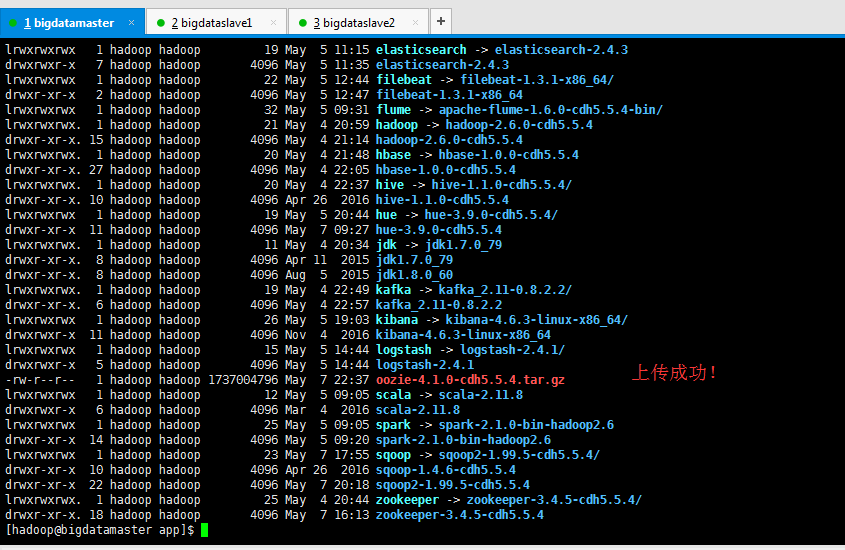

Upload

This takes a while.

[hadoop@bigdatamaster app]$ pwd /home/hadoop/app [hadoop@bigdatamaster app]$ ll total 68 drwxr-xr-x 8 hadoop hadoop 4096 Apr 26 2016 apache-flume-1.6.0-cdh5.5.4-bin lrwxrwxrwx 1 hadoop hadoop 19 May 5 11:15 elasticsearch -> elasticsearch-2.4.3 drwxrwxr-x 7 hadoop hadoop 4096 May 5 11:35 elasticsearch-2.4.3 lrwxrwxrwx 1 hadoop hadoop 22 May 5 12:44 filebeat -> filebeat-1.3.1-x86_64/ drwxr-xr-x 2 hadoop hadoop 4096 May 5 12:47 filebeat-1.3.1-x86_64 lrwxrwxrwx 1 hadoop hadoop 32 May 5 09:31 flume -> apache-flume-1.6.0-cdh5.5.4-bin/ lrwxrwxrwx. 1 hadoop hadoop 21 May 4 20:59 hadoop -> hadoop-2.6.0-cdh5.5.4 drwxr-xr-x. 15 hadoop hadoop 4096 May 4 21:14 hadoop-2.6.0-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 20 May 4 21:48 hbase -> hbase-1.0.0-cdh5.5.4 drwxr-xr-x. 27 hadoop hadoop 4096 May 4 22:05 hbase-1.0.0-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 20 May 4 22:37 hive -> hive-1.1.0-cdh5.5.4/ drwxr-xr-x. 10 hadoop hadoop 4096 Apr 26 2016 hive-1.1.0-cdh5.5.4 lrwxrwxrwx 1 hadoop hadoop 19 May 5 20:44 hue -> hue-3.9.0-cdh5.5.4/ drwxr-xr-x 11 hadoop hadoop 4096 May 7 09:27 hue-3.9.0-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 11 May 4 20:34 jdk -> jdk1.7.0_79 drwxr-xr-x. 8 hadoop hadoop 4096 Apr 11 2015 jdk1.7.0_79 drwxr-xr-x. 8 hadoop hadoop 4096 Aug 5 2015 jdk1.8.0_60 lrwxrwxrwx. 1 hadoop hadoop 19 May 4 22:49 kafka -> kafka_2.11-0.8.2.2/ drwxr-xr-x. 
6 hadoop hadoop 4096 May 4 22:57 kafka_2.11-0.8.2.2 lrwxrwxrwx 1 hadoop hadoop 26 May 5 19:03 kibana -> kibana-4.6.3-linux-x86_64/ drwxrwxr-x 11 hadoop hadoop 4096 Nov 4 2016 kibana-4.6.3-linux-x86_64 lrwxrwxrwx 1 hadoop hadoop 15 May 5 14:44 logstash -> logstash-2.4.1/ drwxrwxr-x 5 hadoop hadoop 4096 May 5 14:44 logstash-2.4.1 lrwxrwxrwx 1 hadoop hadoop 12 May 5 09:05 scala -> scala-2.11.8 drwxrwxr-x 6 hadoop hadoop 4096 Mar 4 2016 scala-2.11.8 lrwxrwxrwx 1 hadoop hadoop 25 May 5 09:05 spark -> spark-2.1.0-bin-hadoop2.6 drwxr-xr-x 14 hadoop hadoop 4096 May 5 09:20 spark-2.1.0-bin-hadoop2.6 lrwxrwxrwx 1 hadoop hadoop 23 May 7 17:55 sqoop -> sqoop2-1.99.5-cdh5.5.4/ drwxr-xr-x 10 hadoop hadoop 4096 Apr 26 2016 sqoop-1.4.6-cdh5.5.4 drwxr-xr-x 22 hadoop hadoop 4096 May 7 20:18 sqoop2-1.99.5-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 25 May 4 20:44 zookeeper -> zookeeper-3.4.5-cdh5.5.4/ drwxr-xr-x. 18 hadoop hadoop 4096 May 7 16:13 zookeeper-3.4.5-cdh5.5.4 [hadoop@bigdatamaster app]$ rz [hadoop@bigdatamaster app]$ ll total 1696368 drwxr-xr-x 8 hadoop hadoop 4096 Apr 26 2016 apache-flume-1.6.0-cdh5.5.4-bin lrwxrwxrwx 1 hadoop hadoop 19 May 5 11:15 elasticsearch -> elasticsearch-2.4.3 drwxrwxr-x 7 hadoop hadoop 4096 May 5 11:35 elasticsearch-2.4.3 lrwxrwxrwx 1 hadoop hadoop 22 May 5 12:44 filebeat -> filebeat-1.3.1-x86_64/ drwxr-xr-x 2 hadoop hadoop 4096 May 5 12:47 filebeat-1.3.1-x86_64 lrwxrwxrwx 1 hadoop hadoop 32 May 5 09:31 flume -> apache-flume-1.6.0-cdh5.5.4-bin/ lrwxrwxrwx. 1 hadoop hadoop 21 May 4 20:59 hadoop -> hadoop-2.6.0-cdh5.5.4 drwxr-xr-x. 15 hadoop hadoop 4096 May 4 21:14 hadoop-2.6.0-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 20 May 4 21:48 hbase -> hbase-1.0.0-cdh5.5.4 drwxr-xr-x. 27 hadoop hadoop 4096 May 4 22:05 hbase-1.0.0-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 20 May 4 22:37 hive -> hive-1.1.0-cdh5.5.4/ drwxr-xr-x. 
10 hadoop hadoop 4096 Apr 26 2016 hive-1.1.0-cdh5.5.4 lrwxrwxrwx 1 hadoop hadoop 19 May 5 20:44 hue -> hue-3.9.0-cdh5.5.4/ drwxr-xr-x 11 hadoop hadoop 4096 May 7 09:27 hue-3.9.0-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 11 May 4 20:34 jdk -> jdk1.7.0_79 drwxr-xr-x. 8 hadoop hadoop 4096 Apr 11 2015 jdk1.7.0_79 drwxr-xr-x. 8 hadoop hadoop 4096 Aug 5 2015 jdk1.8.0_60 lrwxrwxrwx. 1 hadoop hadoop 19 May 4 22:49 kafka -> kafka_2.11-0.8.2.2/ drwxr-xr-x. 6 hadoop hadoop 4096 May 4 22:57 kafka_2.11-0.8.2.2 lrwxrwxrwx 1 hadoop hadoop 26 May 5 19:03 kibana -> kibana-4.6.3-linux-x86_64/ drwxrwxr-x 11 hadoop hadoop 4096 Nov 4 2016 kibana-4.6.3-linux-x86_64 lrwxrwxrwx 1 hadoop hadoop 15 May 5 14:44 logstash -> logstash-2.4.1/ drwxrwxr-x 5 hadoop hadoop 4096 May 5 14:44 logstash-2.4.1 -rw-r--r-- 1 hadoop hadoop 1737004796 May 7 22:37 oozie-4.1.0-cdh5.5.4.tar.gz lrwxrwxrwx 1 hadoop hadoop 12 May 5 09:05 scala -> scala-2.11.8 drwxrwxr-x 6 hadoop hadoop 4096 Mar 4 2016 scala-2.11.8 lrwxrwxrwx 1 hadoop hadoop 25 May 5 09:05 spark -> spark-2.1.0-bin-hadoop2.6 drwxr-xr-x 14 hadoop hadoop 4096 May 5 09:20 spark-2.1.0-bin-hadoop2.6 lrwxrwxrwx 1 hadoop hadoop 23 May 7 17:55 sqoop -> sqoop2-1.99.5-cdh5.5.4/ drwxr-xr-x 10 hadoop hadoop 4096 Apr 26 2016 sqoop-1.4.6-cdh5.5.4 drwxr-xr-x 22 hadoop hadoop 4096 May 7 20:18 sqoop2-1.99.5-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 25 May 4 20:44 zookeeper -> zookeeper-3.4.5-cdh5.5.4/ drwxr-xr-x. 18 hadoop hadoop 4096 May 7 16:13 zookeeper-3.4.5-cdh5.5.4 [hadoop@bigdatamaster app]$

Extract

[hadoop@bigdatamaster app]$ pwd /home/hadoop/app [hadoop@bigdatamaster app]$ ll total 1696368 drwxr-xr-x 8 hadoop hadoop 4096 Apr 26 2016 apache-flume-1.6.0-cdh5.5.4-bin lrwxrwxrwx 1 hadoop hadoop 19 May 5 11:15 elasticsearch -> elasticsearch-2.4.3 drwxrwxr-x 7 hadoop hadoop 4096 May 5 11:35 elasticsearch-2.4.3 lrwxrwxrwx 1 hadoop hadoop 22 May 5 12:44 filebeat -> filebeat-1.3.1-x86_64/ drwxr-xr-x 2 hadoop hadoop 4096 May 5 12:47 filebeat-1.3.1-x86_64 lrwxrwxrwx 1 hadoop hadoop 32 May 5 09:31 flume -> apache-flume-1.6.0-cdh5.5.4-bin/ lrwxrwxrwx. 1 hadoop hadoop 21 May 4 20:59 hadoop -> hadoop-2.6.0-cdh5.5.4 drwxr-xr-x. 15 hadoop hadoop 4096 May 4 21:14 hadoop-2.6.0-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 20 May 4 21:48 hbase -> hbase-1.0.0-cdh5.5.4 drwxr-xr-x. 27 hadoop hadoop 4096 May 4 22:05 hbase-1.0.0-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 20 May 4 22:37 hive -> hive-1.1.0-cdh5.5.4/ drwxr-xr-x. 10 hadoop hadoop 4096 Apr 26 2016 hive-1.1.0-cdh5.5.4 lrwxrwxrwx 1 hadoop hadoop 19 May 5 20:44 hue -> hue-3.9.0-cdh5.5.4/ drwxr-xr-x 11 hadoop hadoop 4096 May 7 09:27 hue-3.9.0-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 11 May 4 20:34 jdk -> jdk1.7.0_79 drwxr-xr-x. 8 hadoop hadoop 4096 Apr 11 2015 jdk1.7.0_79 drwxr-xr-x. 8 hadoop hadoop 4096 Aug 5 2015 jdk1.8.0_60 lrwxrwxrwx. 1 hadoop hadoop 19 May 4 22:49 kafka -> kafka_2.11-0.8.2.2/ drwxr-xr-x. 
6 hadoop hadoop 4096 May 4 22:57 kafka_2.11-0.8.2.2 lrwxrwxrwx 1 hadoop hadoop 26 May 5 19:03 kibana -> kibana-4.6.3-linux-x86_64/ drwxrwxr-x 11 hadoop hadoop 4096 Nov 4 2016 kibana-4.6.3-linux-x86_64 lrwxrwxrwx 1 hadoop hadoop 15 May 5 14:44 logstash -> logstash-2.4.1/ drwxrwxr-x 5 hadoop hadoop 4096 May 5 14:44 logstash-2.4.1 -rw-r--r-- 1 hadoop hadoop 1737004796 May 7 22:37 oozie-4.1.0-cdh5.5.4.tar.gz lrwxrwxrwx 1 hadoop hadoop 12 May 5 09:05 scala -> scala-2.11.8 drwxrwxr-x 6 hadoop hadoop 4096 Mar 4 2016 scala-2.11.8 lrwxrwxrwx 1 hadoop hadoop 25 May 5 09:05 spark -> spark-2.1.0-bin-hadoop2.6 drwxr-xr-x 14 hadoop hadoop 4096 May 5 09:20 spark-2.1.0-bin-hadoop2.6 lrwxrwxrwx 1 hadoop hadoop 23 May 7 17:55 sqoop -> sqoop2-1.99.5-cdh5.5.4/ drwxr-xr-x 10 hadoop hadoop 4096 Apr 26 2016 sqoop-1.4.6-cdh5.5.4 drwxr-xr-x 22 hadoop hadoop 4096 May 7 20:18 sqoop2-1.99.5-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 25 May 4 20:44 zookeeper -> zookeeper-3.4.5-cdh5.5.4/ drwxr-xr-x. 18 hadoop hadoop 4096 May 7 16:13 zookeeper-3.4.5-cdh5.5.4 [hadoop@bigdatamaster app]$ tar -zxvf oozie-4.1.0-cdh5.5.4.tar.gz

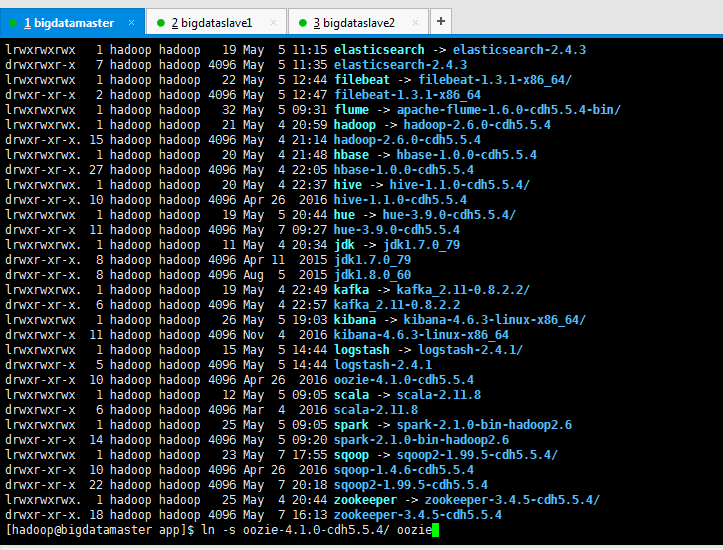

Create a symlink (so the same path keeps working across versions)

[hadoop@bigdatamaster app]$ pwd /home/hadoop/app [hadoop@bigdatamaster app]$ ll total 72 drwxr-xr-x 8 hadoop hadoop 4096 Apr 26 2016 apache-flume-1.6.0-cdh5.5.4-bin lrwxrwxrwx 1 hadoop hadoop 19 May 5 11:15 elasticsearch -> elasticsearch-2.4.3 drwxrwxr-x 7 hadoop hadoop 4096 May 5 11:35 elasticsearch-2.4.3 lrwxrwxrwx 1 hadoop hadoop 22 May 5 12:44 filebeat -> filebeat-1.3.1-x86_64/ drwxr-xr-x 2 hadoop hadoop 4096 May 5 12:47 filebeat-1.3.1-x86_64 lrwxrwxrwx 1 hadoop hadoop 32 May 5 09:31 flume -> apache-flume-1.6.0-cdh5.5.4-bin/ lrwxrwxrwx. 1 hadoop hadoop 21 May 4 20:59 hadoop -> hadoop-2.6.0-cdh5.5.4 drwxr-xr-x. 15 hadoop hadoop 4096 May 4 21:14 hadoop-2.6.0-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 20 May 4 21:48 hbase -> hbase-1.0.0-cdh5.5.4 drwxr-xr-x. 27 hadoop hadoop 4096 May 4 22:05 hbase-1.0.0-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 20 May 4 22:37 hive -> hive-1.1.0-cdh5.5.4/ drwxr-xr-x. 10 hadoop hadoop 4096 Apr 26 2016 hive-1.1.0-cdh5.5.4 lrwxrwxrwx 1 hadoop hadoop 19 May 5 20:44 hue -> hue-3.9.0-cdh5.5.4/ drwxr-xr-x 11 hadoop hadoop 4096 May 7 09:27 hue-3.9.0-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 11 May 4 20:34 jdk -> jdk1.7.0_79 drwxr-xr-x. 8 hadoop hadoop 4096 Apr 11 2015 jdk1.7.0_79 drwxr-xr-x. 8 hadoop hadoop 4096 Aug 5 2015 jdk1.8.0_60 lrwxrwxrwx. 1 hadoop hadoop 19 May 4 22:49 kafka -> kafka_2.11-0.8.2.2/ drwxr-xr-x. 
6 hadoop hadoop 4096 May 4 22:57 kafka_2.11-0.8.2.2 lrwxrwxrwx 1 hadoop hadoop 26 May 5 19:03 kibana -> kibana-4.6.3-linux-x86_64/ drwxrwxr-x 11 hadoop hadoop 4096 Nov 4 2016 kibana-4.6.3-linux-x86_64 lrwxrwxrwx 1 hadoop hadoop 15 May 5 14:44 logstash -> logstash-2.4.1/ drwxrwxr-x 5 hadoop hadoop 4096 May 5 14:44 logstash-2.4.1 drwxr-xr-x 10 hadoop hadoop 4096 Apr 26 2016 oozie-4.1.0-cdh5.5.4 lrwxrwxrwx 1 hadoop hadoop 12 May 5 09:05 scala -> scala-2.11.8 drwxrwxr-x 6 hadoop hadoop 4096 Mar 4 2016 scala-2.11.8 lrwxrwxrwx 1 hadoop hadoop 25 May 5 09:05 spark -> spark-2.1.0-bin-hadoop2.6 drwxr-xr-x 14 hadoop hadoop 4096 May 5 09:20 spark-2.1.0-bin-hadoop2.6 lrwxrwxrwx 1 hadoop hadoop 23 May 7 17:55 sqoop -> sqoop2-1.99.5-cdh5.5.4/ drwxr-xr-x 10 hadoop hadoop 4096 Apr 26 2016 sqoop-1.4.6-cdh5.5.4 drwxr-xr-x 22 hadoop hadoop 4096 May 7 20:18 sqoop2-1.99.5-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 25 May 4 20:44 zookeeper -> zookeeper-3.4.5-cdh5.5.4/ drwxr-xr-x. 18 hadoop hadoop 4096 May 7 16:13 zookeeper-3.4.5-cdh5.5.4 [hadoop@bigdatamaster app]$ ln -s oozie-4.1.0-cdh5.5.4/ oozie [hadoop@bigdatamaster app]$ ll total 72 drwxr-xr-x 8 hadoop hadoop 4096 Apr 26 2016 apache-flume-1.6.0-cdh5.5.4-bin lrwxrwxrwx 1 hadoop hadoop 19 May 5 11:15 elasticsearch -> elasticsearch-2.4.3 drwxrwxr-x 7 hadoop hadoop 4096 May 5 11:35 elasticsearch-2.4.3 lrwxrwxrwx 1 hadoop hadoop 22 May 5 12:44 filebeat -> filebeat-1.3.1-x86_64/ drwxr-xr-x 2 hadoop hadoop 4096 May 5 12:47 filebeat-1.3.1-x86_64 lrwxrwxrwx 1 hadoop hadoop 32 May 5 09:31 flume -> apache-flume-1.6.0-cdh5.5.4-bin/ lrwxrwxrwx. 1 hadoop hadoop 21 May 4 20:59 hadoop -> hadoop-2.6.0-cdh5.5.4 drwxr-xr-x. 15 hadoop hadoop 4096 May 4 21:14 hadoop-2.6.0-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 20 May 4 21:48 hbase -> hbase-1.0.0-cdh5.5.4 drwxr-xr-x. 27 hadoop hadoop 4096 May 4 22:05 hbase-1.0.0-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 20 May 4 22:37 hive -> hive-1.1.0-cdh5.5.4/ drwxr-xr-x. 
10 hadoop hadoop 4096 Apr 26 2016 hive-1.1.0-cdh5.5.4 lrwxrwxrwx 1 hadoop hadoop 19 May 5 20:44 hue -> hue-3.9.0-cdh5.5.4/ drwxr-xr-x 11 hadoop hadoop 4096 May 7 09:27 hue-3.9.0-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 11 May 4 20:34 jdk -> jdk1.7.0_79 drwxr-xr-x. 8 hadoop hadoop 4096 Apr 11 2015 jdk1.7.0_79 drwxr-xr-x. 8 hadoop hadoop 4096 Aug 5 2015 jdk1.8.0_60 lrwxrwxrwx. 1 hadoop hadoop 19 May 4 22:49 kafka -> kafka_2.11-0.8.2.2/ drwxr-xr-x. 6 hadoop hadoop 4096 May 4 22:57 kafka_2.11-0.8.2.2 lrwxrwxrwx 1 hadoop hadoop 26 May 5 19:03 kibana -> kibana-4.6.3-linux-x86_64/ drwxrwxr-x 11 hadoop hadoop 4096 Nov 4 2016 kibana-4.6.3-linux-x86_64 lrwxrwxrwx 1 hadoop hadoop 15 May 5 14:44 logstash -> logstash-2.4.1/ drwxrwxr-x 5 hadoop hadoop 4096 May 5 14:44 logstash-2.4.1 lrwxrwxrwx 1 hadoop hadoop 21 May 8 10:23 oozie -> oozie-4.1.0-cdh5.5.4/ drwxr-xr-x 10 hadoop hadoop 4096 Apr 26 2016 oozie-4.1.0-cdh5.5.4 lrwxrwxrwx 1 hadoop hadoop 12 May 5 09:05 scala -> scala-2.11.8 drwxrwxr-x 6 hadoop hadoop 4096 Mar 4 2016 scala-2.11.8 lrwxrwxrwx 1 hadoop hadoop 25 May 5 09:05 spark -> spark-2.1.0-bin-hadoop2.6 drwxr-xr-x 14 hadoop hadoop 4096 May 5 09:20 spark-2.1.0-bin-hadoop2.6 lrwxrwxrwx 1 hadoop hadoop 23 May 7 17:55 sqoop -> sqoop2-1.99.5-cdh5.5.4/ drwxr-xr-x 10 hadoop hadoop 4096 Apr 26 2016 sqoop-1.4.6-cdh5.5.4 drwxr-xr-x 22 hadoop hadoop 4096 May 7 20:18 sqoop2-1.99.5-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 25 May 4 20:44 zookeeper -> zookeeper-3.4.5-cdh5.5.4/ drwxr-xr-x. 18 hadoop hadoop 4096 May 7 16:13 zookeeper-3.4.5-cdh5.5.4 [hadoop@bigdatamaster app]$
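The extract-and-link steps buried in the listings above can be reduced to a short sketch. The helper name link_release is hypothetical; the point is that re-running it with a different release directory repoints the same `oozie` name, which is what makes the symlink convenient when versions change.

```shell
# Sketch: point a version-neutral name at one unpacked release.
# -s makes a symbolic link, -f replaces an existing link,
# -n stops ln from following the old link and creating the new one inside its target.
link_release() {
  app_dir="$1"; release="$2"; name="$3"
  ( cd "$app_dir" && ln -sfn "$release" "$name" )
}

# Usage with this post's layout:
#   cd /home/hadoop/app && tar -zxvf oozie-4.1.0-cdh5.5.4.tar.gz
#   link_release /home/hadoop/app oozie-4.1.0-cdh5.5.4 oozie
```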

Set the environment variables

[hadoop@bigdatamaster ~]$ su root

Password:

[root@bigdatamaster hadoop]# vim /etc/profile

#oozie

export OOZIE_HOME=/home/hadoop/app/oozie

export PATH=$PATH:$OOZIE_HOME/bin

[root@bigdatamaster hadoop]# source /etc/profile
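After sourcing /etc/profile it is worth confirming that the variables are actually visible in the current shell. A minimal sketch, where path_contains and check_oozie_env are hypothetical helper names:

```shell
# Sketch: verify that OOZIE_HOME is set and that its bin directory is on PATH.

# Return 0 if the given directory is one of the PATH entries.
path_contains() {
  case ":$PATH:" in
    *":$1:"*) return 0 ;;
    *) return 1 ;;
  esac
}

check_oozie_env() {
  if [ -z "${OOZIE_HOME:-}" ]; then
    echo "OOZIE_HOME is not set"
    return 1
  fi
  if ! path_contains "$OOZIE_HOME/bin"; then
    echo "$OOZIE_HOME/bin is not on PATH"
    return 1
  fi
  echo "OOZIE_HOME=$OOZIE_HOME"
}

# Usage:
#   source /etc/profile && check_oozie_env
```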

A first look at the Oozie directory layout

[hadoop@bigdatamaster app]$ cd oozie
[hadoop@bigdatamaster oozie]$ pwd
/home/hadoop/app/oozie
[hadoop@bigdatamaster oozie]$ ll
total 1014180
drwxr-xr-x 2 hadoop hadoop 4096 Apr 26 2016 bin
drwxr-xr-x 4 hadoop hadoop 4096 Apr 26 2016 conf
drwxr-xr-x 6 hadoop hadoop 4096 Apr 26 2016 docs
drwxr-xr-x 2 hadoop hadoop 4096 Apr 26 2016 lib
drwxr-xr-x 2 hadoop hadoop 12288 Apr 26 2016 libtools
-rw-r--r-- 1 hadoop hadoop 37664 Apr 26 2016 LICENSE.txt
-rw-r--r-- 1 hadoop hadoop 909 Apr 26 2016 NOTICE.txt
drwxr-xr-x 2 hadoop hadoop 4096 Apr 26 2016 oozie-core
-rwxr-xr-x 1 hadoop hadoop 46275 Apr 26 2016 oozie-examples.tar.gz
-rwxr-xr-x 1 hadoop hadoop 77456039 Apr 26 2016 oozie-hadooplibs-4.1.0-cdh5.5.4.tar.gz
drwxr-xr-x 9 hadoop hadoop 4096 Apr 26 2016 oozie-server
-r--r--r-- 1 hadoop hadoop 428704179 Apr 26 2016 oozie-sharelib-4.1.0-cdh5.5.4.tar.gz
-r--r--r-- 1 hadoop hadoop 429103879 Apr 26 2016 oozie-sharelib-4.1.0-cdh5.5.4-yarn.tar.gz
-rwxr-xr-x 1 hadoop hadoop 103020321 Apr 26 2016 oozie.war
-rw-r--r-- 1 hadoop hadoop 83521 Apr 26 2016 release-log.txt
drwxr-xr-x 21 hadoop hadoop 4096 Apr 26 2016 src
[hadoop@bigdatamaster oozie]$

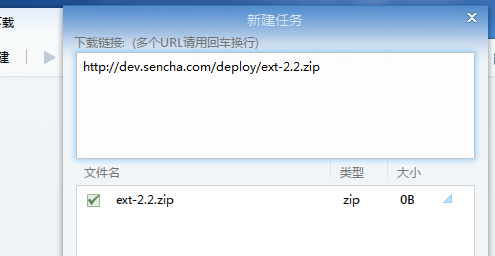

Click it to download, then upload the file to the server.

http://dev.sencha.com/deploy/ext-2.2.zip

I recommend using a download manager such as Xunlei (Thunder).

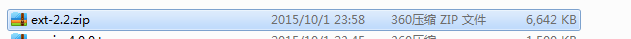

For now I upload it to /home/hadoop. In the end it only needs to be placed in $OOZIE_HOME/libext, but that directory does not exist yet and has to be created.

[hadoop@bigdatamaster ~]$ pwd /home/hadoop [hadoop@bigdatamaster ~]$ ll total 44 drwxrwxr-x. 20 hadoop hadoop 4096 May 8 10:23 app drwxrwxr-x. 7 hadoop hadoop 4096 May 5 11:22 data drwxr-xr-x 2 hadoop hadoop 4096 May 5 08:51 Desktop drwxr-xr-x 2 hadoop hadoop 4096 May 5 08:51 Documents drwxr-xr-x 2 hadoop hadoop 4096 May 5 08:51 Downloads drwxr-xr-x 2 hadoop hadoop 4096 May 5 08:51 Music drwxr-xr-x 2 hadoop hadoop 4096 May 5 08:51 Pictures drwxr-xr-x 2 hadoop hadoop 4096 May 5 08:51 Public drwxrwxr-x. 2 hadoop hadoop 4096 May 4 17:46 shell drwxr-xr-x 2 hadoop hadoop 4096 May 5 08:51 Templates drwxr-xr-x 2 hadoop hadoop 4096 May 5 08:51 Videos [hadoop@bigdatamaster ~]$ rz [hadoop@bigdatamaster ~]$ ll total 6688 drwxrwxr-x. 20 hadoop hadoop 4096 May 8 10:23 app drwxrwxr-x. 7 hadoop hadoop 4096 May 5 11:22 data drwxr-xr-x 2 hadoop hadoop 4096 May 5 08:51 Desktop drwxr-xr-x 2 hadoop hadoop 4096 May 5 08:51 Documents drwxr-xr-x 2 hadoop hadoop 4096 May 5 08:51 Downloads -rw-r--r-- 1 hadoop hadoop 6800612 Oct 1 2015 ext-2.2.zip drwxr-xr-x 2 hadoop hadoop 4096 May 5 08:51 Music drwxr-xr-x 2 hadoop hadoop 4096 May 5 08:51 Pictures drwxr-xr-x 2 hadoop hadoop 4096 May 5 08:51 Public drwxrwxr-x. 2 hadoop hadoop 4096 May 4 17:46 shell drwxr-xr-x 2 hadoop hadoop 4096 May 5 08:51 Templates drwxr-xr-x 2 hadoop hadoop 4096 May 5 08:51 Videos [hadoop@bigdatamaster ~]$

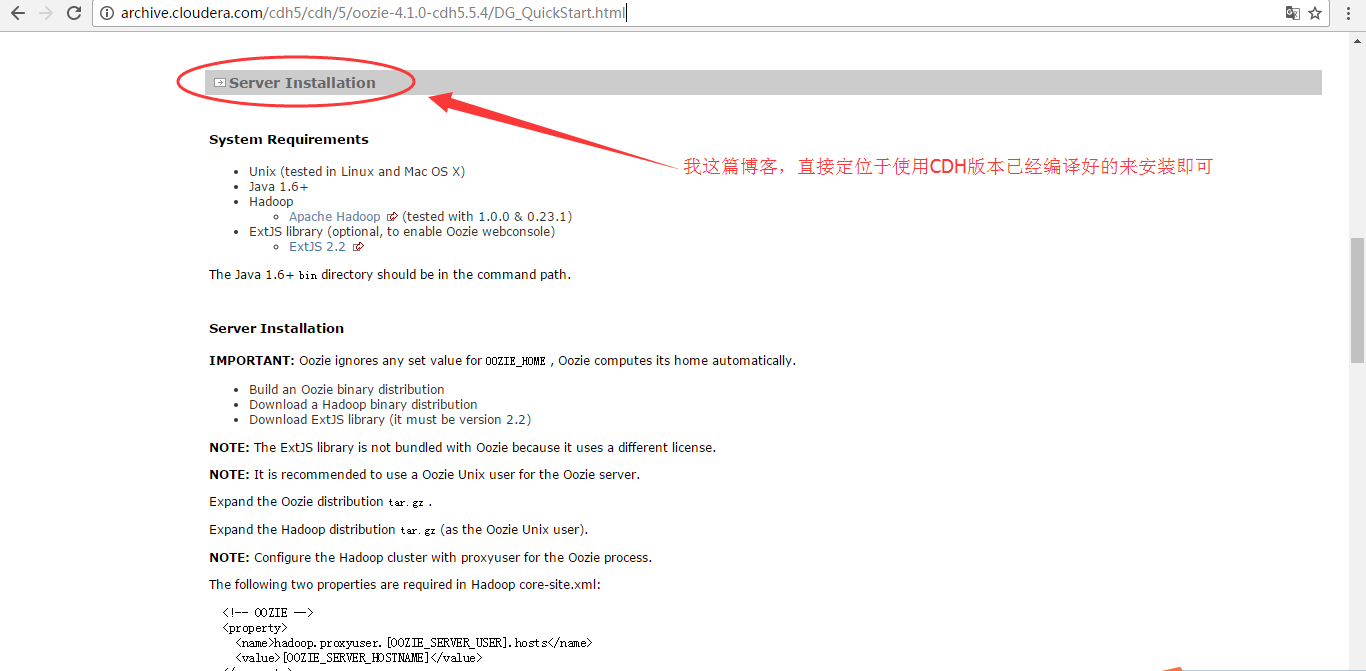

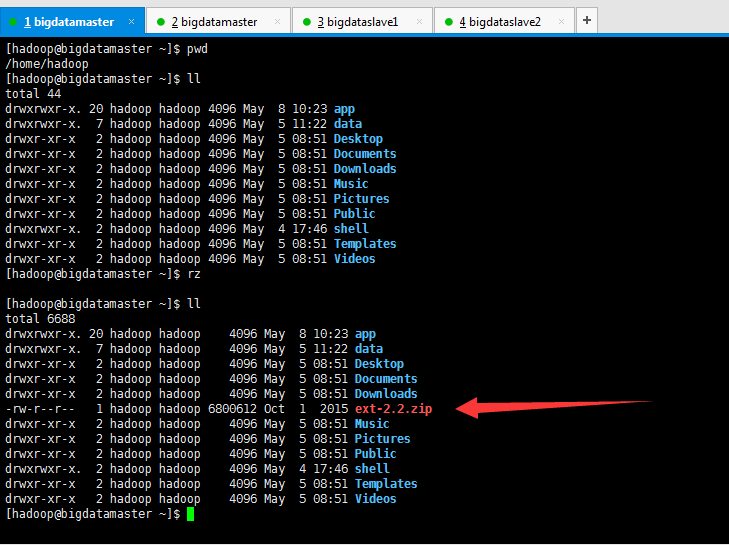

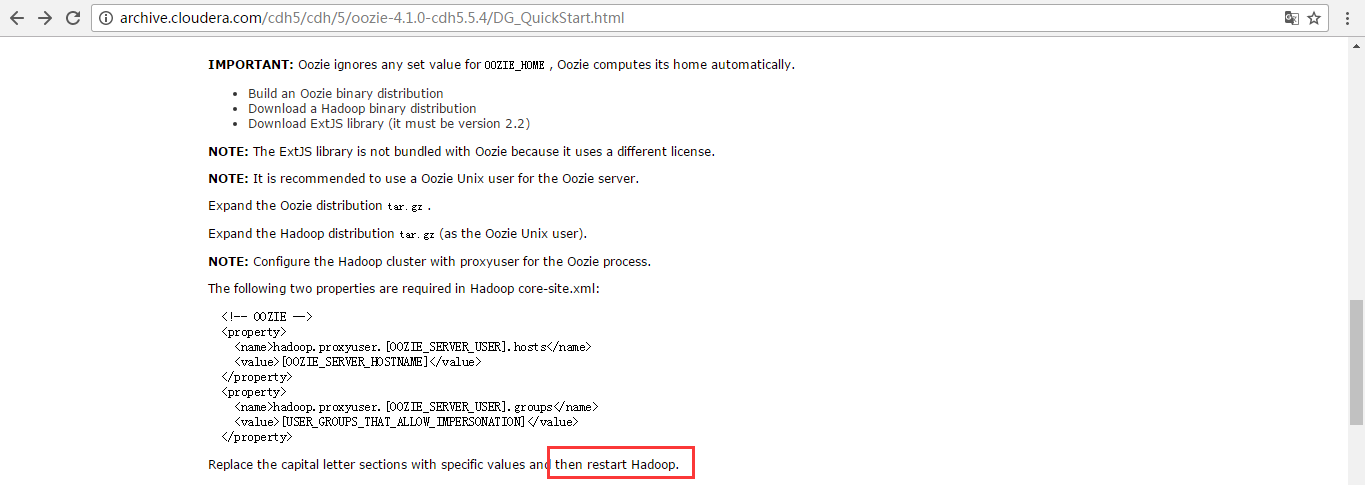

<!-- OOZIE -->

<property>

<name>hadoop.proxyuser.[OOZIE_SERVER_USER].hosts</name>

<value>[OOZIE_SERVER_HOSTNAME]</value>

</property>

<property>

<name>hadoop.proxyuser.[OOZIE_SERVER_USER].groups</name>

<value>[USER_GROUPS_THAT_ALLOW_IMPERSONATION]</value>

</property>

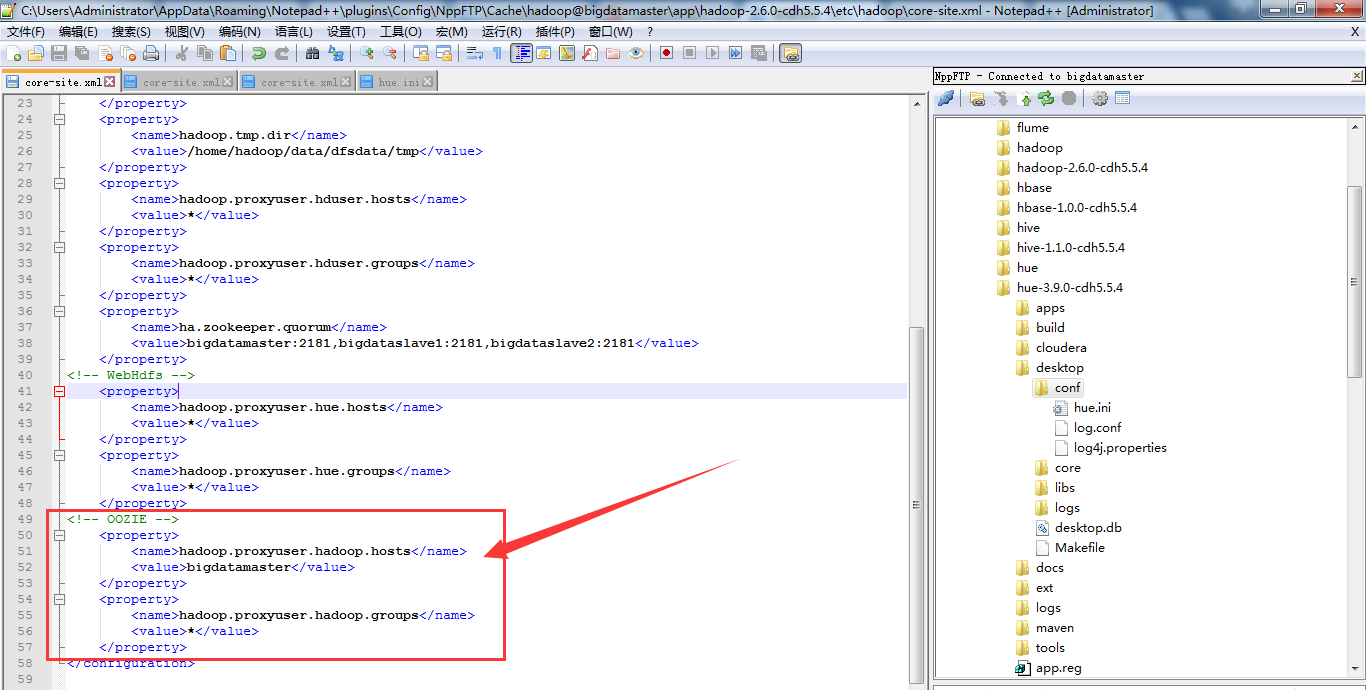

Mine looks like this:

<property>

<name>hadoop.proxyuser.hadoop.hosts</name>

<value>bigdatamaster</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.groups</name>

<value>*</value>

</property>

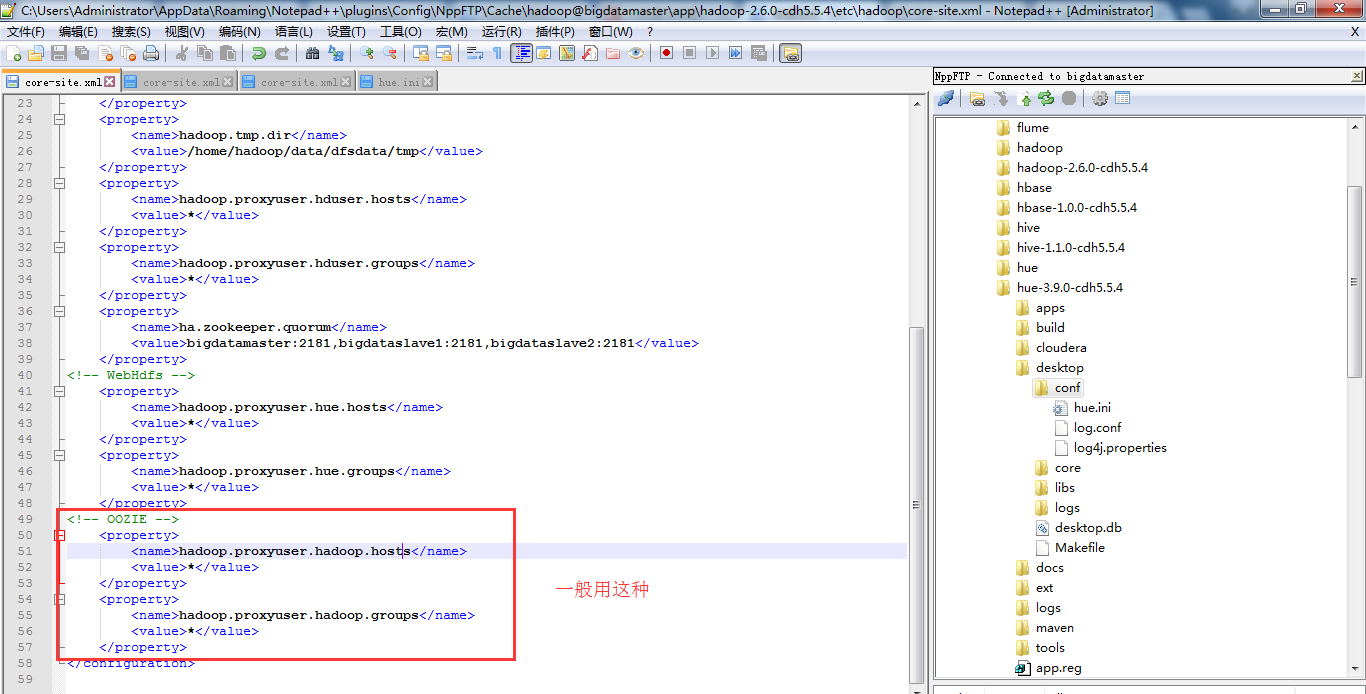

Or (the form most commonly used):

<property>

<name>hadoop.proxyuser.hadoop.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.groups</name>

<value>*</value>

</property>

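This only has to be done for the machine where Oozie is installed; in my case Oozie is installed only on bigdatamaster.

Note: finish the configuration first and then restart Hadoop. Otherwise the change does not take effect.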
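Before restarting Hadoop, a quick check that both properties really ended up in the file can save a restart cycle. The sketch below assumes the properties live in core-site.xml (which is where Hadoop reads proxyuser settings) and that the file is under $HADOOP_HOME/etc/hadoop; the path and the helper name has_proxyuser are my assumptions.

```shell
# Sketch: succeed only if both proxyuser properties for the given user appear in the file.
has_proxyuser() {
  file="$1"; user="$2"
  grep -q "<name>hadoop\.proxyuser\.${user}\.hosts</name>" "$file" &&
  grep -q "<name>hadoop\.proxyuser\.${user}\.groups</name>" "$file"
}

# Usage (ASSUMPTION: standard Hadoop 2 config location):
#   has_proxyuser "$HADOOP_HOME/etc/hadoop/core-site.xml" hadoop && echo "proxyuser configured"
```

This only checks that the property names are present. It does not validate the values, and Hadoop still has to be restarted afterwards.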

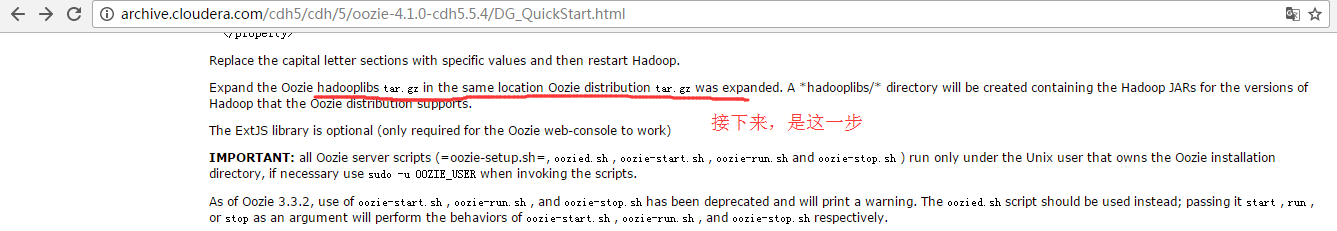

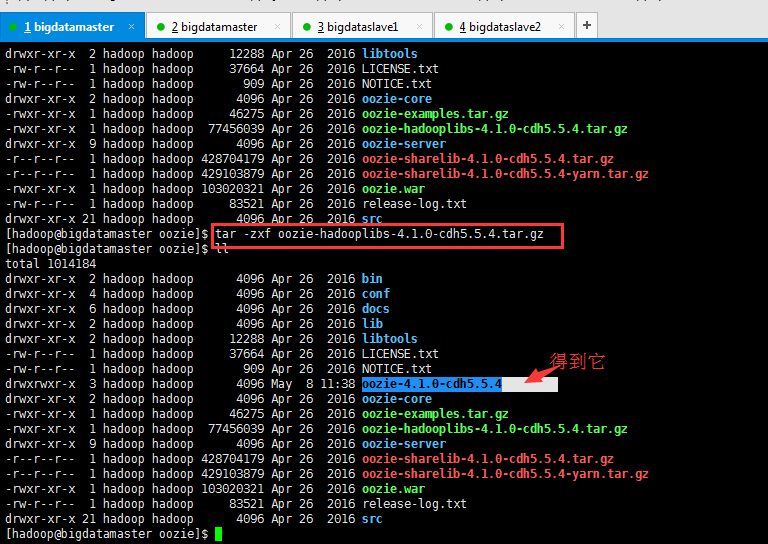

Next, extract the hadooplibs tar.gz.

Expand the Oozie hadooplibs tar.gz in the same location Oozie distribution tar.gz was expanded

In my case that is oozie-hadooplibs-4.1.0-cdh5.5.4.tar.gz.

[hadoop@bigdatamaster oozie]$ pwd /home/hadoop/app/oozie [hadoop@bigdatamaster oozie]$ ll total 1014180 drwxr-xr-x 2 hadoop hadoop 4096 Apr 26 2016 bin drwxr-xr-x 4 hadoop hadoop 4096 Apr 26 2016 conf drwxr-xr-x 6 hadoop hadoop 4096 Apr 26 2016 docs drwxr-xr-x 2 hadoop hadoop 4096 Apr 26 2016 lib drwxr-xr-x 2 hadoop hadoop 12288 Apr 26 2016 libtools -rw-r--r-- 1 hadoop hadoop 37664 Apr 26 2016 LICENSE.txt -rw-r--r-- 1 hadoop hadoop 909 Apr 26 2016 NOTICE.txt drwxr-xr-x 2 hadoop hadoop 4096 Apr 26 2016 oozie-core -rwxr-xr-x 1 hadoop hadoop 46275 Apr 26 2016 oozie-examples.tar.gz -rwxr-xr-x 1 hadoop hadoop 77456039 Apr 26 2016 oozie-hadooplibs-4.1.0-cdh5.5.4.tar.gz drwxr-xr-x 9 hadoop hadoop 4096 Apr 26 2016 oozie-server -r--r--r-- 1 hadoop hadoop 428704179 Apr 26 2016 oozie-sharelib-4.1.0-cdh5.5.4.tar.gz -r--r--r-- 1 hadoop hadoop 429103879 Apr 26 2016 oozie-sharelib-4.1.0-cdh5.5.4-yarn.tar.gz -rwxr-xr-x 1 hadoop hadoop 103020321 Apr 26 2016 oozie.war -rw-r--r-- 1 hadoop hadoop 83521 Apr 26 2016 release-log.txt drwxr-xr-x 21 hadoop hadoop 4096 Apr 26 2016 src [hadoop@bigdatamaster oozie]$ tar -zxf oozie-hadooplibs-4.1.0-cdh5.5.4.tar.gz [hadoop@bigdatamaster oozie]$ ll total 1014184 drwxr-xr-x 2 hadoop hadoop 4096 Apr 26 2016 bin drwxr-xr-x 4 hadoop hadoop 4096 Apr 26 2016 conf drwxr-xr-x 6 hadoop hadoop 4096 Apr 26 2016 docs drwxr-xr-x 2 hadoop hadoop 4096 Apr 26 2016 lib drwxr-xr-x 2 hadoop hadoop 12288 Apr 26 2016 libtools -rw-r--r-- 1 hadoop hadoop 37664 Apr 26 2016 LICENSE.txt -rw-r--r-- 1 hadoop hadoop 909 Apr 26 2016 NOTICE.txt drwxrwxr-x 3 hadoop hadoop 4096 May 8 11:38 oozie-4.1.0-cdh5.5.4 drwxr-xr-x 2 hadoop hadoop 4096 Apr 26 2016 oozie-core -rwxr-xr-x 1 hadoop hadoop 46275 Apr 26 2016 oozie-examples.tar.gz -rwxr-xr-x 1 hadoop hadoop 77456039 Apr 26 2016 oozie-hadooplibs-4.1.0-cdh5.5.4.tar.gz drwxr-xr-x 9 hadoop hadoop 4096 Apr 26 2016 oozie-server -r--r--r-- 1 hadoop hadoop 428704179 Apr 26 2016 oozie-sharelib-4.1.0-cdh5.5.4.tar.gz -r--r--r-- 1 hadoop 
hadoop 429103879 Apr 26 2016 oozie-sharelib-4.1.0-cdh5.5.4-yarn.tar.gz -rwxr-xr-x 1 hadoop hadoop 103020321 Apr 26 2016 oozie.war -rw-r--r-- 1 hadoop hadoop 83521 Apr 26 2016 release-log.txt drwxr-xr-x 21 hadoop hadoop 4096 Apr 26 2016 src [hadoop@bigdatamaster oozie]$

A *hadooplibs/* directory will be created containing the Hadoop JARs for the versions of Hadoop that the Oozie distribution supports.

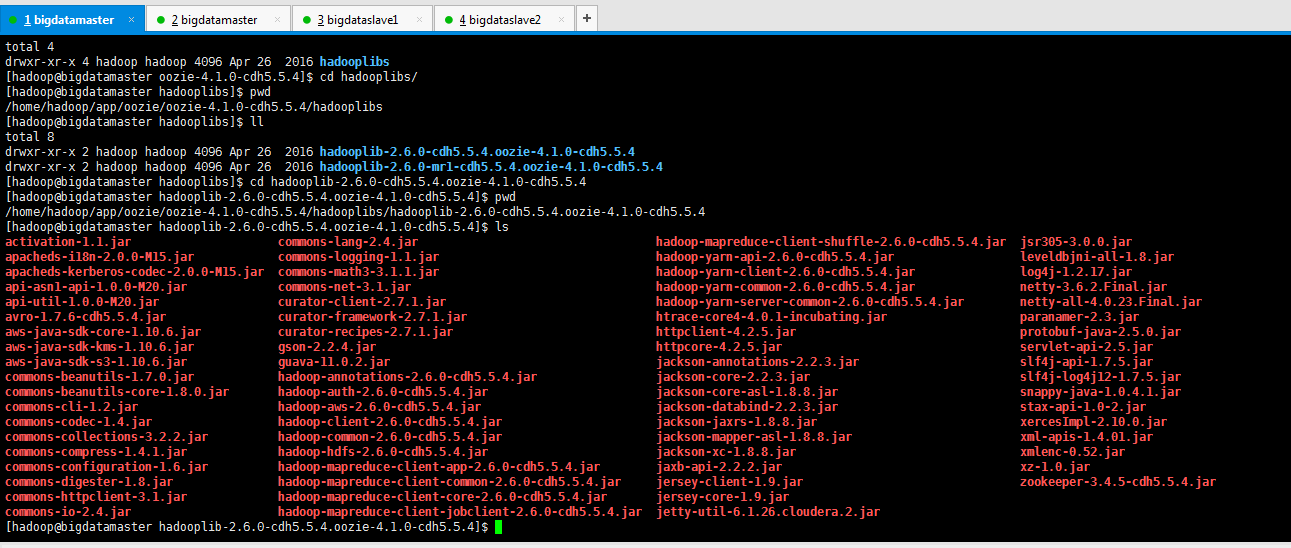

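There are two variants because Oozie supports both MR1 and MR2 (YARN). We run on YARN. As shown below, the directory was generated successfully: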

[hadoop@bigdatamaster oozie-4.1.0-cdh5.5.4]$ pwd /home/hadoop/app/oozie/oozie-4.1.0-cdh5.5.4 [hadoop@bigdatamaster oozie-4.1.0-cdh5.5.4]$ ll total 4 drwxr-xr-x 4 hadoop hadoop 4096 Apr 26 2016 hadooplibs [hadoop@bigdatamaster oozie-4.1.0-cdh5.5.4]$ cd hadooplibs/ [hadoop@bigdatamaster hadooplibs]$ pwd /home/hadoop/app/oozie/oozie-4.1.0-cdh5.5.4/hadooplibs [hadoop@bigdatamaster hadooplibs]$ ll total 8 drwxr-xr-x 2 hadoop hadoop 4096 Apr 26 2016 hadooplib-2.6.0-cdh5.5.4.oozie-4.1.0-cdh5.5.4 drwxr-xr-x 2 hadoop hadoop 4096 Apr 26 2016 hadooplib-2.6.0-mr1-cdh5.5.4.oozie-4.1.0-cdh5.5.4 [hadoop@bigdatamaster hadooplibs]$ cd hadooplib-2.6.0-cdh5.5.4.oozie-4.1.0-cdh5.5.4 [hadoop@bigdatamaster hadooplib-2.6.0-cdh5.5.4.oozie-4.1.0-cdh5.5.4]$ pwd /home/hadoop/app/oozie/oozie-4.1.0-cdh5.5.4/hadooplibs/hadooplib-2.6.0-cdh5.5.4.oozie-4.1.0-cdh5.5.4 [hadoop@bigdatamaster hadooplib-2.6.0-cdh5.5.4.oozie-4.1.0-cdh5.5.4]$ ls activation-1.1.jar commons-lang-2.4.jar hadoop-mapreduce-client-shuffle-2.6.0-cdh5.5.4.jar jsr305-3.0.0.jar apacheds-i18n-2.0.0-M15.jar commons-logging-1.1.jar hadoop-yarn-api-2.6.0-cdh5.5.4.jar leveldbjni-all-1.8.jar apacheds-kerberos-codec-2.0.0-M15.jar commons-math3-3.1.1.jar hadoop-yarn-client-2.6.0-cdh5.5.4.jar log4j-1.2.17.jar api-asn1-api-1.0.0-M20.jar commons-net-3.1.jar hadoop-yarn-common-2.6.0-cdh5.5.4.jar netty-3.6.2.Final.jar api-util-1.0.0-M20.jar curator-client-2.7.1.jar hadoop-yarn-server-common-2.6.0-cdh5.5.4.jar netty-all-4.0.23.Final.jar avro-1.7.6-cdh5.5.4.jar curator-framework-2.7.1.jar htrace-core4-4.0.1-incubating.jar paranamer-2.3.jar aws-java-sdk-core-1.10.6.jar curator-recipes-2.7.1.jar httpclient-4.2.5.jar protobuf-java-2.5.0.jar aws-java-sdk-kms-1.10.6.jar gson-2.2.4.jar httpcore-4.2.5.jar servlet-api-2.5.jar aws-java-sdk-s3-1.10.6.jar guava-11.0.2.jar jackson-annotations-2.2.3.jar slf4j-api-1.7.5.jar commons-beanutils-1.7.0.jar hadoop-annotations-2.6.0-cdh5.5.4.jar jackson-core-2.2.3.jar slf4j-log4j12-1.7.5.jar 
commons-beanutils-core-1.8.0.jar hadoop-auth-2.6.0-cdh5.5.4.jar jackson-core-asl-1.8.8.jar snappy-java-1.0.4.1.jar commons-cli-1.2.jar hadoop-aws-2.6.0-cdh5.5.4.jar jackson-databind-2.2.3.jar stax-api-1.0-2.jar commons-codec-1.4.jar hadoop-client-2.6.0-cdh5.5.4.jar jackson-jaxrs-1.8.8.jar xercesImpl-2.10.0.jar commons-collections-3.2.2.jar hadoop-common-2.6.0-cdh5.5.4.jar jackson-mapper-asl-1.8.8.jar xml-apis-1.4.01.jar commons-compress-1.4.1.jar hadoop-hdfs-2.6.0-cdh5.5.4.jar jackson-xc-1.8.8.jar xmlenc-0.52.jar commons-configuration-1.6.jar hadoop-mapreduce-client-app-2.6.0-cdh5.5.4.jar jaxb-api-2.2.2.jar xz-1.0.jar commons-digester-1.8.jar hadoop-mapreduce-client-common-2.6.0-cdh5.5.4.jar jersey-client-1.9.jar zookeeper-3.4.5-cdh5.5.4.jar commons-httpclient-3.1.jar hadoop-mapreduce-client-core-2.6.0-cdh5.5.4.jar jersey-core-1.9.jar commons-io-2.4.jar hadoop-mapreduce-client-jobclient-2.6.0-cdh5.5.4.jar jetty-util-6.1.26.cloudera.2.jar [hadoop@bigdatamaster hadooplib-2.6.0-cdh5.5.4.oozie-4.1.0-cdh5.5.4]$

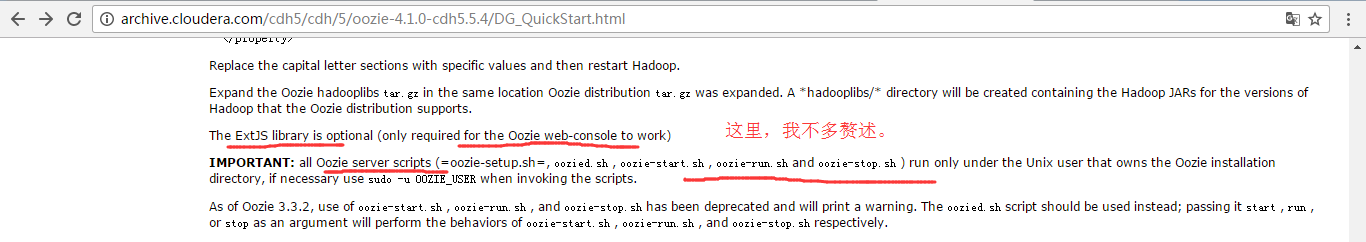

The ExtJS library is optional (only required for the Oozie web-console to work).

IMPORTANT: all Oozie server scripts (oozie-setup.sh, oozied.sh, oozie-start.sh, oozie-run.sh and oozie-stop.sh) run only under the Unix user that owns the Oozie installation directory, if necessary use sudo -u OOZIE_USER when invoking the scripts.

As of Oozie 3.3.2, use of oozie-start.sh, oozie-run.sh, and oozie-stop.sh has been deprecated and will print a warning. The oozied.sh script should be used instead; passing it start, run, or stop as an argument will perform the behaviors of oozie-start.sh, oozie-run.sh, and oozie-stop.sh respectively.
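The mapping described in the quoted docs can be written down as a small lookup. The helper name oozied_equivalent is hypothetical; it only prints the replacement command and does not start or stop anything.

```shell
# Sketch: translate a deprecated Oozie script name into its oozied.sh form,
# following the mapping quoted from the official docs above.
oozied_equivalent() {
  case "$1" in
    oozie-start.sh) echo "oozied.sh start" ;;
    oozie-run.sh)   echo "oozied.sh run" ;;
    oozie-stop.sh)  echo "oozied.sh stop" ;;
    *) echo "unknown script: $1" >&2; return 1 ;;
  esac
}

# Usage:
#   oozied_equivalent oozie-start.sh
# If the current user does not own the installation, the docs suggest
# prefixing the real command with: sudo -u OOZIE_USER
```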

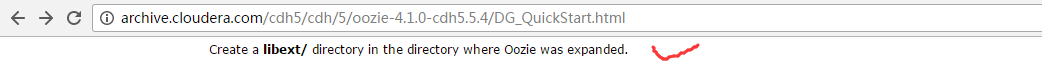

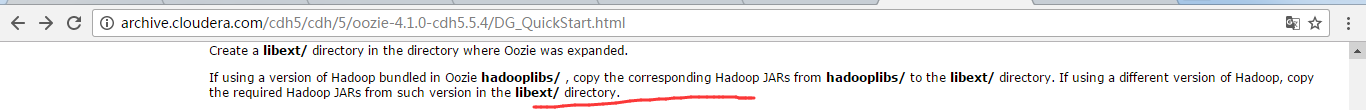

Create a libext/ directory in the directory where Oozie was expanded.

As you can see, the libext directory does not exist after installation.

So create it with mkdir libext.

[hadoop@bigdatamaster oozie]$ mkdir libext [hadoop@bigdatamaster oozie]$ ll total 1014188 drwxr-xr-x 2 hadoop hadoop 4096 Apr 26 2016 bin drwxr-xr-x 4 hadoop hadoop 4096 Apr 26 2016 conf drwxr-xr-x 6 hadoop hadoop 4096 Apr 26 2016 docs drwxr-xr-x 2 hadoop hadoop 4096 Apr 26 2016 lib drwxrwxr-x 2 hadoop hadoop 4096 May 8 12:51 libext drwxr-xr-x 2 hadoop hadoop 12288 Apr 26 2016 libtools -rw-r--r-- 1 hadoop hadoop 37664 Apr 26 2016 LICENSE.txt -rw-r--r-- 1 hadoop hadoop 909 Apr 26 2016 NOTICE.txt drwxrwxr-x 3 hadoop hadoop 4096 May 8 11:38 oozie-4.1.0-cdh5.5.4 drwxr-xr-x 2 hadoop hadoop 4096 Apr 26 2016 oozie-core -rwxr-xr-x 1 hadoop hadoop 46275 Apr 26 2016 oozie-examples.tar.gz -rwxr-xr-x 1 hadoop hadoop 77456039 Apr 26 2016 oozie-hadooplibs-4.1.0-cdh5.5.4.tar.gz drwxr-xr-x 9 hadoop hadoop 4096 Apr 26 2016 oozie-server -r--r--r-- 1 hadoop hadoop 428704179 Apr 26 2016 oozie-sharelib-4.1.0-cdh5.5.4.tar.gz -r--r--r-- 1 hadoop hadoop 429103879 Apr 26 2016 oozie-sharelib-4.1.0-cdh5.5.4-yarn.tar.gz -rwxr-xr-x 1 hadoop hadoop 103020321 Apr 26 2016 oozie.war -rw-r--r-- 1 hadoop hadoop 83521 Apr 26 2016 release-log.txt drwxr-xr-x 21 hadoop hadoop 4096 Apr 26 2016 src [hadoop@bigdatamaster oozie]$

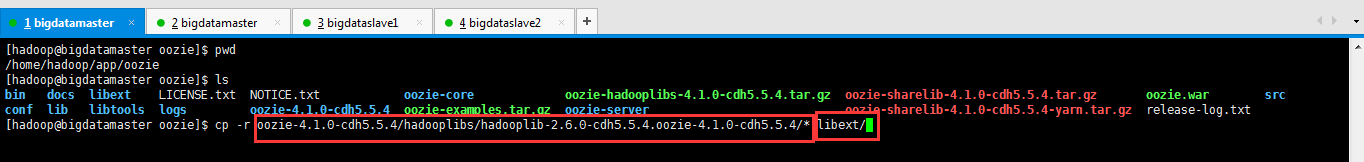

Once the directory exists, copy the Hadoop JARs under hadooplibs (the YARN variant in my case) into the new libext directory.

If using a version of Hadoop bundled in Oozie hadooplibs/ , copy the corresponding Hadoop JARs from hadooplibs/ to the libext/ directory. If using a different version of Hadoop, copy the required Hadoop JARs from such version in the libext/ directory.

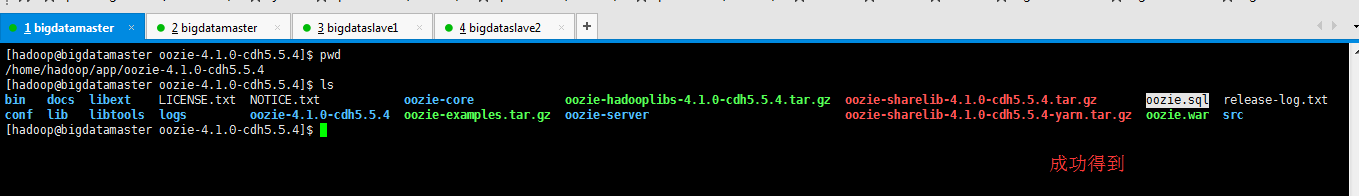

[hadoop@bigdatamaster oozie]$ pwd
/home/hadoop/app/oozie
[hadoop@bigdatamaster oozie]$ ls
bin docs libext LICENSE.txt NOTICE.txt oozie-core oozie-hadooplibs-4.1.0-cdh5.5.4.tar.gz oozie-sharelib-4.1.0-cdh5.5.4.tar.gz oozie.war src
conf lib libtools logs oozie-4.1.0-cdh5.5.4 oozie-examples.tar.gz oozie-server oozie-sharelib-4.1.0-cdh5.5.4-yarn.tar.gz release-log.txt
[hadoop@bigdatamaster oozie]$ cp -r oozie-4.1.0-cdh5.5.4/hadooplibs/hadooplib-2.6.0-cdh5.5.4.oozie-4.1.0-cdh5.5.4/* libext/
[hadoop@bigdatamaster oozie]$

Check that the copy succeeded.
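One way to do the copy and the check in a single step is to count the JARs that arrive in libext. This is only a sketch; copy_hadooplib is a hypothetical helper, and it copies `*.jar` files only rather than everything in the source directory.

```shell
# Sketch: copy the Hadoop JARs of one hadooplib variant into libext/
# and print how many JARs libext/ contains afterwards.
copy_hadooplib() {
  src="$1"; dst="$2"
  mkdir -p "$dst"
  cp "$src"/*.jar "$dst"/
  ls "$dst"/*.jar | wc -l | tr -d ' '
}

# Usage with this post's paths:
#   copy_hadooplib \
#     /home/hadoop/app/oozie/oozie-4.1.0-cdh5.5.4/hadooplibs/hadooplib-2.6.0-cdh5.5.4.oozie-4.1.0-cdh5.5.4 \
#     /home/hadoop/app/oozie/libext
```

The printed number can be compared with the number of JARs in the source directory.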

Then copy the ext-2.2.zip that we temporarily uploaded to /home/hadoop into the $OOZIE_HOME/libext directory.

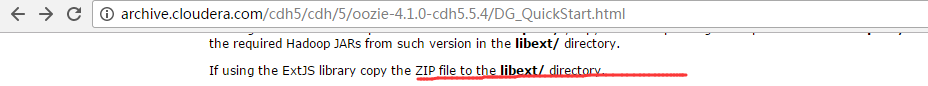

If using the ExtJS library copy the ZIP file to the libext/ directory.

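The official docs are being modest with that "if". In practice you need it if you want the web console, because the Oozie front end is built on ExtJS.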

[hadoop@bigdatamaster libext]$ pwd /home/hadoop/app/oozie/libext [hadoop@bigdatamaster libext]$ cp /home/hadoop/ext-2.2.zip /home/hadoop/app/oozie/libext/ [hadoop@bigdatamaster libext]$ ls activation-1.1.jar commons-lang-2.4.jar hadoop-mapreduce-client-jobclient-2.6.0-cdh5.5.4.jar jetty-util-6.1.26.cloudera.2.jar apacheds-i18n-2.0.0-M15.jar commons-logging-1.1.jar hadoop-mapreduce-client-shuffle-2.6.0-cdh5.5.4.jar jsr305-3.0.0.jar apacheds-kerberos-codec-2.0.0-M15.jar commons-math3-3.1.1.jar hadoop-yarn-api-2.6.0-cdh5.5.4.jar leveldbjni-all-1.8.jar api-asn1-api-1.0.0-M20.jar commons-net-3.1.jar hadoop-yarn-client-2.6.0-cdh5.5.4.jar log4j-1.2.17.jar api-util-1.0.0-M20.jar curator-client-2.7.1.jar hadoop-yarn-common-2.6.0-cdh5.5.4.jar netty-3.6.2.Final.jar avro-1.7.6-cdh5.5.4.jar curator-framework-2.7.1.jar hadoop-yarn-server-common-2.6.0-cdh5.5.4.jar netty-all-4.0.23.Final.jar aws-java-sdk-core-1.10.6.jar curator-recipes-2.7.1.jar htrace-core4-4.0.1-incubating.jar paranamer-2.3.jar aws-java-sdk-kms-1.10.6.jar ext-2.2.zip httpclient-4.2.5.jar protobuf-java-2.5.0.jar aws-java-sdk-s3-1.10.6.jar gson-2.2.4.jar httpcore-4.2.5.jar servlet-api-2.5.jar commons-beanutils-1.7.0.jar guava-11.0.2.jar jackson-annotations-2.2.3.jar slf4j-api-1.7.5.jar commons-beanutils-core-1.8.0.jar hadoop-annotations-2.6.0-cdh5.5.4.jar jackson-core-2.2.3.jar slf4j-log4j12-1.7.5.jar commons-cli-1.2.jar hadoop-auth-2.6.0-cdh5.5.4.jar jackson-core-asl-1.8.8.jar snappy-java-1.0.4.1.jar commons-codec-1.4.jar hadoop-aws-2.6.0-cdh5.5.4.jar jackson-databind-2.2.3.jar stax-api-1.0-2.jar commons-collections-3.2.2.jar hadoop-client-2.6.0-cdh5.5.4.jar jackson-jaxrs-1.8.8.jar xercesImpl-2.10.0.jar commons-compress-1.4.1.jar hadoop-common-2.6.0-cdh5.5.4.jar jackson-mapper-asl-1.8.8.jar xml-apis-1.4.01.jar commons-configuration-1.6.jar hadoop-hdfs-2.6.0-cdh5.5.4.jar jackson-xc-1.8.8.jar xmlenc-0.52.jar commons-digester-1.8.jar hadoop-mapreduce-client-app-2.6.0-cdh5.5.4.jar jaxb-api-2.2.2.jar xz-1.0.jar 
commons-httpclient-3.1.jar hadoop-mapreduce-client-common-2.6.0-cdh5.5.4.jar jersey-client-1.9.jar zookeeper-3.4.5-cdh5.5.4.jar commons-io-2.4.jar hadoop-mapreduce-client-core-2.6.0-cdh5.5.4.jar jersey-core-1.9.jar [hadoop@bigdatamaster libext]$

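OK. With that done, the ext-2.2.zip under /home/hadoop is no longer needed and can be deleted.

Now that all the JARs are prepared, the steps below show how the contents of libext get packaged in.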

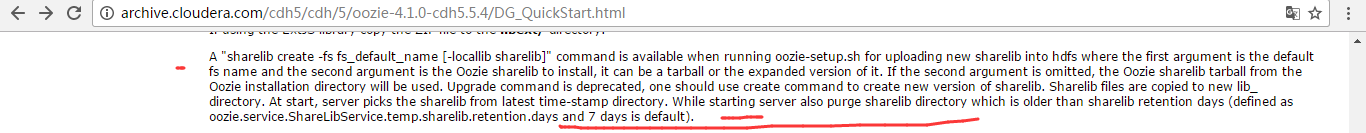

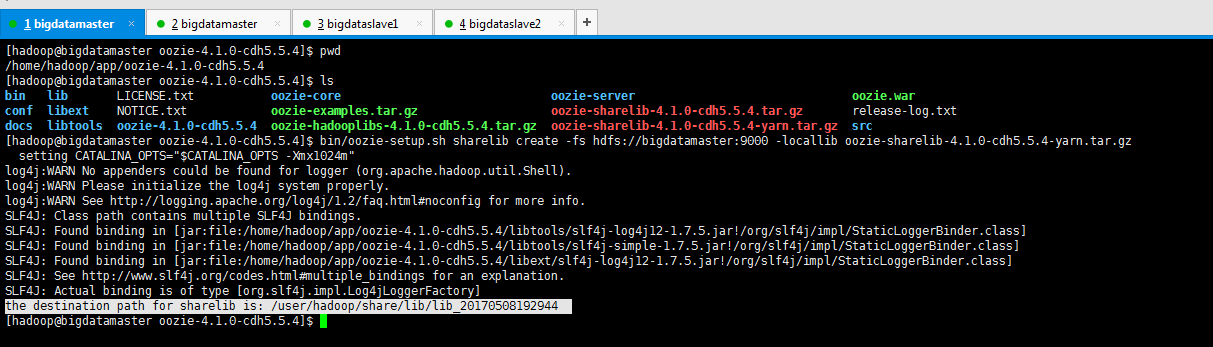

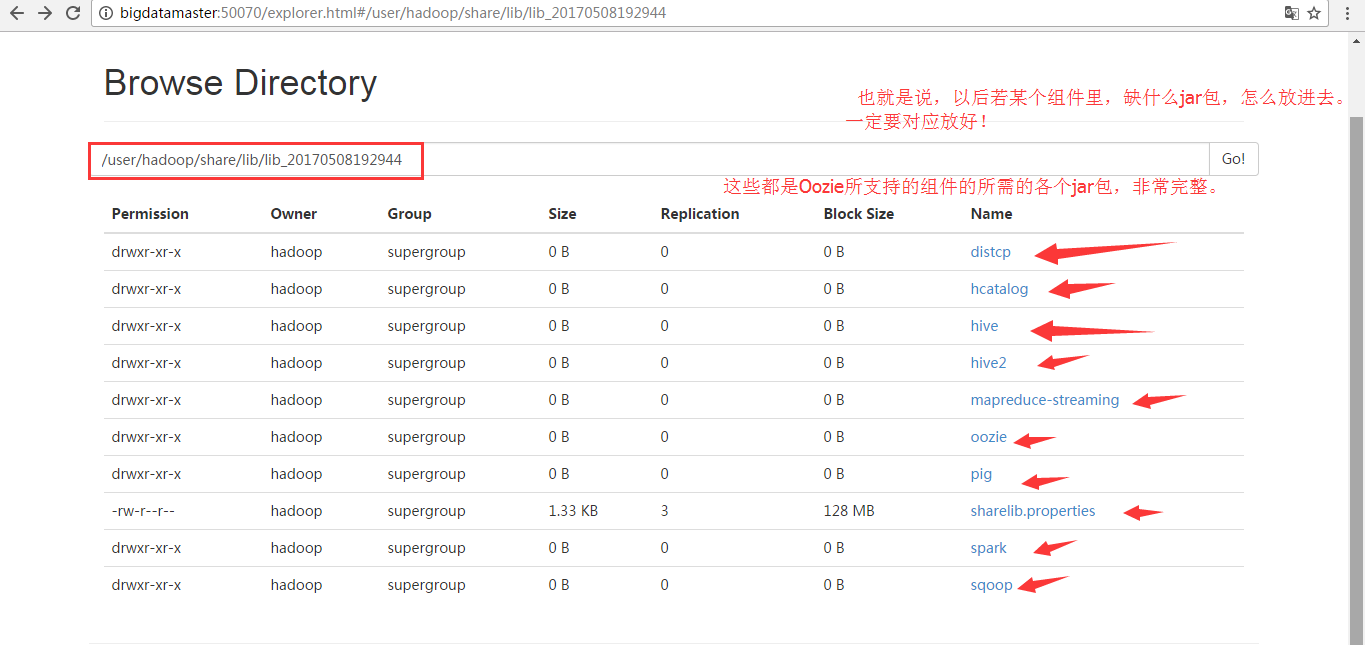

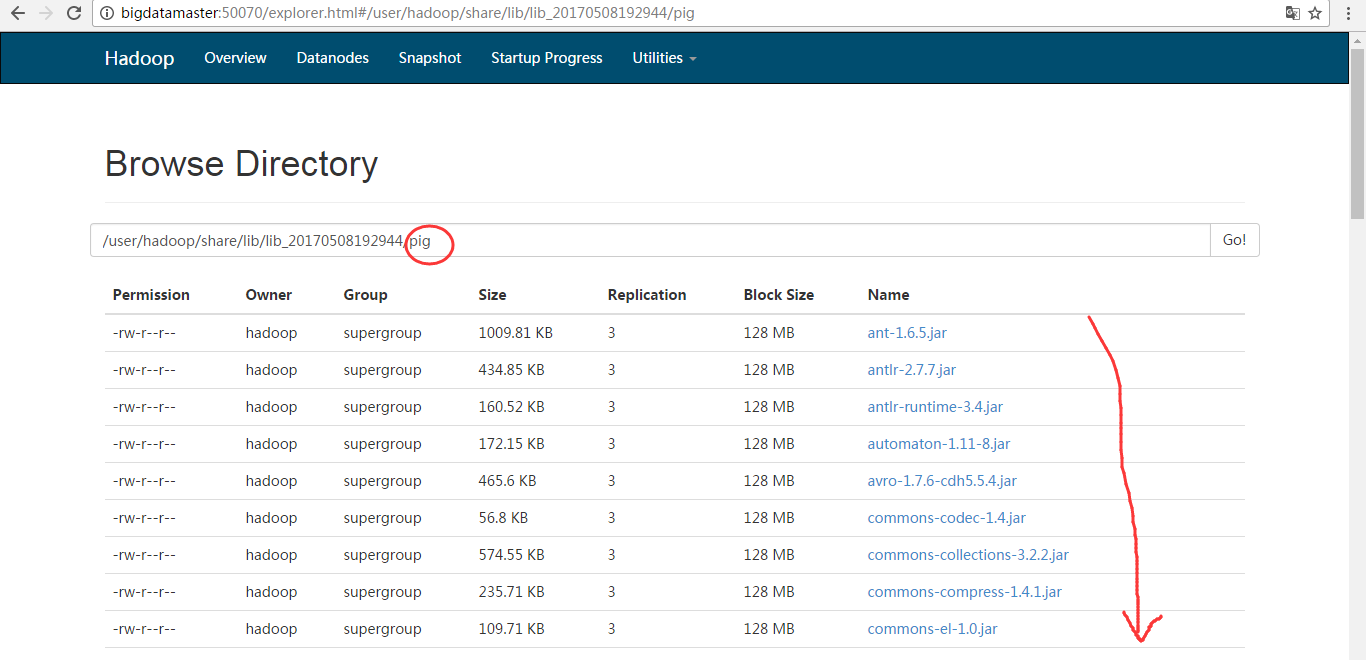

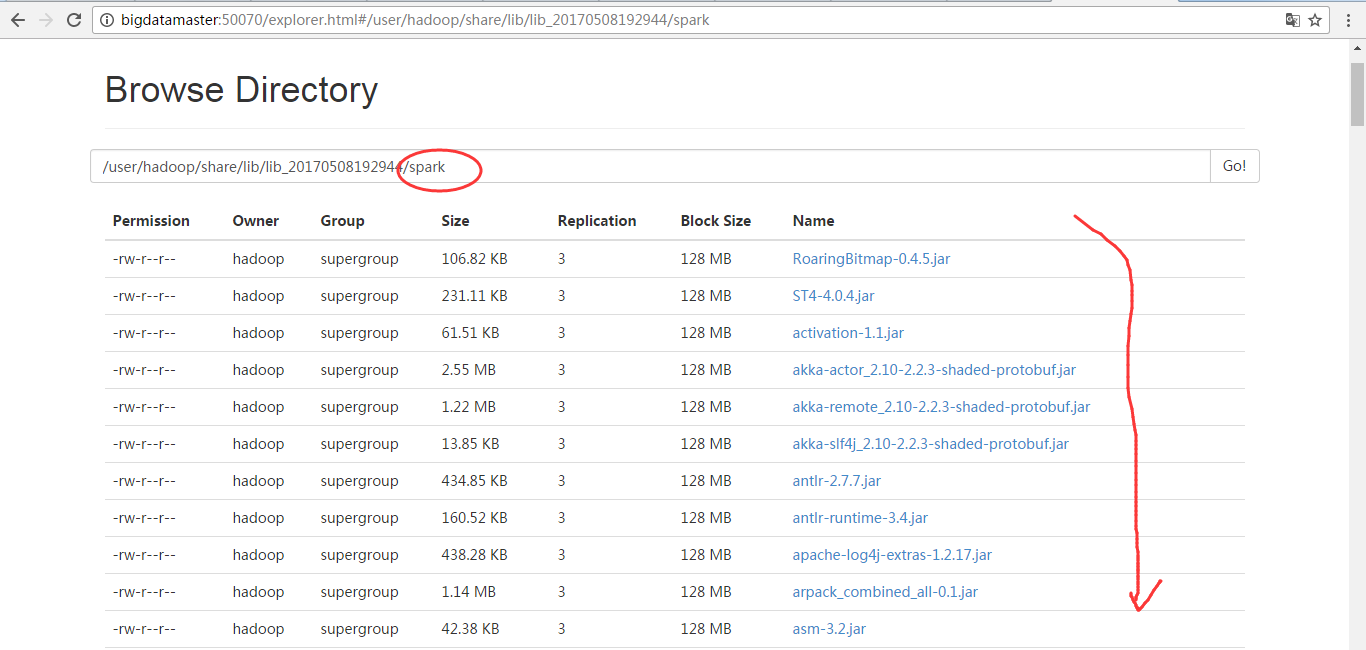

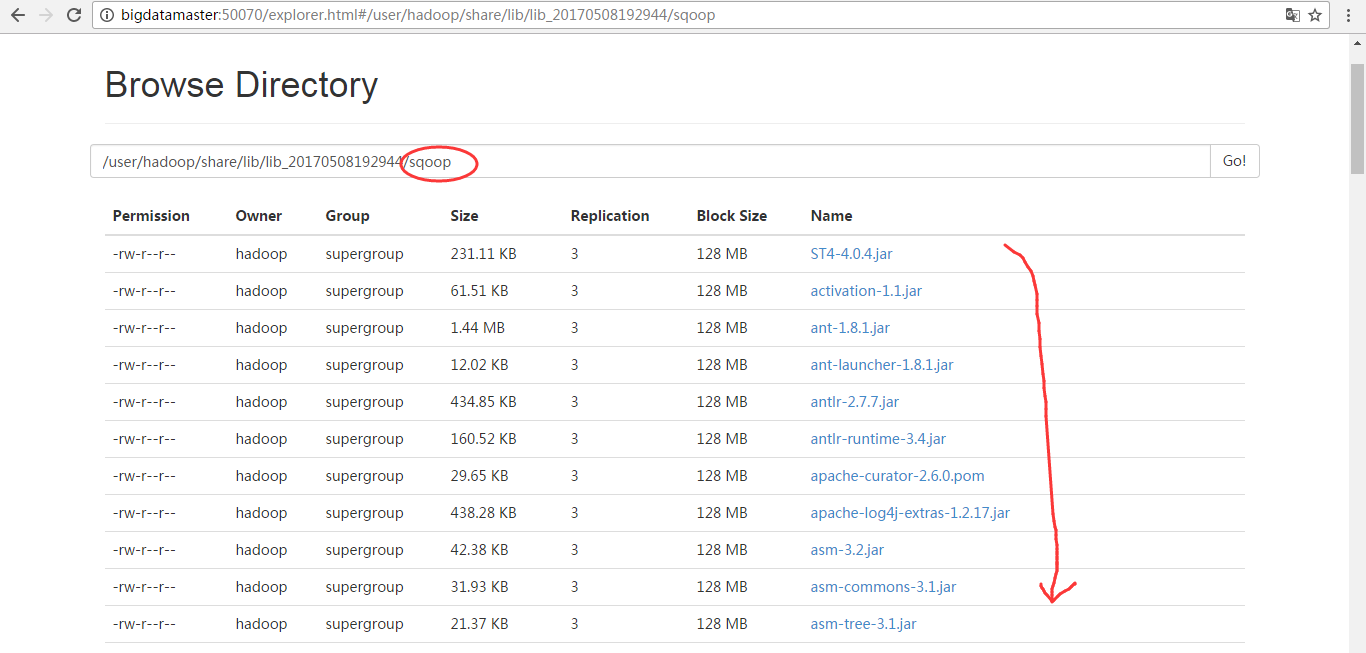

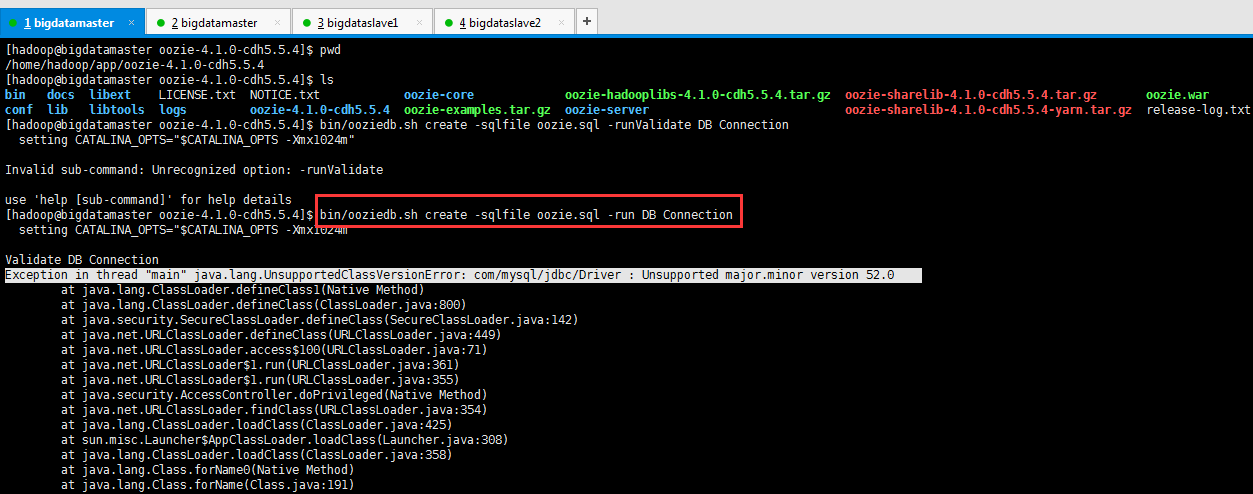

A "sharelib create -fs fs_default_name [-locallib sharelib]" command is available when running oozie-setup.sh for uploading new sharelib into hdfs where the first argument is the default fs name and the second argument is the Oozie sharelib to install, it can be a tarball or the expanded version of it. If the second argument is omitted, the Oozie sharelib tarball from the Oozie installation directory will be used. Upgrade command is deprecated, one should use create command to create new version of sharelib. Sharelib files are copied to new lib_ directory. At start, server picks the sharelib from latest time-stamp directory. While starting server also purge sharelib directory which is older than sharelib retention days (defined as oozie.service.ShareLibService.temp.sharelib.retention.days and 7 days is default).
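As an illustration of the command form quoted above, the sketch below only assembles the command line so it can be reviewed before anything is uploaded to HDFS. The helper name sharelib_create_cmd is hypothetical, and the file system URI must come from your own cluster.

```shell
# Sketch: build (but do not run) the sharelib create command described in the docs.
# The third argument is optional; when omitted, -locallib is left out and, per the
# docs, the sharelib tarball from the Oozie installation directory is used.
sharelib_create_cmd() {
  oozie_home="$1"; fs_uri="$2"; locallib="${3:-}"
  cmd="$oozie_home/bin/oozie-setup.sh sharelib create -fs $fs_uri"
  if [ -n "$locallib" ]; then
    cmd="$cmd -locallib $locallib"
  fi
  echo "$cmd"
}

# Usage (the URI can be read from the cluster config with: hdfs getconf -confKey fs.defaultFS):
#   sharelib_create_cmd /home/hadoop/app/oozie "$(hdfs getconf -confKey fs.defaultFS)" \
#     oozie-sharelib-4.1.0-cdh5.5.4-yarn.tar.gz
```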

Moving on.

"prepare-war [-d directory]" command is for creating war files for oozie with an optional alternative directory other than libext. db create|upgrade|postupgrade -run [-sqlfile ] command is for create, upgrade or postupgrade oozie db with an optional sql file Run the oozie-setup.sh script to configure Oozie with all the components added to the libext/ directory.

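The official docs are clear on this: if we had not copied all the packages into $OOZIE_HOME/libext as above, we could still reach the same result by passing the directory as an argument when running the command below.

Since I have already set everything up, no argument is needed and I can run it directly: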

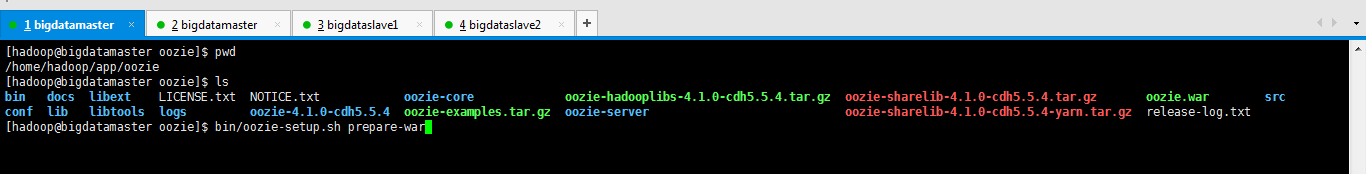

bin/oozie-setup.sh prepare-war
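For the other case, where the extra JARs live somewhere other than libext/, the -d option from the quoted docs applies. A minimal wrapper sketch follows; run_prepare_war is a hypothetical name, and it assumes it is invoked as the user that owns the Oozie directory.

```shell
# Sketch: run prepare-war, optionally pointing it at a directory other than libext/.
run_prepare_war() {
  oozie_home="$1"; ext_dir="${2:-}"
  setup="$oozie_home/bin/oozie-setup.sh"
  # Fail early with a clear message if the setup script is missing.
  if [ ! -x "$setup" ]; then
    echo "not found or not executable: $setup" >&2
    return 1
  fi
  if [ -n "$ext_dir" ]; then
    "$setup" prepare-war -d "$ext_dir"
  else
    "$setup" prepare-war
  fi
}

# Usage:
#   run_prepare_war /home/hadoop/app/oozie                  # uses libext/
#   run_prepare_war /home/hadoop/app/oozie /some/other/dir  # placeholder directory
```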

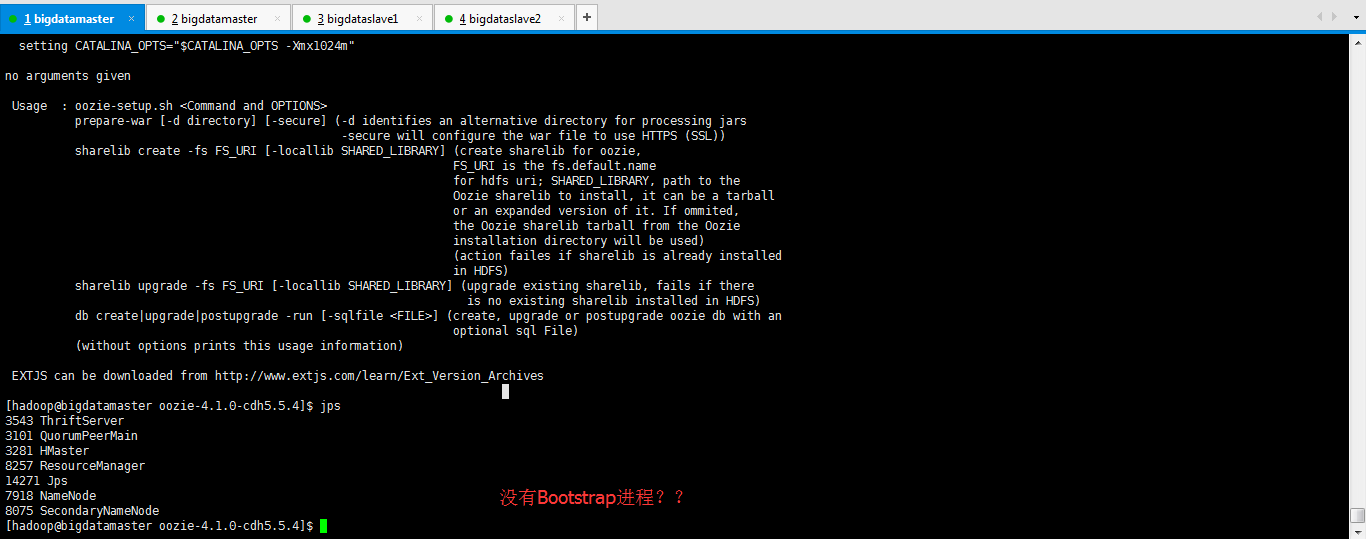

[hadoop@bigdatamaster oozie]$ pwd
/home/hadoop/app/oozie
[hadoop@bigdatamaster oozie]$ ls
bin docs libext LICENSE.txt NOTICE.txt oozie-core oozie-hadooplibs-4.1.0-cdh5.5.4.tar.gz oozie-sharelib-4.1.0-cdh5.5.4.tar.gz oozie.war src
conf lib libtools logs oozie-4.1.0-cdh5.5.4 oozie-examples.tar.gz oozie-server oozie-sharelib-4.1.0-cdh5.5.4-yarn.tar.gz release-log.txt
[hadoop@bigdatamaster oozie]$ bin/oozie-setup.sh prepare-war

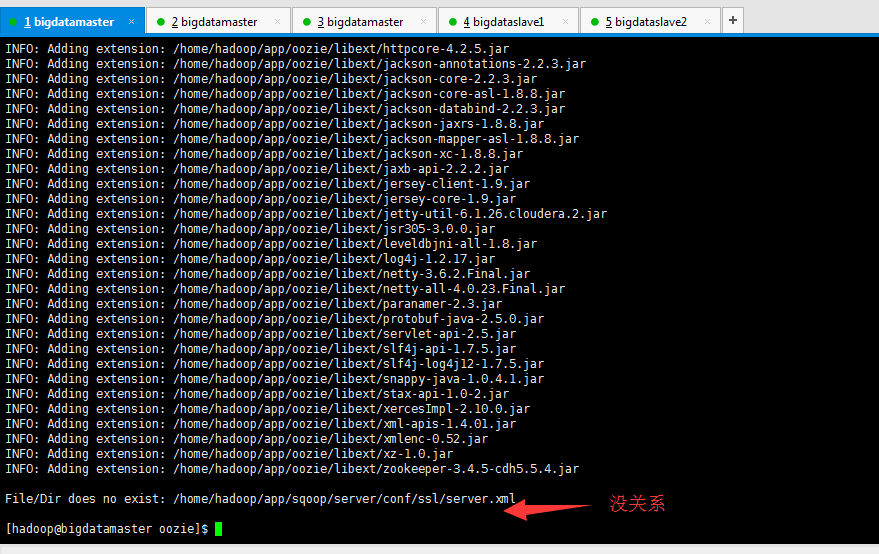

setting CATALINA_OPTS="$CATALINA_OPTS -Xmx1024m"

INFO: Adding extension: /home/hadoop/app/oozie/libext/activation-1.1.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/apacheds-i18n-2.0.0-M15.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/apacheds-kerberos-codec-2.0.0-M15.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/api-asn1-api-1.0.0-M20.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/api-util-1.0.0-M20.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/avro-1.7.6-cdh5.5.4.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/aws-java-sdk-core-1.10.6.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/aws-java-sdk-kms-1.10.6.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/aws-java-sdk-s3-1.10.6.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/commons-beanutils-1.7.0.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/commons-beanutils-core-1.8.0.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/commons-cli-1.2.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/commons-codec-1.4.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/commons-collections-3.2.2.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/commons-compress-1.4.1.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/commons-configuration-1.6.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/commons-digester-1.8.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/commons-httpclient-3.1.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/commons-io-2.4.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/commons-lang-2.4.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/commons-logging-1.1.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/commons-math3-3.1.1.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/commons-net-3.1.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/curator-client-2.7.1.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/curator-framework-2.7.1.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/curator-recipes-2.7.1.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/gson-2.2.4.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/guava-11.0.2.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/hadoop-annotations-2.6.0-cdh5.5.4.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/hadoop-auth-2.6.0-cdh5.5.4.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/hadoop-aws-2.6.0-cdh5.5.4.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/hadoop-client-2.6.0-cdh5.5.4.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/hadoop-common-2.6.0-cdh5.5.4.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/hadoop-hdfs-2.6.0-cdh5.5.4.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/hadoop-mapreduce-client-app-2.6.0-cdh5.5.4.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/hadoop-mapreduce-client-common-2.6.0-cdh5.5.4.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/hadoop-mapreduce-client-core-2.6.0-cdh5.5.4.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/hadoop-mapreduce-client-jobclient-2.6.0-cdh5.5.4.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/hadoop-mapreduce-client-shuffle-2.6.0-cdh5.5.4.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/hadoop-yarn-api-2.6.0-cdh5.5.4.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/hadoop-yarn-client-2.6.0-cdh5.5.4.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/hadoop-yarn-common-2.6.0-cdh5.5.4.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/hadoop-yarn-server-common-2.6.0-cdh5.5.4.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/htrace-core4-4.0.1-incubating.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/httpclient-4.2.5.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/httpcore-4.2.5.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/jackson-annotations-2.2.3.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/jackson-core-2.2.3.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/jackson-core-asl-1.8.8.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/jackson-databind-2.2.3.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/jackson-jaxrs-1.8.8.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/jackson-mapper-asl-1.8.8.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/jackson-xc-1.8.8.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/jaxb-api-2.2.2.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/jersey-client-1.9.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/jersey-core-1.9.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/jetty-util-6.1.26.cloudera.2.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/jsr305-3.0.0.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/leveldbjni-all-1.8.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/log4j-1.2.17.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/netty-3.6.2.Final.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/netty-all-4.0.23.Final.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/paranamer-2.3.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/protobuf-java-2.5.0.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/servlet-api-2.5.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/slf4j-api-1.7.5.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/slf4j-log4j12-1.7.5.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/snappy-java-1.0.4.1.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/stax-api-1.0-2.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/xercesImpl-2.10.0.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/xml-apis-1.4.01.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/xmlenc-0.52.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/xz-1.0.jar

INFO: Adding extension: /home/hadoop/app/oozie/libext/zookeeper-3.4.5-cdh5.5.4.jar

File/Dir does no exist: /home/hadoop/app/sqoop/server/conf/ssl/server.xml

[hadoop@bigdatamaster oozie]$

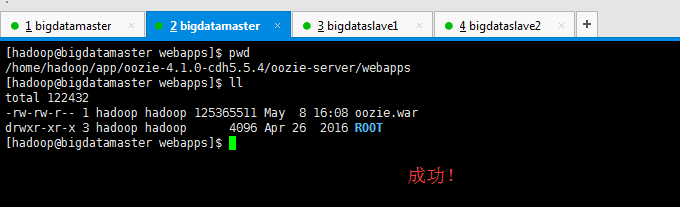

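I retried this step several times, but oozie.war was never generated under oozie-server.

Solution

See: Installing the CDH version of Oozie, running bin/oozie-setup.sh prepare-war, and oozie.war is not generated?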

[hadoop@bigdatamaster webapps]$ pwd

/home/hadoop/app/oozie-4.1.0-cdh5.5.4/oozie-server/webapps

[hadoop@bigdatamaster webapps]$ ll

total 122432

-rw-rw-r-- 1 hadoop hadoop 125365511 May 8 16:08 oozie.war

drwxr-xr-x 3 hadoop hadoop 4096 Apr 26 2016 ROOT

[hadoop@bigdatamaster webapps]$

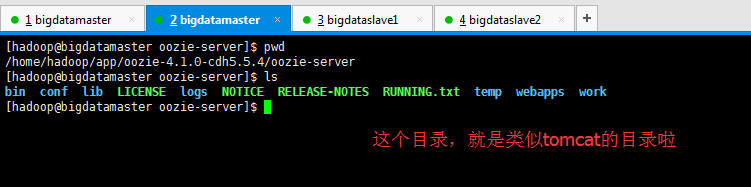

[hadoop@bigdatamaster oozie-server]$ pwd

/home/hadoop/app/oozie-4.1.0-cdh5.5.4/oozie-server

[hadoop@bigdatamaster oozie-server]$ ls

bin conf lib LICENSE logs NOTICE RELEASE-NOTES RUNNING.txt temp webapps work

[hadoop@bigdatamaster oozie-server]$

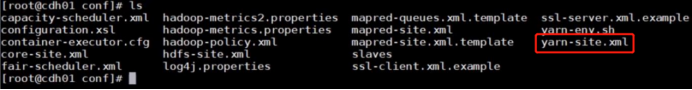

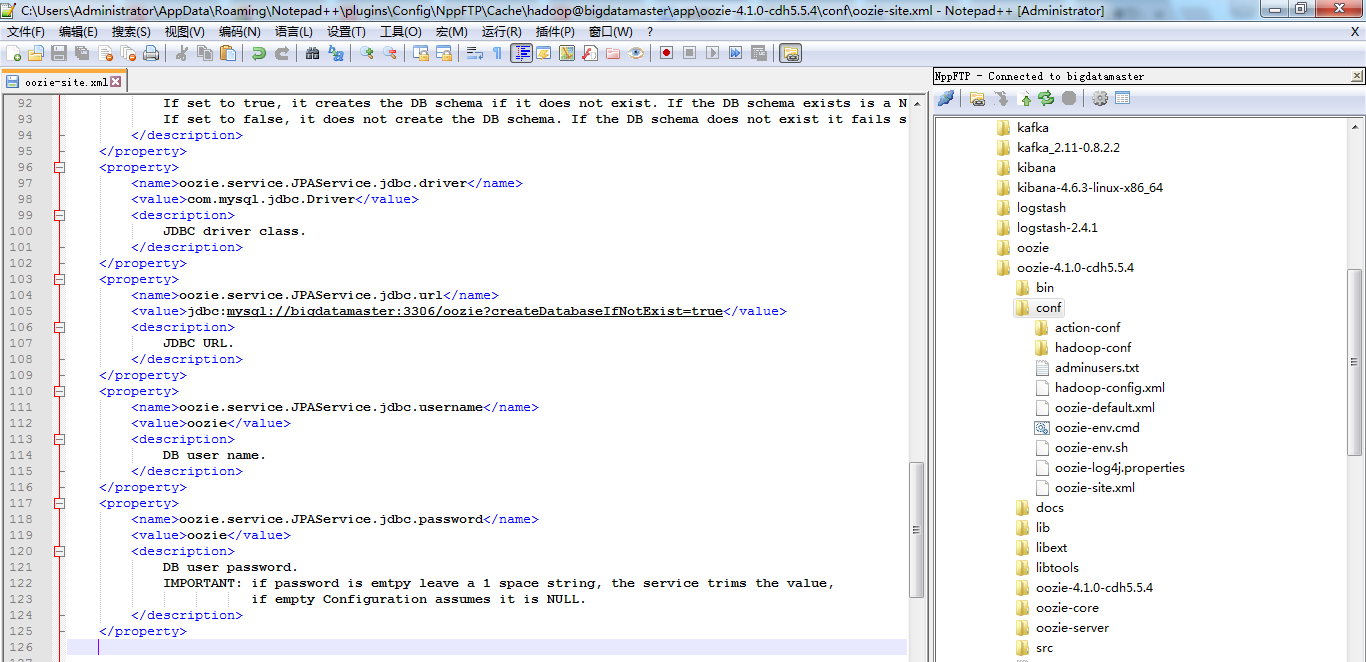
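The listings above boil down to checking that oozie.war landed under oozie-server/webapps. Here is a minimal shell sketch of that check. The helper name `check_war` and the fallback value for `OOZIE_HOME` are my own assumptions for illustration, not part of Oozie. Because the failed run complained about a path under sqoop/server, the sketch also prints any `CATALINA*` variables in the environment, on the unconfirmed assumption that a variable left over from another Tomcat-based install could be steering the setup script to the wrong directory.

```shell
#!/bin/sh
# Hypothetical helper (not part of Oozie): report whether a war file exists.
check_war() {
  war_path="$1"
  if [ -f "$war_path" ]; then
    echo "found: $war_path"
  else
    echo "missing: $war_path"
    return 1
  fi
}

# Assumed install location, based on the paths shown in this post.
OOZIE_HOME="${OOZIE_HOME:-/home/hadoop/app/oozie}"

# Check the location where the war shows up in the listing above.
check_war "$OOZIE_HOME/oozie-server/webapps/oozie.war" \
  || echo "prepare-war may not have completed"

# Show Tomcat-related variables that might point at another install.
env | grep '^CATALINA' || echo "no CATALINA* variables set"
```

If a `CATALINA*` variable does show up pointing outside the Oozie directory, that would be the first thing I would look at, though this post does not confirm it as the cause.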

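Next, configure $OOZIE_HOME/conf/oozie-site.xml and $OOZIE_HOME/conf/oozie-default.xml (the latter normally does not need to be touched; it contains the complete set of properties).

Note that the oozie-site.xml that ships by default looks like this: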

<?xml version="1.0"?>
<!--
  Licensed to the Apache Software Foundation (ASF) under one
  or more contributor license agreements. See the NOTICE file
  distributed with this work for additional information
  regarding copyright ownership. The ASF licenses this file
  to you under the Apache License, Version 2.0 (the
  "License"); you may not use this file except in compliance
  with the License. You may obtain a copy of the License at
  http://www.apache.org/licenses/LICENSE-2.0
  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License.
-->
<configuration>
  <!--
    Refer to the oozie-default.xml file for the complete list of
    Oozie configuration properties and their default values.
  -->
  <!-- Proxyuser Configuration -->
  <!--
  <property>
    <name>oozie.service.ProxyUserService.proxyuser.#USER#.hosts</name>
    <value>*</value>
    <description>
      List of hosts the '#USER#' user is allowed to perform 'doAs'
      operations.
      The '#USER#' must be replaced with the username o the user who is
      allowed to perform 'doAs' operations.
      The value can be the '*' wildcard or a list of hostnames.
      For multiple users copy this property and replace the user name
      in the property name.
    </description>
  </property>
  <property>
    <name>oozie.service.ProxyUserService.proxyuser.#USER#.groups</name>
    <value>*</value>
    <description>
      List of groups the '#USER#' user is allowed to impersonate users
      from to perform 'doAs' operations.
      The '#USER#' must be replaced with the username o the user who is
      allowed to perform 'doAs' operations.
      The value can be the '*' wildcard or a list of groups.
      For multiple users copy this property and replace the user name
      in the property name.
    </description>
  </property>
  -->
  <!-- Default proxyuser configuration for Hue -->
  <property>
    <name>oozie.service.ProxyUserService.proxyuser.hue.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>oozie.service.ProxyUserService.proxyuser.hue.groups</name>
    <value>*</value>
  </property>
</configuration>

Then add the database and Hadoop properties shown below; I found them online and pasted them in.

The final oozie-site.xml looks like this:

<?xml version="1.0"?>
<!--
  Licensed to the Apache Software Foundation (ASF) under one
  or more contributor license agreements. See the NOTICE file
  distributed with this work for additional information
  regarding copyright ownership. The ASF licenses this file
  to you under the Apache License, Version 2.0 (the
  "License"); you may not use this file except in compliance
  with the License. You may obtain a copy of the License at
  http://www.apache.org/licenses/LICENSE-2.0
  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License.
-->
<configuration>
  <!--
    Refer to the oozie-default.xml file for the complete list of
    Oozie configuration properties and their default values.
  -->
  <!-- Proxyuser Configuration -->
  <!--
  <property>
    <name>oozie.service.ProxyUserService.proxyuser.#USER#.hosts</name>
    <value>*</value>
    <description>
      List of hosts the '#USER#' user is allowed to perform 'doAs'
      operations.
      The '#USER#' must be replaced with the username o the user who is
      allowed to perform 'doAs' operations.
      The value can be the '*' wildcard or a list of hostnames.
      For multiple users copy this property and replace the user name
      in the property name.
    </description>
  </property>
  <property>
    <name>oozie.service.ProxyUserService.proxyuser.#USER#.groups</name>
    <value>*</value>
    <description>
      List of groups the '#USER#' user is allowed to impersonate users
      from to perform 'doAs' operations.
      The '#USER#' must be replaced with the username o the user who is
      allowed to perform 'doAs' operations.
      The value can be the '*' wildcard or a list of groups.
      For multiple users copy this property and replace the user name
      in the property name.
    </description>
  </property>
  -->
  <!-- Default proxyuser configuration for Hue -->
  <property>
    <name>oozie.service.ProxyUserService.proxyuser.hue.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>oozie.service.ProxyUserService.proxyuser.hue.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>oozie.db.schema.name</name>
    <value>oozie</value>
    <description>
      Oozie DataBase Name
    </description>
  </property>
  <property>
    <name>oozie.service.JPAService.create.db.schema</name>
    <value>false</value>
    <description>
      Creates Oozie DB.
      If set to true, it creates the DB schema if it does not exist. If the DB schema exists is a NOP.
      If set to false, it does not create the DB schema. If the DB schema does not exist it fails start up.
    </description>
  </property>
  <property>
    <name>oozie.service.JPAService.jdbc.driver</name>
    <value>com.mysql.jdbc.Driver</value>
    <description>
      JDBC driver class.
    </description>
  </property>
  <property>
    <name>oozie.service.JPAService.jdbc.url</name>
    <value>jdbc:mysql://bigdatamaster:3306/oozie?createDatabaseIfNotExist=true</value>
    <description>
      JDBC URL.
    </description>
  </property>
  <property>
    <name>oozie.service.JPAService.jdbc.username</name>
    <value>oozie</value>
    <description>
      DB user name.
    </description>
  </property>
  <property>
    <name>oozie.service.JPAService.jdbc.password</name>
    <value>oozie</value>
    <description>
      DB user password.
      IMPORTANT: if password is emtpy leave a 1 space string, the service trims the value,
      if empty Configuration assumes it is NULL.
    </description>
  </property>
  <property>
    <name>oozie.service.HadoopAccessorService.hadoop.configurations</name>
    <value>*=/home/hadoop/app/hadoop-2.6.0-cdh5.5.4/etc/hadoop</value>
    <description>
      Comma separated AUTHORITY=HADOOP_CONF_DIR, where AUTHORITY is the HOST:PORT of
      the Hadoop service (JobTracker, HDFS). The wildcard '*' configuration is
      used when there is no exact match for an authority. The HADOOP_CONF_DIR contains
      the relevant Hadoop *-site.xml files. If the path is relative is looked within
      the Oozie configuration directory; though the path can be absolute (i.e. to point
      to Hadoop client conf/ directories in the local filesystem.
    </description>
  </property>
</configuration>
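With oozie.service.JPAService.create.db.schema set to false, Oozie will not create its tables at startup, so the schema has to be in place before the server is started. Below is a rough sketch of how that might be prepared, using the database name, user and password from the config above. It assumes a MySQL 5.x server (the `GRANT ... IDENTIFIED BY` form is not accepted by MySQL 8) and a root login on that server; the helper name `oozie_db_sql` is hypothetical. The commands that actually touch the database are left commented out so the block only prints the SQL for review.

```shell
#!/bin/sh
# Hypothetical helper: print the SQL for the database and account named in
# oozie-site.xml. Arguments: database name, user name, password.
oozie_db_sql() {
  db="$1"; user="$2"; pass="$3"
  echo "CREATE DATABASE IF NOT EXISTS ${db};"
  # MySQL 5.x syntax; MySQL 8 needs CREATE USER followed by GRANT.
  echo "GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'%' IDENTIFIED BY '${pass}';"
  echo "FLUSH PRIVILEGES;"
}

# Print the statements for review.
oozie_db_sql oozie oozie oozie

# To apply them (assumes a MySQL root login is available):
#   oozie_db_sql oozie oozie oozie | mysql -uroot -p
# Then create the Oozie tables with the tool shipped in the tarball:
#   cd "$OOZIE_HOME" && bin/ooziedb.sh create -sqlfile oozie.sql -run
```

The com.mysql.jdbc.Driver class named in the config also has to be on Oozie's classpath, which in practice means the MySQL connector jar must be available to the server.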

Leave oozie-default.xml at its defaults, since newer versions already ship with most settings configured.
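To see at a glance which properties a given oozie-site.xml sets on top of those defaults, something like the following sketch can help. The function name `list_property_names` is made up for this example, and the fallback path is an assumption based on the layout in this post. It uses plain text matching rather than real XML parsing, so it would also pick up names inside commented-out blocks; the `#USER#` template entries are filtered out explicitly.

```shell
#!/bin/sh
# Hypothetical helper: print the <name> values found in a Hadoop-style
# configuration file, skipping the #USER# template entries.
list_property_names() {
  grep -o '<name>[^<]*</name>' "$1" \
    | sed -e 's#<name>##' -e 's#</name>##' \
    | grep -v '#USER#'
}

# Example call; the path is an assumption based on the layout in this post.
conf_file="${OOZIE_HOME:-/home/hadoop/app/oozie}/conf/oozie-site.xml"
if [ -f "$conf_file" ]; then
  list_property_names "$conf_file"
else
  echo "not found: $conf_file"
fi
```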