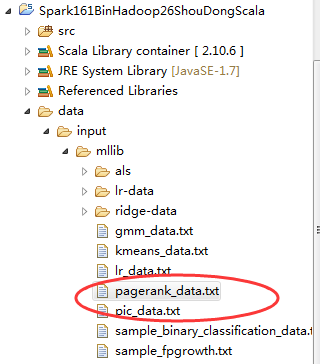

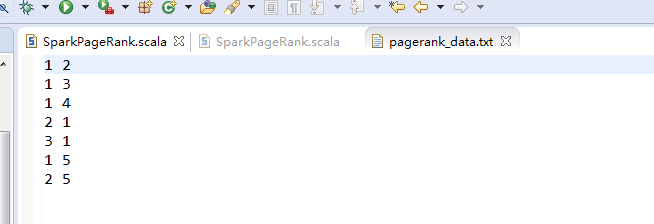

SparkPageRank.scala under the Basic package, in spark-1.6.1-bin-hadoop2.6

    /*
     * Licensed to the Apache Software Foundation (ASF) under one or more
     * contributor license agreements. See the NOTICE file distributed with
     * this work for additional information regarding copyright ownership.
     * The ASF licenses this file to You under the Apache License, Version 2.0
     * (the "License"); you may not use this file except in compliance with
     * the License. You may obtain a copy of the License at
     *
     * http://www.apache.org/licenses/LICENSE-2.0
     *
     * Unless required by applicable law or agreed to in writing, software
     * distributed under the License is distributed on an "AS IS" BASIS,
     * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
     * See the License for the specific language governing permissions and
     * limitations under the License.
     */

    // scalastyle:off println
    package org.apache.spark.examples

    import org.apache.spark.SparkContext._
    import org.apache.spark.{SparkConf, SparkContext}

    /**
     * Computes the PageRank of URLs from an input file. Input file should
     * be in format of:
     * URL neighbor URL
     * URL neighbor URL
     * URL neighbor URL
     * ...
     * where URL and their neighbors are separated by space(s).
     *
     * This is an example implementation for learning how to use Spark. For more conventional use,
     * please refer to org.apache.spark.graphx.lib.PageRank
     */
    object SparkPageRank {

      /*
       * Prints the warning message.
       */
      def showWarning() {
        System.err.println(
          """WARN: This is a naive implementation of PageRank and is given as an example!
            |Please use the PageRank implementation found in org.apache.spark.graphx.lib.PageRank
            |for more conventional use.
          """.stripMargin)
      }

      /*
       * Main function.
       */
      def main(args: Array[String]) {
        if (args.length < 1) {
          System.err.println("Usage: SparkPageRank <file> <iter>")
          System.exit(1)
        }

        showWarning()

        val sparkConf = new SparkConf().setAppName("PageRank").setMaster("local")
        val iters = if (args.length > 1) args(1).toInt else 10
        val ctx = new SparkContext(sparkConf)
        // Note: the input path is hardcoded here; args(0) is not read.
        val lines = ctx.textFile("data/input/mllib/pagerank_data.txt", 1)

        // Parse each line into a (srcUrl, neighborUrl) pair and de-duplicate the pairs,
        // then build the adjacency list from the edge data, e.g. (1,(2,3,4,5)) (2,(1,5)) ...
        val links = lines.map{ s =>
          val parts = s.split("\\s+")
          (parts(0), parts(1))
        }.distinct().groupByKey().cache()

        // Initialize ranks: every URL starts with a rank of 1.0
        var ranks = links.mapValues(v => 1.0)

        /*
         * Iterate iters times. In each iteration:
         * join links (urlKey, neighborUrls) with ranks (urlKey, rank);
         * for every neighborUrl emit (neighborUrl, rank / size) as its new rank contribution;
         * then sum the contributions with reduceByKey and adjust the score with 0.15 + 0.85 * _
         */
        for (i <- 1 to iters) {
          val contribs = links.join(ranks).values.flatMap{ case (urls, rank) =>
            val size = urls.size
            urls.map(url => (url, rank / size))
          }
          ranks = contribs.reduceByKey(_ + _).mapValues(0.15 + 0.85 * _)
        }

        // Print the ranks
        val output = ranks.collect()
        output.foreach(tup => println(tup._1 + " has rank: " + tup._2 + "."))

        ctx.stop()
      }
    }
    // scalastyle:on println

This version has not produced any results yet.
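To make the update rule in the Spark 1.6.1 listing above easier to follow without a cluster, here is a minimal sketch of the same steps on plain Scala collections. The object name `PageRankSketch`, its helpers `buildLinks`, `step` and `pageRank`, and the small sample graph are illustrative assumptions and not part of the Spark example. Unlike the listing, the sketch skips any line that does not contain exactly two tokens.

```scala
object PageRankSketch {
  type Links = Map[String, Seq[String]]

  // Build the adjacency list from "src dst" lines, dropping duplicate edges
  // (plays the role of map + distinct + groupByKey in the Spark listing).
  def buildLinks(lines: Seq[String]): Links =
    lines
      .map(_.trim.split("\\s+"))
      .collect { case Array(src, dst) => (src, dst) }
      .distinct
      .groupBy(_._1)
      .map { case (src, edges) => src -> edges.map(_._2) }

  // One iteration: every URL that has both links and a rank sends
  // rank / outDegree to each neighbor; contributions are summed per
  // target URL and then adjusted with 0.15 + 0.85 * sum.
  def step(links: Links, ranks: Map[String, Double]): Map[String, Double] = {
    val contribs = for {
      (url, neighbors) <- links.toSeq
      rank <- ranks.get(url).toList
      neighbor <- neighbors
    } yield (neighbor, rank / neighbors.size)

    contribs
      .groupBy(_._1)
      .map { case (url, cs) => url -> (0.15 + 0.85 * cs.map(_._2).sum) }
  }

  // Start every source URL at 1.0 and apply `step` iters times.
  def pageRank(lines: Seq[String], iters: Int): Map[String, Double] = {
    val links = buildLinks(lines)
    val initial = links.map { case (url, _) => url -> 1.0 }
    (1 to iters).foldLeft(initial)((ranks, _) => step(links, ranks))
  }

  def main(args: Array[String]): Unit = {
    // Hypothetical edge list; the duplicate "1 2" is removed by buildLinks.
    val edges = Seq("1 2", "1 3", "2 1", "3 1", "1 2")
    pageRank(edges, 10).toSeq.sortBy(_._1).foreach { case (url, rank) =>
      println(s"$url has rank: $rank.")
    }
  }
}
```

With all ranks at 1.0, one call to `step` on this graph gives URL 1 a rank of 0.15 + 0.85 × 2 = 1.85, and URLs 2 and 3 a rank of 0.15 + 0.85 × 0.5 = 0.575 each, up to floating-point rounding.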

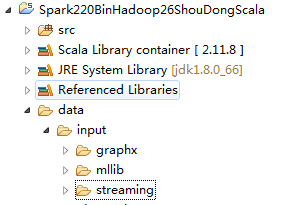

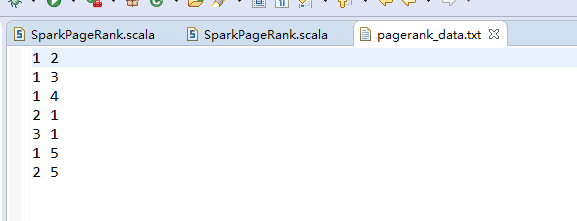

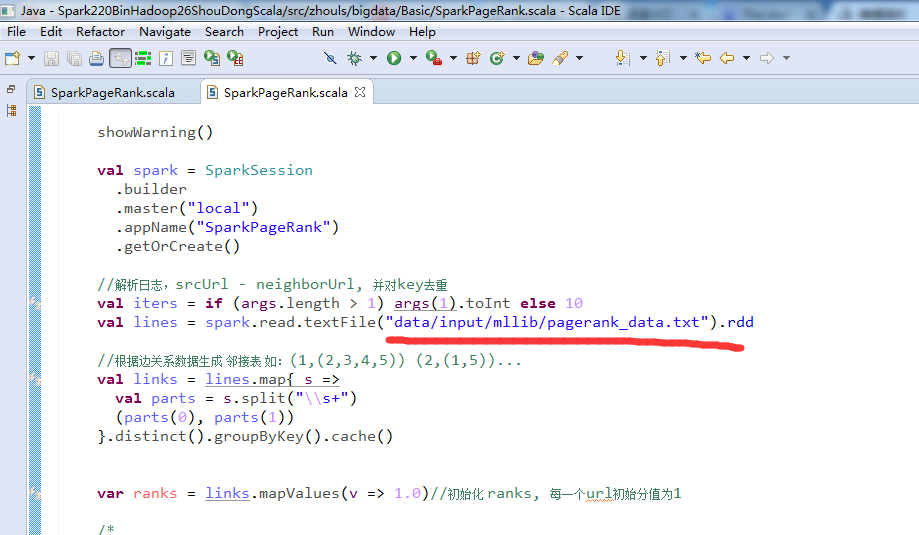

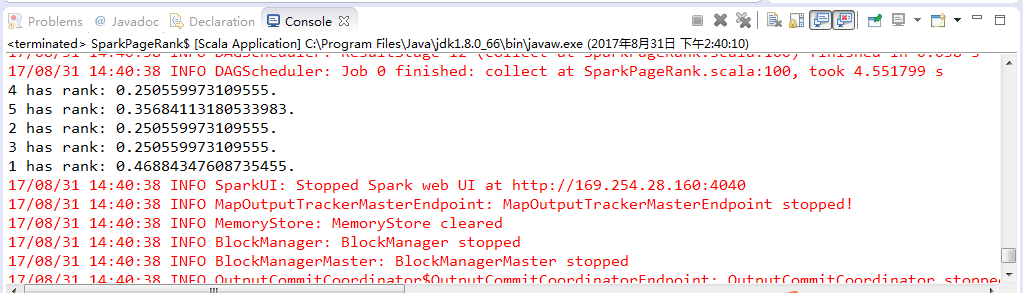

SparkPageRank.scala under the Basic package, in spark-2.2.0-bin-hadoop2.6

    /*
     * Licensed to the Apache Software Foundation (ASF) under one or more
     * contributor license agreements. See the NOTICE file distributed with
     * this work for additional information regarding copyright ownership.
     * The ASF licenses this file to You under the Apache License, Version 2.0
     * (the "License"); you may not use this file except in compliance with
     * the License. You may obtain a copy of the License at
     *
     * http://www.apache.org/licenses/LICENSE-2.0
     *
     * Unless required by applicable law or agreed to in writing, software
     * distributed under the License is distributed on an "AS IS" BASIS,
     * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
     * See the License for the specific language governing permissions and
     * limitations under the License.
     */

    // scalastyle:off println
    package org.apache.spark.examples

    import org.apache.spark.sql.SparkSession

    /**
     * Computes the PageRank of URLs from an input file. Input file should
     * be in format of:
     * URL neighbor URL
     * URL neighbor URL
     * URL neighbor URL
     * ...
     * where URL and their neighbors are separated by space(s).
     *
     * This is an example implementation for learning how to use Spark. For more conventional use,
     * please refer to org.apache.spark.graphx.lib.PageRank
     *
     * Example Usage:
     * {{{
     * bin/run-example SparkPageRank data/mllib/pagerank_data.txt 10
     * }}}
     */
    object SparkPageRank {

      /*
       * Prints the warning message.
       */
      def showWarning() {
        System.err.println(
          """WARN: This is a naive implementation of PageRank and is given as an example!
            |Please use the PageRank implementation found in org.apache.spark.graphx.lib.PageRank
            |for more conventional use.
          """.stripMargin)
      }

      /*
       * Main function.
       */
      def main(args: Array[String]) {
        if (args.length < 1) {
          System.err.println("Usage: SparkPageRank <file> <iter>")
          System.exit(1)
        }

        showWarning()

        val spark = SparkSession
          .builder
          .master("local")
          .appName("SparkPageRank")
          .getOrCreate()

        val iters = if (args.length > 1) args(1).toInt else 10
        // Note: the input path is hardcoded here; args(0) is not read.
        val lines = spark.read.textFile("data/input/mllib/pagerank_data.txt").rdd

        // Parse each line into a (srcUrl, neighborUrl) pair and de-duplicate the pairs,
        // then build the adjacency list from the edge data, e.g. (1,(2,3,4,5)) (2,(1,5)) ...
        val links = lines.map{ s =>
          val parts = s.split("\\s+")
          (parts(0), parts(1))
        }.distinct().groupByKey().cache()

        // Initialize ranks: every URL starts with a rank of 1.0
        var ranks = links.mapValues(v => 1.0)

        /*
         * Iterate iters times. In each iteration:
         * join links (urlKey, neighborUrls) with ranks (urlKey, rank);
         * for every neighborUrl emit (neighborUrl, rank / size) as its new rank contribution;
         * then sum the contributions with reduceByKey and adjust the score with 0.15 + 0.85 * _
         */
        for (i <- 1 to iters) {
          val contribs = links.join(ranks).values.flatMap{ case (urls, rank) =>
            val size = urls.size
            urls.map(url => (url, rank / size))
          }
          ranks = contribs.reduceByKey(_ + _).mapValues(0.15 + 0.85 * _)
        }

        // Print the ranks
        val output = ranks.collect()
        output.foreach(tup => println(tup._1 + " has rank: " + tup._2 + "."))

        spark.stop()
      }
    }
    // scalastyle:on println

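The Spark 2.2.0 listing above prints a usage line that mentions `<file> <iter>`, yet it reads a fixed input path and only takes the iteration count from the arguments. One possible way to line the two up is sketched below. `PageRankArgs`, `Config` and `parse` are hypothetical names introduced for illustration, and falling back to the hardcoded path when no argument is given is an assumption of this sketch (the listing exits instead).

```scala
object PageRankArgs {
  // Assumed defaults: the path hardcoded in the listing and its
  // default iteration count of 10.
  val DefaultPath = "data/input/mllib/pagerank_data.txt"
  val DefaultIters = 10

  final case class Config(path: String, iters: Int)

  // args(0) = input file (optional), args(1) = number of iterations (optional).
  // A non-numeric args(1) throws NumberFormatException, as toInt does in the listing.
  def parse(args: Array[String]): Config = {
    val path = if (args.length > 0) args(0) else DefaultPath
    val iters = if (args.length > 1) args(1).toInt else DefaultIters
    Config(path, iters)
  }

  def main(args: Array[String]): Unit = {
    val cfg = parse(args)
    println(s"input=${cfg.path}, iterations=${cfg.iters}")
  }
}
```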
WARN: This is a naive implementation of PageRank and is given as an example!
Please use the PageRank implementation found in org.apache.spark.graphx.lib.PageRank
for more conventional use.
Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
17/08/31 14:40:14 INFO SparkContext: Running Spark version 2.2.0
17/08/31 14:40:15 INFO SparkContext: Submitted application: SparkPageRank
17/08/31 14:40:15 INFO SecurityManager: Changing view acls to: Administrator
17/08/31 14:40:15 INFO SecurityManager: Changing modify acls to: Administrator
17/08/31 14:40:15 INFO SecurityManager: Changing view acls groups to:
17/08/31 14:40:15 INFO SecurityManager: Changing modify acls groups to:
17/08/31 14:40:15 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(Administrator); groups with view permissions: Set(); users with modify permissions: Set(Administrator); groups with modify permissions: Set()
17/08/31 14:40:16 INFO Utils: Successfully started service 'sparkDriver' on port 58368.
17/08/31 14:40:16 INFO SparkEnv: Registering MapOutputTracker
17/08/31 14:40:16 INFO SparkEnv: Registering BlockManagerMaster
17/08/31 14:40:16 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information
17/08/31 14:40:16 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up
17/08/31 14:40:16 INFO DiskBlockManager: Created local directory at C:\Users\Administrator\AppData\Local\Temp\blockmgr-af8c900a-ef5a-4f3d-b8ec-71522f0204ac
17/08/31 14:40:17 INFO MemoryStore: MemoryStore started with capacity 904.8 MB
17/08/31 14:40:17 INFO SparkEnv: Registering OutputCommitCoordinator
17/08/31 14:40:17 INFO Utils: Successfully started service 'SparkUI' on port 4040.
17/08/31 14:40:17 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://169.254.28.160:4040
17/08/31 14:40:17 INFO Executor: Starting executor ID driver on host localhost
17/08/31 14:40:17 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 58377.
17/08/31 14:40:17 INFO NettyBlockTransferService: Server created on 169.254.28.160:58377
17/08/31 14:40:17 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy
17/08/31 14:40:17 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, 169.254.28.160, 58377, None)
17/08/31 14:40:17 INFO BlockManagerMasterEndpoint: Registering block manager 169.254.28.160:58377 with 904.8 MB RAM, BlockManagerId(driver, 169.254.28.160, 58377, None)
17/08/31 14:40:17 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, 169.254.28.160, 58377, None)
17/08/31 14:40:17 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, 169.254.28.160, 58377, None)
17/08/31 14:40:18 INFO SharedState: Setting hive.metastore.warehouse.dir ('null') to the value of spark.sql.warehouse.dir ('file:/D:/Code/EclipsePaperCode/Spark220BinHadoop26ShouDongScala/spark-warehouse/').
17/08/31 14:40:18 INFO SharedState: Warehouse path is 'file:/D:/Code/EclipsePaperCode/Spark220BinHadoop26ShouDongScala/spark-warehouse/'.
17/08/31 14:40:20 INFO StateStoreCoordinatorRef: Registered StateStoreCoordinator endpoint
17/08/31 14:40:29 INFO FileSourceStrategy: Pruning directories with:
17/08/31 14:40:29 INFO FileSourceStrategy: Post-Scan Filters:
17/08/31 14:40:29 INFO FileSourceStrategy: Output Data Schema: struct<value: string>
17/08/31 14:40:29 INFO FileSourceScanExec: Pushed Filters:
17/08/31 14:40:32 INFO CodeGenerator: Code generated in 768.477562 ms
17/08/31 14:40:32 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 219.9 KB, free 904.6 MB)
17/08/31 14:40:32 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 20.6 KB, free 904.6 MB)
17/08/31 14:40:32 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 169.254.28.160:58377 (size: 20.6 KB, free: 904.8 MB)
17/08/31 14:40:32 INFO SparkContext: Created broadcast 0 from rdd at SparkPageRank.scala:75
17/08/31 14:40:32 INFO FileSourceScanExec: Planning scan with bin packing, max size: 4194337 bytes, open cost is considered as scanning 4194304 bytes.
17/08/31 14:40:34 INFO SparkContext: Starting job: collect at SparkPageRank.scala:100
17/08/31 14:40:34 INFO DAGScheduler: Registering RDD 5 (distinct at SparkPageRank.scala:81)
17/08/31 14:40:34 INFO DAGScheduler: Registering RDD 7 (distinct at SparkPageRank.scala:81)
17/08/31 14:40:34 INFO DAGScheduler: Registering RDD 14 (flatMap at SparkPageRank.scala:92)
17/08/31 14:40:34 INFO DAGScheduler: Registering RDD 21 (flatMap at SparkPageRank.scala:92)
17/08/31 14:40:34 INFO DAGScheduler: Registering RDD 28 (flatMap at SparkPageRank.scala:92)
17/08/31 14:40:34 INFO DAGScheduler: Registering RDD 35 (flatMap at SparkPageRank.scala:92)
17/08/31 14:40:34 INFO DAGScheduler: Registering RDD 42 (flatMap at SparkPageRank.scala:92)
17/08/31 14:40:34 INFO DAGScheduler: Registering RDD 49 (flatMap at SparkPageRank.scala:92)
17/08/31 14:40:34 INFO DAGScheduler: Registering RDD 56 (flatMap at SparkPageRank.scala:92)
17/08/31 14:40:34 INFO DAGScheduler: Registering RDD 63 (flatMap at SparkPageRank.scala:92)
17/08/31 14:40:34 INFO DAGScheduler: Registering RDD 70 (flatMap at SparkPageRank.scala:92)
17/08/31 14:40:34 INFO DAGScheduler: Registering RDD 77 (flatMap at SparkPageRank.scala:92)
17/08/31 14:40:34 INFO DAGScheduler: Got job 0 (collect at SparkPageRank.scala:100) with 1 output partitions
17/08/31 14:40:34 INFO DAGScheduler: Final stage: ResultStage 12 (collect at SparkPageRank.scala:100)
17/08/31 14:40:34 INFO DAGScheduler: Parents of final stage: List(ShuffleMapStage 11)
17/08/31 14:40:34 INFO DAGScheduler: Missing parents: List(ShuffleMapStage 11)
17/08/31 14:40:34 INFO DAGScheduler: Submitting ShuffleMapStage 0 (MapPartitionsRDD[5] at distinct at SparkPageRank.scala:81), which has no missing parents
17/08/31 14:40:35 INFO MemoryStore: Block broadcast_1 stored as values in memory (estimated size 11.0 KB, free 904.6 MB)
17/08/31 14:40:35 INFO MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 5.8 KB, free 904.5 MB)
17/08/31 14:40:35 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on 169.254.28.160:58377 (size: 5.8 KB, free: 904.8 MB)
17/08/31 14:40:35 INFO SparkContext: Created broadcast 1 from broadcast at DAGScheduler.scala:1006
17/08/31 14:40:35 INFO DAGScheduler: Submitting 1 missing tasks from ShuffleMapStage 0 (MapPartitionsRDD[5] at distinct at SparkPageRank.scala:81) (first 15 tasks are for partitions Vector(0))
17/08/31 14:40:35 INFO TaskSchedulerImpl: Adding task set 0.0 with 1 tasks
17/08/31 14:40:35 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, localhost, executor driver, partition 0, PROCESS_LOCAL, 5320 bytes)
17/08/31 14:40:35 INFO Executor: Running task 0.0 in stage 0.0 (TID 0)
17/08/31 14:40:36 INFO CodeGenerator: Code generated in 58.596224 ms
17/08/31 14:40:36 INFO FileScanRDD: Reading File path: file:///D:/Code/EclipsePaperCode/Spark220BinHadoop26ShouDongScala/data/input/pagerank_data.txt, range: 0-33, partition values: [empty row]
17/08/31 14:40:36 INFO CodeGenerator: Code generated in 38.938763 ms
17/08/31 14:40:36 INFO Executor: Finished task 0.0 in stage 0.0 (TID 0). 1755 bytes result sent to driver
17/08/31 14:40:36 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 1200 ms on localhost (executor driver) (1/1)
17/08/31 14:40:36 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool
17/08/31 14:40:36 INFO DAGScheduler: ShuffleMapStage 0 (distinct at SparkPageRank.scala:81) finished in 1.306 s
17/08/31 14:40:36 INFO DAGScheduler: looking for newly runnable stages
17/08/31 14:40:36 INFO DAGScheduler: running: Set()
17/08/31 14:40:36 INFO DAGScheduler: waiting: Set(ResultStage 12, ShuffleMapStage 9, ShuffleMapStage 1, ShuffleMapStage 5, ShuffleMapStage 2, ShuffleMapStage 6, ShuffleMapStage 3, ShuffleMapStage 10, ShuffleMapStage 7, ShuffleMapStage 4, ShuffleMapStage 11, ShuffleMapStage 8)
17/08/31 14:40:36 INFO DAGScheduler: failed: Set()
17/08/31 14:40:36 INFO DAGScheduler: Submitting ShuffleMapStage 1 (MapPartitionsRDD[7] at distinct at SparkPageRank.scala:81), which has no missing parents
17/08/31 14:40:36 INFO MemoryStore: Block broadcast_2 stored as values in memory (estimated size 4.7 KB, free 904.5 MB)
17/08/31 14:40:36 INFO MemoryStore: Block broadcast_2_piece0 stored as bytes in memory (estimated size 2.5 KB, free 904.5 MB)
17/08/31 14:40:36 INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on 169.254.28.160:58377 (size: 2.5 KB, free: 904.8 MB)
17/08/31 14:40:36 INFO SparkContext: Created broadcast 2 from broadcast at DAGScheduler.scala:1006
17/08/31 14:40:36 INFO DAGScheduler: Submitting 1 missing tasks from ShuffleMapStage 1 (MapPartitionsRDD[7] at distinct at SparkPageRank.scala:81) (first 15 tasks are for partitions Vector(0))
17/08/31 14:40:36 INFO TaskSchedulerImpl: Adding task set 1.0 with 1 tasks
17/08/31 14:40:36 INFO TaskSetManager: Starting task 0.0 in stage 1.0 (TID 1, localhost, executor driver, partition 0, ANY, 4610 bytes)
17/08/31 14:40:36 INFO Executor: Running task 0.0 in stage 1.0 (TID 1)
17/08/31 14:40:36 INFO ShuffleBlockFetcherIterator: Getting 1 non-empty blocks out of 1 blocks
17/08/31 14:40:36 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 27 ms
17/08/31 14:40:37 INFO Executor: Finished task 0.0 in stage 1.0 (TID 1). 1241 bytes result sent to driver
17/08/31 14:40:37 INFO TaskSetManager: Finished task 0.0 in stage 1.0 (TID 1) in 275 ms on localhost (executor driver) (1/1)
17/08/31 14:40:37 INFO TaskSchedulerImpl: Removed TaskSet 1.0, whose tasks have all completed, from pool
17/08/31 14:40:37 INFO DAGScheduler: ShuffleMapStage 1 (distinct at SparkPageRank.scala:81) finished in 0.279 s
17/08/31 14:40:37 INFO DAGScheduler: looking for newly runnable stages
17/08/31 14:40:37 INFO DAGScheduler: running: Set()
17/08/31 14:40:37 INFO DAGScheduler: waiting: Set(ResultStage 12, ShuffleMapStage 9, ShuffleMapStage 5, ShuffleMapStage 2, ShuffleMapStage 6, ShuffleMapStage 3, ShuffleMapStage 10, ShuffleMapStage 7, ShuffleMapStage 4, ShuffleMapStage 11, ShuffleMapStage 8)
17/08/31 14:40:37 INFO DAGScheduler: failed: Set()
17/08/31 14:40:37 INFO DAGScheduler: Submitting ShuffleMapStage 2 (MapPartitionsRDD[14] at flatMap at SparkPageRank.scala:92), which has no missing parents
17/08/31 14:40:37 INFO MemoryStore: Block broadcast_3 stored as values in memory (estimated size 6.5 KB, free 904.5 MB)
17/08/31 14:40:37 INFO MemoryStore: Block broadcast_3_piece0 stored as bytes in memory (estimated size 3.2 KB, free 904.5 MB)
17/08/31 14:40:37 INFO BlockManagerInfo: Added broadcast_3_piece0 in memory on 169.254.28.160:58377 (size: 3.2 KB, free: 904.8 MB)
17/08/31 14:40:37 INFO SparkContext: Created broadcast 3 from broadcast at DAGScheduler.scala:1006
17/08/31 14:40:37 INFO DAGScheduler: Submitting 1 missing tasks from ShuffleMapStage 2 (MapPartitionsRDD[14] at flatMap at SparkPageRank.scala:92) (first 15 tasks are for partitions Vector(0))
17/08/31 14:40:37 INFO TaskSchedulerImpl: Adding task set 2.0 with 1 tasks
17/08/31 14:40:37 INFO TaskSetManager: Starting task 0.0 in stage 2.0 (TID 2, localhost, executor driver, partition 0, ANY, 4840 bytes)
17/08/31 14:40:37 INFO Executor: Running task 0.0 in stage 2.0 (TID 2)
17/08/31 14:40:37 INFO ShuffleBlockFetcherIterator: Getting 1 non-empty blocks out of 1 blocks
17/08/31 14:40:37 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 0 ms
17/08/31 14:40:37 INFO MemoryStore: Block rdd_8_0 stored as values in memory (estimated size 728.0 B, free 904.5 MB)
17/08/31 14:40:37 INFO BlockManagerInfo: Added rdd_8_0 in memory on 169.254.28.160:58377 (size: 728.0 B, free: 904.8 MB)
17/08/31 14:40:37 INFO BlockManager: Found block rdd_8_0 locally
17/08/31 14:40:37 INFO Executor: Finished task 0.0 in stage 2.0 (TID 2). 2067 bytes result sent to driver
17/08/31 14:40:37 INFO TaskSetManager: Finished task 0.0 in stage 2.0 (TID 2) in 198 ms on localhost (executor driver) (1/1)
17/08/31 14:40:37 INFO TaskSchedulerImpl: Removed TaskSet 2.0, whose tasks have all completed, from pool
17/08/31 14:40:37 INFO DAGScheduler: ShuffleMapStage 2 (flatMap at SparkPageRank.scala:92) finished in 0.206 s
17/08/31 14:40:37 INFO DAGScheduler: looking for newly runnable stages
17/08/31 14:40:37 INFO DAGScheduler: running: Set()
17/08/31 14:40:37 INFO DAGScheduler: waiting: Set(ResultStage 12, ShuffleMapStage 9, ShuffleMapStage 5, ShuffleMapStage 6, ShuffleMapStage 3, ShuffleMapStage 10, ShuffleMapStage 7, ShuffleMapStage 4, ShuffleMapStage 11, ShuffleMapStage 8)
17/08/31 14:40:37 INFO DAGScheduler: failed: Set()
17/08/31 14:40:37 INFO DAGScheduler: Submitting ShuffleMapStage 3 (MapPartitionsRDD[21] at flatMap at SparkPageRank.scala:92), which has no missing parents
17/08/31 14:40:37 INFO MemoryStore: Block broadcast_4 stored as values in memory (estimated size 6.8 KB, free 904.5 MB)
17/08/31 14:40:37 INFO MemoryStore: Block broadcast_4_piece0 stored as bytes in memory (estimated size 3.4 KB, free 904.5 MB)
17/08/31 14:40:37 INFO BlockManagerInfo: Added broadcast_4_piece0 in memory on 169.254.28.160:58377 (size: 3.4 KB, free: 904.8 MB)
17/08/31 14:40:37 INFO SparkContext: Created broadcast 4 from broadcast at DAGScheduler.scala:1006
17/08/31 14:40:37 INFO DAGScheduler: Submitting 1 missing tasks from ShuffleMapStage 3 (MapPartitionsRDD[21] at flatMap at SparkPageRank.scala:92) (first 15 tasks are for partitions Vector(0))
17/08/31 14:40:37 INFO TaskSchedulerImpl: Adding task set 3.0 with 1 tasks
17/08/31 14:40:37 INFO TaskSetManager: Starting task 0.0 in stage 3.0 (TID 3, localhost, executor driver, partition 0, PROCESS_LOCAL, 4849 bytes)
17/08/31 14:40:37 INFO Executor: Running task 0.0 in stage 3.0 (TID 3)
17/08/31 14:40:37 INFO BlockManager: Found block rdd_8_0 locally
17/08/31 14:40:37 INFO ShuffleBlockFetcherIterator: Getting 1 non-empty blocks out of 1 blocks
17/08/31 14:40:37 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 0 ms
17/08/31 14:40:37 INFO Executor: Finished task 0.0 in stage 3.0 (TID 3). 1370 bytes result sent to driver
17/08/31 14:40:37 INFO TaskSetManager: Finished task 0.0 in stage 3.0 (TID 3) in 103 ms on localhost (executor driver) (1/1)
17/08/31 14:40:37 INFO TaskSchedulerImpl: Removed TaskSet 3.0, whose tasks have all completed, from pool
17/08/31 14:40:37 INFO DAGScheduler: ShuffleMapStage 3 (flatMap at SparkPageRank.scala:92) finished in 0.162 s
17/08/31 14:40:37 INFO DAGScheduler: looking for newly runnable stages
17/08/31 14:40:37 INFO DAGScheduler: running: Set()
17/08/31 14:40:37 INFO DAGScheduler: waiting: Set(ResultStage 12, ShuffleMapStage 9, ShuffleMapStage 5, ShuffleMapStage 6, ShuffleMapStage 10, ShuffleMapStage 7, ShuffleMapStage 4, ShuffleMapStage 11, ShuffleMapStage 8)
17/08/31 14:40:37 INFO DAGScheduler: failed: Set()
17/08/31 14:40:37 INFO DAGScheduler: Submitting ShuffleMapStage 4 (MapPartitionsRDD[28] at flatMap at SparkPageRank.scala:92), which has no missing parents
17/08/31 14:40:37 INFO MemoryStore: Block broadcast_5 stored as values in memory (estimated size 6.8 KB, free 904.5 MB)
17/08/31 14:40:37 INFO MemoryStore: Block broadcast_5_piece0 stored as bytes in memory (estimated size 3.4 KB, free 904.5 MB)
17/08/31 14:40:37 INFO BlockManagerInfo: Added broadcast_5_piece0 in memory on 169.254.28.160:58377 (size: 3.4 KB, free: 904.8 MB)
17/08/31 14:40:37 INFO SparkContext: Created broadcast 5 from broadcast at DAGScheduler.scala:1006
17/08/31 14:40:37 INFO DAGScheduler: Submitting 1 missing tasks from ShuffleMapStage 4 (MapPartitionsRDD[28] at flatMap at SparkPageRank.scala:92) (first 15 tasks are for partitions Vector(0))
17/08/31 14:40:37 INFO TaskSchedulerImpl: Adding task set 4.0 with 1 tasks
17/08/31 14:40:37 INFO TaskSetManager: Starting task 0.0 in stage 4.0 (TID 4, localhost, executor driver, partition 0, PROCESS_LOCAL, 4849 bytes)
17/08/31 14:40:37 INFO Executor: Running task 0.0 in stage 4.0 (TID 4)
17/08/31 14:40:37 INFO BlockManager: Found block rdd_8_0 locally
17/08/31 14:40:37 INFO ShuffleBlockFetcherIterator: Getting 1 non-empty blocks out of 1 blocks
17/08/31 14:40:37 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 1 ms
17/08/31 14:40:37 INFO Executor: Finished task 0.0 in stage 4.0 (TID 4). 1413 bytes result sent to driver
17/08/31 14:40:37 INFO TaskSetManager: Finished task 0.0 in stage 4.0 (TID 4) in 104 ms on localhost (executor driver) (1/1)
17/08/31 14:40:37 INFO TaskSchedulerImpl: Removed TaskSet 4.0, whose tasks have all completed, from pool
17/08/31 14:40:37 INFO DAGScheduler: ShuffleMapStage 4 (flatMap at SparkPageRank.scala:92) finished in 0.111 s
17/08/31 14:40:37 INFO DAGScheduler: looking for newly runnable stages
17/08/31 14:40:37 INFO DAGScheduler: running: Set()
17/08/31 14:40:37 INFO DAGScheduler: waiting: Set(ResultStage 12, ShuffleMapStage 9, ShuffleMapStage 5, ShuffleMapStage 6, ShuffleMapStage 10, ShuffleMapStage 7, ShuffleMapStage 11, ShuffleMapStage 8)
17/08/31 14:40:37 INFO DAGScheduler: failed: Set()
17/08/31 14:40:37 INFO DAGScheduler: Submitting ShuffleMapStage 5 (MapPartitionsRDD[35] at flatMap at SparkPageRank.scala:92), which has no missing parents
17/08/31 14:40:37 INFO MemoryStore: Block broadcast_6 stored as values in memory (estimated size 6.8 KB, free 904.5 MB)
17/08/31 14:40:37 INFO MemoryStore: Block broadcast_6_piece0 stored as bytes in memory (estimated size 3.4 KB, free 904.5 MB)
17/08/31 14:40:37 INFO BlockManagerInfo: Added broadcast_6_piece0 in memory on 169.254.28.160:58377 (size: 3.4 KB, free: 904.8 MB)
17/08/31 14:40:37 INFO SparkContext: Created broadcast 6 from broadcast at DAGScheduler.scala:1006
17/08/31 14:40:37 INFO DAGScheduler: Submitting 1 missing tasks from ShuffleMapStage 5 (MapPartitionsRDD[35] at flatMap at SparkPageRank.scala:92) (first 15 tasks are for partitions Vector(0))
17/08/31 14:40:37 INFO TaskSchedulerImpl: Adding task set 5.0 with 1 tasks
17/08/31 14:40:37 INFO TaskSetManager: Starting task 0.0 in stage 5.0 (TID 5, localhost, executor driver, partition 0, PROCESS_LOCAL, 4849 bytes)
17/08/31 14:40:37 INFO Executor: Running task 0.0 in stage 5.0 (TID 5)
17/08/31 14:40:37 INFO BlockManager: Found block rdd_8_0 locally
17/08/31 14:40:37 INFO ShuffleBlockFetcherIterator: Getting 1 non-empty blocks out of 1 blocks
17/08/31 14:40:37 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 0 ms
17/08/31 14:40:37 INFO Executor: Finished task 0.0 in stage 5.0 (TID 5). 1370 bytes result sent to driver
17/08/31 14:40:37 INFO TaskSetManager: Finished task 0.0 in stage 5.0 (TID 5) in 74 ms on localhost (executor driver) (1/1)
17/08/31 14:40:37 INFO TaskSchedulerImpl: Removed TaskSet 5.0, whose tasks have all completed, from pool
17/08/31 14:40:37 INFO DAGScheduler: ShuffleMapStage 5 (flatMap at SparkPageRank.scala:92) finished in 0.079 s
17/08/31 14:40:37 INFO DAGScheduler: looking for newly runnable stages
17/08/31 14:40:37 INFO DAGScheduler: running: Set()
17/08/31 14:40:37 INFO DAGScheduler: waiting: Set(ResultStage 12, ShuffleMapStage 9, ShuffleMapStage 6, ShuffleMapStage 10, ShuffleMapStage 7, ShuffleMapStage 11, ShuffleMapStage 8)
17/08/31 14:40:37 INFO DAGScheduler: failed: Set()
17/08/31 14:40:37 INFO DAGScheduler: Submitting ShuffleMapStage 6 (MapPartitionsRDD[42] at flatMap at SparkPageRank.scala:92), which has no missing parents
17/08/31 14:40:37 INFO MemoryStore: Block broadcast_7 stored as values in memory (estimated size 6.8 KB, free 904.5 MB)
17/08/31 14:40:37 INFO MemoryStore: Block broadcast_7_piece0 stored as bytes in memory (estimated size 3.4 KB, free 904.5 MB)
17/08/31 14:40:37 INFO BlockManagerInfo: Added broadcast_7_piece0 in memory on 169.254.28.160:58377 (size: 3.4 KB, free: 904.8 MB)
17/08/31 14:40:37 INFO SparkContext: Created broadcast 7 from broadcast at DAGScheduler.scala:1006
17/08/31 14:40:37 INFO DAGScheduler: Submitting 1 missing tasks from ShuffleMapStage 6 (MapPartitionsRDD[42] at flatMap at SparkPageRank.scala:92) (first 15 tasks are for partitions Vector(0))
17/08/31 14:40:37 INFO TaskSchedulerImpl: Adding task set 6.0 with 1 tasks
17/08/31 14:40:37 INFO TaskSetManager: Starting task 0.0 in stage 6.0 (TID 6, localhost, executor driver, partition 0, PROCESS_LOCAL, 4849 bytes)
17/08/31 14:40:37 INFO Executor: Running task 0.0 in stage 6.0 (TID 6)
17/08/31 14:40:37 INFO BlockManager: Found block rdd_8_0 locally
17/08/31 14:40:37 INFO ShuffleBlockFetcherIterator: Getting 1 non-empty blocks out of 1 blocks
17/08/31 14:40:37 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 0 ms
17/08/31 14:40:37 INFO Executor: Finished task 0.0 in stage 6.0 (TID 6). 1370 bytes result sent to driver
17/08/31 14:40:37 INFO TaskSetManager: Finished task 0.0 in stage 6.0 (TID 6) in 74 ms on localhost (executor driver) (1/1)
17/08/31 14:40:37 INFO TaskSchedulerImpl: Removed TaskSet 6.0, whose tasks have all completed, from pool
17/08/31 14:40:37 INFO DAGScheduler: ShuffleMapStage 6 (flatMap at SparkPageRank.scala:92) finished in 0.081 s
17/08/31 14:40:37 INFO DAGScheduler: looking for newly runnable stages
17/08/31 14:40:37 INFO DAGScheduler: running: Set()
17/08/31 14:40:37 INFO DAGScheduler: waiting: Set(ResultStage 12, ShuffleMapStage 9, ShuffleMapStage 10, ShuffleMapStage 7, ShuffleMapStage 11, ShuffleMapStage 8)
17/08/31 14:40:37 INFO DAGScheduler: failed: Set()
17/08/31 14:40:37 INFO DAGScheduler: Submitting ShuffleMapStage 7 (MapPartitionsRDD[49] at flatMap at SparkPageRank.scala:92), which has no missing parents
17/08/31 14:40:37 INFO MemoryStore: Block broadcast_8 stored as values in memory (estimated size 6.8 KB, free 904.5 MB)
17/08/31 14:40:37 INFO MemoryStore: Block broadcast_8_piece0 stored as bytes in memory (estimated size 3.4 KB, free 904.5 MB)
17/08/31 14:40:37 INFO BlockManagerInfo: Added broadcast_8_piece0 in memory on 169.254.28.160:58377 (size: 3.4 KB, free: 904.8 MB)
17/08/31 14:40:37 INFO SparkContext: Created broadcast 8 from broadcast at DAGScheduler.scala:1006
17/08/31 14:40:37 INFO DAGScheduler: Submitting 1 missing tasks from ShuffleMapStage 7 (MapPartitionsRDD[49] at flatMap at SparkPageRank.scala:92) (first 15 tasks are for partitions Vector(0))
17/08/31 14:40:38 INFO TaskSchedulerImpl: Adding task set 7.0 with 1 tasks
17/08/31 14:40:38 INFO TaskSetManager: Starting task 0.0 in stage 7.0 (TID 7, localhost, executor driver, partition 0, PROCESS_LOCAL, 4849 bytes)
17/08/31 14:40:38 INFO Executor: Running task 0.0 in stage 7.0 (TID 7)
17/08/31 14:40:38 INFO BlockManager: Found block rdd_8_0 locally
17/08/31 14:40:38 INFO ShuffleBlockFetcherIterator: Getting 1 non-empty blocks out of 1 blocks
17/08/31 14:40:38 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 1 ms
17/08/31 14:40:38 INFO Executor: Finished task 0.0 in stage 7.0 (TID 7). 1370 bytes result sent to driver
17/08/31 14:40:38 INFO TaskSetManager: Finished task 0.0 in stage 7.0 (TID 7) in 62 ms on localhost (executor driver) (1/1)
17/08/31 14:40:38 INFO TaskSchedulerImpl: Removed TaskSet 7.0, whose tasks have all completed, from pool
17/08/31 14:40:38 INFO DAGScheduler: ShuffleMapStage 7 (flatMap at SparkPageRank.scala:92) finished in 0.065 s
17/08/31 14:40:38 INFO DAGScheduler: looking for newly runnable stages
17/08/31 14:40:38 INFO DAGScheduler: running: Set()
17/08/31 14:40:38 INFO DAGScheduler: waiting: Set(ResultStage 12, ShuffleMapStage 9, ShuffleMapStage 10, ShuffleMapStage 11, ShuffleMapStage 8)
17/08/31 14:40:38 INFO DAGScheduler: failed: Set()
17/08/31 14:40:38 INFO DAGScheduler: Submitting ShuffleMapStage 8 (MapPartitionsRDD[56] at flatMap at SparkPageRank.scala:92), which has no missing parents
17/08/31 14:40:38 INFO MemoryStore: Block broadcast_9 stored as values in memory (estimated size 6.8 KB, free 904.5 MB)
17/08/31 14:40:38 INFO MemoryStore: Block broadcast_9_piece0 stored as bytes in memory (estimated size 3.4 KB, free 904.5 MB)
17/08/31 14:40:38 INFO BlockManagerInfo: Added broadcast_9_piece0 in memory on 169.254.28.160:58377 (size: 3.4 KB, free: 904.7 MB)
17/08/31 14:40:38 INFO SparkContext: Created broadcast 9 from broadcast at DAGScheduler.scala:1006
17/08/31 14:40:38 INFO DAGScheduler: Submitting 1 missing tasks from ShuffleMapStage 8 (MapPartitionsRDD[56] at flatMap at SparkPageRank.scala:92) (first 15 tasks are for partitions Vector(0))
17/08/31 14:40:38 INFO TaskSchedulerImpl: Adding task set 8.0 with 1 tasks
17/08/31 14:40:38 INFO TaskSetManager: Starting task 0.0 in stage 8.0 (TID 8, localhost, executor driver, partition 0, PROCESS_LOCAL, 4849 bytes)
17/08/31 14:40:38 INFO Executor: Running task 0.0 in stage 8.0 (TID 8)
17/08/31 14:40:38 INFO BlockManager: Found block rdd_8_0 locally
17/08/31 14:40:38 INFO ShuffleBlockFetcherIterator: Getting 1 non-empty blocks out of 1 blocks
17/08/31 14:40:38 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 1 ms
17/08/31 14:40:38 INFO Executor: Finished task 0.0 in stage 8.0 (TID 8). 1327 bytes result sent to driver
17/08/31 14:40:38 INFO TaskSetManager: Finished task 0.0 in stage 8.0 (TID 8) in 80 ms on localhost (executor driver) (1/1)
17/08/31 14:40:38 INFO TaskSchedulerImpl: Removed TaskSet 8.0, whose tasks have all completed, from pool
17/08/31 14:40:38 INFO DAGScheduler: ShuffleMapStage 8 (flatMap at SparkPageRank.scala:92) finished in 0.085 s
17/08/31 14:40:38 INFO DAGScheduler: looking for newly runnable stages
17/08/31 14:40:38 INFO DAGScheduler: running: Set()
17/08/31 14:40:38 INFO DAGScheduler: waiting: Set(ResultStage 12, ShuffleMapStage 9, ShuffleMapStage 10, ShuffleMapStage 11)
17/08/31 14:40:38 INFO DAGScheduler: failed: Set()
17/08/31 14:40:38 INFO DAGScheduler: Submitting ShuffleMapStage 9 (MapPartitionsRDD[63] at flatMap at SparkPageRank.scala:92), which has no missing parents
17/08/31 14:40:38 INFO MemoryStore: Block broadcast_10 stored as values in memory (estimated size 6.8 KB, free 904.5 MB)
17/08/31 14:40:38 INFO MemoryStore: Block broadcast_10_piece0 stored as bytes in memory (estimated size 3.4 KB, free 904.5 MB)
17/08/31 14:40:38 INFO BlockManagerInfo: Added broadcast_10_piece0 in memory on 169.254.28.160:58377 (size: 3.4 KB, free: 904.7 MB)
17/08/31 14:40:38 INFO SparkContext: Created broadcast 10 from broadcast at DAGScheduler.scala:1006
17/08/31 14:40:38 INFO DAGScheduler: Submitting 1 missing tasks from ShuffleMapStage 9 (MapPartitionsRDD[63] at flatMap at SparkPageRank.scala:92) (first 15 tasks are for partitions Vector(0))
17/08/31 14:40:38 INFO TaskSchedulerImpl: Adding task set 9.0 with 1 tasks
17/08/31 14:40:38 INFO TaskSetManager: Starting task 0.0 in stage 9.0 (TID 9, localhost, executor driver, partition 0, PROCESS_LOCAL, 4849 bytes)
17/08/31 14:40:38 INFO Executor: Running task 0.0 in stage 9.0 (TID 9)
17/08/31 14:40:38 INFO BlockManager: Found block rdd_8_0 locally
17/08/31 14:40:38 INFO ShuffleBlockFetcherIterator: Getting 1 non-empty blocks out of 1 blocks
17/08/31 14:40:38 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 0 ms
17/08/31 14:40:38 INFO Executor: Finished task 0.0 in stage 9.0 (TID 9). 1370 bytes result sent to driver
17/08/31 14:40:38 INFO TaskSetManager: Finished task 0.0 in stage 9.0 (TID 9) in 62 ms on localhost (executor driver) (1/1)
17/08/31 14:40:38 INFO TaskSchedulerImpl: Removed TaskSet 9.0, whose tasks have all completed, from pool
17/08/31 14:40:38 INFO DAGScheduler: ShuffleMapStage 9 (flatMap at SparkPageRank.scala:92) finished in 0.078 s
17/08/31 14:40:38 INFO DAGScheduler: looking for newly runnable stages
17/08/31 14:40:38 INFO DAGScheduler: running: Set()
17/08/31 14:40:38 INFO DAGScheduler: waiting: Set(ResultStage 12, ShuffleMapStage 10, ShuffleMapStage 11)
17/08/31 14:40:38 INFO DAGScheduler: failed: Set()
17/08/31 14:40:38 INFO DAGScheduler: Submitting ShuffleMapStage 10 (MapPartitionsRDD[70] at flatMap at SparkPageRank.scala:92), which has no missing parents
17/08/31 14:40:38 INFO MemoryStore: Block broadcast_11 stored as values in memory (estimated size 6.8 KB, free 904.5 MB)
17/08/31 14:40:38 INFO MemoryStore: Block broadcast_11_piece0 stored as bytes in memory (estimated size 3.4 KB, free 904.5 MB)
17/08/31 14:40:38 INFO BlockManagerInfo: Added broadcast_11_piece0 in memory on 169.254.28.160:58377 (size: 3.4 KB, free: 904.7 MB)
17/08/31 14:40:38 INFO SparkContext: Created broadcast 11 from broadcast at DAGScheduler.scala:1006
17/08/31 14:40:38 INFO DAGScheduler: Submitting 1 missing tasks from ShuffleMapStage 10 (MapPartitionsRDD[70] at flatMap at SparkPageRank.scala:92) (first 15 tasks are for partitions Vector(0))
17/08/31 14:40:38 INFO TaskSchedulerImpl: Adding task set 10.0 with 1 tasks
17/08/31 14:40:38 INFO TaskSetManager: Starting task 0.0 in stage 10.0 (TID 10, localhost, executor driver, partition 0, PROCESS_LOCAL, 4849 bytes)
17/08/31 14:40:38 INFO Executor: Running task 0.0 in stage 10.0 (TID 10)
17/08/31 14:40:38 INFO BlockManager: Found block rdd_8_0 locally
17/08/31 14:40:38 INFO ShuffleBlockFetcherIterator: Getting 1 non-empty blocks out of 1 blocks
17/08/31 14:40:38 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 0 ms
17/08/31 14:40:38 INFO Executor: Finished task 0.0 in stage 10.0 (TID 10). 1327 bytes result sent to driver
17/08/31 14:40:38 INFO TaskSetManager: Finished task 0.0 in stage 10.0 (TID 10) in 51 ms on localhost (executor driver) (1/1)
17/08/31 14:40:38 INFO TaskSchedulerImpl: Removed TaskSet 10.0, whose tasks have all completed, from pool
17/08/31 14:40:38 INFO DAGScheduler: ShuffleMapStage 10 (flatMap at SparkPageRank.scala:92) finished in 0.055 s
17/08/31 14:40:38 INFO DAGScheduler: looking for newly runnable stages
17/08/31 14:40:38 INFO DAGScheduler: running: Set()
17/08/31 14:40:38 INFO DAGScheduler: waiting: Set(ResultStage 12, ShuffleMapStage 11)
17/08/31 14:40:38 INFO DAGScheduler: failed: Set()
17/08/31 14:40:38 INFO DAGScheduler: Submitting ShuffleMapStage 11 (MapPartitionsRDD[77] at flatMap at SparkPageRank.scala:92), which has no missing parents
17/08/31 14:40:38 INFO MemoryStore: Block broadcast_12 stored as values in memory (estimated size 6.8 KB, free 904.4 MB)
17/08/31 14:40:38 INFO MemoryStore: Block broadcast_12_piece0 stored as bytes in memory (estimated size 3.4 KB, free 904.4 MB)
17/08/31 14:40:38 INFO BlockManagerInfo: Added broadcast_12_piece0 in memory on 169.254.28.160:58377
(size: 3.4 KB, free: 904.7 MB) 17/08/31 14:40:38 INFO SparkContext: Created broadcast 12 from broadcast at DAGScheduler.scala:1006 17/08/31 14:40:38 INFO DAGScheduler: Submitting 1 missing tasks from ShuffleMapStage 11 (MapPartitionsRDD[77] at flatMap at SparkPageRank.scala:92) (first 15 tasks are for partitions Vector(0)) 17/08/31 14:40:38 INFO TaskSchedulerImpl: Adding task set 11.0 with 1 tasks 17/08/31 14:40:38 INFO TaskSetManager: Starting task 0.0 in stage 11.0 (TID 11, localhost, executor driver, partition 0, PROCESS_LOCAL, 4849 bytes) 17/08/31 14:40:38 INFO Executor: Running task 0.0 in stage 11.0 (TID 11) 17/08/31 14:40:38 INFO BlockManager: Found block rdd_8_0 locally 17/08/31 14:40:38 INFO ShuffleBlockFetcherIterator: Getting 1 non-empty blocks out of 1 blocks 17/08/31 14:40:38 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 0 ms 17/08/31 14:40:38 INFO Executor: Finished task 0.0 in stage 11.0 (TID 11). 1327 bytes result sent to driver 17/08/31 14:40:38 INFO TaskSetManager: Finished task 0.0 in stage 11.0 (TID 11) in 43 ms on localhost (executor driver) (1/1) 17/08/31 14:40:38 INFO TaskSchedulerImpl: Removed TaskSet 11.0, whose tasks have all completed, from pool 17/08/31 14:40:38 INFO DAGScheduler: ShuffleMapStage 11 (flatMap at SparkPageRank.scala:92) finished in 0.045 s 17/08/31 14:40:38 INFO DAGScheduler: looking for newly runnable stages 17/08/31 14:40:38 INFO DAGScheduler: running: Set() 17/08/31 14:40:38 INFO DAGScheduler: waiting: Set(ResultStage 12) 17/08/31 14:40:38 INFO DAGScheduler: failed: Set() 17/08/31 14:40:38 INFO DAGScheduler: Submitting ResultStage 12 (MapPartitionsRDD[79] at mapValues at SparkPageRank.scala:96), which has no missing parents 17/08/31 14:40:38 INFO MemoryStore: Block broadcast_13 stored as values in memory (estimated size 3.7 KB, free 904.4 MB) 17/08/31 14:40:38 INFO MemoryStore: Block broadcast_13_piece0 stored as bytes in memory (estimated size 2.2 KB, free 904.4 MB) 17/08/31 14:40:38 INFO 
BlockManagerInfo: Added broadcast_13_piece0 in memory on 169.254.28.160:58377 (size: 2.2 KB, free: 904.7 MB) 17/08/31 14:40:38 INFO SparkContext: Created broadcast 13 from broadcast at DAGScheduler.scala:1006 17/08/31 14:40:38 INFO DAGScheduler: Submitting 1 missing tasks from ResultStage 12 (MapPartitionsRDD[79] at mapValues at SparkPageRank.scala:96) (first 15 tasks are for partitions Vector(0)) 17/08/31 14:40:38 INFO TaskSchedulerImpl: Adding task set 12.0 with 1 tasks 17/08/31 14:40:38 INFO TaskSetManager: Starting task 0.0 in stage 12.0 (TID 12, localhost, executor driver, partition 0, ANY, 4621 bytes) 17/08/31 14:40:38 INFO Executor: Running task 0.0 in stage 12.0 (TID 12) 17/08/31 14:40:38 INFO ShuffleBlockFetcherIterator: Getting 1 non-empty blocks out of 1 blocks 17/08/31 14:40:38 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 0 ms 17/08/31 14:40:38 INFO Executor: Finished task 0.0 in stage 12.0 (TID 12). 1339 bytes result sent to driver 17/08/31 14:40:38 INFO TaskSetManager: Finished task 0.0 in stage 12.0 (TID 12) in 36 ms on localhost (executor driver) (1/1) 17/08/31 14:40:38 INFO TaskSchedulerImpl: Removed TaskSet 12.0, whose tasks have all completed, from pool 17/08/31 14:40:38 INFO DAGScheduler: ResultStage 12 (collect at SparkPageRank.scala:100) finished in 0.038 s 17/08/31 14:40:38 INFO DAGScheduler: Job 0 finished: collect at SparkPageRank.scala:100, took 4.551799 s 4 has rank: 0.250559973109555. 5 has rank: 0.35684113180533983. 2 has rank: 0.250559973109555. 3 has rank: 0.250559973109555. 1 has rank: 0.46884347608735455. 17/08/31 14:40:38 INFO SparkUI: Stopped Spark web UI at http://169.254.28.160:4040 17/08/31 14:40:38 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped! 
17/08/31 14:40:38 INFO MemoryStore: MemoryStore cleared 17/08/31 14:40:38 INFO BlockManager: BlockManager stopped 17/08/31 14:40:38 INFO BlockManagerMaster: BlockManagerMaster stopped 17/08/31 14:40:38 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped! 17/08/31 14:40:38 INFO SparkContext: Successfully stopped SparkContext 17/08/31 14:40:38 INFO ShutdownHookManager: Shutdown hook called 17/08/31 14:40:38 INFO ShutdownHookManager: Deleting directory C:\Users\Administrator\AppData\Local\Temp\spark-9dff3014-3f32-45da-a992-acd402b64370