1, Introduction to MMM

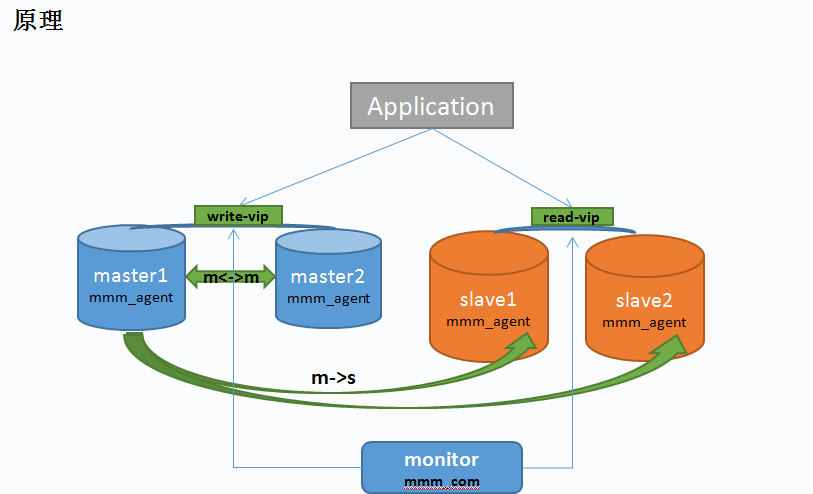

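MMM (Master-Master replication manager for MySQL) is a set of scripts that handles failover and day-to-day management of a MySQL dual-master setup. Written in Perl, it is mainly used to monitor and manage MySQL master-master replication. Although the setup is called dual-master replication, the application writes to only one master at a time; the standby master serves part of the read traffic so that it is already warmed up when a master switchover happens. In short, MMM provides failover, and its bundled tool scripts can also load-balance reads across multiple slaves.

MMM can remove the virtual IP of a server with high replication lag, either automatically or manually, and it can also back up data and synchronize data between two nodes. Because MMM cannot fully guarantee data consistency, it suits workloads that can tolerate weaker consistency but need the highest possible availability. For workloads with strict consistency requirements, MMM is strongly discouraged as a high-availability architecture.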

The overall architecture is shown in the figure above.

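2, Prepare three MySQL servers

2.1 Server preparation

MySQL can be installed on virtual machines. Three MySQL servers were already set up for an earlier MHA experiment, so MMM is deployed directly on those three existing servers.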

| Package name | Description |
|---|---|
| mysql-mmm-agent | MySQL-MMM Agent |
| mysql-mmm-monitor | MySQL-MMM Monitor |
| mysql-mmm-tools | MySQL-MMM Tools |

MySQL master-slave replication setup: http://blog.csdn.net/mchdba/article/details/44734597

2.2 Database configuration

db1 (192.168.52.129): vim /etc/my.cnf

server-id=129

log_slave_updates = 1

auto-increment-increment = 2 # increment by 2 each time

auto-increment-offset = 1 # offset (starting value) of auto-increment columns: 1

db2 (192.168.52.128): vim /etc/my.cnf

server-id=230

log_slave_updates = 1

auto-increment-increment = 2 # increment by 2 each time

auto-increment-offset = 2 # offset (starting value) of auto-increment columns: 2

db3 (192.168.52.131): vim /etc/my.cnf

server-id=331

log_slave_updates = 1
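The two offsets make db1 and db2 hand out interleaved, non-overlapping IDs, so inserts on either master cannot collide on an AUTO_INCREMENT key. As an illustration only, the Python sketch below applies the rule from the MySQL manual (the next value is the smallest `auto_increment_offset + N × auto_increment_increment` greater than the column's current maximum), assuming offset ≤ increment as in this setup; `next_id` and `generate` are hypothetical names.

```python
def next_id(current_max: int, increment: int, offset: int) -> int:
    """Smallest offset + N * increment (N >= 0) that is greater than current_max.

    Follows MySQL's documented rule for auto_increment_increment and
    auto_increment_offset, assuming offset <= increment.
    """
    if current_max < offset:
        return offset
    # Number of whole increments needed to move strictly past current_max.
    n = (current_max - offset) // increment + 1
    return offset + n * increment


def generate(count: int, increment: int, offset: int, start_max: int = 0) -> list:
    """IDs one server would hand out for `count` consecutive inserts."""
    ids, current = [], start_max
    for _ in range(count):
        current = next_id(current, increment, offset)
        ids.append(current)
    return ids


db1_ids = generate(5, increment=2, offset=1)
db2_ids = generate(5, increment=2, offset=2)
print(db1_ids)  # [1, 3, 5, 7, 9]
print(db2_ids)  # [2, 4, 6, 8, 10]
print(set(db1_ids) & set(db2_ids))  # set(): the two sequences never overlap
# After db1 replicates row 10 from db2, its next ID is still odd:
print(next_id(10, increment=2, offset=1))  # 11
```

Because db1 only ever produces odd values and db2 only even ones, rows replicated in either direction never clash with locally generated keys.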

3, Install MMM

3.1 Download MMM

Download page: http://mysql-mmm.org/downloads. The latest version is 2.2.1.

Download it with wget: wget http://mysql-mmm.org/_media/:mmm2:mysql-mmm-2.2.1.tar.gz

3.2 Prepare the Perl and library dependencies

yum install -y perl-*

yum install -y libart_lgpl.x86_64

yum install -y mysql-mmm.noarch # this one failed

yum install -y rrdtool.x86_64

yum install -y rrdtool-perl.x86_64

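Alternatively, you can run the install_mmm.sh script to install these directly.

3.3 Install MMM

The official standard setup puts MMM on a dedicated server; to save resources in this experiment, it is deployed on a slave instead, 192.168.52.131.

mv :mmm2:mysql-mmm-2.2.1.tar.gz mysql-mmm-2.2.1.tar.gz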

tar -xvf mysql-mmm-2.2.1.tar.gz

cd mysql-mmm-2.2.1

make

make install

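After installation, MMM's files are laid out as follows:

| Directory | Description |
|---|---|
| /usr/lib64/perl5/vendor_perl/ | Main Perl modules used by MMM |
| /usr/lib/mysql-mmm | Main scripts used by MMM |
| /usr/sbin | Main MMM commands |
| /etc/init.d/ | Init scripts for the MMM agent and monitor services |
| /etc/mysql-mmm | MMM configuration files; all configuration files live here by default |
| /var/log/mysql-mmm | Default MMM log directory |

This completes the basic MMM installation. Next, edit the configuration files: mmm_common.conf and mmm_agent.conf are the agent-side files, and mmm_mon.conf is the monitor-side file.

Copy mmm_common.conf to db1 and db2 (db3 hosts the monitor and is where MMM was installed, so no copy is needed there).

4, Configure MMM on the MySQL data nodes

4.1 Configure mmm_common.conf on db3

The agent-side configuration must be set up on each of db1, db2 and db3. MMM was installed on db3 here, so edit the file directly on db3:

vim /etc/mysql-mmm/mmm_common.conf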

[root@oraclem1 ~]# cat /etc/mysql-mmm/mmm_common.conf

active_master_role writer

<host default>

cluster_interface eth0

pid_path /var/run/mmm_agentd.pid

bin_path /usr/lib/mysql-mmm/

replication_user repl

replication_password repl_1234

agent_user mmm_agent

agent_password mmm_agent_1234

</host>

<host db1>

ip 192.168.52.129

mode master

peer db2

</host>

<host db2>

ip 192.168.52.128

mode master

peer db1

</host>

<host db3>

ip 192.168.52.131

mode slave

</host>

<role writer>

hosts db1, db2

ips 192.168.52.120

mode exclusive

</role>

<role reader>

hosts db1, db2, db3

ips 192.168.52.129, 192.168.52.128, 192.168.52.131

mode balanced

</role>

[root@oraclem1 ~]#

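Here replication_user is the replication account, agent_user is the account the agent uses, and mode marks a host as a master (active or standby) or a slave. In <role writer>, mode exclusive means the role is exclusive: only one master can hold it at a time; hosts lists the real host names or IPs of the current master and the standby master, and ips is the virtual IP exposed to clients. In <role reader>, hosts lists the real hosts that serve reads, and ips lists the reader virtual IPs.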
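To make the `<host>`/`<role>` structure concrete, here is a minimal Python reader for the format. It is a hypothetical helper, not part of MMM, and it assumes one `key value` pair per line and that `<host default>` supplies defaults which individual `<host ...>` blocks can override, which is how the file above uses it.

```python
import re

# Hypothetical helper, not part of MySQL-MMM: a minimal reader for the
# "key value" lines and <kind name> ... </kind> blocks used in mmm_common.conf.
OPEN = re.compile(r"^<(\w+)(?:\s+(\w+))?>$")
CLOSE = re.compile(r"^</(\w+)>$")


def parse_mmm_conf(text: str) -> dict:
    """Return {"global": {...}, "host": {name: {...}}, "role": {name: {...}}}."""
    conf = {"global": {}}
    current = conf["global"]
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        opened = OPEN.match(line)
        if opened:
            kind, name = opened.group(1), opened.group(2) or ""
            current = conf.setdefault(kind, {}).setdefault(name, {})
        elif CLOSE.match(line):
            current = conf["global"]  # back to top-level settings
        else:
            parts = line.split(None, 1)
            current[parts[0]] = parts[1] if len(parts) > 1 else ""
    return conf


def host_settings(conf: dict, name: str) -> dict:
    """Merge <host default> with <host name>; host-specific keys win."""
    hosts = conf.get("host", {})
    return {**hosts.get("default", {}), **hosts.get(name, {})}


def role_hosts(conf: dict, role: str) -> list:
    """Split the comma-separated hosts list of a <role> block."""
    return [h.strip() for h in conf["role"][role]["hosts"].split(",")]


SAMPLE = """
active_master_role writer
<host default>
    cluster_interface eth0
    replication_user repl
</host>
<host db1>
    ip 192.168.52.129
    mode master
    peer db2
</host>
<role writer>
    hosts db1, db2
    ips 192.168.52.120
    mode exclusive
</role>
"""

conf = parse_mmm_conf(SAMPLE)
print(host_settings(conf, "db1"))
print(role_hosts(conf, "writer"))  # ['db1', 'db2']
```

The merge in `host_settings` is why shared settings such as `cluster_interface` and the account names only need to appear once, in `<host default>`.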

Since db1 and db2 also need the agent configuration, copy mmm_common.conf from db3 to /etc/mysql-mmm on db1 and db2.

4.2 Copy mmm_common.conf to db1 and db2

scp /etc/mysql-mmm/mmm_common.conf data01:/etc/mysql-mmm/mmm_common.conf

scp /etc/mysql-mmm/mmm_common.conf data02:/etc/mysql-mmm/mmm_common.conf

4.3 Configure mmm_agent.conf

Configure the agent on db1, db2 and db3:

db1(192.168.52.129):

[root@data01 mysql-mmm]# cat /etc/mysql-mmm/mmm_agent.conf

include mmm_common.conf

this db1

[root@data01 mysql-mmm]#

db2(192.168.52.128):

[root@data02 mysql-mmm-2.2.1]# vim /etc/mysql-mmm/mmm_agent.conf

include mmm_common.conf

this db2

db3(192.168.52.131):

[root@oraclem1 vendor_perl]# cat /etc/mysql-mmm/mmm_agent.conf

include mmm_common.conf

this db3

[root@oraclem1 vendor_perl]#

4.4 Configure the monitor

[root@oraclem1 vendor_perl]# cat /etc/mysql-mmm/mmm_mon.conf

include mmm_common.conf

<monitor>

ip 127.0.0.1

pid_path /var/run/mmm_mond.pid

bin_path /usr/lib/mysql-mmm/

status_path /var/lib/misc/mmm_mond.status

ping_ips 192.168.52.129, 192.168.52.128, 192.168.52.131

</monitor>

<host default>

monitor_user mmm_monitor

monitor_password mmm_monitor_1234

</host>

debug 0

[root@oraclem1 vendor_perl]#

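Compared with the default file, only ping_ips was changed, to list the IP addresses of every monitored host in the architecture, and the monitoring user was configured in <host default>.

4.5 Create the MySQL users

| User | Description | Privileges |
|---|---|---|
| Monitor user | Used by the MMM monitor to check the status of all MySQL servers | REPLICATION CLIENT |
| Agent user | Used by the MMM agent, mainly to change the master's read_only state | SUPER, REPLICATION CLIENT, PROCESS |
| repl (replication account) | Used for replication | REPLICATION SLAVE |

Run the grants on all three servers (db1, db2, db3). MySQL was already installed for the earlier MHA experiment, so the replication account repl already exists; it was created with:

GRANT REPLICATION SLAVE ON *.* TO 'repl'@'192.168.52.%' IDENTIFIED BY 'repl_1234';

The monitor user: GRANT REPLICATION CLIENT ON *.* TO 'mmm_monitor'@'192.168.52.%' IDENTIFIED BY 'mmm_monitor_1234';

The agent user: GRANT SUPER, REPLICATION CLIENT, PROCESS ON *.* TO 'mmm_agent'@'192.168.52.%' IDENTIFIED BY 'mmm_agent_1234';

For example: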

mysql> GRANT REPLICATION CLIENT ON *.* TO 'mmm_monitor'@'192.168.52.%' IDENTIFIED BY 'mmm_monitor_1234';

Query OK, 0 rows affected (0.26 sec)

mysql> GRANT SUPER, REPLICATION CLIENT, PROCESS ON *.* TO 'mmm_agent'@'192.168.52.%' IDENTIFIED BY 'mmm_agent_1234';

Query OK, 0 rows affected (0.02 sec)

mysql>

If you are building everything from scratch, also create the replication account repl (run all three statements on each of the three servers): GRANT REPLICATION SLAVE ON *.* TO 'repl'@'192.168.52.%' IDENTIFIED BY 'repl_1234'; For example:

mysql> GRANT REPLICATION SLAVE ON *.* TO 'repl'@'192.168.52.%' IDENTIFIED BY 'repl_1234';

Query OK, 0 rows affected (0.01 sec)

mysql>
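The three accounts differ only in name, password and privilege list, so the statements can be generated from the user table above. The Python sketch below is illustrative; `ACCOUNTS`, `HOST_PATTERN`, `quote` and `grant_statements` are hypothetical names, and it emits the MySQL 5.x `GRANT ... IDENTIFIED BY` form used in this article. MySQL 8.0 removed `IDENTIFIED BY` from `GRANT`, so there you would run `CREATE USER` first.

```python
# Hypothetical account spec mirroring the user table; adjust to your environment.
ACCOUNTS = [
    ("mmm_monitor", "mmm_monitor_1234", ["REPLICATION CLIENT"]),
    ("mmm_agent", "mmm_agent_1234", ["SUPER", "REPLICATION CLIENT", "PROCESS"]),
    ("repl", "repl_1234", ["REPLICATION SLAVE"]),
]
HOST_PATTERN = "192.168.52.%"


def quote(value: str) -> str:
    """Quote a MySQL string literal, escaping backslashes and single quotes."""
    return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"


def grant_statements(accounts, host_pattern: str) -> list:
    """Build one MySQL 5.x style GRANT ... IDENTIFIED BY statement per account."""
    stmts = []
    for user, password, privileges in accounts:
        stmts.append(
            f"GRANT {', '.join(privileges)} ON *.* "
            f"TO {quote(user)}@{quote(host_pattern)} "
            f"IDENTIFIED BY {quote(password)};"
        )
    return stmts


for stmt in grant_statements(ACCOUNTS, HOST_PATTERN):
    print(stmt)
```

Keeping the host pattern in one place avoids the kind of mismatch where some users are granted for one subnet and others for another.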

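5, Set up master-master-slave (MMS) replication among db1, db2 and db3

MySQL is already installed on db1, db2 and db3; all that remains is to build the MMS replication topology:

db1(192.168.52.129)-->db2(192.168.52.128)

db1(192.168.52.129)-->db3(192.168.52.131)

db2(192.168.52.128)-->db1(192.168.52.129)

5.1 Set up replication from db1 to db2 and db3

First check the master status on db1 (192.168.52.129):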

mysql> show master status;

+------------------+----------+--------------+--------------------------------------------------+-------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |

+------------------+----------+--------------+--------------------------------------------------+-------------------+

| mysql-bin.000208 | 1248 | user_db | mysql,test,information_schema,performance_schema | |

+------------------+----------+--------------+--------------------------------------------------+-------------------+

1 row in set (0.03 sec)

mysql>
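The File and Position values above are exactly what each replica passes to CHANGE MASTER TO. A small Python sketch of that mapping follows; `MasterStatus` and `change_master_sql` are hypothetical names, and since the helper does no SQL escaping it assumes simple host, user and file names like the ones here.

```python
from dataclasses import dataclass


@dataclass
class MasterStatus:
    """The File/Position pair reported by SHOW MASTER STATUS on the source."""
    file: str
    position: int


def change_master_sql(host: str, user: str, password: str, status: MasterStatus) -> str:
    """Build the CHANGE MASTER TO statement a replica runs to follow `host`.

    Values are interpolated without escaping (assumes simple names).
    """
    if status.position < 4:
        # Binlog events start after the 4-byte magic header, so 4 is the minimum.
        raise ValueError("binlog position must be >= 4")
    return (
        "CHANGE MASTER TO "
        f"MASTER_HOST='{host}', MASTER_USER='{user}', MASTER_PASSWORD='{password}', "
        f"MASTER_LOG_FILE='{status.file}', MASTER_LOG_POS={status.position};"
    )


db1_status = MasterStatus(file="mysql-bin.000208", position=1248)
print(change_master_sql("192.168.52.129", "repl", "repl_1234", db1_status))
```

Reading the coordinates and building the statement in one step avoids copying a stale position by hand if the master keeps taking writes in between.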

Then set up the replication link on db2 and db3:

STOP SLAVE;

RESET SLAVE;

CHANGE MASTER TO MASTER_HOST='192.168.52.129',MASTER_USER='repl',MASTER_PASSWORD='repl_1234',MASTER_LOG_FILE='mysql-bin.000208',MASTER_LOG_POS=1248;

START SLAVE;

SHOW SLAVE STATUS\G

Replication is working once both threads show Yes and Seconds_Behind_Master is 0:

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Seconds_Behind_Master: 0
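The "both Yes and 0" check can be scripted by parsing the `Key: value` lines of `SHOW SLAVE STATUS\G`. The Python sketch below is an illustration, not an MMM tool: `parse_slave_status` and `replication_healthy` are hypothetical names, and `max_lag` defaults to 0 to match the check in the text. A NULL lag counts as unhealthy, since it means replication is not running.

```python
def parse_slave_status(text: str) -> dict:
    """Parse the 'Key: value' lines printed by SHOW SLAVE STATUS\\G."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.strip().partition(":")
        if sep:  # skips the "*** 1. row ***" banner and blank lines
            fields[key.strip()] = value.strip()
    return fields


def replication_healthy(fields: dict, max_lag: int = 0) -> bool:
    """Both replication threads running and lag within max_lag seconds."""
    lag = fields.get("Seconds_Behind_Master", "NULL")
    return (
        fields.get("Slave_IO_Running") == "Yes"
        and fields.get("Slave_SQL_Running") == "Yes"
        and lag.isdigit()  # NULL means replication is not running
        and int(lag) <= max_lag
    )


SAMPLE = """\
*************************** 1. row ***************************
               Slave_IO_State: Waiting for master to send event
                  Master_Host: 192.168.52.129
             Slave_IO_Running: Yes
            Slave_SQL_Running: Yes
        Seconds_Behind_Master: 0
"""

print(replication_healthy(parse_slave_status(SAMPLE)))  # True
```

In practice a small nonzero `max_lag` is often more useful than a strict 0, which can flicker under normal write load.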

5.2 Then set up replication from db2 to db1

Check the master status on db2 (192.168.52.128), then set up the replication link:

mysql> show master status;

+------------------+----------+--------------+--------------------------------------------------+-------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |

+------------------+----------+--------------+--------------------------------------------------+-------------------+

| mysql-bin.000066 | 1477 | user_db | mysql,test,information_schema,performance_schema | |

+------------------+----------+--------------+--------------------------------------------------+-------------------+

1 row in set (0.04 sec)

mysql>

Then, on db1, set up replication from db2:

STOP SLAVE;

CHANGE MASTER TO MASTER_HOST='192.168.52.128',MASTER_USER='repl',MASTER_PASSWORD='repl_1234',MASTER_LOG_FILE='mysql-bin.000066',MASTER_LOG_POS=1477;

START SLAVE;

SHOW SLAVE STATUS\G

Replication is working once both threads show Yes and Seconds_Behind_Master is 0:

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Seconds_Behind_Master: 0

6, Start the agent service

Start the agent service on each of db1, db2 and db3:

[root@data01 mysql-mmm]# /etc/init.d/mysql-mmm-agent start

Daemon bin: '/usr/sbin/mmm_agentd'

Daemon pid: '/var/run/mmm_agentd.pid'

Starting MMM Agent daemon... Ok

[root@data01 mysql-mmm]#

7, Start the monitor service

Start the monitor service on the monitor server, 192.168.52.131:

Register it as a system service: chkconfig --add /etc/rc.d/init.d/mysql-mmm-monitor

Start it: service mysql-mmm-monitor start

[root@oraclem1 ~]# service mysql-mmm-monitor start

Daemon bin: '/usr/sbin/mmm_mond'

Daemon pid: '/var/run/mmm_mond.pid'

Starting MMM Monitor daemon: Ok

[root@oraclem1 ~]#

8, Common administration tasks

Check the status:

[root@oraclem1 mysql-mmm]# mmm_control show

db1(192.168.52.129) master/AWAITING_RECOVERY. Roles:

db2(192.168.52.128) master/AWAITING_RECOVERY. Roles:

db3(192.168.52.131) slave/AWAITING_RECOVERY. Roles:

[root@oraclem1 mysql-mmm]#
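For scripting, each `mmm_control show` line can be parsed into host, IP, mode, state and roles. The regular expressions below are inferred from the output shown in this article, an assumption rather than a documented format; `parse_show` and `writer_host` are hypothetical helpers.

```python
import re

# One host line of `mmm_control show`, e.g.
#   db2(192.168.52.128) master/ONLINE. Roles: reader(192.168.52.129),writer(192.168.52.120)
HOST_LINE = re.compile(r"^\s*(\w+)\(([\d.]+)\)\s*(\w+)/(\w+)\.\s*Roles:\s*(.*)$")
ROLE = re.compile(r"(\w+)\(([\d.]+)\)")


def parse_show(output: str) -> dict:
    """Map host -> {ip, mode, state, roles} from `mmm_control show` output."""
    hosts = {}
    for line in output.splitlines():
        m = HOST_LINE.match(line)
        if not m:
            continue  # shell prompts, blank lines, etc.
        name, ip, mode, state, roles = m.groups()
        hosts[name] = {"ip": ip, "mode": mode, "state": state,
                       "roles": ROLE.findall(roles)}
    return hosts


def writer_host(hosts: dict):
    """Name of the host currently holding the writer role, or None."""
    for name, info in hosts.items():
        if any(role == "writer" for role, _ip in info["roles"]):
            return name
    return None


SAMPLE = """\
db1(192.168.52.129) master/HARD_OFFLINE. Roles:
db2(192.168.52.128) master/ONLINE. Roles: reader(192.168.52.129),writer(192.168.52.120)
db3(192.168.52.131) slave/ONLINE. Roles: reader(192.168.52.128),reader(192.168.52.131)
"""

hosts = parse_show(SAMPLE)
print(hosts["db1"]["state"])  # HARD_OFFLINE
print(writer_host(hosts))     # db2
```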

8.1 Set the hosts online:

[root@oraclem1 mysql-mmm]# mmm_control show

db1(192.168.52.129) master/AWAITING_RECOVERY. Roles:

db2(192.168.52.128) master/AWAITING_RECOVERY. Roles:

db3(192.168.52.131) slave/AWAITING_RECOVERY. Roles:

[root@oraclem1 mysql-mmm]# mmm_control set_online db1

OK: State of 'db1' changed to ONLINE. Now you can wait some time and check its new roles!

[root@oraclem1 mysql-mmm]# mmm_control set_online db2

OK: State of 'db2' changed to ONLINE. Now you can wait some time and check its new roles!

[root@oraclem1 mysql-mmm]# mmm_control set_online db3

OK: State of 'db3' changed to ONLINE. Now you can wait some time and check its new roles!

[root@oraclem1 mysql-mmm]#

8.2 Check all DBs

[root@oraclem1 mysql-mmm]# mmm_control checks

db2 ping [last change:2015/04/14 00:10:57] OK

db2 mysql [last change:2015/04/14 00:10:57] OK

db2 rep_threads [last change:2015/04/14 00:10:57] OK

db2 rep_backlog [last change:2015/04/14 00:10:57] OK: Backlog is null

db3 ping [last change:2015/04/14 00:10:57] OK

db3 mysql [last change:2015/04/14 00:10:57] OK

db3 rep_threads [last change:2015/04/14 00:10:57] OK

db3 rep_backlog [last change:2015/04/14 00:10:57] OK: Backlog is null

db1 ping [last change:2015/04/14 00:10:57] OK

db1 mysql [last change:2015/04/14 00:10:57] OK

db1 rep_threads [last change:2015/04/14 00:10:57] OK

db1 rep_backlog [last change:2015/04/14 00:10:57] OK: Backlog is null

[root@oraclem1 mysql-mmm]#

8.3 View the monitor log

[root@oraclem1 mysql-mmm]# tail -f /var/log/mysql-mmm/mmm_mond.log

2015/04/14 00:55:29 FATAL Admin changed state of 'db1' from AWAITING_RECOVERY to ONLINE

2015/04/14 00:55:29 INFO Orphaned role 'writer(192.168.52.120)' has been assigned to 'db1'

2015/04/14 00:55:29 INFO Orphaned role 'reader(192.168.52.131)' has been assigned to 'db1'

2015/04/14 00:55:29 INFO Orphaned role 'reader(192.168.52.129)' has been assigned to 'db1'

2015/04/14 00:55:29 INFO Orphaned role 'reader(192.168.52.128)' has been assigned to 'db1'

2015/04/14 00:58:15 FATAL Admin changed state of 'db2' from AWAITING_RECOVERY to ONLINE

2015/04/14 00:58:15 INFO Moving role 'reader(192.168.52.131)' from host 'db1' to host 'db2'

2015/04/14 00:58:15 INFO Moving role 'reader(192.168.52.129)' from host 'db1' to host 'db2'

2015/04/14 00:58:18 FATAL Admin changed state of 'db3' from AWAITING_RECOVERY to ONLINE

2015/04/14 00:58:18 INFO Moving role 'reader(192.168.52.131)' from host 'db2' to host 'db3'
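The log shows the balanced reader roles spreading out as hosts come online. The Python model below is a simplification assumed from this log, not MMM's actual Perl code: it repeatedly moves one reader role from the host with the most roles (ties broken by the number of reader roles) to the host with the fewest, until the counts differ by at most one. Applied to the states above, it reproduces the three moves recorded in the log; `rebalance` is a hypothetical name.

```python
def rebalance(assignment: dict) -> list:
    """Move reader roles until per-host role counts differ by at most one.

    `assignment` maps host -> list of role labels such as
    "writer(192.168.52.120)" or "reader(192.168.52.131)" and is modified in
    place. Only reader (balanced) roles move; the exclusive writer never does.
    Returns the moves as (role, from_host, to_host) tuples.
    """
    def readers(host):
        return [r for r in assignment[host] if r.startswith("reader")]

    moves = []
    while True:
        # Busiest host: most roles, ties broken by most movable reader roles.
        most = max(assignment, key=lambda h: (len(assignment[h]), len(readers(h))))
        fewest = min(assignment, key=lambda h: len(assignment[h]))
        if len(assignment[most]) - len(assignment[fewest]) <= 1 or not readers(most):
            return moves
        role = readers(most)[0]
        assignment[most].remove(role)
        assignment[fewest].append(role)
        moves.append((role, most, fewest))


# db1 came online first and picked up every orphaned role.
roles = {"db1": ["writer(192.168.52.120)", "reader(192.168.52.131)",
                 "reader(192.168.52.129)", "reader(192.168.52.128)"]}
roles["db2"] = []  # db2 set ONLINE
print(rebalance(roles))
roles["db3"] = []  # db3 set ONLINE
print(rebalance(roles))
```

Each move strictly reduces the imbalance, so the loop always terminates.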

8.4 mmm_control commands

[root@oraclem1 ~]# mmm_control --help

Invalid command '--help'

Valid commands are:

help - show this message

ping - ping monitor

show - show status

checks [<host>|all [<check>|all]] - show checks status

set_online <host> - set host <host> online

set_offline <host> - set host <host> offline

mode - print current mode.

set_active - switch into active mode.

set_manual - switch into manual mode.

set_passive - switch into passive mode.

move_role [--force] <role> <host> - move exclusive role <role> to host <host>

(Only use --force if you know what you are doing!)

set_ip <ip> <host> - set role with ip <ip> to host <host>

[root@oraclem1 ~]#

9, Test failover

9.1 How MMM failover works

How a slave agent switches to the new master after receiving the set_active_master message sent by the new master:

Source file: Agent\Helpers\Actions.pm

set_active_master($new_master)

Try to catch up with the old master as far as possible and change the master to the new host.

(Syncs to the master log if the old master is reachable. Otherwise syncs to the relay log.)

(1) Get the binlog file and position to sync to

First read Master_Log_File and Read_Master_Log_Pos from SHOW SLAVE STATUS on the new master:

wait_log=Master_Log_File, wait_pos=Read_Master_Log_Pos

If the old master can be reached, read File and Position from SHOW MASTER STATUS on the old master:

wait_log=File, wait_pos=Position # overrides the new master's slave info; the old master's position takes precedence

(2) The slave catches up to the old master's position

SELECT MASTER_POS_WAIT('wait_log', wait_pos);

#Stop replication

STOP SLAVE;

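(3) Point the slave at the new master

#At this point the new master is already accepting writes.

#(In the main thread, the new master receives the activation message first; once its switchover, including the VIP change, is complete, _distribute_roles propagates the master change to the slaves.)

#Running FLUSH LOGS on the new master after its switchover completes makes management easier.

#Read the new master's binlog coordinates.

SHOW MASTER STATUS;

#Read the replication account and password from /etc/mysql-mmm/mmm_common.conf:

replication_user, replication_password

#Set the new replication coordinates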

CHANGE MASTER TO MASTER_HOST='$new_peer_host',MASTER_PORT=$new_peer_port

,MASTER_USER='$repl_user', MASTER_PASSWORD='$repl_password'

,MASTER_LOG_FILE='$master_log', MASTER_LOG_POS=$master_pos;

#Start replication

START SLAVE;
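The three steps above can be restated in Python to make the order of operations explicit. This is a sketch, not MMM's Perl implementation: the dictionaries stand in for the result rows of SHOW SLAVE STATUS and SHOW MASTER STATUS, and `BinlogPos`, `catch_up_target` and `switch_statements` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BinlogPos:
    file: str
    pos: int


def catch_up_target(new_master_slave_status: dict,
                    old_master_status: Optional[dict]) -> BinlogPos:
    """Step (1): the old-master coordinates the slave must reach before switching.

    Start from what the new master had read from the old master
    (Master_Log_File / Read_Master_Log_Pos). If the old master is still
    reachable (old_master_status is not None), its own File / Position wins.
    """
    target = BinlogPos(new_master_slave_status["Master_Log_File"],
                       int(new_master_slave_status["Read_Master_Log_Pos"]))
    if old_master_status is not None:
        target = BinlogPos(old_master_status["File"],
                           int(old_master_status["Position"]))
    return target


def switch_statements(target: BinlogPos, new_host: str, new_port: int,
                      user: str, password: str, new_master: BinlogPos) -> list:
    """Steps (2) and (3): the SQL the slave runs, in order."""
    return [
        # Block until the SQL thread has applied everything up to `target`.
        f"SELECT MASTER_POS_WAIT('{target.file}', {target.pos});",
        "STOP SLAVE;",
        # new_master holds the new master's SHOW MASTER STATUS File/Position.
        (f"CHANGE MASTER TO MASTER_HOST='{new_host}', MASTER_PORT={new_port}, "
         f"MASTER_USER='{user}', MASTER_PASSWORD='{password}', "
         f"MASTER_LOG_FILE='{new_master.file}', MASTER_LOG_POS={new_master.pos};"),
        "START SLAVE;",
    ]


# Hypothetical example: old master unreachable, so the position the new
# master had read from it is used as the catch-up target.
target = catch_up_target(
    {"Master_Log_File": "mysql-bin.000208", "Read_Master_Log_Pos": "1248"}, None)
for sql in switch_statements(target, "192.168.52.128", 3306, "repl", "repl_1234",
                             BinlogPos("mysql-bin.000066", 1477)):
    print(sql)
```

Waiting first and only then stopping and re-pointing the slave is what keeps it from skipping events the old master had already sent.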

9.2 Stop db1 and check whether the writer role moves to db2 automatically

(1) On db1, run service mysql stop:

[root@data01 ~]# service mysql stop;

Shutting down MySQL..... SUCCESS!

[root@data01 ~]#

(2) Check the status with mmm_control on the monitor:

[root@oraclem1 ~]# mmm_control show

db1(192.168.52.129) master/HARD_OFFLINE. Roles:

db2(192.168.52.128) master/ONLINE. Roles: reader(192.168.52.129),writer(192.168.52.120)

db3(192.168.52.131) slave/ONLINE. Roles: reader(192.168.52.128),reader(192.168.52.131)

[root@oraclem1 ~]#

The writer role has moved to db2.

(3) The monitor log shows the following:

[root@oraclem1 mysql-mmm]# tail -f /var/log/mysql-mmm/mmm_mond.log

......

2015/04/14 01:34:11 WARN Check 'rep_backlog' on 'db1' is in unknown state! Message: UNKNOWN: Connect error (host = 192.168.52.129:3306, user = mmm_monitor)! Lost connection to MySQL server at 'reading initial communication packet', system error: 111

2015/04/14 01:34:11 WARN Check 'rep_threads' on 'db1' is in unknown state! Message: UNKNOWN: Connect error (host = 192.168.52.129:3306, user = mmm_monitor)! Lost connection to MySQL server at 'reading initial communication packet', system error: 111

2015/04/14 01:34:21 ERROR Check 'mysql' on 'db1' has failed for 10 seconds! Message: ERROR: Connect error (host = 192.168.52.129:3306, user = mmm_monitor)! Lost connection to MySQL server at 'reading initial communication packet', system error: 111

2015/04/14 01:34:23 FATAL State of host 'db1' changed from ONLINE to HARD_OFFLINE (ping: OK, mysql: not OK)

2015/04/14 01:34:23 INFO Removing all roles from host 'db1':

2015/04/14 01:34:23 INFO Removed role 'reader(192.168.52.128)' from host 'db1'

2015/04/14 01:34:23 INFO Removed role 'writer(192.168.52.120)' from host 'db1'

2015/04/14 01:34:23 INFO Orphaned role 'writer(192.168.52.120)' has been assigned to 'db2'

2015/04/14 01:34:23 INFO Orphaned role 'reader(192.168.52.128)' has been assigned to 'db3'
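The log shows db1 going from ONLINE to HARD_OFFLINE once the mysql check had failed for 10 seconds while ping still succeeded, after which all of its roles were removed. The Python function below is a simplified model of MMM's host states, assumed from the state names MMM uses and from this log: a failed ping or mysql check means HARD_OFFLINE, a failed rep_threads or rep_backlog check means REPLICATION_FAIL or REPLICATION_DELAY, and a recovered host waits in AWAITING_RECOVERY until an administrator runs set_online. The 10-second timeout is left out; each check is treated as already passed or failed. `next_state` is a hypothetical name.

```python
def next_state(current: str, checks: dict) -> str:
    """Simplified model of an MMM host state transition (an assumption).

    `checks` maps 'ping', 'mysql', 'rep_threads' and 'rep_backlog' to True
    (OK) or False (failed for longer than its timeout).
    """
    if current == "ADMIN_OFFLINE":
        return current  # only an administrator can bring the host back
    if not checks["ping"] or not checks["mysql"]:
        return "HARD_OFFLINE"  # e.g. "ping: OK, mysql: not OK" in the log
    if current == "HARD_OFFLINE":
        return "AWAITING_RECOVERY"  # reachable again; waits for set_online
    if current == "AWAITING_RECOVERY":
        return current
    if not checks["rep_threads"]:
        return "REPLICATION_FAIL"
    if not checks["rep_backlog"]:
        return "REPLICATION_DELAY"
    return "ONLINE"


ok = {"ping": True, "mysql": True, "rep_threads": True, "rep_backlog": True}
print(next_state("ONLINE", {**ok, "mysql": False}))  # HARD_OFFLINE
print(next_state("HARD_OFFLINE", ok))                # AWAITING_RECOVERY
```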

9.3 Stop db2 and check that the writer moves back to db1 automatically

(1) Start db1 and set it online

[root@data01 ~]# service mysql start

Starting MySQL.................. SUCCESS!

[root@data01 ~]#

Set db1 online on the monitor:

[root@oraclem1 ~]# mmm_control set_online db1;

OK: State of 'db1' changed to ONLINE. Now you can wait some time and check its new roles!

[root@oraclem1 ~]#

Check the status on the monitor:

[root@oraclem1 ~]# mmm_control show

db1(192.168.52.129) master/ONLINE. Roles: reader(192.168.52.131)

db2(192.168.52.128) master/ONLINE. Roles: reader(192.168.52.129),writer(192.168.52.120)

db3(192.168.52.131) slave/ONLINE. Roles: reader(192.168.52.128)

[root@oraclem1 ~]#

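db1 has to be started and set ONLINE first because, in this configuration, the master role can only move between db1 and db2. For an automatic failover to succeed, the target master must be ONLINE; otherwise the failover fails because there is no online host to take over.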
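The rule in the paragraph above can be written as a small selection function. This Python sketch is an illustration of that rule, not MMM code; `failover_writer` is a hypothetical name, and only hosts listed in the writer role (db1 and db2 here) are candidates.

```python
def failover_writer(states: dict, writer_hosts: list, current_writer: str):
    """Pick the host that should hold the exclusive writer role.

    Keeps the current writer while it is ONLINE; otherwise returns the first
    other writer-eligible host that is ONLINE, or None if there is none.
    """
    if states.get(current_writer) == "ONLINE":
        return current_writer
    for host in writer_hosts:
        if host != current_writer and states.get(host) == "ONLINE":
            return host
    return None  # in this model, the writer role stays unassigned


writer_hosts = ["db1", "db2"]  # <role writer> hosts from mmm_common.conf
# db1 was set ONLINE before db2 stopped, so the failover succeeds:
print(failover_writer({"db1": "ONLINE", "db2": "HARD_OFFLINE", "db3": "ONLINE"},
                      writer_hosts, "db2"))  # db1
# Had db1 still been AWAITING_RECOVERY, there would be no target:
print(failover_writer({"db1": "AWAITING_RECOVERY", "db2": "HARD_OFFLINE",
                       "db3": "ONLINE"}, writer_hosts, "db2"))  # None
```

db3 is ONLINE in both cases but never qualifies, because it is not listed in the writer role.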

(2) Stop db2

[root@data02 ~]# service mysql stop

Shutting down MySQL.. SUCCESS!

[root@data02 ~]#

(3) On the monitor, check whether the master has moved from db2 to db1 automatically:

[root@oraclem1 ~]# mmm_control show

db1(192.168.52.129) master/ONLINE. Roles: reader(192.168.52.131),writer(192.168.52.120)

db2(192.168.52.128) master/HARD_OFFLINE. Roles:

db3(192.168.52.131) slave/ONLINE. Roles: reader(192.168.52.129), reader(192.168.52.128)

[root@oraclem1 ~]#

The writer role has moved to db1 automatically and db2 is HARD_OFFLINE: the automatic failover succeeded.

(4) Check the monitor log:

[root@oraclem1 mysql-mmm]# tail -f /var/log/mysql-mmm/mmm_mond.log

......

2015/04/14 01:56:13 ERROR Check 'mysql' on 'db2' has failed for 10 seconds! Message: ERROR: Connect error (host = 192.168.52.128:3306, user = mmm_monitor)! Lost connection to MySQL server at 'reading initial communication packet', system error: 111

2015/04/14 01:56:14 FATAL State of host 'db2' changed from ONLINE to HARD_OFFLINE (ping: OK, mysql: not OK)

2015/04/14 01:56:14 INFO Removing all roles from host 'db2':

2015/04/14 01:56:14 INFO Removed role 'reader(192.168.52.129)' from host 'db2'

2015/04/14 01:56:14 INFO Removed role 'writer(192.168.52.120)' from host 'db2'

2015/04/14 01:56:14 INFO Orphaned role 'writer(192.168.52.120)' has been assigned to 'db1'

2015/04/14 01:56:14 INFO Orphaned role 'reader(192.168.52.129)' has been assigned to 'db3'

9.4 Verify the VIP

First find which host holds the writer VIP; here it is db2:

[root@oraclem1 ~]# mmm_control show

db1(192.168.52.129) master/ONLINE. Roles: reader(192.168.52.230)

db2(192.168.52.130) master/ONLINE. Roles: reader(192.168.52.231),writer(192.168.52.120)

db3(192.168.52.131) slave/ONLINE. Roles: reader(192.168.52.229)

[root@oraclem1 ~]#

Then check the IP bindings on db2:

[root@data02 mysql-mmm-2.2.1]# ip add

1: lo: mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:a7:26:fc brd ff:ff:ff:ff:ff:ff

inet 192.168.52.130/24 brd 192.168.52.255 scope global eth0

inet 192.168.52.231/32 scope global eth0

inet 192.168.52.120/32 scope global eth0

inet6 fe80::20c:29ff:fea7:26fc/64 scope link

valid_lft forever preferred_lft forever

3: pan0: mtu 1500 qdisc noop state DOWN

link/ether 46:27:c6:ef:2b:95 brd ff:ff:ff:ff:ff:ff

[root@data02 mysql-mmm-2.2.1]#
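Checking for the VIP can be automated by parsing `ip addr` output. This is a hypothetical Python helper; it assumes the iproute2 layout shown above (a numbered interface header followed by indented `inet` lines) and that MMM adds each VIP to the interface as a /32 address, as seen in the output above.

```python
import re

IFACE = re.compile(r"^\d+:\s+([^:\s]+):")        # "2: eth0: ..." header line
INET = re.compile(r"^\s*inet\s+([\d.]+)/(\d+)")  # IPv4 address line (skips inet6)


def addresses_by_interface(ip_addr_output: str) -> dict:
    """Map interface name -> list of (IPv4 address, prefix length)."""
    result, current = {}, None
    for line in ip_addr_output.splitlines():
        header = IFACE.match(line)
        if header:
            current = header.group(1)
            result[current] = []
            continue
        inet = INET.match(line)
        if inet and current is not None:
            result[current].append((inet.group(1), int(inet.group(2))))
    return result


def has_vip(ip_addr_output: str, vip: str, interface: str = "eth0") -> bool:
    """True if `vip` is bound to `interface` with a /32 prefix."""
    return (vip, 32) in addresses_by_interface(ip_addr_output).get(interface, [])


SAMPLE = """\
1: lo: mtu 16436 qdisc noqueue state UNKNOWN
    inet 127.0.0.1/8 scope host lo
2: eth0: mtu 1500 qdisc pfifo_fast state UP qlen 1000
    inet 192.168.52.130/24 brd 192.168.52.255 scope global eth0
    inet 192.168.52.231/32 scope global eth0
    inet 192.168.52.120/32 scope global eth0
    inet6 fe80::20c:29ff:fea7:26fc/64 scope link
"""

print(has_vip(SAMPLE, "192.168.52.120"))  # True: the writer VIP is on eth0
print(has_vip(SAMPLE, "192.168.52.130"))  # False: host address, not a /32 VIP
```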

Pinging the writer VIP succeeds:

[root@oraclem1 ~]# ping 192.168.52.120

PING 192.168.52.120 (192.168.52.120) 56(84) bytes of data.

64 bytes from 192.168.52.120: icmp_seq=1 ttl=64 time=2.37 ms

64 bytes from 192.168.52.120: icmp_seq=2 ttl=64 time=0.288 ms

64 bytes from 192.168.52.120: icmp_seq=3 ttl=64 time=0.380 ms

^C

--- 192.168.52.120 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2717ms

rtt min/avg/max/mdev = 0.288/1.015/2.377/0.963 ms

[root@oraclem1 ~]#

9.5 MMM extensions

10, Summary of errors encountered

10.1 Error 1:

[root@data01 mysql-mmm-2.2.1]# /etc/init.d/mysql-mmm-agent start

Daemon bin: '/usr/sbin/mmm_agentd'

Daemon pid: '/var/run/mmm_agentd.pid'

Starting MMM Agent daemon... Can't locate Proc/Daemon.pm in @INC (@INC contains: /usr/local/lib64/perl5 /usr/local/share/perl5 /usr/lib64/perl5/vendor_perl /usr/share/perl5/vendor_perl /usr/lib64/perl5 /usr/share/perl5 .) at /usr/sbin/mmm_agentd line 7.

BEGIN failed--compilation aborted at /usr/sbin/mmm_agentd line 7.

failed

[root@data01 mysql-mmm-2.2.1]#

Fix: install two Perl modules with cpan:

cpan Proc::Daemon

cpan Log::Log4perl
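The missing module name can be read straight from the error: `Can't locate Proc/Daemon.pm` means `Proc::Daemon`. The Python helper below (hypothetical, not part of MMM) turns such messages into cpan commands. Perl aborts at the first missing module, so after each install a fresh error may name the next one and you may need to repeat this.

```python
import re

# Perl's message for a missing module, e.g. "Can't locate Proc/Daemon.pm in @INC"
MISSING = re.compile(r"Can't locate\s+(\S+?)\.pm in @INC")


def missing_perl_modules(log_text: str) -> list:
    """Module names (Foo::Bar) from "Can't locate Foo/Bar.pm" errors, deduplicated."""
    modules = []
    for path in MISSING.findall(log_text):
        module = path.replace("/", "::")
        if module not in modules:
            modules.append(module)
    return modules


def cpan_commands(log_text: str) -> list:
    """One `cpan Module::Name` command per missing module."""
    return [f"cpan {module}" for module in missing_perl_modules(log_text)]


log = ("Starting MMM Agent daemon... Can't locate Proc/Daemon.pm in @INC "
       "(@INC contains: /usr/lib64/perl5 .) at /usr/sbin/mmm_agentd line 7.")
print(cpan_commands(log))  # ['cpan Proc::Daemon']
```

The same helper covers error 3 later on, where the message names `Net/ARP.pm`.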

10.2 Error 2:

[root@oraclem1 vendor_perl]# /etc/init.d/mysql-mmm-agent start

Daemon bin: '/usr/sbin/mmm_agentd'

Daemon pid: '/var/run/mmm_agentd.pid'

Starting MMM Agent daemon... Can't locate Proc/Daemon.pm in @INC (@INC contains: /usr/local/lib64/perl5 /usr/local/share/perl5 /usr/lib64/perl5/vendor_perl /usr/share/perl5/vendor_perl /usr/lib64/perl5 /usr/share/perl5 .) at /usr/sbin/mmm_agentd line 7.

BEGIN failed--compilation aborted at /usr/sbin/mmm_agentd line 7.

failed

[root@oraclem1 vendor_perl]#

Installing Proc::Daemon with cpan then fails one of the module's tests:

# Failed test 'the 'pid2.file' has right permissions via file_umask'

# at /root/.cpan/build/Proc-Daemon-0.19-zoMArm/t/02_testmodule.t line 152.

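/root/.cpan/build/Proc-Daemon-0.19-zoMArm/t/02_testmodule.t did not return a true value at t/03_taintmode.t line 20.

# Looks like you failed 1 test of 19.

# Looks like your test exited with 2 just after 19.

t/03_taintmode.t ... Dubious, test returned 2 (wstat 512, 0x200)

Failed 1/19 subtests

Fix: force the installation with -f. Note that cpan expects the flag before the module name (cpan -f -i Proc::Daemon); written after the name, as in the transcript below, -f is treated as another module name and nothing is forced: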

[root@oraclem1 ~]# cpan Proc::Daemon -f

CPAN: Storable loaded ok (v2.20)

Going to read '/root/.cpan/Metadata'

Database was generated on Mon, 13 Apr 2015 11:29:02 GMT

Proc::Daemon is up to date (0.19).

Warning: Cannot install -f, don't know what it is.

Try the command

i/-f/

to find objects with matching identifiers.

CPAN: Time::HiRes loaded ok (v1.9726)

[root@oraclem1 ~]#

10.3 Error 3:

[root@oraclem1 mysql-mmm]# ping 192.168.52.120

PING 192.168.52.120 (192.168.52.120) 56(84) bytes of data.

From 192.168.52.131 icmp_seq=2 Destination Host Unreachable

From 192.168.52.131 icmp_seq=3 Destination Host Unreachable

From 192.168.52.131 icmp_seq=4 Destination Host Unreachable

From 192.168.52.131 icmp_seq=6 Destination Host Unreachable

From 192.168.52.131 icmp_seq=7 Destination Host Unreachable

From 192.168.52.131 icmp_seq=8 Destination Host Unreachable

From 192.168.52.131 icmp_seq=10 Destination Host Unreachable

From 192.168.52.131 icmp_seq=11 Destination Host Unreachable

From 192.168.52.131 icmp_seq=12 Destination Host Unreachable

From 192.168.52.131 icmp_seq=14 Destination Host Unreachable

From 192.168.52.131 icmp_seq=15 Destination Host Unreachable

From 192.168.52.131 icmp_seq=16 Destination Host Unreachable

From 192.168.52.131 icmp_seq=17 Destination Host Unreachable

From 192.168.52.131 icmp_seq=18 Destination Host Unreachable

From 192.168.52.131 icmp_seq=19 Destination Host Unreachable

^C

--- 192.168.52.120 ping statistics ---

20 packets transmitted, 0 received, +15 errors, 100% packet loss, time 19729ms

pipe 4

[root@oraclem1 mysql-mmm]#

The agent log on db1 shows the following errors:

2015/04/20 02:29:41 FATAL Couldn't configure IP '192.168.52.131' on interface 'eth0': undef

2015/04/20 02:29:42 FATAL Couldn't allow writes: undef

2015/04/20 02:29:44 FATAL Couldn't configure IP '192.168.52.131' on interface 'eth0': undef

2015/04/20 02:29:45 FATAL Couldn't allow writes: undef

Then check the network interfaces:

[root@oraclem1 ~]# ip add

1: lo: mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:0a:79:e6 brd ff:ff:ff:ff:ff:ff

inet 192.168.52.131/24 brd 192.168.52.255 scope global eth0

inet6 fe80::20c:29ff:fe0a:79e6/64 scope link

valid_lft forever preferred_lft forever

3: pan0: mtu 1500 qdisc noop state DOWN

link/ether 5e:eb:c1:a4:f8:f8 brd ff:ff:ff:ff:ff:ff

[root@oraclem1 ~]#

Then run configure_ip by hand to test configuring an IP on eth0:

[root@oraclem1 ~]# /usr/lib/mysql-mmm/agent/configure_ip eth0 192.168.52.120

Can't locate Net/ARP.pm in @INC (@INC contains: /usr/local/lib64/perl5 /usr/local/share/perl5 /usr/lib64/perl5/vendor_perl /usr/share/perl5/vendor_perl /usr/lib64/perl5 /usr/share/perl5 .) at /usr/share/perl5/vendor_perl/MMM/Agent/Helpers/Network.pm line 11.

BEGIN failed--compilation aborted at /usr/share/perl5/vendor_perl/MMM/Agent/Helpers/Network.pm line 11.

Compilation failed in require at /usr/share/perl5/vendor_perl/MMM/Agent/Helpers/Actions.pm line 5.

BEGIN failed--compilation aborted at /usr/share/perl5/vendor_perl/MMM/Agent/Helpers/Actions.pm line 5.

Compilation failed in require at /usr/lib/mysql-mmm/agent/configure_ip line 6.

BEGIN failed--compilation aborted at /usr/lib/mysql-mmm/agent/configure_ip line 6.

[root@oraclem1 ~]#

After installing the Net::ARP module (cpan Net::ARP), everything works normally.

Reference: http://blog.csdn.net/mchdba/article/details/8633840

Reference: http://mysql-mmm.org/downloads

Reference: http://www.open-open.com/lib/view/open1395887766467.html

----------------------------------------------------------------------------------------------------------------

Reposting is permitted, but the original source must be credited with a link; otherwise legal liability will be pursued.

Original blog: http://blog.itpub.net/26230597/viewspace-1591814/

Original author: 黄杉 (mchdba)

----------------------------------------------------------------------------------------------------------------