zfs可以认为是raid和文件系统的结合体.

解决了动态条带和数据与校验位的一致性问题, 所以具有极高的可靠性和性能.

因为zfs需要接管硬盘, 所以在有RAID卡的环境中, 需要设置为 JBOD模式.

同时ZFS利用SLOG(Separate Intent Log)设备存储ZIL(ZFS Intent Log)来提升写性能.

(使用slog后, 即使断电, 也不会导致数据不一致, 只是会导致ZFS中新的数据没有FLUSH到磁盘, 呈现老的状态, 类似PostgreSQL 的async commit.)

(It's important to identify that all three devices listed above can maintain data persistence during a power outage. The SLOG and the ZIL are critical in getting your data to spinning platter. If a power outage occurs, and you have a volatile SLOG, the worst thing that will happen is the new data is not flushed, and you are left with old data. However, it's important to note, that in the case of a power outage, you won't have corrupted data, just lost data. Your data will still be consistent on disk.)

建议将SLOG放在ssd或者nvram设备中, 提升写性能.

甚至可以拿slog来做增量文件系统恢复, 有点和PostgreSQL的xlog功能类似呢.

因为slog的重要性, 一般加slog设备使用mirror.

例如 :

另外需要注意: SLOG如果使用SSD来做的话, 因为SSD的使用寿命是和擦写次数相关的, 所以我们可以根据SLOG的写入速度来评估SLOG能用多久.

# zpool create tank mirror /tmp/file1 /tmp/file2 mirror /tmp/file3 /tmp/file4 log mirror sde sdf cache sdg sdh

# zpool status tank

pool: tank

state: ONLINE

scan: none requested

config:

NAME STATE READ WRITE CKSUM

tank ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

/tmp/file1 ONLINE 0 0 0

/tmp/file2 ONLINE 0 0 0

mirror-1 ONLINE 0 0 0

/tmp/file3 ONLINE 0 0 0

/tmp/file4 ONLINE 0 0 0

logs

mirror-2 ONLINE 0 0 0

sde ONLINE 0 0 0

sdf ONLINE 0 0 0

cache

sdg ONLINE 0 0 0

sdh ONLINE 0 0 0

errors: No known data errors另外需要注意: SLOG如果使用SSD来做的话, 因为SSD的使用寿命是和擦写次数相关的, 所以我们可以根据SLOG的写入速度来评估SLOG能用多久.

SLOG Life Expectancy

Because you will likely be using a consumer-grade SSD for your SLOG in your GNU/Linux server, we need to make some mention of the wear and tear of SSDs for write-intensive scenarios. Of course, this will largely vary based on manufacturer, but we can setup some generalities.

First and foremost, ZFS has advanced wear-leveling algorithms that will evenly wear each chip on the SSD. There is no need for TRIM support, which in all reality, is really just a garbage collection support more than anything. The wear-leveling of ZFS in inherent due to the copy-on-write nature of the filesystem.

Second, various drives will be implemented with different nanometer processes. The smaller the nanometer process, the shorter the life of your SSD. As an example, the Intel 320 is a 25 nanometer MLC 300 GB SSD, and is rated at roughly 5000 P/E cycles. This means you can write to your entire SSD 5000 times if using wear leveling algorithms. This produces 1500000 GB of total written data, or 1500 TB. My ZIL maintains about 3 MB of data per second. As a result, I can maintain about 95 TB of written data per year. This gives me a life of about 15 years for this Intel SSD.

However, the Intel 335 is a 20 nanometer MLC 240 GB SSD, and is rated at roughly 3000 P/E cycles. With wear leveling, this means you can write you entire SSD 3000 times, which produces 720 TB of total written data. This is only 7 years for my 3 MBps ZIL, which is less than 1/2 the life expectancy the Intel 320. Point is, you need to keep an eye on these things when planning out your pool.

Now, if you are using a battery-backed DRAM drive, then wear leveling is not a problem, and the DIMMs will likely last the duration of your server. Same might be said for 10k+ SAS or FC drives.

ZIL的空间一般不需要太大, 作者用了4GB的分区作为SLOG设备.

ZFS另一个强大之处是CACHE算法, 结合了LRU,LFU, 保留最近使用的和使用最频繁的块在缓存中.

同时ZFS还支持二级缓存, 可以使用IOPS能力强大的块设备作为二级缓存设备.

创建zpool时, 尽量使用设备ID (/dev/disk/by-id/*) , 不要使用别名如sda, 因为别名可能重启后会发生变化.

以CentOS 6.4 x64为例, 介绍一下zfs的安装.

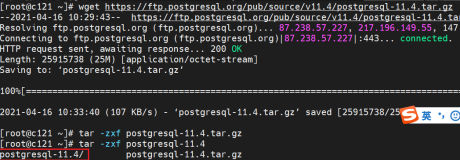

下载

安装spl

安装zfs

测试所在系统的 Solaris的移植性, 使用splat前需要加载splat模块, 否则会报错.

[root@db-172-16-3-150 soft_bak]# wget http://archive.zfsonlinux.org/downloads/zfsonlinux/spl/spl-0.6.2.tar.gz

[root@db-172-16-3-150 soft_bak]# wget http://archive.zfsonlinux.org/downloads/zfsonlinux/zfs/zfs-0.6.2.tar.gz安装spl

[root@db-172-16-3-150 spl-0.6.2]# tar -zxvf spl-0.6.2.tar.gz

[root@db-172-16-3-150 soft_bak]# cd spl-0.6.2

[root@db-172-16-3-150 spl-0.6.2]# ./autogen.sh

[root@db-172-16-3-150 spl-0.6.2]# ./configure --prefix=/opt/spl0.6.2

[root@db-172-16-3-150 spl-0.6.2]# make && make install安装zfs

[root@db-172-16-3-150 soft_bak]# cd /opt/soft_bak/

[root@db-172-16-3-150 soft_bak]# tar -zxvf zfs-0.6.2.tar.gz

[root@db-172-16-3-150 soft_bak]# cd zfs-0.6.2

[root@db-172-16-3-150 zfs-0.6.2]# yum install -y libuuid-devel

[root@db-172-16-3-150 zfs-0.6.2]# ./configure --prefix=/opt/zfs0.6.2

[root@db-172-16-3-150 zfs-0.6.2]# make && make install测试所在系统的 Solaris的移植性, 使用splat前需要加载splat模块, 否则会报错.

如果遇到错误, 使用-vv输出详细信息, 找到错误原因.

接下来创建几个测试文件, 分别作为磁盘, 二级缓存, LOG.

splat - Solaris Porting LAyer Tests

[root@db-172-16-3-150 soft_bak]# cd /opt/spl0.6.2/sbin/

[root@db-172-16-3-150 sbin]# ./splat -a

Unable to open /dev/splatctl: 2

Is the splat module loaded?

[root@db-172-16-3-150 spl0.6.2]# modprobe splat

[root@db-172-16-3-150 spl0.6.2]# /opt/spl0.6.2/sbin/splat -a

------------------------------ Running SPLAT Tests ------------------------------

kmem:kmem_alloc Pass

kmem:kmem_zalloc Pass

kmem:vmem_alloc Pass

kmem:vmem_zalloc Pass

kmem:slab_small Pass

kmem:slab_large Pass

kmem:slab_align Pass

kmem:slab_reap Pass

kmem:slab_age Pass

kmem:slab_lock Pass

kmem:vmem_size Pass

kmem:slab_reclaim Fail Timer expired

taskq:single Pass

taskq:multiple Pass

taskq:system Pass

taskq:wait Pass

taskq:order Pass

taskq:front Pass

taskq:recurse Pass

taskq:contention Pass

taskq:delay Pass

taskq:cancel Pass

krng:freq Pass

mutex:tryenter Pass

mutex:race Pass

mutex:owned Pass

mutex:owner Pass

condvar:signal1 Pass

condvar:broadcast1 Pass

condvar:signal2 Pass

condvar:broadcast2 Pass

condvar:timeout Pass

thread:create Pass

thread:exit Pass

thread:tsd Pass

rwlock:N-rd/1-wr Pass

rwlock:0-rd/N-wr Pass

rwlock:held Pass

rwlock:tryenter Pass

rwlock:rw_downgrade Pass

rwlock:rw_tryupgrade Pass

time:time1 Pass

time:time2 Pass

vnode:vn_open Pass

vnode:vn_openat Pass

vnode:vn_rdwr Pass

vnode:vn_rename Pass

vnode:vn_getattr Pass

vnode:vn_sync Pass

kobj:open Pass

kobj:size/read Pass

atomic:64-bit Pass

list:create/destroy Pass

list:ins/rm head Pass

list:ins/rm tail Pass

list:insert_after Pass

list:insert_before Pass

list:remove Pass

list:active Pass

generic:ddi_strtoul Pass

generic:ddi_strtol Pass

generic:ddi_strtoull Pass

generic:ddi_strtoll Pass

generic:udivdi3 Pass

generic:divdi3 Pass

cred:cred Pass

cred:kcred Pass

cred:groupmember Pass

zlib:compress/uncompress Pass

linux:shrink_dcache Pass

linux:shrink_icache Pass

linux:shrinker Pass接下来创建几个测试文件, 分别作为磁盘, 二级缓存, LOG.

其中二级缓存使用SSD硬盘分区, LOG使用SSD上的两个文件.

创建zpool, 测试性能(cache必需使用块设备, 不能直接用文件).

[root@db-172-16-3-150 ~]# cd /ssd4

[root@db-172-16-3-150 ssd4]# dd if=/dev/zero of=./zfs.log1 bs=1k count=1024000

[root@db-172-16-3-150 ssd4]# dd if=/dev/zero of=./zfs.log2 bs=1k count=1024000

[root@db-172-16-3-150 ~]# cd /opt

[root@db-172-16-3-150 opt]# dd if=/dev/zero of=./zfs.disk1 bs=1k count=1024000

[root@db-172-16-3-150 opt]# dd if=/dev/zero of=./zfs.disk2 bs=1k count=1024000

[root@db-172-16-3-150 opt]# dd if=/dev/zero of=./zfs.disk3 bs=1k count=1024000

[root@db-172-16-3-150 opt]# dd if=/dev/zero of=./zfs.disk4 bs=1k count=1024000创建zpool, 测试性能(cache必需使用块设备, 不能直接用文件).

[root@db-172-16-3-150 ssd4]# ll /dev/disk/by-id/

total 0

lrwxrwxrwx 1 root root 9 Apr 23 08:59 ata-OCZ-REVODRIVE3_OCZ-886PWVEQ351TAPNH -> ../../sdb

lrwxrwxrwx 1 root root 10 Apr 23 08:59 ata-OCZ-REVODRIVE3_OCZ-886PWVEQ351TAPNH-part1 -> ../../sdb1

lrwxrwxrwx 1 root root 9 Apr 23 08:59 ata-OCZ-REVODRIVE3_OCZ-Z2134R0TLQBNE659 -> ../../sda

lrwxrwxrwx 1 root root 10 Apr 23 08:59 ata-OCZ-REVODRIVE3_OCZ-Z2134R0TLQBNE659-part1 -> ../../sda1

lrwxrwxrwx 1 root root 9 Apr 23 08:59 scsi-360026b902fe2ce001261fa4506592f80 -> ../../sdc

lrwxrwxrwx 1 root root 10 Apr 23 08:59 scsi-360026b902fe2ce001261fa4506592f80-part1 -> ../../sdc1

lrwxrwxrwx 1 root root 10 Apr 23 08:59 scsi-360026b902fe2ce001261fa4506592f80-part2 -> ../../sdc2

lrwxrwxrwx 1 root root 10 Apr 23 08:59 scsi-360026b902fe2ce001261fa4506592f80-part3 -> ../../sdc3

lrwxrwxrwx 1 root root 9 Apr 23 08:59 scsi-360026b902fe2ce0018993f2f0c5734b3 -> ../../sdd

lrwxrwxrwx 1 root root 10 Apr 23 08:59 scsi-360026b902fe2ce0018993f2f0c5734b3-part1 -> ../../sdd1

lrwxrwxrwx 1 root root 9 Apr 23 08:59 scsi-SATA_OCZ-REVODRIVE3_OCZ-886PWVEQ351TAPNH -> ../../sdb

lrwxrwxrwx 1 root root 10 Apr 23 08:59 scsi-SATA_OCZ-REVODRIVE3_OCZ-886PWVEQ351TAPNH-part1 -> ../../sdb1

lrwxrwxrwx 1 root root 9 Apr 23 08:59 scsi-SATA_OCZ-REVODRIVE3_OCZ-Z2134R0TLQBNE659 -> ../../sda

lrwxrwxrwx 1 root root 10 Apr 23 08:59 scsi-SATA_OCZ-REVODRIVE3_OCZ-Z2134R0TLQBNE659-part1 -> ../../sda1

lrwxrwxrwx 1 root root 9 Apr 23 08:59 wwn-0x5e83a97e5dbf17f7 -> ../../sdb

lrwxrwxrwx 1 root root 10 Apr 23 08:59 wwn-0x5e83a97e5dbf17f7-part1 -> ../../sdb1

lrwxrwxrwx 1 root root 9 Apr 23 08:59 wwn-0x5e83a97e827c316e -> ../../sda

lrwxrwxrwx 1 root root 10 Apr 23 08:59 wwn-0x5e83a97e827c316e-part1 -> ../../sda1

lrwxrwxrwx 1 root root 9 Apr 23 08:59 wwn-0x60026b902fe2ce001261fa4506592f80 -> ../../sdc

lrwxrwxrwx 1 root root 10 Apr 23 08:59 wwn-0x60026b902fe2ce001261fa4506592f80-part1 -> ../../sdc1

lrwxrwxrwx 1 root root 10 Apr 23 08:59 wwn-0x60026b902fe2ce001261fa4506592f80-part2 -> ../../sdc2

lrwxrwxrwx 1 root root 10 Apr 23 08:59 wwn-0x60026b902fe2ce001261fa4506592f80-part3 -> ../../sdc3

lrwxrwxrwx 1 root root 9 Apr 23 08:59 wwn-0x60026b902fe2ce0018993f2f0c5734b3 -> ../../sdd

lrwxrwxrwx 1 root root 10 Apr 23 08:59 wwn-0x60026b902fe2ce0018993f2f0c5734b3-part1 -> ../../sdd1

[root@db-172-16-3-150 ssd4]# /opt/zfs0.6.2/sbin/zpool create zptest /opt/zfs.disk1 /opt/zfs.disk2 /opt/zfs.disk3 /opt/zfs.disk4 log mirror /ssd4/zfs.log1 /ssd4/zfs.log2 cache /dev/disk/by-id/scsi-SATA_OCZ-REVODRIVE3_OCZ-Z2134R0TLQBNE659-part1

[root@db-172-16-3-150 ssd4]# /opt/zfs0.6.2/sbin/zpool status zptest

pool: zptest

state: ONLINE

scan: none requested

config:

NAME STATE READ WRITE CKSUM

zptest ONLINE 0 0 0

/opt/zfs.disk1 ONLINE 0 0 0

/opt/zfs.disk2 ONLINE 0 0 0

/opt/zfs.disk3 ONLINE 0 0 0

/opt/zfs.disk4 ONLINE 0 0 0

logs

mirror-4 ONLINE 0 0 0

/ssd4/zfs.log1 ONLINE 0 0 0

/ssd4/zfs.log2 ONLINE 0 0 0

cache

sda1 ONLINE 0 0 0

errors: No known data errors

[root@db-172-16-3-150 ssd4]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/sdc1 29G 9.3G 19G 34% /

tmpfs 48G 0 48G 0% /dev/shm

/dev/sdc3 98G 34G 59G 37% /opt

/dev/sdd1 183G 33G 142G 19% /ssd1

/dev/sdb1 221G 42G 168G 20% /ssd4

zptest 3.9G 0 3.9G 0% /zptest

重启后需要使用zfs来mount.

# /opt/zfs0.6.2/sbin/zfs mount zptest

使用pg_test_fsync测试fsync接口的性能 :

测试过程中, 使用iostat发现, 大量的写实际上集中在zptest的LOG设备上. 符合zfs的log的原理.

使用postgresql测试读写, 并使用同步提交, 减少shared buffer更能体现ZFS的性能.

接下来是直接对ZFS4个文件所在机械硬盘做测试, 测试EXT4的性能.

[root@db-172-16-3-150 ssd4]# cd /zptest

[root@db-172-16-3-150 zptest]# mkdir pg93

[root@db-172-16-3-150 zptest]# chown pg93:pg93 pg93

[root@db-172-16-3-150 zptest]# su - pg93

cpg93@db-172-16-3-150-> cd /zptest/pg93/

pg93@db-172-16-3-150-> pg_test_fsync

5 seconds per test

O_DIRECT supported on this platform for open_datasync and open_sync.

Compare file sync methods using one 64kB write:

(in wal_sync_method preference order, except fdatasync

is Linux's default)

open_datasync n/a*

fdatasync 778.117 ops/sec 1285 usecs/op

fsync 756.724 ops/sec 1321 usecs/op

fsync_writethrough n/a

open_sync n/a*

* This file system and its mount options do not support direct

I/O, e.g. ext4 in journaled mode.

Compare file sync methods using two 64kB writes:

(in wal_sync_method preference order, except fdatasync

is Linux's default)

open_datasync n/a*

fdatasync 96.185 ops/sec 10397 usecs/op

fsync 369.918 ops/sec 2703 usecs/op

fsync_writethrough n/a

open_sync n/a*

* This file system and its mount options do not support direct

I/O, e.g. ext4 in journaled mode.

Compare open_sync with different write sizes:

(This is designed to compare the cost of writing 16kB

in different write open_sync sizes.)

1 * 16kB open_sync write n/a*

2 * 8kB open_sync writes n/a*

4 * 4kB open_sync writes n/a*

8 * 2kB open_sync writes n/a*

16 * 1kB open_sync writes n/a*

Test if fsync on non-write file descriptor is honored:

(If the times are similar, fsync() can sync data written

on a different descriptor.)

write, fsync, close 746.761 ops/sec 1339 usecs/op

write, close, fsync 217.356 ops/sec 4601 usecs/op

Non-Sync'ed 64kB writes:

write 37511.610 ops/sec 27 usecs/op测试过程中, 使用iostat发现, 大量的写实际上集中在zptest的LOG设备上. 符合zfs的log的原理.

avg-cpu: %user %nice %system %iowait %steal %idle

0.00 0.00 4.05 7.59 0.00 88.35

Device: rrqm/s wrqm/s r/s w/s rsec/s wsec/s avgrq-sz avgqu-sz await svctm %util

sdc 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

sdd 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

sdb 0.00 26688.00 0.00 2502.00 0.00 226848.00 90.67 1.29 0.52 0.31 78.10

sda 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

使用postgresql测试读写, 并使用同步提交, 减少shared buffer更能体现ZFS的性能.

pg93@db-172-16-3-150-> initdb -E UTF8 --locale=C -D /zptest/pg93/pg_root -U postgres -W

pg93@db-172-16-3-150-> cd /zptest/pg93/pg_root

vi postgresql.conf

listen_addresses = '0.0.0.0' # what IP address(es) to listen on;

port = 1922 # (change requires restart)

max_connections = 100 # (change requires restart)

unix_socket_directories = '.' # comma-separated list of directories

tcp_keepalives_idle = 60 # TCP_KEEPIDLE, in seconds;

tcp_keepalives_interval = 10 # TCP_KEEPINTVL, in seconds;

tcp_keepalives_count = 10 # TCP_KEEPCNT;

shared_buffers = 32MB # min 128kB

maintenance_work_mem = 512MB # min 1MB

vacuum_cost_delay = 10 # 0-100 milliseconds

vacuum_cost_limit = 10000 # 1-10000 credits

bgwriter_delay = 10ms # 10-10000ms between rounds

checkpoint_segments = 3 # in logfile segments, min 1, 16MB each

effective_cache_size = 96000MB

log_destination = 'csvlog' # Valid values are combinations of

logging_collector = on # Enable capturing of stderr and csvlog

log_truncate_on_rotation = off # If on, an existing log file with the

log_checkpoints = on

log_connections = on

log_disconnections = on

log_error_verbosity = verbose # terse, default, or verbose messages

log_timezone = 'PRC'

autovacuum = on # Enable autovacuum subprocess? 'on'

log_autovacuum_min_duration = 0 # -1 disables, 0 logs all actions and

datestyle = 'iso, mdy'

timezone = 'PRC'

lc_messages = 'C' # locale for system error message

lc_monetary = 'C' # locale for monetary formatting

lc_numeric = 'C' # locale for number formatting

lc_time = 'C' # locale for time formatting

default_text_search_config = 'pg_catalog.english'

pg93@db-172-16-3-150-> pg_ctl start -D /zptest/pg93/pg_root

pg93@db-172-16-3-150-> psql -h /zptest/pg93/pg_root -p 1922 -U postgres postgres

psql (9.3.3)

Type "help" for help.

postgres=# create table test(id int primary key, info text, crt_time timestamp);

CREATE TABLE

postgres=# create or replace function f_test(v_id int) returns void as $$

declare

begin

update test set info=md5(random()::text),crt_time=now() where id=v_id;

if not found then

insert into test values (v_id, md5(random()::text), now());

end if;

exception when SQLSTATE '23505' then

return;

end;

$$ language plpgsql strict;

CREATE FUNCTION

postgres=# select f_test(1);

f_test

--------

(1 row)

postgres=# select * from test;

id | info | crt_time

----+----------------------------------+----------------------------

1 | 80fe8163f44df605621a557624740681 | 2014-05-16 22:01:38.677335

(1 row)

postgres=# select f_test(1);

f_test

--------

(1 row)

postgres=# select * from test;

id | info | crt_time

----+----------------------------------+----------------------------

1 | 5b17eb0ba878e15f40f213716c05a3c5 | 2014-05-16 22:01:42.130284

(1 row)

pg93@db-172-16-3-150-> vi test.sql

\setrandom id 1 500000

select f_test(:id);

pg93@db-172-16-3-150-> pgbench -M prepared -n -r -f ./test.sql -h /zptest/pg93/pg_root -p 1922 -U postgres -c 16 -j 8 -T 30 postgres

transaction type: Custom query

scaling factor: 1

query mode: prepared

number of clients: 16

number of threads: 8

duration: 30 s

number of transactions actually processed: 121879

tps = 4060.366837 (including connections establishing)

tps = 4062.166607 (excluding connections establishing)

statement latencies in milliseconds:

0.004635 \setrandom id 1 500000

3.930118 select f_test(:id);接下来是直接对ZFS4个文件所在机械硬盘做测试, 测试EXT4的性能.

/dev/sdc3 on /opt type ext4 (rw,noatime,nodiratime)

pg93@db-172-16-3-150-> pg_ctl stop -m fast -D /zptest/pg93/pg_root

waiting for server to shut down.... done

server stopped

pg93@db-172-16-3-150-> exit

logout

[root@db-172-16-3-150 ~]# cp -r /zptest/pg93/pg_root /opt/pg_root

[root@db-172-16-3-150 ~]# chown -R pg93:pg93 /opt/pg_root

[root@db-172-16-3-150 ~]# su - pg93

pg93@db-172-16-3-150-> pg_ctl start -D /opt/pg_root

server starting

pg93@db-172-16-3-150-> LOG: 00000: redirecting log output to logging collector process

HINT: Future log output will appear in directory "pg_log".

LOCATION: SysLogger_Start, syslogger.c:649

pg93@db-172-16-3-150-> psql -h 127.0.0.1 -p 1922 -U postgres postgres

psql (9.3.3)

Type "help" for help.

postgres=# truncate test;

TRUNCATE TABLE

postgres=# \q

pg93@db-172-16-3-150-> cd

pg93@db-172-16-3-150-> pgbench -M prepared -n -r -f ./test.sql -h /opt/pg_root -p 1922 -U postgres -c 16 -j 8 -T 30 postgres

transaction type: Custom query

scaling factor: 1

query mode: prepared

number of clients: 16

number of threads: 8

duration: 30 s

number of transactions actually processed: 39062

tps = 1301.492448 (including connections establishing)

tps = 1302.085710 (excluding connections establishing)

statement latencies in milliseconds:

0.004463 \setrandom id 1 500000

12.278317 select f_test(:id);

显然, ZFS在使用了LOG和二级缓存的情况下性能完胜.

最后, 直接使用SSD, 对比一下性能, 因为本例LOG是使用两个文件来代替块设备的, 而不是文件系统, 所以和直接在SSD上的EXT4有性能差距 :

接下来把EXT4放到文件中对比.

[参考] 4. http://en.wikipedia.org/wiki/Non-RAID_drive_architectures

pg93@db-172-16-3-150-> pg_ctl stop -m fast -D /opt/pg_root

waiting for server to shut down.... done

server stopped

pg93@db-172-16-3-150-> exit

logout

You have new mail in /var/spool/mail/root

[root@db-172-16-3-150 ~]# cp -r /zptest/pg93/pg_root /ssd4/

database/ pg92/ pg931/ pgxl/ test.pl zfs.log2

lost+found/ pg93/ pg94/ ssd3/ zfs.log1

[root@db-172-16-3-150 ~]# cp -r /zptest/pg93/pg_root /ssd4/pg_root

[root@db-172-16-3-150 ~]# chown -R pg93:pg93 /ssd4/pg_root

[root@db-172-16-3-150 ~]# su - pg93

pg93@db-172-16-3-150-> pg_ctl start -D /ssd4/pg_root

server starting

pg93@db-172-16-3-150-> LOG: 00000: redirecting log output to logging collector process

HINT: Future log output will appear in directory "pg_log".

LOCATION: SysLogger_Start, syslogger.c:649

pg93@db-172-16-3-150-> psql -h 127.0.0.1 -p 1922 -U postgres postgres

psql (9.3.3)

Type "help" for help.

postgres=# truncate test;

TRUNCATE TABLE

postgres=# \q

pg93@db-172-16-3-150-> pgbench -M prepared -n -r -f ./test.sql -h /ssd4/pg_root -p 1922 -U postgres -c 16 -j 8 -T 30 postgres

transaction type: Custom query

scaling factor: 1

query mode: prepared

number of clients: 16

number of threads: 8

duration: 30 s

number of transactions actually processed: 266565

tps = 8876.805761 (including connections establishing)

tps = 8881.884999 (excluding connections establishing)

statement latencies in milliseconds:

0.003877 \setrandom id 1 500000

1.793971 select f_test(:id);接下来把EXT4放到文件中对比.

[root@db-172-16-3-150 ~]# cd /ssd4

[root@db-172-16-3-150 ssd4]# dd if=/dev/zero of=./test.img bs=1024k count=1024

[root@db-172-16-3-150 ssd4]# mkfs.ext4 ./test.img

[root@db-172-16-3-150 ssd4]# mount -o loop ./test.img /mnt

[root@db-172-16-3-150 ssd4]# su - pg93

pg93@db-172-16-3-150-> pg_ctl stop -m fast -D /ssd4/pg_root

waiting for server to shut down.... done

server stopped

pg93@db-172-16-3-150-> exit

logout

[root@db-172-16-3-150 ssd4]# cp -r /ssd4/pg_root /mnt/

[root@db-172-16-3-150 ssd4]# chown -R pg93:pg93 /mnt/pg_root

[root@db-172-16-3-150 ssd4]# su - pg93

pg93@db-172-16-3-150-> pg_ctl start -D /mnt/pg_root

server starting

pg93@db-172-16-3-150-> LOG: 00000: redirecting log output to logging collector process

HINT: Future log output will appear in directory "pg_log".

LOCATION: SysLogger_Start, syslogger.c:649

pg93@db-172-16-3-150-> psql -h 127.0.0.1 -p 1922 -U postgres postgres

psql (9.3.3)

Type "help" for help.

postgres=# truncate test;

TRUNCATE TABLE

postgres=# \q

pg93@db-172-16-3-150-> pgbench -M prepared -n -r -f ./test.sql -h /mnt/pg_root -p 1922 -U postgres -c 16 -j 8 -T 30 postgres

transaction type: Custom query

scaling factor: 1

query mode: prepared

number of clients: 16

number of threads: 8

duration: 30 s

number of transactions actually processed: 192445

tps = 6410.026454 (including connections establishing)

tps = 6412.254485 (excluding connections establishing)

statement latencies in milliseconds:

0.004118 \setrandom id 1 500000

2.486914 select f_test(:id);[参考] 4. http://en.wikipedia.org/wiki/Non-RAID_drive_architectures