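This is because ZFS applies thresholds to its use of the ZIL: for example, when the writes in a single commit exceed the threshold, they go straight to the data vdevs.

Likewise, if logbias=throughput is set, writes also go straight to the vdevs.

Reference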

Note that this issue seems to impact all ZFS implementations, not just ZFS On Linux.

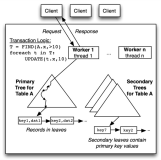

ZFS uses a complicated process when it comes to deciding whether a write should be logged in indirect mode (written once by the DMU, the log records store a pointer) or in immediate mode (written in the log record, rewritten later by the DMU). Basically, it goes like this:

Write in indirect mode to the data vdevs if:

- logbias=throughput, or
- There is no slog and the write is larger than zfs_immediate_write_sz.

Write in immediate mode to the data vdevs if logbias=latency and:

- There is no slog and the write is smaller than zfs_immediate_write_sz, or
- There is a slog and the total commit size is larger than zil_slog_limit.

Write in immediate mode to the slog vdevs if logbias=latency, there is a slog, and the total commit size is smaller than zil_slog_limit.

The decision to use indirect or immediate mode is implemented in zfs_log_write() and zvol_log_write(). The decision to use the slog or the normal vdevs is implemented in the USE_SLOG() macro used by zil_lwb_write_start().
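The rules above can be condensed into a small model. The following is only a sketch in Python of the decision process as described in this text, not the actual ZFS code: the function name, the `LogDecision` type, and the default values for the two tunables are assumptions made for illustration, and the text does not say what happens when a size is exactly equal to a limit.

```python
from dataclasses import dataclass

# Illustrative defaults only (assumed); check the module parameters on your system.
ZFS_IMMEDIATE_WRITE_SZ = 32 * 1024   # bytes
ZIL_SLOG_LIMIT = 1024 * 1024         # bytes


@dataclass(frozen=True)
class LogDecision:
    mode: str    # "indirect" or "immediate"
    target: str  # "data" (normal vdevs) or "slog"


def decide_log_write(logbias, has_slog, write_size, commit_size,
                     immediate_write_sz=ZFS_IMMEDIATE_WRITE_SZ,
                     slog_limit=ZIL_SLOG_LIMIT):
    """Model of the decision process described in the text (hypothetical helper)."""
    if logbias not in ("latency", "throughput"):
        raise ValueError("logbias must be 'latency' or 'throughput'")

    # logbias=throughput: always indirect, on the data vdevs.
    if logbias == "throughput":
        return LogDecision("indirect", "data")

    # From here on, logbias=latency.
    if not has_slog:
        # Large writes go indirect, small ones immediate.
        # Assumption: a write exactly at the limit is treated as "small".
        if write_size > immediate_write_sz:
            return LogDecision("indirect", "data")
        return LogDecision("immediate", "data")

    # There is a slog: the mode is always immediate; only the target varies.
    if commit_size > slog_limit:
        return LogDecision("immediate", "data")  # the painful edge case
    return LogDecision("immediate", "slog")
```

Calling `decide_log_write("latency", True, 2 * 1024**3, 2 * 1024**3)` in this model returns immediate mode on the data vdevs, which is the combination the rest of the report is about.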

The issue is, this decision process makes sense except for one particularly painful edge case, when these conditions are all true:

- logbias=latency, and
- There is a slog, and
- There are large writes in the ZIL to be committed (e.g. > 100 MB).

In this situation, the optimal choice would be to write to the normal pool in indirect mode, which should give us the minimum latency considering this is a large sequential write. Indeed, for very large writes, you don't want to use immediate mode because it means writing the data twice. Even if you write the log records to the slog, this will be slower on most pool configurations (e.g. lots of spindles and one SSD slog), because the aggregate sequential write throughput of all the spindles is usually greater than the SSD's.

Instead, the algorithm makes the worst decision possible: it writes the data in immediate mode to the main data disks. This means that all the (large) data will be committed as ZIL log records on the data disks first, and immediately afterwards it will be written again by the DMU. As a result, the overall throughput is halved, and if this is a sustained load, the ZIL commit latency will be doubled compared to indirect mode.
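The halving claim can be checked with simple arithmetic. This is a rough sketch under stated assumptions: it ignores metadata and log record overhead, the helper names are made up for illustration, and the pool throughput you pass in is whatever your own pool sustains.

```python
def data_vdev_bytes(payload_bytes, mode):
    """Approximate bytes hitting the data vdevs for one large sync write."""
    if mode == "indirect":
        return payload_bytes       # data written once by the DMU
    if mode == "immediate":
        return 2 * payload_bytes   # once as ZIL log records, once by the DMU
    raise ValueError("mode must be 'indirect' or 'immediate'")


def sustained_write_seconds(payload_bytes, mode, pool_bytes_per_sec):
    """Time to push those bytes at a given aggregate sequential throughput."""
    return data_vdev_bytes(payload_bytes, mode) / pool_bytes_per_sec
```

For any payload and any throughput, the immediate-mode figure in this model is exactly twice the indirect-mode figure, which is where the "throughput halved, latency doubled" statement comes from.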

It is shockingly easy to reproduce this issue. In pseudo-code:

open(file)

write(file, lots of data) // e.g. 2 GB

fsync(file)

Watch the zil_stats kstat page when that runs.
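The pseudo-code above could be turned into a small script along these lines. This is a minimal sketch in Python, not the reporter's own test program: the function name and chunk size are arbitrary choices, and random data is used on the assumption that compression might be enabled on the dataset and would otherwise shrink the write.

```python
import os
import time


def write_then_fsync(path, total_bytes, chunk_bytes=1 << 20):
    """Write total_bytes to path, then fsync; return (write_s, fsync_s)."""
    chunk = os.urandom(chunk_bytes)  # incompressible payload, reused per chunk
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
    try:
        start = time.monotonic()
        remaining = total_bytes
        while remaining > 0:
            # os.write may write fewer bytes than requested, so use its return value.
            written = os.write(fd, chunk[:min(remaining, chunk_bytes)])
            remaining -= written
        after_write = time.monotonic()
        os.fsync(fd)  # this is the call whose duration matters here
        after_fsync = time.monotonic()
    finally:
        os.close(fd)
    return after_write - start, after_fsync - after_write
```

A call such as `write_then_fsync("/tank/test/bigfile", 2 * 1024**3)` (the path is hypothetical) would reproduce the 2 GB case; the second value returned is the fsync() time to compare between pools with and without a slog.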

If you don't have a slog in your pool, then the fsync() call will complete in roughly the time it takes to write 2 GB sequentially to your main disks. This is optimal.

If you have a slog in your pool, then the fsync() call will generate twice as much write activity, and will write up to 4 GB to your main disks. Ironically, the slog won't be used at all when that happens.
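One way to confirm that the slog is bypassed is to snapshot the ZIL kstat before and after the test and compare the byte counters. The sketch below assumes the ZFS on Linux kstat text format (lines of `name type data`) and assumes counters named `zil_itx_metaslab_normal_bytes` and `zil_itx_metaslab_slog_bytes`; the exact file location (commonly `/proc/spl/kstat/zfs/zil`) and counter names can differ between versions, so treat them as assumptions to verify on your system.

```python
def parse_zil_kstat(text):
    """Parse kstat text into {name: int}, keeping only zil_* counters."""
    stats = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) == 3 and fields[0].startswith("zil_"):
            try:
                stats[fields[0]] = int(fields[2])
            except ValueError:
                continue  # skip anything that is not a plain integer counter
    return stats


def zil_bytes_delta(before, after):
    """Bytes logged to normal vdevs vs. slog between two snapshots."""
    keys = ("zil_itx_metaslab_normal_bytes", "zil_itx_metaslab_slog_bytes")
    # Counter names are assumed; missing counters are treated as 0.
    return {key: after.get(key, 0) - before.get(key, 0) for key in keys}
```

If the behaviour described above occurs, the delta for the normal-vdev counter should be roughly the size of the test write while the slog counter barely moves.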

The solution would be to modify the algorithm in zfs_log_write() and zvol_log_write() so that, in the conditions mentioned above, it switches to indirect writes when the commit size reaches a certain threshold (e.g. 32 MB).
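The proposed change could look roughly like this when expressed as a model. This is a sketch in Python of the idea only, not a patch: the function name and parameters are hypothetical, the 32 MB default is the example figure from the text, and the default for zfs_immediate_write_sz is an assumed value.

```python
INDIRECT_COMMIT_THRESHOLD = 32 * 1024 * 1024  # the "e.g. 32 MB" from the text


def proposed_write_mode(logbias, has_slog, write_size, commit_size,
                        immediate_write_sz=32 * 1024,
                        indirect_threshold=INDIRECT_COMMIT_THRESHOLD):
    """Return "indirect" or "immediate" under the proposed rule (hypothetical)."""
    if logbias == "throughput":
        return "indirect"
    if not has_slog and write_size > immediate_write_sz:
        return "indirect"
    # Proposed addition: with a slog and logbias=latency, very large commits
    # switch to indirect mode instead of being logged twice on the data vdevs.
    if has_slog and commit_size >= indirect_threshold:
        return "indirect"
    return "immediate"
```

With this rule, the 2 GB fsync() case from the reproduction would be written once in indirect mode, while small synchronous writes would still go to the slog in immediate mode.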

I would gladly write a patch, but I won't have the time to do it, so I'm just leaving the result of my research here in case anyone's interested. If anyone wants to write the patch, it should be very simple to implement it.