Cluster status:

[root@prod02 ~]# crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.CRS.dg

ONLINE ONLINE prod01

ONLINE ONLINE prod02

ora.LISTENER.lsnr

ONLINE ONLINE prod01

ONLINE ONLINE prod02

ora.OCR.dg

ONLINE ONLINE prod01

ONLINE ONLINE prod02

ora.asm

ONLINE ONLINE prod01 Started

ONLINE ONLINE prod02 Started

ora.gsd

OFFLINE OFFLINE prod01

OFFLINE OFFLINE prod02

ora.net1.network

ONLINE ONLINE prod01

ONLINE ONLINE prod02

ora.ons

ONLINE ONLINE prod01

ONLINE ONLINE prod02

ora.registry.acfs

ONLINE ONLINE prod01

ONLINE ONLINE prod02

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE prod02

ora.cvu

1 ONLINE ONLINE prod02

ora.oc4j

1 ONLINE ONLINE prod02

ora.ora.db

1 ONLINE ONLINE prod01 Open

2 ONLINE ONLINE prod02 Open

ora.prod01.vip

1 ONLINE ONLINE prod01

ora.prod02.vip

1 ONLINE ONLINE prod02

ora.scan1.vip

1 ONLINE ONLINE prod02

ASM status

SQL> @c

DG_NAME DG_STATE TYPE DSK_NO DSK_NAME PATH MOUNT_S FAILGROUP STATE

--------------- ---------- ------ ---------- ---------- -------------------------------------------------- ------- -------------------- --------

CRS MOUNTED NORMAL 2 CRS_0002 /dev/oracleasm/disks/DISK03 CACHED ZCDISK NORMAL

CRS MOUNTED NORMAL 5 CRS_0005 /dev/oracleasm/disks/VOTEDB02 CACHED CRS_0001 NORMAL

CRS MOUNTED NORMAL 9 CRS_0009 /dev/oracleasm/disks/VOTEDB01 CACHED CRS_0000 NORMAL

OCR MOUNTED NORMAL 0 OCR_0000 /dev/oracleasm/disks/NODE01DATA01 CACHED OCR_0000 NORMAL

OCR MOUNTED NORMAL 1 OCR_0001 /dev/oracleasm/disks/NODE02DATA01 CACHED OCR_0001 NORMAL

DISK_NUMBER NAME PATH HEADER_STATUS OS_MB TOTAL_MB FREE_MB REPAIR_TIMER V FAILGRO

----------- ---------- -------------------------------------------------- -------------------- ---------- ---------- ---------- ------------ - -------

0 OCR_0000 /dev/oracleasm/disks/NODE01DATA01 MEMBER 5120 5120 3185 0 N REGULAR

1 OCR_0001 /dev/oracleasm/disks/NODE02DATA01 MEMBER 5120 5120 3185 0 N REGULAR

9 CRS_0009 /dev/oracleasm/disks/VOTEDB01 MEMBER 2048 2048 1584 0 Y REGULAR

5 CRS_0005 /dev/oracleasm/disks/VOTEDB02 MEMBER 2048 2048 1648 0 Y REGULAR

2 CRS_0002 /dev/oracleasm/disks/DISK03 MEMBER 5115 5115 5081 0 Y QUORUM

GROUP_NUMBER NAME COMPATIBILITY DATABASE_COMPATIBILITY V

------------ ---------- ------------------------------------------------------------ ------------------------------------------------------------ -

1 OCR 11.2.0.0.0 11.2.0.0.0 N

2 CRS 11.2.0.0.0 11.2.0.0.0 Y

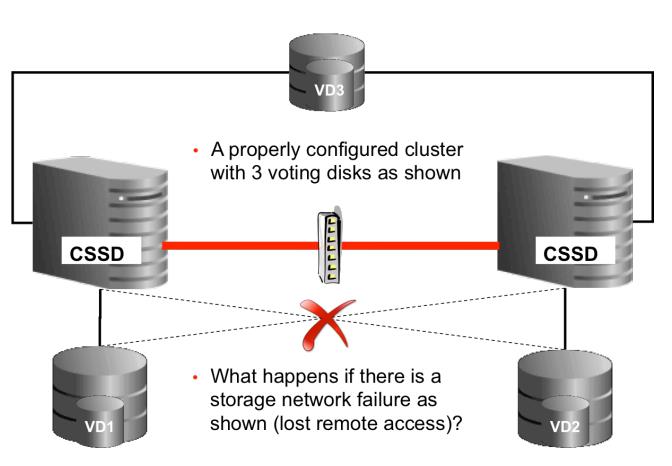

SQL>

Disk read/write access after the storage link is disconnected

prod01 can read and write these disks: /dev/oracleasm/disks/DISK03, /dev/oracleasm/disks/VOTEDB01, /dev/oracleasm/disks/NODE01DATA01

prod02 can read and write these disks: /dev/oracleasm/disks/DISK03, /dev/oracleasm/disks/VOTEDB02, /dev/oracleasm/disks/NODE02DATA01
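To make the split easier to see, the reachability lists above can be combined with the ASM disk listing to work out which ASM disks each node loses. This is only a minimal Python sketch: the dictionaries are hand-copied from the listings in this post, the function name `lost_disks` is made up for illustration, and it assumes the second voting disk reachable from prod02 is VOTEDB02, as shown in the ASM listing.

```python
# Disk groups and their member disks (ASM disk name -> device name),
# copied by hand from the ASM listing earlier in this post.
DISKGROUPS = {
    "CRS": {"CRS_0002": "DISK03", "CRS_0005": "VOTEDB02", "CRS_0009": "VOTEDB01"},
    "OCR": {"OCR_0000": "NODE01DATA01", "OCR_0001": "NODE02DATA01"},
}

# Devices each node can still reach once the storage link is cut.
# Assumption: prod02 reaches VOTEDB02 (the name used in the ASM listing).
REACHABLE = {
    "prod01": {"DISK03", "VOTEDB01", "NODE01DATA01"},
    "prod02": {"DISK03", "VOTEDB02", "NODE02DATA01"},
}


def lost_disks(node):
    """Return {disk group: sorted ASM disk names the node can no longer reach}."""
    reachable = REACHABLE[node]
    return {
        group: sorted(name for name, device in disks.items() if device not in reachable)
        for group, disks in DISKGROUPS.items()
    }


for node in sorted(REACHABLE):
    print(node, lost_disks(node))
```

Under these assumptions each node loses exactly one disk per disk group, and only the quorum disk DISK03 stays visible from both sides.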

Storage link disconnected (Tue Sep 17 14:35:39 CST 2019)
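The CRS-1615, CRS-1614 and CRS-1613 warnings in the prod01 grid log below each report how many milliseconds remain before VOTEDB02 is treated as not functional. As a rough cross-check, the Python sketch below adds each remaining interval to its log timestamp and compares the result with the time at which CRS-1604 reports the voting file offline. The timestamps and intervals are copied by hand from that log; the names `WARNINGS`, `OFFLINE_LOGGED` and `projected_deadline` are illustrative only.

```python
from datetime import datetime, timedelta

FMT = "%Y-%m-%d %H:%M:%S.%f"

# (message code, log timestamp, remaining milliseconds), from the prod01 grid log.
WARNINGS = [
    ("CRS-1615", "2019-09-17 14:37:27.636", 99830),
    ("CRS-1614", "2019-09-17 14:38:17.581", 49890),
    ("CRS-1613", "2019-09-17 14:38:47.588", 19890),
]

# Time at which CRS-1604 reports VOTEDB02 offline on prod01.
OFFLINE_LOGGED = datetime.strptime("2019-09-17 14:39:07.592", FMT)


def projected_deadline(timestamp, remaining_ms):
    """Log timestamp plus the remaining interval announced in the warning."""
    return datetime.strptime(timestamp, FMT) + timedelta(milliseconds=remaining_ms)


for code, timestamp, remaining_ms in WARNINGS:
    deadline = projected_deadline(timestamp, remaining_ms)
    gap = (OFFLINE_LOGGED - deadline).total_seconds()
    print(f"{code}: projected {deadline.time()}, offline logged {gap:+.3f}s later")
```

All three warnings point to nearly the same deadline, which suggests the countdown ran uninterrupted from the first stalled I/O until the voting file was taken offline.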

prod01 grid log:

2019-09-17 14:37:27.636:

[cssd(37119)]CRS-1615:No I/O has completed after 50% of the maximum interval. Voting file /dev/oracleasm/disks/VOTEDB02 will be considered not functional in 99830 milliseconds

2019-09-17 14:37:57.868:

[cssd(37119)]CRS-1649:An I/O error occured for voting file: /dev/oracleasm/disks/VOTEDB02; details at (:CSSNM00060:) in /u01/app/11.2.0/grid/log/prod01/cssd/ocssd.log.

2019-09-17 14:37:57.868:

[cssd(37119)]CRS-1649:An I/O error occured for voting file: /dev/oracleasm/disks/VOTEDB02; details at (:CSSNM00059:) in /u01/app/11.2.0/grid/log/prod01/cssd/ocssd.log.

2019-09-17 14:37:58.253:

[/u01/app/11.2.0/grid/bin/oraagent.bin(37016)]CRS-5011:Check of resource "+ASM" failed: details at "(:CLSN00006:)" in "/u01/app/11.2.0/grid/log/prod01/agent/ohasd/oraagent_grid/oraagent_grid.log"

2019-09-17 14:37:58.324:

[ohasd(36900)]CRS-2765:Resource 'ora.asm' has failed on server 'prod01'.

2019-09-17 14:37:58.333:

[/u01/app/11.2.0/grid/bin/oraagent.bin(37016)]CRS-5011:Check of resource "+ASM" failed: details at "(:CLSN00006:)" in "/u01/app/11.2.0/grid/log/prod01/agent/ohasd/oraagent_grid/oraagent_grid.log"

2019-09-17 14:37:58.394:

[/u01/app/11.2.0/grid/bin/oraagent.bin(37832)]CRS-5011:Check of resource "ora" failed: details at "(:CLSN00007:)" in "/u01/app/11.2.0/grid/log/prod01/agent/crsd/oraagent_oracle/oraagent_oracle.log"

2019-09-17 14:37:58.396:

[/u01/app/11.2.0/grid/bin/oraagent.bin(37832)]CRS-5011:Check of resource "ora" failed: details at "(:CLSN00007:)" in "/u01/app/11.2.0/grid/log/prod01/agent/crsd/oraagent_oracle/oraagent_oracle.log"

[client(42082)]CRS-10001:17-Sep-19 14:37 ACFS-9250: Unable to get the ASM administrator user name from the ASM process.

2019-09-17 14:37:58.702:

[/u01/app/11.2.0/grid/bin/orarootagent.bin(37668)]CRS-5016:Process "/u01/app/11.2.0/grid/bin/acfsregistrymount" spawned by agent "/u01/app/11.2.0/grid/bin/orarootagent.bin" for action "check" failed: details at "(:CLSN00010:)" in "/u01/app/11.2.0/grid/log/prod01/agent/crsd/orarootagent_root/orarootagent_root.log"

2019-09-17 14:38:00.278:

[cssd(37119)]CRS-1604:CSSD voting file is offline: /dev/oracleasm/disks/VOTEDB01; details at (:CSSNM00069:) in /u01/app/11.2.0/grid/log/prod01/cssd/ocssd.log.

2019-09-17 14:38:00.278:

[cssd(37119)]CRS-1626:A Configuration change request completed successfully

2019-09-17 14:38:00.289:

[cssd(37119)]CRS-1601:CSSD Reconfiguration complete. Active nodes are prod01 prod02 .

2019-09-17 14:38:03.818:

[/u01/app/11.2.0/grid/bin/oraagent.bin(37016)]CRS-5019:All OCR locations are on ASM disk groups [OCR], and none of these disk groups are mounted. Details are at "(:CLSN00100:)" in "/u01/app/11.2.0/grid/log/prod01/agent/ohasd/oraagent_grid/oraagent_grid.log".

2019-09-17 14:38:04.893:

[/u01/app/11.2.0/grid/bin/oraagent.bin(37016)]CRS-5019:All OCR locations are on ASM disk groups [OCR], and none of these disk groups are mounted. Details are at "(:CLSN00100:)" in "/u01/app/11.2.0/grid/log/prod01/agent/ohasd/oraagent_grid/oraagent_grid.log".

2019-09-17 14:38:17.581:

[cssd(37119)]CRS-1614:No I/O has completed after 75% of the maximum interval. Voting file /dev/oracleasm/disks/VOTEDB02 will be considered not functional in 49890 milliseconds

2019-09-17 14:38:29.720:

[/u01/app/11.2.0/grid/bin/oraagent.bin(42335)]CRS-5011:Check of resource "ora" failed: details at "(:CLSN00007:)" in "/u01/app/11.2.0/grid/log/prod01/agent/crsd/oraagent_oracle/oraagent_oracle.log"

2019-09-17 14:38:34.895:

[/u01/app/11.2.0/grid/bin/oraagent.bin(37016)]CRS-5019:All OCR locations are on ASM disk groups [OCR], and none of these disk groups are mounted. Details are at "(:CLSN00100:)" in "/u01/app/11.2.0/grid/log/prod01/agent/ohasd/oraagent_grid/oraagent_grid.log".

2019-09-17 14:38:39.036:

[ctssd(37246)]CRS-2409:The clock on host prod01 is not synchronous with the mean cluster time. No action has been taken as the Cluster Time Synchronization Service is running in observer mode.

2019-09-17 14:38:47.588:

[cssd(37119)]CRS-1613:No I/O has completed after 90% of the maximum interval. Voting file /dev/oracleasm/disks/VOTEDB02 will be considered not functional in 19890 milliseconds

2019-09-17 14:39:04.910:

[/u01/app/11.2.0/grid/bin/oraagent.bin(37016)]CRS-5019:All OCR locations are on ASM disk groups [OCR], and none of these disk groups are mounted. Details are at "(:CLSN00100:)" in "/u01/app/11.2.0/grid/log/prod01/agent/ohasd/oraagent_grid/oraagent_grid.log".

2019-09-17 14:39:07.592:

[cssd(37119)]CRS-1604:CSSD voting file is offline: /dev/oracleasm/disks/VOTEDB02; details at (:CSSNM00058:) in /u01/app/11.2.0/grid/log/prod01/cssd/ocssd.log.

2019-09-17 14:39:07.592:

[cssd(37119)]CRS-1606:The number of voting files available, 1, is less than the minimum number of voting files required, 2, resulting in CSSD termination to ensure data integrity; details at (:CSSNM00018:) in /u01/app/11.2.0/grid/log/prod01/cssd/ocssd.log

2019-09-17 14:39:07.592:

[cssd(37119)]CRS-1656:The CSS daemon is terminating due to a fatal error; Details at (:CSSSC00012:) in /u01/app/11.2.0/grid/log/prod01/cssd/ocssd.log

2019-09-17 14:39:07.629:

[cssd(37119)]CRS-1652:Starting clean up of CRSD resources.

2019-09-17 14:39:08.872:

[/u01/app/11.2.0/grid/bin/oraagent.bin(37664)]CRS-5016:Process "/u01/app/11.2.0/grid/opmn/bin/onsctli" spawned by agent "/u01/app/11.2.0/grid/bin/oraagent.bin" for action "check" failed: details at "(:CLSN00010:)" in "/u01/app/11.2.0/grid/log/prod01/agent/crsd/oraagent_grid/oraagent_grid.log"

2019-09-17 14:39:09.477:

[/u01/app/11.2.0/grid/bin/oraagent.bin(37664)]CRS-5016:Process "/u01/app/11.2.0/grid/bin/lsnrctl" spawned by agent "/u01/app/11.2.0/grid/bin/oraagent.bin" for action "check" failed: details at "(:CLSN00010:)" in "/u01/app/11.2.0/grid/log/prod01/agent/crsd/oraagent_grid/oraagent_grid.log"

2019-09-17 14:39:09.483:

[cssd(37119)]CRS-1654:Clean up of CRSD resources finished successfully.

2019-09-17 14:39:09.483:

[cssd(37119)]CRS-1655:CSSD on node prod01 detected a problem and started to shutdown.

2019-09-17 14:39:09.499:

[/u01/app/11.2.0/grid/bin/orarootagent.bin(37668)]CRS-5822:Agent '/u01/app/11.2.0/grid/bin/orarootagent_root' disconnected from server. Details at (:CRSAGF00117:) {0:3:7} in /u01/app/11.2.0/grid/log/prod01/agent/crsd/orarootagent_root/orarootagent_root.log.

2019-09-17 14:39:09.502:

[/u01/app/11.2.0/grid/bin/oraagent.bin(37664)]CRS-5822:Agent '/u01/app/11.2.0/grid/bin/oraagent_grid' disconnected from server. Details at (:CRSAGF00117:) {0:1:8} in /u01/app/11.2.0/grid/log/prod01/agent/crsd/oraagent_grid/oraagent_grid.log.

2019-09-17 14:39:09.505:

[ohasd(36900)]CRS-2765:Resource 'ora.crsd' has failed on server 'prod01'.

2019-09-17 14:39:09.703:

[cssd(37119)]CRS-1660:The CSS daemon shutdown has completed

2019-09-17 14:39:10.579:

[ohasd(36900)]CRS-2765:Resource 'ora.ctssd' has failed on server 'prod01'.

2019-09-17 14:39:10.583:

[ohasd(36900)]CRS-2765:Resource 'ora.evmd' has failed on server 'prod01'.

2019-09-17 14:39:10.586:

[crsd(42517)]CRS-0805:Cluster Ready Service aborted due to failure to communicate with Cluster Synchronization Service with error [3]. Details at (:CRSD00109:) in /u01/app/11.2.0/grid/log/prod01/crsd/crsd.log.

2019-09-17 14:39:10.903:

[/u01/app/11.2.0/grid/bin/oraagent.bin(37016)]CRS-5011:Check of resource "+ASM" failed: details at "(:CLSN00006:)" in "/u01/app/11.2.0/grid/log/prod01/agent/ohasd/oraagent_grid/oraagent_grid.log"

2019-09-17 14:39:11.076:

[ohasd(36900)]CRS-2765:Resource 'ora.asm' has failed on server 'prod01'.

2019-09-17 14:39:11.090:

[ohasd(36900)]CRS-2765:Resource 'ora.crsd' has failed on server 'prod01'.

2019-09-17 14:39:11.114:

[ohasd(36900)]CRS-2765:Resource 'ora.cssdmonitor' has failed on server 'prod01'.

2019-09-17 14:39:11.135:

[ohasd(36900)]CRS-2765:Resource 'ora.cluster_interconnect.haip' has failed on server 'prod01'.

2019-09-17 14:39:11.605:

[ctssd(42530)]CRS-2402:The Cluster Time Synchronization Service aborted on host prod01. Details at (:ctss_css_init1:) in /u01/app/11.2.0/grid/log/prod01/ctssd/octssd.log.

2019-09-17 14:39:11.628:

[ohasd(36900)]CRS-2765:Resource 'ora.cssd' has failed on server 'prod01'.

2019-09-17 14:39:12.098:

[ohasd(36900)]CRS-2878:Failed to restart resource 'ora.cluster_interconnect.haip'

2019-09-17 14:39:12.099:

[ohasd(36900)]CRS-2769:Unable to failover resource 'ora.cluster_interconnect.haip'.

2019-09-17 14:39:13.637:

[cssd(42565)]CRS-1713:CSSD daemon is started in clustered mode

2019-09-17 14:39:14.328:

[cssd(42565)]CRS-1656:The CSS daemon is terminating due to a fatal error; Details at (:CSSSC00012:) in /u01/app/11.2.0/grid/log/prod01/cssd/ocssd.log

2019-09-17 14:39:14.376:

[cssd(42565)]CRS-1603:CSSD on node prod01 shutdown by user.

2019-09-17 14:39:15.616:

[ohasd(36900)]CRS-2878:Failed to restart resource 'ora.ctssd'

2019-09-17 14:39:15.617:

[ohasd(36900)]CRS-2769:Unable to failover resource 'ora.ctssd'.

prod01 asm log:

Tue Sep 17 14:37:57 2019

WARNING: Read Failed. group:1 disk:1 AU:1 offset:0 size:4096

WARNING: Read Failed. group:1 disk:1 AU:1 offset:4096 size:4096

ERROR: no read quorum in group: required 2, found 0 disks

WARNING: could not find any PST disk in grp 1

ERROR: GMON terminating the instance due to storage split in grp 1

GMON (ospid: 37538): terminating the instance due to error 1092

Tue Sep 17 14:37:57 2019

ORA-1092 : opitsk aborting process

Tue Sep 17 14:37:58 2019

System state dump requested by (instance=1, osid=37538 (GMON)), summary=[abnormal instance termination].

System State dumped to trace file /u01/app/grid/diag/asm/+asm/+ASM1/trace/+ASM1_diag_37510_20190917143758.trc

Dumping diagnostic data in directory=[cdmp_20190917143758], requested by (instance=1, osid=37538 (GMON)), summary=[abnormal instance termination].

Tue Sep 17 14:37:58 2019

ORA-1092 : opitsk aborting process

Tue Sep 17 14:37:58 2019

License high water mark = 11

Instance terminated by GMON, pid = 37538

USER (ospid: 42050): terminating the instance

Instance terminated by USER, pid = 42050

prod01 db log:

Tue Sep 17 14:37:57 2019

WARNING: Read Failed. group:1 disk:1 AU:1383 offset:49152 size:16384

Tue Sep 17 14:37:57 2019

WARNING: Read Failed. group:1 disk:1 AU:1399 offset:16384 size:16384

WARNING: failed to read mirror side 1 of virtual extent 0 logical extent 0 of file 260 in group [1.4129785012] from disk OCR_0001 allocation unit 1399 reason error; if possible, will try another mirror side

WARNING: failed to read mirror side 1 of virtual extent 4 logical extent 0 of file 260 in group [1.4129785012] from disk OCR_0001 allocation unit 1383 reason error; if possible, will try another mirror side

WARNING: Read Failed. group:1 disk:1 AU:1399 offset:65536 size:16384

WARNING: failed to read mirror side 1 of virtual extent 0 logical extent 0 of file 260 in group [1.4129785012] from disk OCR_0001 allocation unit 1399 reason error; if possible, will try another mirror side

NOTE: successfully read mirror side 2 of virtual extent 0 logical extent 1 of file 260 in group [1.4129785012] from disk OCR_0000 allocation unit 1405

WARNING: Read Failed. group:1 disk:1 AU:0 offset:0 size:4096

WARNING: Write Failed. group:1 disk:1 AU:1399 offset:49152 size:16384

ERROR: cannot read disk header of disk OCR_0001 (1:3914822728)

Errors in file /u01/app/oracle/diag/rdbms/ora/ora1/trace/ora1_ckpt_37932.trc:

ORA-15080: synchronous I/O operation to a disk failed

ORA-27061: waiting for async I/Os failed

Linux-x86_64 Error: 5: Input/output error

Additional information: -1

Additional information: 16384

NOTE: process _lmon_ora1 (37910) initiating offline of disk 1.3914822728 (OCR_0001) with mask 0x7e in group 1

WARNING: failed to write mirror side 1 of virtual extent 0 logical extent 0 of file 260 in group 1 on disk 1 allocation unit 1399

Tue Sep 17 14:37:57 2019

NOTE: ASMB terminating

Errors in file /u01/app/oracle/diag/rdbms/ora/ora1/trace/ora1_asmb_37940.trc:

ORA-15064: communication failure with ASM instance

ORA-03113: end-of-file on communication channel

Process ID:

Session ID: 921 Serial number: 13

Errors in file /u01/app/oracle/diag/rdbms/ora/ora1/trace/ora1_asmb_37940.trc:

ORA-15064: communication failure with ASM instance

ORA-03113: end-of-file on communication channel

Process ID:

Session ID: 921 Serial number: 13

ASMB (ospid: 37940): terminating the instance due to error 15064

Tue Sep 17 14:37:57 2019

System state dump requested by (instance=1, osid=37940 (ASMB)), summary=[abnormal instance termination].

System State dumped to trace file /u01/app/oracle/diag/rdbms/ora/ora1/trace/ora1_diag_37900_20190917143757.trc

Dumping diagnostic data in directory=[cdmp_20190917143757], requested by (instance=1, osid=37940 (ASMB)), summary=[abnormal instance termination].

Instance terminated by ASMB, pid = 37940

prod02 grid log:

2019-09-17 14:37:24.306:

[cssd(4189)]CRS-1615:No I/O has completed after 50% of the maximum interval. Voting file /dev/oracleasm/disks/VOTEDB01 will be considered not functional in 99370 milliseconds

2019-09-17 14:37:54.191:

[cssd(4189)]CRS-1649:An I/O error occured for voting file: /dev/oracleasm/disks/VOTEDB01; details at (:CSSNM00060:) in /u01/app/11.2.0/grid/log/prod02/cssd/ocssd.log.

2019-09-17 14:37:54.205:

[cssd(4189)]CRS-1649:An I/O error occured for voting file: /dev/oracleasm/disks/VOTEDB01; details at (:CSSNM00059:) in /u01/app/11.2.0/grid/log/prod02/cssd/ocssd.log.

2019-09-17 14:37:54.931:

[crsd(9545)]CRS-2765:Resource 'ora.asm' has failed on server 'prod01'.

2019-09-17 14:37:54.948:

[crsd(9545)]CRS-2765:Resource 'ora.ora.db' has failed on server 'prod01'.

2019-09-17 14:37:54.987:

[crsd(9545)]CRS-2765:Resource 'ora.OCR.dg' has failed on server 'prod01'.

2019-09-17 14:37:54.995:

[crsd(9545)]CRS-2765:Resource 'ora.CRS.dg' has failed on server 'prod01'.

2019-09-17 14:37:55.257:

[crsd(9545)]CRS-2765:Resource 'ora.registry.acfs' has failed on server 'prod01'.

2019-09-17 14:37:56.824:

[cssd(4189)]CRS-1626:A Configuration change request completed successfully

2019-09-17 14:37:56.839:

[cssd(4189)]CRS-1601:CSSD Reconfiguration complete. Active nodes are prod01 prod02 .

2019-09-17 14:38:26.066:

[crsd(9545)]CRS-2878:Failed to restart resource 'ora.ora.db'

2019-09-17 14:38:26.067:

[crsd(9545)]CRS-2769:Unable to failover resource 'ora.ora.db'.

2019-09-17 14:38:26.276:

[crsd(9545)]CRS-2769:Unable to failover resource 'ora.ora.db'.

2019-09-17 14:38:58.279:

[crsd(9545)]CRS-2769:Unable to failover resource 'ora.ora.db'.

2019-09-17 14:38:58.281:

[crsd(9545)]CRS-2878:Failed to restart resource 'ora.ora.db'

2019-09-17 14:39:06.149:

[cssd(4189)]CRS-1625:Node prod01, number 1, was manually shut down

2019-09-17 14:39:06.163:

[cssd(4189)]CRS-1601:CSSD Reconfiguration complete. Active nodes are prod02 .

2019-09-17 14:39:06.171:

[crsd(9545)]CRS-5504:Node down event reported for node 'prod01'.

2019-09-17 14:39:08.834:

[crsd(9545)]CRS-2773:Server 'prod01' has been removed from pool 'ora.ora'.

2019-09-17 14:39:08.834:

[crsd(9545)]CRS-2773:Server 'prod01' has been removed from pool 'Generic'.

prod02 asm log:

Tue Sep 17 14:37:54 2019

WARNING: Write Failed. group:1 disk:0 AU:1 offset:1044480 size:4096

WARNING: Hbeat write to PST disk 0.3916045786 in group 1 failed. [4]

WARNING: Write Failed. group:2 disk:9 AU:1 offset:1044480 size:4096

WARNING: Hbeat write to PST disk 9.3916045794 in group 2 failed. [4]

Tue Sep 17 14:37:54 2019

NOTE: process _user30806_+asm2 (30806) initiating offline of disk 0.3916045786 (OCR_0000) with mask 0x7e in group 1

NOTE: checking PST: grp = 1

GMON checking disk modes for group 1 at 98 for pid 27, osid 30806

Tue Sep 17 14:37:54 2019

NOTE: process _b001_+asm2 (36442) initiating offline of disk 9.3916045794 (CRS_0009) with mask 0x7e in group 2

NOTE: checking PST: grp = 2

NOTE: group OCR: updated PST location: disk 0001 (PST copy 0)

NOTE: checking PST for grp 1 done.

NOTE: initiating PST update: grp = 1, dsk = 0/0xe96a1dda, mask = 0x6a, op = clear

GMON updating disk modes for group 1 at 99 for pid 27, osid 30806

Tue Sep 17 14:37:54 2019

Dumping diagnostic data in directory=[cdmp_20190917143758], requested by (instance=1, osid=37538 (GMON)), summary=[abnormal instance termination].

Tue Sep 17 14:37:55 2019

Reconfiguration started (old inc 32, new inc 34)

List of instances:

2 (myinst: 2)

Global Resource Directory frozen

* dead instance detected - domain 2 invalid = TRUE

* dead instance detected - domain 1 invalid = TRUE

Communication channels reestablished

Master broadcasted resource hash value bitmaps

Non-local Process blocks cleaned out

Tue Sep 17 14:37:55 2019

LMS 0: 0 GCS shadows cancelled, 0 closed, 0 Xw survived

Set master node info

Submitted all remote-enqueue requests

Dwn-cvts replayed, VALBLKs dubious

All grantable enqueues granted

Post SMON to start 1st pass IR

Tue Sep 17 14:37:55 2019

Errors in file /u01/app/grid/diag/asm/+asm/+ASM2/trace/+ASM2_smon_9236.trc:

ORA-15025: could not open disk "/dev/oracleasm/disks/NODE01DATA01"

ORA-27041: unable to open file

Linux-x86_64 Error: 6: No such device or address

Additional information: 3

Submitted all GCS remote-cache requests

Post SMON to start 1st pass IR

Fix write in gcs resources

Reconfiguration complete

WARNING: GMON has insufficient disks to maintain consensus. Minimum required is 2: updating 2 PST copies from a total of 3.

NOTE: group CRS: updated PST location: disk 0002 (PST copy 0)

NOTE: group CRS: updated PST location: disk 0005 (PST copy 1)

NOTE: group OCR: updated PST location: disk 0001 (PST copy 0)

GMON checking disk modes for group 2 at 100 for pid 31, osid 36442

NOTE: group CRS: updated PST location: disk 0002 (PST copy 0)

NOTE: group CRS: updated PST location: disk 0005 (PST copy 1)

NOTE: checking PST for grp 2 done.

NOTE: initiating PST update: grp = 2, dsk = 9/0xe96a1de2, mask = 0x6a, op = clear

NOTE: PST update grp = 1 completed successfully

NOTE: initiating PST update: grp = 1, dsk = 0/0xe96a1dda, mask = 0x7e, op = clear

NOTE: SMON starting instance recovery for group OCR domain 1 (mounted)

NOTE: SMON skipping disk 0 (mode=00000015)

NOTE: F1X0 found on disk 1 au 2 fcn 0.7444

GMON updating disk modes for group 2 at 101 for pid 31, osid 36442

NOTE: starting recovery of thread=1 ckpt=6.291 group=1 (OCR)

NOTE: group CRS: updated PST location: disk 0002 (PST copy 0)

NOTE: group CRS: updated PST location: disk 0005 (PST copy 1)

GMON updating disk modes for group 1 at 102 for pid 27, osid 30806

NOTE: group OCR: updated PST location: disk 0001 (PST copy 0)

NOTE: cache closing disk 0 of grp 1: OCR_0000

NOTE: PST update grp = 2 completed successfully

NOTE: ASM recovery sucessfully read ACD from one mirror side

NOTE: PST update grp = 1 completed successfully

NOTE: initiating PST update: grp = 2, dsk = 9/0xe96a1de2, mask = 0x7e, op = clear

Errors in file /u01/app/grid/diag/asm/+asm/+ASM2/trace/+ASM2_smon_9236.trc:

ORA-15062: ASM disk is globally closed

ORA-15062: ASM disk is globally closed

NOTE: SMON waiting for thread 1 recovery enqueue

NOTE: SMON about to begin recovery lock claims for diskgroup 1 (OCR)

GMON updating disk modes for group 2 at 103 for pid 31, osid 36442

NOTE: cache closing disk 0 of grp 1: (not open) OCR_0000

NOTE: group CRS: updated PST location: disk 0002 (PST copy 0)

NOTE: group CRS: updated PST location: disk 0005 (PST copy 1)

NOTE: cache closing disk 9 of grp 2: CRS_0009

NOTE: SMON successfully validated lock domain 1

NOTE: advancing ckpt for group 1 (OCR) thread=1 ckpt=6.291

NOTE: SMON did instance recovery for group OCR domain 1

NOTE: PST update grp = 2 completed successfully

NOTE: cache closing disk 9 of grp 2: (not open) CRS_0009

NOTE: SMON starting instance recovery for group CRS domain 2 (mounted)

NOTE: F1X0 found on disk 5 au 2 fcn 0.197448

NOTE: SMON skipping disk 9 (mode=00000001)

NOTE: starting recovery of thread=2 ckpt=25.706 group=2 (CRS)

NOTE: SMON waiting for thread 2 recovery enqueue

NOTE: SMON about to begin recovery lock claims for diskgroup 2 (CRS)

NOTE: SMON successfully validated lock domain 2

NOTE: advancing ckpt for group 2 (CRS) thread=2 ckpt=25.706

NOTE: SMON did instance recovery for group CRS domain 2

Tue Sep 17 14:37:56 2019

NOTE: Attempting voting file refresh on diskgroup CRS

NOTE: Refresh completed on diskgroup CRS

. Found 3 voting file(s).

NOTE: Voting file relocation is required in diskgroup CRS

NOTE: Attempting voting file relocation on diskgroup CRS

NOTE: Successful voting file relocation on diskgroup CRS

Reconfiguration started (old inc 34, new inc 36)

List of instances:

1 2 (myinst: 2)

Global Resource Directory frozen

Communication channels reestablished

Tue Sep 17 14:37:59 2019

* domain 0 valid = 1 according to instance 1

* domain 2 valid = 1 according to instance 1

* domain 1 valid = 1 according to instance 1

Master broadcasted resource hash value bitmaps

Non-local Process blocks cleaned out

LMS 0: 0 GCS shadows cancelled, 0 closed, 0 Xw survived

Set master node info

Submitted all remote-enqueue requests

Dwn-cvts replayed, VALBLKs dubious

All grantable enqueues granted

Submitted all GCS remote-cache requests

Fix write in gcs resources

Reconfiguration complete

NOTE: Attempting voting file refresh on diskgroup CRS

NOTE: Refresh completed on diskgroup CRS

. Found 2 voting file(s).

NOTE: Voting file relocation is required in diskgroup CRS

NOTE: Attempting voting file relocation on diskgroup CRS

NOTE: Successful voting file relocation on diskgroup CRS

NOTE: cache closing disk 9 of grp 2: (not open) CRS_0009

Tue Sep 17 14:39:07 2019

Reconfiguration started (old inc 36, new inc 38)

List of instances:

2 (myinst: 2)

Global Resource Directory frozen

Communication channels reestablished

Master broadcasted resource hash value bitmaps

Non-local Process blocks cleaned out

Tue Sep 17 14:39:07 2019

LMS 0: 0 GCS shadows cancelled, 0 closed, 0 Xw survived

Set master node info

Submitted all remote-enqueue requests

Dwn-cvts replayed, VALBLKs dubious

All grantable enqueues granted

Post SMON to start 1st pass IR

Submitted all GCS remote-cache requests

Post SMON to start 1st pass IR

Fix write in gcs resources

Reconfiguration complete

Tue Sep 17 14:39:15 2019

WARNING: Disk 0 (OCR_0000) in group 1 will be dropped in: (12960) secs on ASM inst 2

WARNING: Disk 9 (CRS_0009) in group 2 will be dropped in: (12960) secs on ASM inst 2

Tue Sep 17 14:39:18 2019

NOTE: successfully read ACD block gn=2 blk=0 via retry read

Errors in file /u01/app/grid/diag/asm/+asm/+ASM2/trace/+ASM2_lgwr_9232.trc:

ORA-15062: ASM disk is globally closed

NOTE: successfully read ACD block gn=2 blk=0 via retry read

Errors in file /u01/app/grid/diag/asm/+asm/+ASM2/trace/+ASM2_lgwr_9232.trc:

ORA-15062: ASM disk is globally closed

Tue Sep 17 14:42:18 2019

WARNING: Disk 0 (OCR_0000) in group 1 will be dropped in: (12777) secs on ASM inst 2

WARNING: Disk 9 (CRS_0009) in group 2 will be dropped in: (12777) secs on ASM inst 2

Tue Sep 17 14:42:21 2019

NOTE: successfully read ACD block gn=2 blk=0 via retry read

Errors in file /u01/app/grid/diag/asm/+asm/+ASM2/trace/+ASM2_lgwr_9232.trc:

ORA-15062: ASM disk is globally closed

NOTE: successfully read ACD block gn=2 blk=0 via retry read

Errors in file /u01/app/grid/diag/asm/+asm/+ASM2/trace/+ASM2_lgwr_9232.trc:

ORA-15062: ASM disk is globally closed

prod02 db log:

Tue Sep 17 14:37:54 2019

WARNING: Read Failed. group:1 disk:0 AU:1381 offset:0 size:16384

Tue Sep 17 14:37:54 2019

WARNING: Write Failed. group:1 disk:0 AU:1405 offset:65536 size:16384

WARNING: failed to read mirror side 1 of virtual extent 5 logical extent 0 of file 260 in group [1.4130008359] from disk OCR_0000 allocation unit 1381 reason error; if possible, will try another mirror side

NOTE: successfully read mirror side 2 of virtual extent 5 logical extent 1 of file 260 in group [1.4130008359] from disk OCR_0001 allocation unit 1374

WARNING: Read Failed. group:1 disk:0 AU:0 offset:0 size:4096

ERROR: cannot read disk header of disk OCR_0000 (0:3916045786)

Errors in file /u01/app/oracle/diag/rdbms/ora/ora2/trace/ora2_ckpt_22649.trc:

ORA-15080: synchronous I/O operation to a disk failed

ORA-27061: waiting for async I/Os failed

Linux-x86_64 Error: 5: Input/output error

Additional information: -1

Additional information: 16384

ORA-27072: File I/O error

Linux-x86_64 Error: 5: Input/output error

Additional information: 4

Additional information: -1

ORA-27072: File I/O error

Linux-x86_64 Error: 5: Input/output error

Additional information: 4

Additional information: 3311648

Additional information: -1

WARNING: failed to write mirror side 2 of virtual extent 0 logical extent 1 of file 260 in group 1 on disk 0 allocation unit 1405

NOTE: process _mmon_ora2 (22659) initiating offline of disk 0.3916045786 (OCR_0000) with mask 0x7e in group 1

Tue Sep 17 14:37:54 2019

Dumping diagnostic data in directory=[cdmp_20190917143757], requested by (instance=1, osid=37940 (ASMB)), summary=[abnormal instance termination].

Tue Sep 17 14:37:55 2019

NOTE: disk 0 (OCR_0000) in group 1 (OCR) is offline for reads

NOTE: disk 0 (OCR_0000) in group 1 (OCR) is offline for writes

NOTE: successfully read mirror side 2 of virtual extent 5 logical extent 1 of file 260 in group [1.4130008359] from disk OCR_0001 allocation unit 1374

Tue Sep 17 14:37:56 2019

Reconfiguration started (old inc 12, new inc 14)

List of instances:

2 (myinst: 2)

Global Resource Directory frozen

* dead instance detected - domain 0 invalid = TRUE

Communication channels reestablished

Master broadcasted resource hash value bitmaps

Non-local Process blocks cleaned out

Tue Sep 17 14:37:56 2019

LMS 1: 0 GCS shadows cancelled, 0 closed, 0 Xw survived

Tue Sep 17 14:37:56 2019

LMS 0: 0 GCS shadows cancelled, 0 closed, 0 Xw survived

Set master node info

Submitted all remote-enqueue requests

Dwn-cvts replayed, VALBLKs dubious

All grantable enqueues granted

minact-scn: master found reconf/inst-rec before recscn scan old-inc#:12 new-inc#:12

Post SMON to start 1st pass IR

Tue Sep 17 14:37:56 2019

Instance recovery: looking for dead threads

Beginning instance recovery of 1 threads

Submitted all GCS remote-cache requests

Post SMON to start 1st pass IR

Fix write in gcs resources

Reconfiguration complete

parallel recovery started with 7 processes

Started redo scan

Completed redo scan

read 0 KB redo, 0 data blocks need recovery

Started redo application at

Thread 1: logseq 5, block 493, scn 984621

Recovery of Online Redo Log: Thread 1 Group 1 Seq 5 Reading mem 0

Mem# 0: +OCR/ora/onlinelog/group_1.261.1019221639

Completed redo application of 0.00MB

Completed instance recovery at

Thread 1: logseq 5, block 493, scn 1004622

0 data blocks read, 0 data blocks written, 0 redo k-bytes read

Thread 1 advanced to log sequence 6 (thread recovery)

Tue Sep 17 14:38:09 2019

minact-scn: master continuing after IR

minact-scn: Master considers inst:1 dead

Tue Sep 17 14:38:56 2019

Decreasing number of real time LMS from 2 to 0

Cluster status:

[root@prod02 ~]# crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.CRS.dg

ONLINE ONLINE prod02

ora.LISTENER.lsnr

ONLINE ONLINE prod02

ora.OCR.dg

ONLINE ONLINE prod02

ora.asm

ONLINE ONLINE prod02 Started

ora.gsd

OFFLINE OFFLINE prod02

ora.net1.network

ONLINE ONLINE prod02

ora.ons

ONLINE ONLINE prod02

ora.registry.acfs

ONLINE ONLINE prod02

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE prod02

ora.cvu

1 ONLINE ONLINE prod02

ora.oc4j

1 ONLINE ONLINE prod02

ora.ora.db

1 ONLINE OFFLINE

2 ONLINE ONLINE prod02 Open

ora.prod01.vip

1 ONLINE INTERMEDIATE prod02 FAILED OVER

ora.prod02.vip

1 ONLINE ONLINE prod02

ora.scan1.vip

1 ONLINE ONLINE prod02

[root@prod02 ~]#

ASM disk group status

[grid@prod02 ~]$ sqlplus / as sysasm

SQL*Plus: Release 11.2.0.4.0 Production on Tue Sep 17 14:45:11 2019

Copyright (c) 1982, 2013, Oracle. All rights reserved.

Connected to:

Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production

With the Real Application Clusters and Automatic Storage Management options

SQL> @c

DG_NAME DG_STATE TYPE DSK_NO DSK_NAME PATH MOUNT_S FAILGROUP STATE

--------------- ---------- ------ ---------- ---------- -------------------------------------------------- ------- -------------------- --------

CRS MOUNTED NORMAL 2 CRS_0002 /dev/oracleasm/disks/DISK03 CACHED ZCDISK NORMAL

CRS MOUNTED NORMAL 5 CRS_0005 /dev/oracleasm/disks/VOTEDB02 CACHED CRS_0001 NORMAL

CRS MOUNTED NORMAL 9 CRS_0009 MISSING CRS_0000 NORMAL

OCR MOUNTED NORMAL 0 OCR_0000 MISSING OCR_0000 NORMAL

OCR MOUNTED NORMAL 1 OCR_0001 /dev/oracleasm/disks/NODE02DATA01 CACHED OCR_0001 NORMAL

DISK_NUMBER NAME PATH HEADER_STATUS OS_MB TOTAL_MB FREE_MB REPAIR_TIMER V FAILGRO

----------- ---------- -------------------------------------------------- -------------------- ---------- ---------- ---------- ------------ - -------

0 OCR_0000 UNKNOWN 0 5120 3185 12777 N REGULAR

9 CRS_0009 UNKNOWN 0 2048 1584 12777 N REGULAR

1 OCR_0001 /dev/oracleasm/disks/NODE02DATA01 MEMBER 5120 5120 3185 0 N REGULAR

5 CRS_0005 /dev/oracleasm/disks/VOTEDB02 MEMBER 2048 2048 1648 0 Y REGULAR

2 CRS_0002 /dev/oracleasm/disks/DISK03 MEMBER 5115 5115 5081 0 Y QUORUM

GROUP_NUMBER NAME COMPATIBILITY DATABASE_COMPATIBILITY V

------------ ---------- ------------------------------------------------------------ ------------------------------------------------------------ -

1 OCR 11.2.0.0.0 11.2.0.0.0 N

2 CRS 11.2.0.0.0 11.2.0.0.0 Y

SQL>

prod01 cluster status

[grid@prod01 ~]$ crsctl stat res -t -init

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.asm

1 ONLINE OFFLINE Abnormal Termination

ora.cluster_interconnect.haip

1 ONLINE OFFLINE

ora.crf

1 ONLINE ONLINE prod01

ora.crsd

1 ONLINE OFFLINE

ora.cssd

1 ONLINE OFFLINE STARTING

ora.cssdmonitor

1 ONLINE ONLINE prod01

ora.ctssd

1 ONLINE OFFLINE

ora.diskmon

1 OFFLINE OFFLINE

ora.drivers.acfs

1 ONLINE ONLINE prod01

ora.evmd

1 ONLINE OFFLINE

ora.gipcd

1 ONLINE ONLINE prod01

ora.gpnpd

1 ONLINE ONLINE prod01

ora.mdnsd

1 ONLINE ONLINE prod01

[grid@prod01 ~]$

Conclusion

prod01 was evicted and its database instance was shut down; prod02 kept running normally.
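The CRS-1606 message on prod01 ("available, 1, is less than the minimum number of voting files required, 2") reflects the rule that CSSD must be able to access a strict majority of the configured voting files. The short Python sketch below restates that rule with the numbers from this test. It is an illustration only, not Oracle's implementation, and the function names are made up; the configured counts of 3 and 2 come from the "Found 3 voting file(s)" and "Found 2 voting file(s)" lines in the prod02 ASM log.

```python
def min_voting_files(configured):
    """Smallest number of voting files that is a strict majority of `configured`."""
    return configured // 2 + 1


def cssd_can_continue(available, configured):
    """True if the node still reaches a strict majority of the voting files."""
    return available >= min_voting_files(configured)


# Before the relocation there were 3 voting files; afterwards 2.
# In both cases the required minimum is 2, matching CRS-1606.
for configured in (3, 2):
    print(configured, "configured ->", min_voting_files(configured), "required")

# prod01 ended up with 1 usable voting file, prod02 with 2.
print("prod01:", cssd_can_continue(available=1, configured=3))
print("prod02:", cssd_can_continue(available=2, configured=3))
```

With one usable voting file prod01 falls below the required two, so its CSSD terminates, while prod02 still holds two and stays in the cluster.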