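INTERSPEECH 2019, the 20th annual conference of the International Speech Communication Association (ISCA), will be held in Graz, Austria, from September 15 to 19. Interspeech is the world's largest and most comprehensive top-tier conference in the speech field. Nearly 2,000 researchers and practitioners from industry and academia will take part in keynote talks, tutorials, paper presentations, the main exhibition, and other events. Eight papers from Alibaba were accepted this year. This article covers one of them, "Towards A Fault-tolerant Speaker Verification System: A Regularization Approach To Reduce The Condition Number" by Siqi Zheng, Gang Liu, Hongbin Suo, and Yun Lei.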


Paper Overview

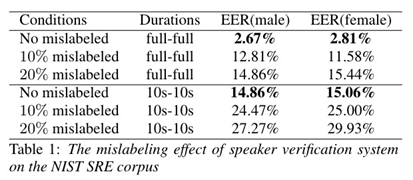

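When common speaker verification (voiceprint) models are trained on partially contaminated data, they turn out to be quite sensitive to the mislabeled samples. As shown in Table 1, when the labels of 10% to 20% of the training data are randomly replaced, the EER of the trained model degrades by more than 10% (absolute) under every condition. This makes it hard to exploit the large amount of unlabeled online voice-interaction data for training, because any labels assigned to such data automatically will inevitably contain errors.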
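The contamination experiment described above can be simulated with a few lines of NumPy. This is a minimal sketch under stated assumptions: the function name `corrupt_labels` and its parameters are hypothetical, and the sketch forces every chosen label to change to a different speaker, which may differ from the exact replacement protocol used for Table 1.

```python
import numpy as np


def corrupt_labels(labels, fraction, num_classes, seed=0):
    """Hypothetical helper: return a copy of `labels` in which `fraction` of the
    entries are replaced by a different, randomly chosen class (simulated
    annotation errors)."""
    rng = np.random.default_rng(seed)
    noisy = np.asarray(labels).copy()
    n_flip = int(round(fraction * len(noisy)))
    # Pick which samples to corrupt, without repetition.
    idx = rng.choice(len(noisy), size=n_flip, replace=False)
    # A nonzero offset modulo num_classes guarantees the new label differs.
    offset = rng.integers(1, num_classes, size=n_flip)
    noisy[idx] = (noisy[idx] + offset) % num_classes
    return noisy


clean = np.arange(1000) % 50        # 1000 utterances from 50 speakers
noisy = corrupt_labels(clean, fraction=0.2, num_classes=50)
print((noisy != clean).mean())      # → 0.2
```

A model trained on `noisy` instead of `clean` reproduces the kind of setting the paper evaluates; the clean labels stay available for scoring.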

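Therefore, when training the deep neural network, we need the model to be more robust to mislabeled training data. In other words, if speaker-model training is viewed as a function f that maps the training data x to the learned model y, our goal is to make the condition number of this function as small as possible: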

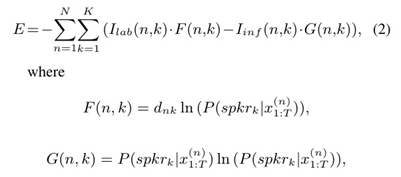
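For intuition, the standard relative condition number of a function f at a point x is κ(x) = lim(ε→0) sup over ‖δx‖ ≤ ε of (‖f(x + δx) − f(x)‖ / ‖f(x)‖) / (‖δx‖ / ‖x‖): it bounds how much a small relative change in the input (here, a few wrong labels) can be amplified in the output. The sketch below is a generic numerical illustration, not a procedure from the paper; `estimate_condition_number` is a hypothetical name, and random probing only gives a lower bound on the supremum.

```python
import numpy as np


def estimate_condition_number(f, x, eps=1e-6, trials=200, seed=0):
    """Monte-Carlo lower bound on the relative condition number of f at x.

    Each trial applies a random perturbation of relative size eps to x and
    measures (relative change in f(x)) / (relative change in x); the largest
    ratio seen approximates the supremum in the definition.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    fx = np.asarray(f(x), dtype=float)
    worst = 0.0
    for _ in range(trials):
        d = rng.standard_normal(x.shape)
        d *= eps * np.linalg.norm(x) / np.linalg.norm(d)  # ||d|| / ||x|| == eps
        df = np.asarray(f(x + d), dtype=float) - fx
        rel_out = np.linalg.norm(df) / np.linalg.norm(fx)
        rel_in = np.linalg.norm(d) / np.linalg.norm(x)
        worst = max(worst, rel_out / rel_in)
    return worst


# Subtracting nearly equal numbers is ill-conditioned: kappa = |x| / |x - 1|.
print(estimate_condition_number(lambda v: v - 1.0, [1.001]))   # about 1001
# A linear map with very different gains per direction: kappa is 100 at x = e1.
A = np.diag([1.0, 100.0])
print(estimate_condition_number(lambda v: A @ v, [1.0, 0.0]))  # close to 100
```

A well-conditioned training procedure behaves like the first case with a κ near 1, where a 1% change in the data moves the model by roughly 1%.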

To this end, the paper proposes three methods. The first is a regularized entropy loss, shown below.

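In the conventional entropy loss, each training label takes only one of two values, 0 or 1. We replace this hard decision with a confidence-based soft decision, where the confidence comes from the posterior probability output by the neural network.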

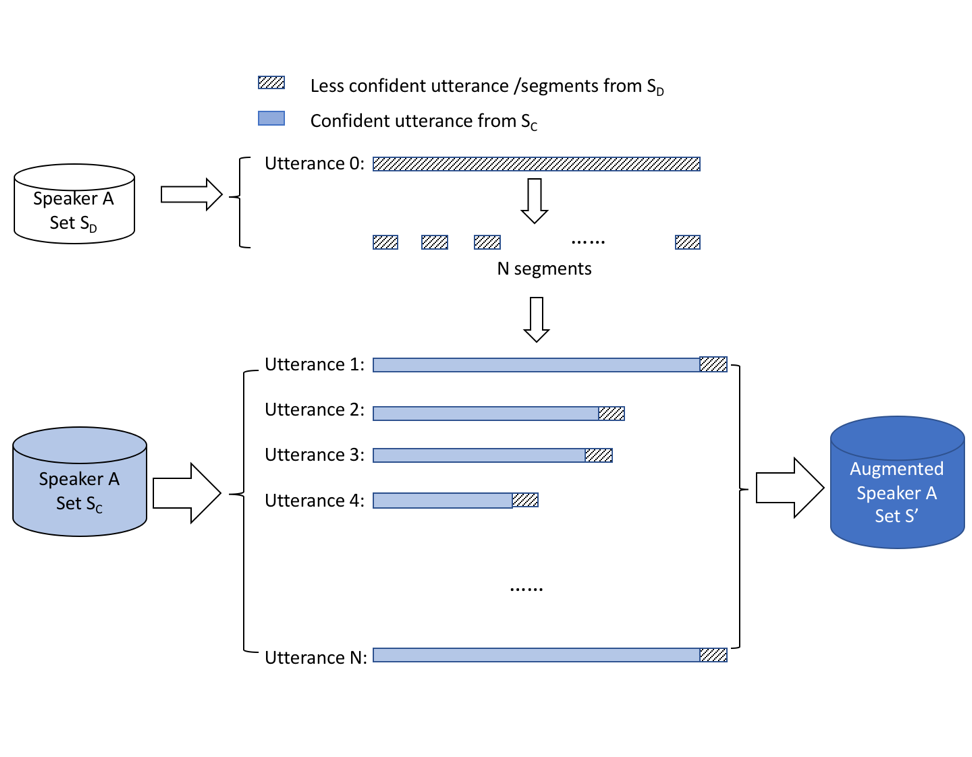
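A minimal NumPy sketch of a confidence-based soft target, assuming the target is a convex combination of the given one-hot label and the network's posterior q, i.e. the "soft bootstrapping" form of Reed et al.; the paper's exact formula may differ, and the weight `beta` and the function names are hypothetical. Since −Σ(β·t + (1 − β)·q)·log q = β·CE(t, q) + (1 − β)·H(q), this form is ordinary cross-entropy plus an entropy-minimization term.

```python
import numpy as np


def log_softmax(logits):
    """Row-wise log-softmax, shifted by the row max for numerical stability."""
    z = logits - logits.max(axis=1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))


def soft_target_entropy_loss(logits, labels, beta=0.8):
    """Cross-entropy against a soft target that blends the (possibly wrong)
    hard label with the network's own posterior.

    beta = 1.0 recovers the conventional loss with 0/1 targets; smaller beta
    trusts the network's confidence more and the given label less.
    """
    log_q = log_softmax(logits)
    q = np.exp(log_q)                              # posterior probabilities
    one_hot = np.eye(logits.shape[1])[labels]      # hard 0/1 targets
    target = beta * one_hot + (1.0 - beta) * q     # confidence-based soft target
    return -(target * log_q).sum(axis=1).mean()


logits = np.array([[2.0, 0.5, -1.0],
                   [0.1, 0.2, 3.0]])  # second sample looks like class 2...
labels = np.array([0, 1])             # ...but is labeled 1 (a possible label error)
print(soft_target_entropy_loss(logits, labels, beta=1.0))  # plain cross-entropy
print(soft_target_entropy_loss(logits, labels, beta=0.8))  # lower: the suspicious label is partly overruled
```

In an autodiff framework the gradient also flows through q inside the target, which is what produces the entropy-minimization effect; this NumPy version only computes the loss value.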

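Second, we propose segment-level reshuffling of the data: spectral-feature segments with low confidence are randomly concatenated with the spectral features of other utterances. This serves as both data augmentation and data cleaning, making the model more robust to errors in the training data.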
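The reshuffling step can be sketched as follows. This is one possible reading of the description, not the paper's implementation: the function name, the threshold, the segment length, and the choice of splicing with utterances that carry the *same* label (so the training label stays consistent) are all assumptions.

```python
import numpy as np


def reshuffle_low_confidence(feats, labels, conf, threshold=0.5, seg_len=50, seed=0):
    """Hypothetical segment-level reshuffling.

    feats:  list of (frames, dim) spectral-feature arrays, one per utterance
    labels: speaker label of each utterance (possibly wrong)
    conf:   network posterior for each utterance's labeled speaker
    Utterances with conf < threshold are cut into seg_len-frame segments, and
    each segment is spliced with a random segment taken from another utterance
    carrying the same label. Labels are left unchanged.
    """
    rng = np.random.default_rng(seed)
    out = []
    for i, (x, y, c) in enumerate(zip(feats, labels, conf)):
        donors = [j for j, yj in enumerate(labels) if yj == y and j != i]
        if c >= threshold or not donors:
            out.append(x)                      # confident (or no donor): keep as is
            continue
        pieces = []
        for start in range(0, len(x), seg_len):
            pieces.append(x[start:start + seg_len])             # own segment
            d = feats[rng.choice(donors)]                      # random same-label utterance
            s = rng.integers(0, max(1, len(d) - seg_len + 1))  # random segment start
            pieces.append(d[s:s + seg_len])                    # donor segment
        out.append(np.concatenate(pieces, axis=0))
    return out


feats = [np.ones((100, 4)), np.zeros((100, 4)), np.full((80, 4), 2.0)]
labels = [0, 0, 1]
conf = [0.9, 0.2, 0.95]             # utterance 1 is suspicious
new = reshuffle_low_confidence(feats, labels, conf)
print([f.shape for f in new])       # [(100, 4), (200, 4), (80, 4)]
```

Only the low-confidence utterance is rebuilt; half of its frames now come from a trusted same-label utterance, which dilutes the effect of a possible labeling error while also producing a new training sample.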

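Finally, borrowing the idea of co-training, we train two speaker spaces simultaneously. When the two speaker spaces are relatively independent, samples that receive high confidence in speaker space A are distributed somewhat randomly when projected into speaker space B, so these samples are more useful for training space B, and vice versa. Having the two speaker spaces supervise each other during training reduces the influence of mislabeled data and thereby lowers the condition number.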
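The mutual supervision can be pictured as a per-batch sample exchange, similar in spirit to co-teaching. This is a hedged sketch: the helper names, the `keep_ratio` parameter, and the rule "each space hands its most confident samples to the other" are illustrative assumptions, not the paper's exact procedure.

```python
import numpy as np


def label_confidence(logits, labels):
    """Softmax posterior a model assigns to the given (possibly wrong) label."""
    z = logits - logits.max(axis=1, keepdims=True)
    p = np.exp(z) / np.exp(z).sum(axis=1, keepdims=True)
    return p[np.arange(len(labels)), labels]


def cross_select(conf_a, conf_b, keep_ratio=0.5):
    """Each speaker space picks its most confident samples for the *other* space."""
    k = max(1, int(round(keep_ratio * len(conf_a))))
    for_b = np.argsort(-conf_a)[:k]   # confident in space A -> used to update space B
    for_a = np.argsort(-conf_b)[:k]   # confident in space B -> used to update space A
    return for_a, for_b


conf_a = np.array([0.9, 0.1, 0.8, 0.3])    # e.g. label_confidence(logits_a, labels)
conf_b = np.array([0.2, 0.95, 0.4, 0.7])   # e.g. label_confidence(logits_b, labels)
for_a, for_b = cross_select(conf_a, conf_b)
print(sorted(for_a.tolist()), sorted(for_b.tolist()))  # [1, 3] [0, 2]
```

In a training loop, space A's loss would be computed only on `for_a` and space B's only on `for_b`; because the two spaces make different mistakes, a mislabeled sample is less likely to be trusted by both.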

Abstract

**Large-scale deployment of speech interaction devices makes it possible to harvest tremendous data quickly, which also introduces the problem of wrong labeling during data mining. Mislabeled training data has a substantial negative effect on the performance of speaker verification system. This study aims to enhance the generalization ability and robustness of the model when the training data is contaminated by wrong labels. Several regularization approaches are proposed to reduce the condition number of the speaker verification problem, making the model less sensitive to errors in the inputs. They are validated on both NIST SRE corpus and far-field smart speaker data. The results suggest that the performance deterioration caused by mislabeled training data can be significantly ameliorated by proper regularization.**

Index Terms: Speaker Verification, Mislabeled Data, Entropy Minimization, Loss Regularization, Co-Training, Condition Number

Compiled by the Alibaba Cloud Developer Community