节点管理

节点状态

please refer: https://kubernetes.io/docs/concepts/architecture/nodes/#node-status

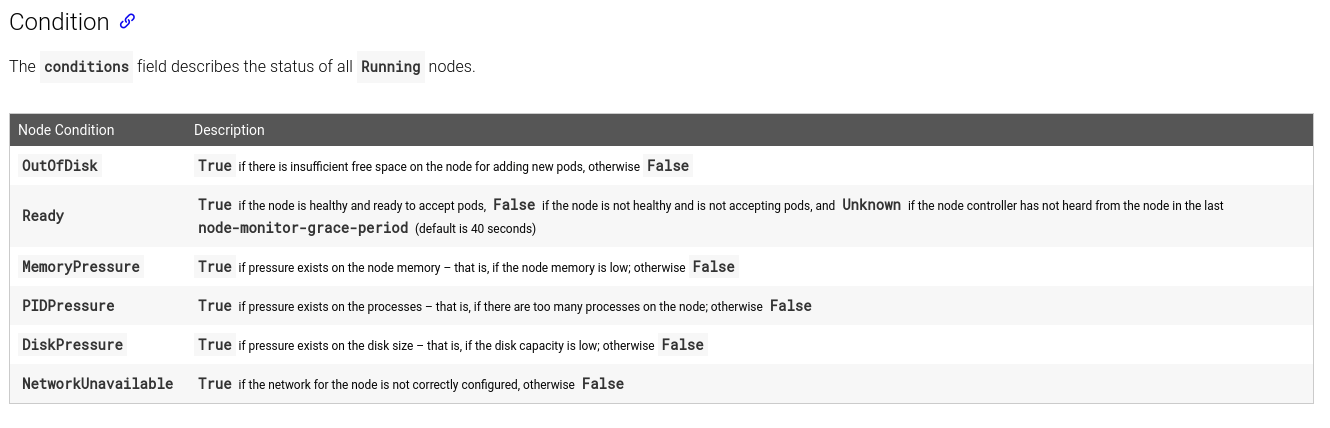

这里我们重点关注下Condition部分

如文档描述

节点失联 or 节点宕机

查看节点列表

~ kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

worker01 Ready master 21h v1.14.3 192.168.101.113 <none> Ubuntu 16.04.6 LTS 4.4.0-150-generic docker://18.9.5

worker02 Ready <none> 21h v1.14.3 192.168.100.117 <none> Ubuntu 16.04.6 LTS 4.4.0-150-generic docker://18.9.5

# 查看pod和其运行所在节点

~ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-6657c9ffc-6q2pt 1/1 Running 1 19h 10.244.0.11 worker01 <none> <none>

nginx-6657c9ffc-msd6t 1/1 Running 0 19h 10.244.1.17 worker02 <none> <none>现在我们使worker02关机

~ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-6657c9ffc-6q2pt 1/1 Running 1 20h 10.244.0.11 worker01 <none> <none>

nginx-6657c9ffc-msd6t 1/1 Running 0 20h 10.244.1.17 worker02 <none> <none>

~ kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

worker01 Ready master 22h v1.14.3 192.168.101.113 <none> Ubuntu 16.04.6 LTS 4.4.0-150-generic docker://18.9.5

worker02 Ready <none> 21h v1.14.3 192.168.100.117 <none> Ubuntu 16.04.6 LTS 4.4.0-150-generic docker://18.9.5

~ ping 192.168.100.117

PING 192.168.100.117 (192.168.100.117) 56(84) bytes of data.

^C

--- 192.168.100.117 ping statistics ---

2 packets transmitted, 0 received, 100% packet loss, time 1007ms可以看到虽然查看状态还是正常,实际上节点已经无法ping通,再次查看,发现节点已经处于NotReady状态

~ kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

worker01 Ready master 22h v1.14.3 192.168.101.113 <none> Ubuntu 16.04.6 LTS 4.4.0-150-generic docker://18.9.5

worker02 NotReady <none> 21h v1.14.3 192.168.100.117 <none> Ubuntu 16.04.6 LTS 4.4.0-150-generic docker://18.9.5

~ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-6657c9ffc-6q2pt 1/1 Running 1 20h 10.244.0.11 worker01 <none> <none>

nginx-6657c9ffc-msd6t 1/1 Running 0 20h 10.244.1.17 worker02 <none> <none>查看节点详情,发现节点已经被打上node.kubernetes.io/unreachable:NoExecute和node.kubernetes.io/unreachable:NoSchedule两个污点,conditions也随之变化

~ kubectl describe node worker02

Name: worker02

Roles: <none>

Labels: beta.kubernetes.io/arch=amd64

...

Annotations: flannel.alpha.coreos.com/backend-data: {"VtepMAC":"4e:0a:b4:88:0d:83"}

...

CreationTimestamp: Sat, 08 Jun 2019 12:42:00 +0800

Taints: node.kubernetes.io/unreachable:NoExecute

node.kubernetes.io/unreachable:NoSchedule

Unschedulable: false

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

---- ------ ----------------- ------------------ ------ -------

MemoryPressure Unknown Sun, 09 Jun 2019 10:03:52 +0800 Sun, 09 Jun 2019 10:04:52 +0800 NodeStatusUnknown Kubelet stopped posting node status.

DiskPressure Unknown Sun, 09 Jun 2019 10:03:52 +0800 Sun, 09 Jun 2019 10:04:52 +0800 NodeStatusUnknown Kubelet stopped posting node status.

PIDPressure Unknown Sun, 09 Jun 2019 10:03:52 +0800 Sun, 09 Jun 2019 10:04:52 +0800 NodeStatusUnknown Kubelet stopped posting node status.

Ready Unknown Sun, 09 Jun 2019 10:03:52 +0800 Sun, 09 Jun 2019 10:04:52 +0800 NodeStatusUnknown Kubelet stopped posting node status.再次查看pod,发现已经被调度到其他节点

~ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-6657c9ffc-6q2pt 1/1 Running 1 20h 10.244.0.11 worker01 <none> <none>

nginx-6657c9ffc-msd6t 1/1 Terminating 0 20h 10.244.1.17 worker02 <none> <none>

nginx-6657c9ffc-n9s4p 1/1 Running 0 71s 10.244.0.14 worker01 <none> <none>查看正在terminating的pod状态:

~ kubectl describe pod/nginx-6657c9ffc-msd6t

Name: nginx-6657c9ffc-msd6t

Node: worker02/192.168.100.117

Status: Terminating (lasts 2m5s)

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events: <none>发现两个污点容忍tolerations选项,解释了为什么节点处于NotReady后,pod还是running状态一段时间后被terminate重建

更多细节,please refer https://kubernetes.io/docs/concepts/architecture/nodes/#node-controller

移除节点

为了实验效果,先把pod扩容到10个

~ kubectl scale --replicas=10 deployment/nginx

deployment.extensions/nginx scaled

~ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-6657c9ffc-6lfbp 1/1 Running 0 8s 10.244.1.20 worker02 <none> <none>

nginx-6657c9ffc-6q2pt 1/1 Running 1 20h 10.244.0.11 worker01 <none> <none>

nginx-6657c9ffc-8cbpl 1/1 Running 0 8s 10.244.1.23 worker02 <none> <none>

nginx-6657c9ffc-dbr7c 1/1 Running 0 8s 10.244.1.22 worker02 <none> <none>

nginx-6657c9ffc-kk84d 1/1 Running 0 8s 10.244.1.21 worker02 <none> <none>

nginx-6657c9ffc-kp64c 1/1 Running 0 8s 10.244.0.16 worker01 <none> <none>

nginx-6657c9ffc-n9s4p 1/1 Running 0 40m 10.244.0.14 worker01 <none> <none>

nginx-6657c9ffc-pxbs2 1/1 Running 0 8s 10.244.1.19 worker02 <none> <none>

nginx-6657c9ffc-sb85v 1/1 Running 0 8s 10.244.1.18 worker02 <none> <none>

nginx-6657c9ffc-sxb25 1/1 Running 0 8s 10.244.0.15 worker01 <none> <none>使用drain放干节点,然后删除节点

~ kubectl drain worker02 --force --grace-period=900 --ignore-daemonsets

node/worker02 already cordoned

WARNING: ignoring DaemonSet-managed Pods: kube-system/kube-flannel-ds-amd64-crq7k, kube-system/kube-proxy-x9h7m

evicting pod "nginx-6657c9ffc-sb85v"

evicting pod "nginx-6657c9ffc-dbr7c"

evicting pod "nginx-6657c9ffc-6lfbp"

evicting pod "nginx-6657c9ffc-8cbpl"

evicting pod "nginx-6657c9ffc-kk84d"

evicting pod "nginx-6657c9ffc-pxbs2"

pod/nginx-6657c9ffc-kk84d evicted

pod/nginx-6657c9ffc-dbr7c evicted

pod/nginx-6657c9ffc-8cbpl evicted

pod/nginx-6657c9ffc-pxbs2 evicted

pod/nginx-6657c9ffc-sb85v evicted

pod/nginx-6657c9ffc-6lfbp evicted

node/worker02 evicted

# 执行完后发现所有pod已经运行在worker01上了

~ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-6657c9ffc-45svt 1/1 Running 0 63s 10.244.0.18 worker01 <none> <none>

nginx-6657c9ffc-489zz 1/1 Running 0 63s 10.244.0.21 worker01 <none> <none>

nginx-6657c9ffc-49vqv 1/1 Running 0 63s 10.244.0.20 worker01 <none> <none>

nginx-6657c9ffc-6q2pt 1/1 Running 1 20h 10.244.0.11 worker01 <none> <none>

nginx-6657c9ffc-fq7gg 1/1 Running 0 63s 10.244.0.22 worker01 <none> <none>

nginx-6657c9ffc-kl6j9 1/1 Running 0 63s 10.244.0.19 worker01 <none> <none>

nginx-6657c9ffc-kp64c 1/1 Running 0 4m31s 10.244.0.16 worker01 <none> <none>

nginx-6657c9ffc-n9s4p 1/1 Running 0 44m 10.244.0.14 worker01 <none> <none>

nginx-6657c9ffc-ssmph 1/1 Running 0 63s 10.244.0.17 worker01 <none> <none>

nginx-6657c9ffc-sxb25 1/1 Running 0 4m31s 10.244.0.15 worker01 <none> <none>

# 删除节点

~ kubectl delete node worker02

node "worker02" deleted

~ kubectl get node

NAME STATUS ROLES AGE VERSION

worker01 Ready master 22h v1.14.3更多详情 please refer https://kubernetes.io/docs/concepts/workloads/pods/disruptions/

加入节点

使用kubeadm初始化master节点后,会有一段提示,说明如何加入新节点,那个务必要保存好

接下来我们将刚刚被下线的worker02重置,然后重新加入集群

~ kubeadm reset

[reset] WARNING: Changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.

[reset] Are you sure you want to proceed? [y/N]: y

[preflight] Running pre-flight checks

W0609 11:00:23.206928 7376 reset.go:234] [reset] No kubeadm config, using etcd pod spec to get data directory

[reset] No etcd config found. Assuming external etcd

[reset] Please manually reset etcd to prevent further issues

[reset] Stopping the kubelet service

[reset] unmounting mounted directories in "/var/lib/kubelet"

[reset] Deleting contents of stateful directories: [/var/lib/kubelet /etc/cni/net.d /var/lib/dockershim /var/run/kubernetes]

[reset] Deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

The reset process does not reset or clean up iptables rules or IPVS tables.

If you wish to reset iptables, you must do so manually.

For example:

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

to reset your system's IPVS tables.

~ iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X执行kubeadm join

~ kubeadm join 192.168.101.113:6443 --token ss6flg.csw4u0ok134n2fy1 \

--discovery-token-ca-cert-hash sha256:bac9a150228342b7cdedf39124ef2108653db1f083e9f547d251e08f03c41945

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.14" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

查看节点,发现已经添加成功

~ kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

worker01 Ready master 23h v1.14.3 192.168.101.113 <none> Ubuntu 16.04.6 LTS 4.4.0-150-generic docker://18.9.5

worker02 Ready <none> 33s v1.14.3 192.168.100.117 <none> Ubuntu 16.04.6 LTS 4.4.0-150-generic docker://18.9.5