By Alibaba Cloud Senior Technical Expert Lin Xiaobin

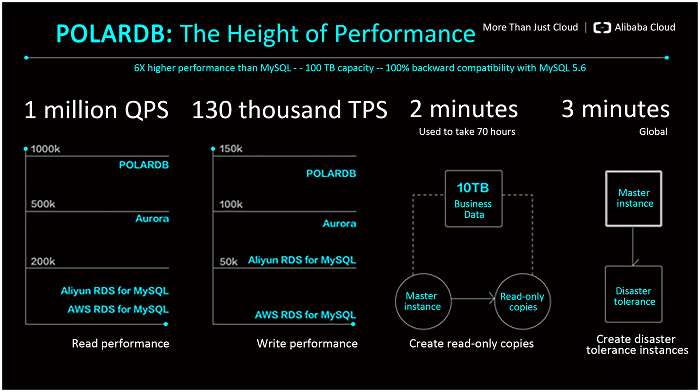

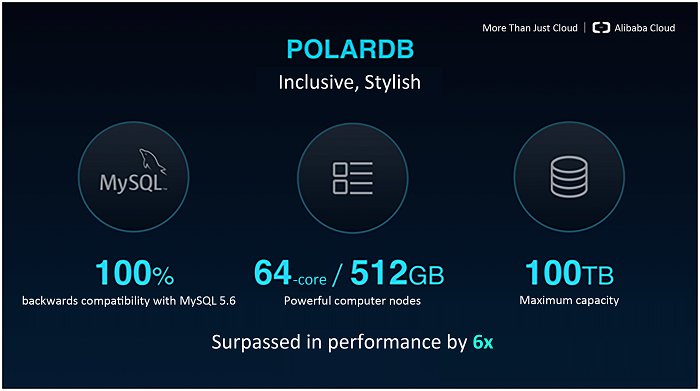

PolarDB is a commercial relational database developed by the Alibaba Cloud database team on a third-generation cloud computing architecture. It is 100% compatible with MySQL 5.6 and can scale a single database beyond 100 TB. Users can scale the compute engine and storage capacity in a matter of seconds. PolarDB offers a 6x performance improvement over MySQL 5.6 at a significantly lower cost than other commercial databases.

Advantages of the PolarDB Framework

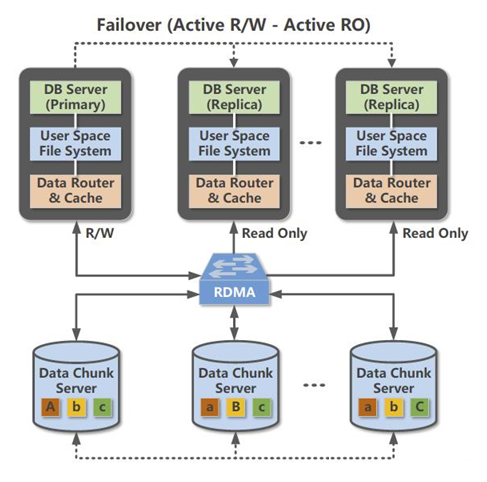

First, the third-generation distributed shared storage architecture divides PolarDB into compute nodes (servers that mainly perform SQL parsing and storage engine computation) and storage nodes (servers that mainly store data blocks and database snapshots). This lets users scale services and perform O&M quickly.

As we are all aware, the time taken to perform operations like expanding capacity, performing backups, and executing migrations for a traditional database is directly proportional to the capacity of the database in question. For a database with terabytes of data, simply adding a read-only backup can take one or even two days. On PolarDB, however, capacity can be expanded seamlessly, no matter how much data you have to work with. Creating a read-only copy only takes up to 2 minutes, while a full backup can be created in under 1 minute. This provides businesses with the ability to quickly and flexibly expand their services.

Furthermore, unlike traditional cloud databases, where each instance keeps a separate copy of the data, every node in a PolarDB cluster (read/write and read-only nodes alike) accesses the same data on the storage nodes. This reduces the response time of operations such as backups to a matter of seconds, independent of the amount of underlying data.

Finally, with the help of the excellent RDMA network and the newest block storage technology, PolarDB is able to keep providing services even after a server crash and without having to restart the machine. This satisfies the high database availability requirements of businesses operating in an internet environment.

Performance Optimizations of the PolarDB Storage Engine

Continuously exploiting new hardware

It is common knowledge that a relational database is an IO intensive application, so increasing IO performance is crucial to increasing the performance of the database. Over the past ten years, in the field of databases, the replacement of hard drives with solid state drives has produced a considerable increase in data processing throughput.

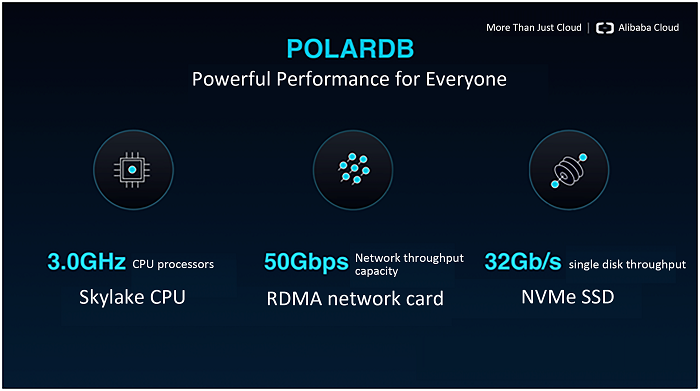

PolarDB uses leading hardware technology, including Optane storage cards built on 3D XPoint media, NVMe SSDs, and RoCE RDMA networks. It also co-optimizes software and hardware for this hardware architecture. From the database to the file system, network communication protocols, the distributed storage system, and device drivers, PolarDB deeply optimizes the entire I/O path across every layer of the software stack.

To combine the high performance of 3D XPoint media with the low cost of 3D NAND media, PolarDB combines high-speed Optane cards and high-throughput, high-capacity NVMe SSDs in software to form a hybrid storage layer. This ensures low write latency, high throughput, and high QoS for the database while keeping the cost-to-performance ratio of the whole system low.

Bypassing the kernel to squeeze out more hardware performance

In pursuit of higher performance and lower latency, the Alibaba Cloud database team boldly abandoned facilities provided by the Linux kernel, such as block devices, file systems like ext4, the TCP/IP stack, and the associated programming interfaces, and built their own replacements instead. The result is a complete I/O and network stack that runs in user mode.

The PolarDB user-mode protocol stack solves the problem of the slow kernel I/O stack. Programs in user mode operate the hardware directly through DMA. They poll for completed I/O requests instead of waiting for interrupts, which eliminates the overhead of context switches and interrupts. They can also map I/O processing threads to CPUs one-to-one: each I/O thread has a CPU to itself, handles its own I/O requests, and is bound to its own I/O device queue. Each I/O request is handled on a single thread and a single CPU from beginning to end, so no mutual-exclusion locking is needed. This gets the most out of high-speed devices, allowing a single CPU to process up to 200,000 I/O operations per second while scaling linearly with the number of threads. Four CPUs can therefore reach up to 800,000 I/O operations per second, which is significantly better than the kernel in both performance and cost efficiency.
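The thread-per-CPU model described above can be illustrated with a small sketch. This is not PolarDB code: the function names and the modulo routing rule are assumptions made for illustration, and only the 200,000 operations per second figure comes from the text.

```python
from collections import defaultdict

IOPS_PER_CPU = 200_000  # per-CPU figure quoted in the article


def route_to_queue(request_id: int, num_queues: int) -> int:
    """Pin a request to one queue (one thread, one CPU) for its whole life.

    The modulo rule is a hypothetical routing choice.
    """
    return request_id % num_queues


def run_to_completion(request_ids, num_queues):
    """Group requests by owning queue; each queue is drained by one worker only."""
    queues = defaultdict(list)
    for rid in request_ids:
        queues[route_to_queue(rid, num_queues)].append(rid)
    # A worker touches only its own queue, so no lock is shared between workers.
    return {q: [f"done:{rid}" for rid in rids] for q, rids in queues.items()}


def aggregate_iops(num_cpus: int) -> int:
    """Linear scaling as stated in the text: throughput grows with CPU count."""
    return num_cpus * IOPS_PER_CPU
```

Because no request ever migrates between queues, the workers share no mutable state, which is what makes the linear scaling claim plausible in this simplified picture.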

Networks are similar. In a traditional Ethernet network, one network card sends a message to another machine through a switch in the middle, and the whole process takes around 100-200 μs. PolarDB supports RoCE Ethernet networks, where an application uses RDMA to write the contents of its memory directly to a memory address on the target machine, or to read data from the target machine's memory directly into its own. Along the way, protocol encoding and decoding and the retransmission mechanism are both handled by the RDMA card, with no involvement from the CPU. This improves performance significantly: sending a 4 KB message from one machine to another takes only 6-7 μs. Just as the kernel's I/O stack cannot keep up with high-speed storage devices, its TCP/IP stack cannot keep up with high-speed network devices, so it too is replaced by the PolarDB user-mode network stack.

Multiple database copies achieved by hardware DMA and physical copies

Everyone knows ACID, the acronym for the defining properties of relational database transactions: Atomicity, Consistency, Isolation, and Durability.

PolarDB improves database replication with a two-pronged approach. On the one hand, it guarantees the D (Durability) in ACID by chaining the network and storage software together through DMA, using a hardware path to persist logs from the leader database efficiently to the disks of three storage nodes. On the other hand, it provides highly efficient read-only nodes: data is synchronized from the leader database using physical copies, which update data directly in the memory of the read-only node. How is this accomplished?

PolarDB makes logs durable by writing them to the disks of three storage nodes. The leader database uses RDMA to send log data to the memory of one storage node, which in turn uses RDMA to replicate it to the other storage nodes. Each storage node uses SPDK to write the data to NVMe storage media. Throughout the process the CPU never needs to touch the payload, so the data path is zero-copy.

At the same time, data transmission and persistence are completed between the RDMA network card and the NVMe controller. The CPU is only responsible for maintaining the state machine and for tying the network card and storage card together through the consensus protocol. Synchronization between storage nodes uses the Parallel Raft protocol. As in Raft, decisions are generated and committed serially on the leader node. In Parallel Raft, however, synchronization to followers is allowed to happen out of order. Put simply, when a follower is missing several log entries, it may first commit and apply later entries and then fill in the missing ones asynchronously. Performance and stability during data synchronization are both consistent with the Raft protocol.
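The out-of-order behavior described above can be pictured with a toy follower that accepts log entries with holes and backfills them later. This is only a sketch: the class and method names are hypothetical, and it deliberately ignores the conflict checks a real protocol would need before applying an entry past a hole.

```python
class Follower:
    """Toy follower that tolerates holes in its log (no conflict checking)."""

    def __init__(self):
        self.log = {}      # log index -> entry
        self.applied = []  # indexes in the order they were applied

    def receive(self, index, entry):
        """Accept an entry in any order; duplicates are ignored."""
        if index in self.log:
            return
        self.log[index] = entry
        self.applied.append(index)  # applied immediately, even past a hole

    def missing(self):
        """Indexes below the highest received one that have not arrived yet."""
        if not self.log:
            return []
        return [i for i in range(1, max(self.log) + 1) if i not in self.log]
```

For example, after receiving entries 1 and 3 the follower reports entry 2 as missing, and the hole closes once entry 2 arrives asynchronously.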

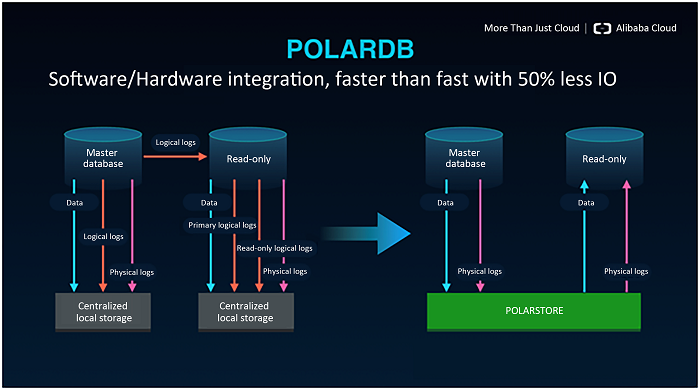

PolarDB abandons binlog-based logical copying for the data flow between the leader database and read-only instances. Instead, it uses physical copying based on the InnoDB redo log, which noticeably improves performance on both the leader database and its followers.

On the leader database, the original engine has to run an XA transaction together with binlog, and a transaction can only return after both binlog and the redo log have been written to disk. Once binlog is removed, the XA transaction can be dropped as well, which greatly shortens a transaction's execution path and reduces I/O overhead. On the follower database, because the redo log is a physical log, each record only needs to be compared against the LSN of its page to decide whether to replay it. Replay can naturally run on multiple threads, and it helps to improve the accuracy of the data. Furthermore, after PolarDB receives the redo log, all it needs to do is refresh the page in memory, with no disk I/O involved. This reduces overhead significantly compared to follower databases in traditional multi-copy systems.
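The LSN comparison mentioned above can be sketched as follows. The `Page` and `RedoRecord` types are hypothetical simplifications: a real redo record describes a change to part of a page rather than carrying a whole new page image.

```python
from dataclasses import dataclass


@dataclass
class Page:
    page_id: int
    lsn: int      # LSN of the last change already reflected in this page
    data: bytes


@dataclass
class RedoRecord:
    page_id: int
    lsn: int
    new_data: bytes  # simplification: the full new content of the page


def apply_redo(page: Page, rec: RedoRecord) -> bool:
    """Replay the record only if the in-memory page has not seen it yet."""
    if rec.page_id != page.page_id:
        raise ValueError("record targets a different page")
    if rec.lsn <= page.lsn:
        return False  # page is already at or past this change, skip it
    page.data = rec.new_data
    page.lsn = rec.lsn
    return True
```

Since the decision depends only on one page and one record, records for different pages can be replayed independently, which is why replay lends itself to multiple threads.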

Smart Storage aimed at accelerating the database

PolarDB’s storage nodes make targeted optimizations aimed at improving the IO workload of the database.

IO priority optimization: PolarDB opens a fast path for redo log files in both the file system and the storage node layer, shortening their queues and raising their I/O priority. Redo log writes are also realigned from 512 bytes to 4 KB, which improves SSD performance.

Double write optimization: PolarDB storage nodes support 1 MB atomic writes, so double write can safely be turned off, reducing I/O overhead by nearly half.

Group commit optimization: In PolarDB, a single group commit can write several hundred kilobytes in one I/O. On an SSD, latency grows linearly with I/O size, so PolarDB makes a number of optimizations from the file system down to the storage nodes to keep this type of I/O running smoothly. It stripes redo log files, cutting an I/O of several hundred kilobytes into a batch of smaller I/Os of only 16 KB each. These are sent separately to multiple storage nodes and different disks, further reducing latency on the critical I/O path.
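The striping step in the group commit optimization can be sketched like this. Only the 16 KB stripe size comes from the text. The round-robin placement of stripes on nodes and the function name are assumptions for illustration.

```python
STRIPE_SIZE = 16 * 1024  # 16 KB stripes, as stated in the text


def stripe(offset, length, num_nodes, stripe_size=STRIPE_SIZE):
    """Split the byte range [offset, offset + length) at stripe boundaries.

    Returns a list of (node, piece_offset, piece_length) tuples. Stripes are
    placed on nodes round-robin, which is a hypothetical placement rule.
    """
    pieces = []
    end = offset + length
    while offset < end:
        stripe_index = offset // stripe_size
        stripe_end = (stripe_index + 1) * stripe_size
        piece_len = min(end, stripe_end) - offset
        pieces.append((stripe_index % num_nodes, offset, piece_len))
        offset += piece_len
    return pieces
```

A 256 KB write that starts on a stripe boundary becomes sixteen 16 KB pieces spread over the nodes, so no single device has to absorb the whole write.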

Performance Optimizations for the PolarDB Compute Engine

Using shared storage physical copies

Because PolarDB uses shared storage and physical copies, restoring a database from backup relies entirely on the redo log, so binlog is no longer needed. This reduces the I/O cost of each transaction, which shortens statement response time and improves throughput. It also avoids the XA transaction logic that binlog requires, shortening the execution path of transactional statements.

Lock optimizations

PolarDB targets high-concurrency workloads with a series of optimizations to the engine's internal locks. For example, it breaks latches into finer-grained locks, or replaces locks with counting-based schemes to avoid lock contention, as with the undo segment mutex and the log system mutex. PolarDB also converts some hotspot data structures to lock-free structures, for example the MDL lock in the Server layer.

Log submission optimizations

The sequential write performance of the redo log has a large impact on database performance. To reduce the cost of switching redo log files, we use methods such as fallocate in the background to preallocate log files. In addition, many modern solid state drives are 4K aligned, while the MySQL code still writes logs using the 512-byte alignment of early hard drives. This causes the drive to perform unnecessary operations and holds back SSD performance, so we have optimized this as well. We have also optimized group commit for log submission and use a double redo log buffer to increase concurrency.
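The alignment issue above comes down to simple arithmetic, sketched here. The helper names are hypothetical. The point is that a 512-byte-aligned log write usually covers only part of a 4 KB block, while a write padded to 4 KB covers whole blocks.

```python
def align_up(n: int, alignment: int) -> int:
    """Round n up to the next multiple of alignment."""
    return (n + alignment - 1) // alignment * alignment


def covers_whole_blocks(offset: int, length: int, block_size: int) -> bool:
    """True if the write starts and ends exactly on block boundaries."""
    return offset % block_size == 0 and length % block_size == 0


def blocks_touched(offset: int, length: int, block_size: int) -> int:
    """Number of device blocks that the byte range overlaps."""
    first = offset // block_size
    last = (offset + length - 1) // block_size
    return last - first + 1
```

For instance, a 1,024-byte write at offset 3,584 straddles two 4 KB blocks without filling either one, whereas the same data padded with `align_up` and written at a 4 KB boundary covers exactly one block.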

Copy performance

Physical copy performance is crucial in PolarDB. We improved performance not only at the database page level but also in the steps needed to make copies. For example, we added a length field to MTR logs, which reduces CPU overhead during the log parse phase. This simple optimization cuts log parse time by 60%. We also reuse the in-memory data structure of the Dummy Index to reduce malloc/free overhead and improve performance further.
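The benefit of a length field can be seen in a small sketch. The 4-byte little-endian header used here is a hypothetical layout, not the actual MTR log format. With a length prefix, the parser can jump from record to record without decoding each record body to find where it ends.

```python
import struct

HEADER = struct.Struct("<I")  # hypothetical 4-byte little-endian length prefix


def encode(records):
    """Concatenate records, each preceded by its length."""
    return b"".join(HEADER.pack(len(r)) + r for r in records)


def split_records(buf):
    """Split a buffer into records by reading only the length prefixes."""
    out = []
    pos = 0
    while pos < len(buf):
        (length,) = HEADER.unpack_from(buf, pos)
        pos += HEADER.size
        out.append(buf[pos:pos + length])
        pos += length  # skip the body without interpreting it
    return out
```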

Read node performance

In PolarDB, logs on the Replica node are currently applied in batches. Until a new batch of logs is applied, a read request on the Replica does not need to create a new ReadView. It can reuse the previous one cached in memory. This optimization also improves Replica read performance.
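The ReadView reuse described above is essentially a cache that is invalidated whenever a batch of logs is applied. A minimal sketch, with hypothetical class and attribute names and a tuple standing in for the real ReadView structure:

```python
class Replica:
    """Toy replica that caches its read view between log batches."""

    def __init__(self):
        self.applied_batches = 0
        self.views_created = 0
        self._cached_view = None

    def apply_batch(self, records):
        """Apply one batch of redo records and invalidate the cached view."""
        self.applied_batches += 1
        self._cached_view = None

    def read_view(self):
        """Return the cached view, building a new one only after a new batch."""
        if self._cached_view is None:
            self.views_created += 1
            self._cached_view = ("view", self.applied_batches)
        return self._cached_view
```

Any number of reads between two batches share one view, so the cost of building a view is paid once per batch rather than once per read.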

Minimizing Costs with PolarDB

Storage resource pooling

PolarDB uses a structure that separates computing and storage. The database itself runs on computing nodes, which make up a computing resource pool, while data is all stored on the storage nodes which make up a storage resource pool. If there are not enough CPU and memory resources available, then the system can draw from the computing resource pool. If it lacks capacity or IOPS, then it can draw from the storage resource pool. Both pools expand or contract according to need. Furthermore, the computing and storage nodes can be split and optimized in two different directions. Storage nodes can tolerate low CPU and memory configurations and improved storage density, while computing nodes can be configured with low storage capacity, using only a small SSD to run the operating system, but adding more CPU cores.

The traditional database deployment model, on the other hand, is a chimney (siloed) model. A single host runs the database and also stores the data, which introduces two problems. First, it is difficult to choose the right machine type, because the ratio of CPU to disk depends on the demands of the service, which are hard to anticipate. Second, disk space becomes fragmented. In a production cluster there will always be some machines with low disk utilization, sometimes below 10%. Yet because the application needs stability, it has to occupy the CPU exclusively, so the SSD on such a machine is wasted. A storage resource pool solves both problems: SSD utilization improves, and costs naturally fall.

Transparent compression

Besides providing 1 MB atomic writes for ibd files, which eliminates double write overhead, PolarDB storage nodes support transparent compression of ibd file data blocks. The compression ratio is up to 2.4x, which helps reduce storage costs.

Unlike compression in traditional databases, storage nodes perform transparent compression without using the CPU of a compute node, so there is no impact on the performance of the database as a whole. They use QAT cards for acceleration and FPGAs to speed up the I/O path. PolarDB's storage nodes also support snapshot deduplication (dedup). If a page has not changed between two adjacent snapshots, both snapshots link to the same read-only page, so only one copy is stored physically.
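The snapshot dedup behavior can be sketched with a store in which each snapshot maps page IDs to slots in a list of physically stored pages. The class and its layout are hypothetical and only illustrate the sharing of unchanged pages between adjacent snapshots.

```python
class SnapshotStore:
    """Toy snapshot store that shares unchanged pages between snapshots."""

    def __init__(self):
        self.physical = []   # page images actually stored
        self.snapshots = []  # each snapshot: page_id -> index into physical

    def take_snapshot(self, pages):
        """pages maps page_id -> bytes; returns the new snapshot mapping."""
        prev = self.snapshots[-1] if self.snapshots else {}
        snap = {}
        for pid, content in pages.items():
            idx = prev.get(pid)
            if idx is not None and self.physical[idx] == content:
                snap[pid] = idx  # unchanged: link to the existing page
            else:
                self.physical.append(bytes(content))
                snap[pid] = len(self.physical) - 1
        self.snapshots.append(snap)
        return snap
```

With two pages and one of them modified between snapshots, the store holds three physical pages rather than four.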

0 storage cost read-only instances

When a traditional database adds a read-only instance in a one-writer, many-readers setup, it does so by creating a read-only copy. First, the most recent full backup is copied and used to restore a temporary instance. This instance then connects to the leader or another binlog source and synchronizes the missing data. Once the data is up to date, the instance is promoted to a read-only copy. This method is time consuming, because the time needed to create a read-only instance is proportional to the amount of data in the database. It also adds significant storage costs. For example, a user who purchases one instance and five read-only instances has to pay 7-8x the storage cost, depending on whether the leader keeps two or three copies.

Both of these problems are solved in the PolarDB architecture. On the one hand, creating a new read-only instance does not require copying data, so no matter how big the database is, the instance is ready in 2 minutes or less. On the other hand, the leader database shares storage with the read-only instances. Together, these two properties let us add a read-only instance with zero additional storage cost. The user only pays for the extra CPU and memory resources.
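The 7-8x figure and the zero-cost claim can be checked with simple arithmetic. The sketch below assumes, as one reading of the text, that a traditional leader keeps two or three copies of the data and that each read-only instance adds one more full copy. The function names are hypothetical.

```python
def traditional_storage_copies(leader_copies: int, read_only_instances: int) -> int:
    """Full data copies paid for when every read-only instance has its own copy."""
    return leader_copies + read_only_instances


def shared_storage_extra_copies(read_only_instances: int) -> int:
    """With shared storage, read-only instances reuse the leader's data."""
    return 0


# One leader plus five read-only instances, as in the example in the text.
low = traditional_storage_copies(leader_copies=2, read_only_instances=5)
high = traditional_storage_copies(leader_copies=3, read_only_instances=5)
```

Under these assumptions `low` and `high` come out to 7 and 8, matching the range quoted above, while the shared storage model adds no copies regardless of how many read-only instances are created.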

Alibaba Cloud Suite of Database Services

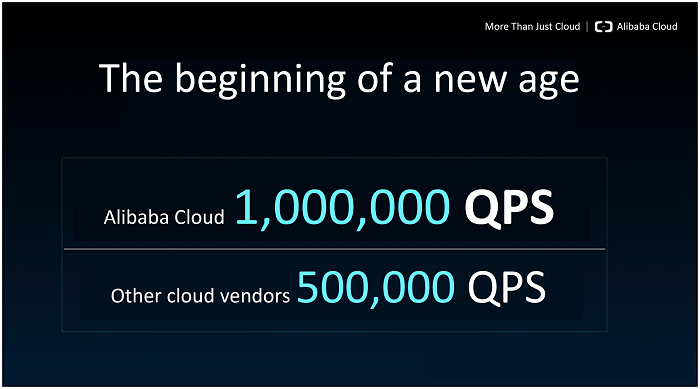

PolarDB is a prototype of the future all-in-one database, designed to meet the full variety of today's mixed-use requirements. In developing PolarDB, Alibaba Cloud has demonstrated its R&D strengths and taken the opportunity to design a database that integrates OLTP and OLAP, beginning a revolution in the IT infrastructure architectures that companies need as they go through digitization.

With Alibaba Cloud's cutting-edge research and technologies, it is no surprise that the ApsaraDB and HybridDB family of products is among the most popular and reliable options in the industry. Visit www.alibabacloud.com today to discover more innovative products by Alibaba Cloud!