Asynchronous Methods for Deep Reinforcement Learning

ICML 2016

深度强化学习最近被人发现貌似不太稳定,有人提出很多改善的方法,这些方法有很多共同的 idea:一个 online 的 agent 碰到的观察到的数据序列是非静态的,然后就是,online的 RL 更新是强烈相关的。通过将 agent 的数据存储在一个 experience replay 单元中,数据可以从不同的时间步骤上,批处理或者随机采样。这种方法可以降低 non-stationarity 然后去掉了更新的相关性,但是与此同时,也限制了该方法只能是 off-policy 的 RL 算法。

Experience replay 有如下几个缺点:

1. 每一次交互 都会耗费更多的内存和计算;

2. 需要 off-policy 的学习算法从更老的策略中产生的数据上进行更新。

本文中我们提出了一种很不同的流程来做 DRL。不用 experience replay,而是以异步的方式,并行执行多个 agent,在环境中的多个示例当中。这种平行结构可以将 agent的数据“去相关(decorrelates)”到一个更加静态的过程当中去,因为任何给定的时间步骤,并行的 agent 都会经历不同的状态。这个简单的idea 确保了一个更大的范围,基础的 on-policy RL 算法,如:Sarsa,n-step methods,以及 actor-critic 方法,以及 off-policy 算法,如:Q-learning,利用 CNN 可以更加鲁棒 和 有效的进行应用。

本文的并行 RL 结构也提供了一些实际的好处,前人的方法都是基于特定的硬件,如:GPUs 或者 大量分布式结构,本文的算法可以在单机上用多核 CPU 来执行。取得了比 之前基于 GPU 的算法 更好的效果, asynchronous advantage actor-critic(A3C)算法更是很牛的样子,接着往下看。

文章第3节讲 DQN 的背景知识,该部分透露出 Q-learning的几个问题:

one-step Q-learning 是朝着 one-step return 的方向去更新 action value Q(s, a)。但是利用 one-step 方法的缺陷在于:得到一个奖励 r 仅仅直接影响了 得到该奖励的状态动作对 (s, a) 的值(obtain a reward r only directly affects the value of the state action pairs s, a that led to the reward)。其他 state action pairs的值仅仅间接的通过更新 value Q(s, a) 来影响。这就使得学习过程缓慢,因为许多更新都需要传播一个 reward 给相关进行的 states 和 actions。

一种快速的传播奖赏的方法是利用 n-step returns。 在 n-step Q-learning 中,Q(s, a) 是朝向 n-step return 进行更新。这样就使得一个奖赏 r 直接地影响了 n 个正在进行的 state action pairs 的值。这也使得给相关 state-action pairs 的奖赏传播更加有效。

另外就是定义了“优势函数(advantage function A)”,即:利用 Q值 减去 状态值,定义为:

$A(a_t, s_t) = Q(a_t, s_t) - V(s_t)$;

这种方法也可以看做是 actor-critic 结构,其中,policy $\pi$ 可以看做是 actor,baseline $b_t$ 是 critic。

3. Reinforcement Learning Background

我们考虑标准的强化学习设定,一个 agent 通过几个离散的时间步骤来环境进行交互.在每个时间步骤 t,根据策略 $\pi$, 我们的 agent 收到一个状态 $S_t$,并且从可能的动作集合中选择一个 action $a_t$.其中,策略可以看做是状态到动作的一个映射.作为回报,agent 收到下一个状态 $S_{t+1}$,收到一个可变的奖赏 $r_t$.重复这个过程直到 agent 达到一个停止状态.The Return $R_t$ 是总的累计的奖赏.agent 的目标就是在每一个状态下都最大化期望的 return.动作值 $Q^{\pi}(s, a)$ 是遵循策略,在状态s下,选择动作a所带来的期望 return.最优值函数 $Q^*(s, a) = max_{\pi} Q^{\pi}(s, a)$ 给出了最大动作值.类似的,在策略 $\pi$下,状态 s 的值定义为:$V^{\pi}(s) = E [R_t|s_t = s] $ 是在状态 s 下采取策略 $\pi$ 后得到的简单的期望 return.

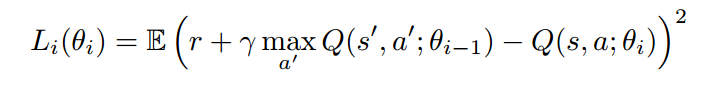

在基于值的 model-free RL 方法中,动作值函数 (action value function) 用一个函数近似表示,例如:神经网络.用 $Q(s, a; \theta)$ 是一个近似的带有参数 $\theta$ 的动作值函数.对参数的更新可以从很多RL算法中得到.有一个称为 Q-Learning 的算法,目标就是直接估计最优动作值函数:$Q^*(s, a) ≈ Q(s, a; \theta)$. 在 one-step Q-learning 中,动作值函数 $Q(s, a; \theta)$ 的参数可以通过迭代的最小化一个序列的损失函数来学习到,第 i 个损失函数的定义为:

其中,s^' 是s转移之后的状态.

我们将上述方法称为 one-step Q-learning,因为其更新了动作值 Q(s, a) 朝向一步 return $r+\gammamax_{a^'}Q(s', a';\theta)$. 这种方法的一个缺点是:得到一个奖赏 r 仅仅直接影响导致这个奖赏的状态动作值对 (the state action pair) s, a.这就使得学习过程非常缓慢,因为需要执行许多更新以将奖赏传递到相关的状态和动作上.有一个方法可以快速的进行传递奖赏,即:采用 n-step returns. 在这个 n-step Q-learning 过程中,Q(s, a) 是朝向 n-step return 定义为:$ r_t + \gamma r_{t+1} + ... + \gamma^{n-1} r_{t+n-1} + max_a \gamma^n Q(s_{t+n}, a)$.这个结果在一次奖赏 r 直接影响了 n 个后续状态动作对(n proceding state action pairs).这就使得传递奖赏的过程变得非常有效.

对比 value-based methods, policy-based model-free methods 直接参数化策略 $\pi (a|s; \theta)$ and 通过执行 $E[R_t]$ 梯度下降来更新参数.

值函数学习到的估计通常用作 baseline $b_t(s_t) ≈ V^{\pi} (s_t)$ 得到了一个较低的策略梯度的方差估计.当估计的值函数被当做 baseline时,$R_t - b_t$ 用来 scale the policy gradient 可以看做是在状态 $s_t$ 下 action $a_t$ 的优势(advantage)的估计,或者说是:$A(a_t, s_t) = Q(a_t, s_t) - V(s_t)$,因为 $R_t$ 是 $Q^{\pi} (a_t, s_t)$ and $b_t$ 是 $V^{\pi}(s_t)$ 的预测.这个方法可以看做是 an actor-critic architecture, where the policy $\pi$ is the actor and the baseline $b_t$ is the critic.

Asynchronous RL Framework

本文提出了多线程的各种算法的异步变种,即:one-step Sarsa,one-step Q-learning,n-step Q-leanring 以及 advantage actor-critic。设计这些算法的目的是找到 RL 算法可以训练深度神经网络策略 而不用花费太多的计算资源。但是 RL 算法又不相同,actor-critic 算法是 on-policy 策略搜索算法,但是 Q-learning 是 off-policy value-based 方法,我们利用两种主要的 idea 来实现四种算法以达到我们的目标。

首先,我们利用异步 actor-learners,利用单机的多CPU线程,将这些 learner 保持在一个机器上,就省去了 多个learner之间通信的花销,使得可以利用 Hogwild! 的方式更新而完成训练。

第二,我们观察到 multiple actors-learners 并行的运行更可能去探索环境的不同部分。

此外, 我们还可以显示的利用每一个 actor-learner 不同的探索策略来最大化这个多样性。通过在不同的线程中不同的探索策略,并行 online 的改变参数,更可能在时间上不相关,相比较 单个 agent 采用 online 的更新方式。所以,我们不采用 经验回放,二是依赖于 parallel actors 采用不同的探索策略来执行 DQN 中 experience replay 的角色。

除了使得学习过程更加稳定,利用多个并行的 actor-learner 有多个优势:

1. 我们获得了训练时间的大幅度降低,因为时间大致和 并行的 actor-learners 的个数呈线性关系。

2. 因为我们不在依赖于 experience replay 来稳定学习,我们可以利用 on-policy reinforcement learning methods 像:Sarsa, actor-critic 来训练神经网络。

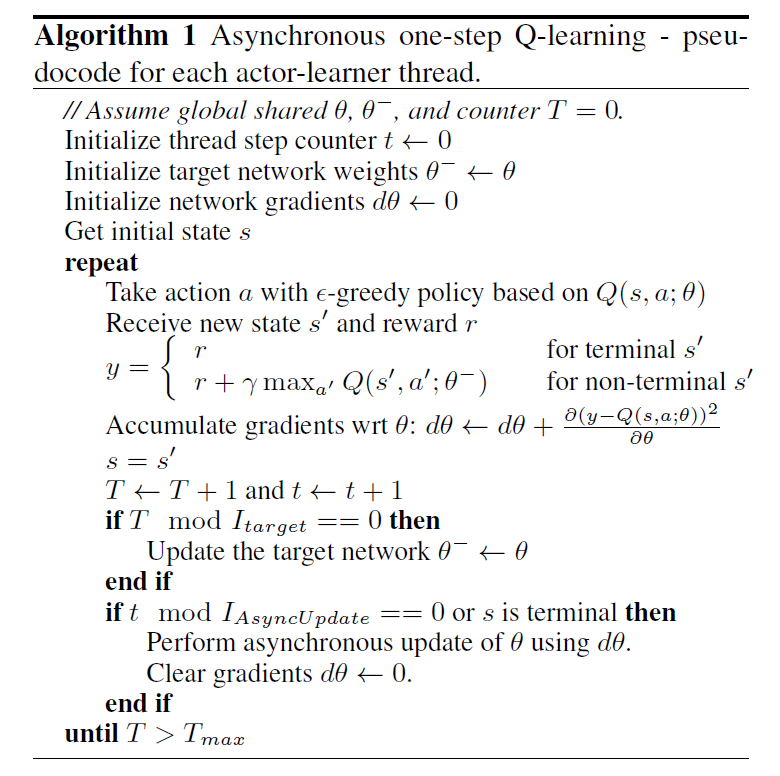

Asynchronous one-step Q-learning :

Each thread interacts with its own copy of the envionment and at each step computes a gradient of the Q-learning loss.

每一个线程有他自己独自 copy 过来的交互环境,每一个时间步骤计算 Q-learning loss 的一个梯度。

We use a shared and slowly changing target network in computing the Q-learning loss, as was proposed in the DQN training method.

我们利用 DQN当中提出来的 target network 计算 Q-learning loss 。

We also accumultate gradients over multiple timesteps before they are applied, which is similar to using minibatches.

我们也累积多个时间步骤的梯度,像 minibatches。

They reduces the chances of multiple actor learners overwriting each other's updates.

这降低了每个 actor learner 覆盖相互更新的概率。

Accumulating updates over several steps also provides some ability to trade off computional efficiency for data efficiency.

多个时间步骤的累积更新,也提供了计算效率的平衡。

Finally, we found that giving each thread a different exploration policy helps improve robustness.

最终,我们发现给定每一个线程,不同的探索策略,可以帮助改进鲁棒性。

虽然有很多探索策略,我们采用 $\epsilon-greedy$ exploitation policy 。

Asynchronous n-step Q-learning :

这种算法看起来并不是非常的 “常规” ,因为它在前向角度操作时,通过显示的计算 n-step returns,和更常见的 后向角度相反。

==>> The algorithm is somewhat unusual because it operates in the forward view by explicitly computing n-step returns, as opposed to the more common backward view used by techniques like eligibility traces.

我们发现当利用基于动量的方法(momentum-based methods)和后向传播(BP)训练神经网络的时候,利用前向视角更加简单。 In order to compute a single update, the algorithm first selects actions using its exploration policy for up to $t_max$ steps or until a terminal state is reached. This process results in the agent receiving up to $t_max$ rewards from the environment since its last update. The algorithm then computes gradients for n-step Q-learning updates for each of the state-action pairs encountered since the last update.

另外,也可以参加博文: https://blog.acolyer.org/2016/10/10/asynchronous-methods-for-deep-reinforcement-learning/

Asynchronous methods for deep reinforcement learning Mnih et al. ICML 2016

You know something interesting is going on when you see a scalability plot that looks like this:

That’s a superlinear speedup as we increase the number of threads, giving a 24x performance improvement with 16 threads as compared to a single thread. The result comes from the Google DeepMind team’s research onasynchronous methods for deep reinforcement learning. In fact, of the four asynchronous algorithms that Mnih et al experimented with, the “asynchronous 1-step Q-learning” algorithm whose scalability results are plotted above is not the best overall. That honour goes to “A3C”, the Asynchronous Advantage Actor-Critic, which exhibits regular slightly sub-linear scaling as you add threads. How come it’s the best then? Because itsabsolute performance, as measured by how long it takes to achieve a given reference score when learning to play Atari games, is the best.

DeepMind’s DQN sytem is a Deep-Q-Network reinforcement learning system that learned to play Atari games. DQN relied heavily on GPUs. A3C beats DQN easily, using just CPUs:

When applied to a variety of Atari 2600 domains, on many games asynchronous reinforcement learning achieves better results, in far less time than previous GPU-based algorithms, using far less resource than massively distributed approaches.

Here you can see a comparison of the learning speed of the asynchronous algorithms vs DQN, with DQN trained on a single GPU, and the asynchronous algorithms trained using 16 CPU cores on a single machine.

(Click for larger view)

And when it comes to overall performance levels achieved, look how well A3C does compared to many other state of the art systems, despite significantly reduced training times.

Let’s take a step back and explore what’s going on here.

Asynchronous learning

We’re talking about reinforcement learning systems, and in particular for the experiments conducted in this paper, reinforcement learning systems used to learn how to play Atari games (57 of them), drive a car in the TORCS car racing simulator:

Whereas those methods are variations on a theme of ‘do the same thing (or a very close approximation to the same thing), but in parallel’, the asynchronous methods here exploit the parallel nature of multiple threads to enable a different approach altogether. DQN and other deep reinforcement learning algorithms use experience replay, capturing an agent’s data which can subsequently be batched and/or sampled over different time-steps.

Deep RL algorithms based on experience replay have achieved unprecedented success in challenging domains such as Atari 2600. However, experience replay has several drawbacks: it uses more memory and computation per real interaction; and it requires off-policy learning algorithms that can update from data generated by an older policy.

Instead of experience replay, one of the key insights in this paper is that you can achieve many of the same objectives of experience replay by playing many instances of the game in parallel.

… we make the observation that multiple actor-learners running in parallel are likely to be exploring different parts of the environment. Moreover, one can explicitly use different exploration policies in each actor-learner to maximize this diversity. By running different exploration policies in different threads, the overall changes being made to the parameters by multiple actor-learners applying online updates in parallel are likely to be less correlated in time than a single agent applying online updates. Hence, we do not use a replay memory and rely on parallel actors employing different exploration policies to perform the stabilizing role undertaken by experience replay in the DQN training algorithm.

This explains the superlinear speed-up in training time required to reach a given level of skill: the more games are being explored in parallel, the better the training input to the network.

I really like this idea that the very nature of doing things in parallel opens up the possibility to use a fundamentally different approach. I don’t think that insight would naturally occur to me, and it makes me wonder if there are other scenarios where it might also apply. A

The algorithms

In reinforcement learning an agent interacts with an environment by taking actions and receiving a reward. At each time step the agent receives the state of the world and a reward score from the previous time step, and selects an action from some universe of possible actions. An action value function, typically represented as Q determines the expected reward for choosing a given action in a given state when following some policy π. There are two broad approaches to learning: value-based and policy-based.

In value-based model-free reinforcement learning methods the action value function is represented using a function approximation, such as a neural network…. In contrast to value-based methods, policy-based model-free methods directly parameterize the policy π(a|s;θ) and update the parameters θ by performing, typically approximate, gradient descent.

(a represents an action, s the state).

Because the parallel approach no longer relies on experience replay, it becomes possible to use ‘on-policy’ reinforcement learning methods such as Sarsa and actor-critic. The authors create asynchronous variants of one-step Q-learning, one-step Sarsa, n-step Q-learning, and advantage actor-critic. Since the asynchronous advantage actor-critic (A3C) algorithm appears to dominate all the others, I’ll just concentrate on that one.

A3C uses a ‘forward-view’ and n-step updates. Forward view means that the algorithm selects actions using its exploration policy for up to tmaxsteps in the future. The agent will then receive up to tmax rewards from the environment since its last update. The policy and value functions are then updated for each state-action pair and associated reward over the tmaxsteps. For each update, the algorithm use “the longest possible n-step return.” In other words, the update includes all steps up to and including the step we are currently performing the update for: a 2-step update for the second state-action, reward pair, a 3-step update for the third , and so on.

Here’s the pseudo-code for the algorithm, taken from the supplementary materials:

(V is a function that determines the value of some state s under policy π.)

We typically use a convolutional neural network that has one softmax output for the policy π(at|st;θ) and one linear output for the value function _V(st;θv), with all non-output layers shared.

Experiments

If you watch some of the videos I linked earlier you can see how well A3C learns to perform a variety of tasks. Playing Atari games has been well covered before. The TORCS car racing simulator is more challenging:

TORCS not only has more realistic graphics than Atari 2600 games, but also requires the agent to learn the dynamics of the car it is controlling…. A3C reached between roughly 75% and 90% of the score obtained by a human tester on all four game configurations in about 12 hours of training.

The Mujoco physics engine simulations required a reinforcement learning approach adapted to continuous actions, which A3C was able to do. It was tested on a number of manipulation and locomotion tasks, and found good solutions in less than 24 hours, and often just a few hours.

The final experiments used A3C on a new 3D maze environment called Labyrinth:

This task is much more challenging than the TORCS driving domain because the agent is faced with a new maze in each episode and must learn a general strategy for exploring random mazes… The final average score indicates that the agent learned a reasonable strategy for exploring random 3D mazes using only a visual input.

Closing thoughts

We’ve seen a number of papers showing how various machine learning tasks can be made more efficient in terms of elapsed training time by exploiting asynchronous parallel workers, as well as more efficient algorithms. There’s another kind of efficiency that’s equally important though: data efficiency, a concept that was much discussed at the recent London Deep Learning Summit. Data efficiency refers to the amount of data that an algorithm needs to achieve a given level of performance. Breakthroughs in data efficiency could have an even bigger impact than breakthroughs in computational efficiency.

And on the topic of computers learning to play games, since Go has now fallen, when will we see a reinforcement learning system beat the (human) champions in esports games too? That would make a great theatre for a battle.